If you've been building with OpenAI's tools, you know the drill: just when you get comfortable, something new and powerful comes along. This time, it's the new OpenAI Responses API. It looks like OpenAI is trying to bring together the best parts of the older Chat Completions and Assistants APIs into a single, more powerful way to build AI agents.

But what does that actually mean for you as a developer? Well, it’s a pretty big deal. This change affects everything from how you manage conversation history to the tools you can give your AI.

In this guide, we'll walk through what the OpenAI Responses API is, its key features, how it compares to the APIs you might already be using, and some of the real-world headaches you might run into building with it. Because while it's incredibly powerful, building directly on the API isn't always the fastest route, and it helps to know what you're getting into.

What is the OpenAI Responses API?

Simply put, the OpenAI Responses API is the new, more advanced way to get responses from their models. Think of it as the upgraded engine for building apps that need to do more than just answer one-off questions.

The big idea that sets it apart is "statefulness." The older, and very popular, Chat Completions API is stateless. Every time you send a request, you have to include the entire conversation history from the beginning. It's like talking to someone with no short-term memory; you have to constantly remind them of what you just said. It works, but it can get inefficient and expensive with long conversations.

The Responses API changes that. It can be stateful, which means OpenAI can manage the conversation history for you. Instead of resending the whole chat log, you pass the "previous_response_id" of the last response, and the API reconstructs the context on its side. That saves you a lot of engineering effort and keeps your requests small. It does not make the history free, though: according to OpenAI's documentation, earlier turns in the chain are still billed as input tokens. It's a clear signal that OpenAI is focused on making it easier to build complex, multi-turn AI agents.
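To make the difference concrete, here is a minimal sketch that only builds the JSON request bodies and makes no network call. The field names "messages", "input", and "previous_response_id" follow OpenAI's Chat Completions and Responses APIs. The conversation text and the ID "resp_123" are placeholders; a real ID comes from the `id` of an earlier response. With the official Python SDK you would send these bodies with `client.chat.completions.create(**body)` and `client.responses.create(**body)`.

```python
# Build request bodies only: no API key or network access needed.
history = [
    {"role": "user", "content": "What is your refund policy?"},
    {"role": "assistant", "content": "Refunds are available within 30 days."},
]
new_turn = {"role": "user", "content": "Does that apply to sale items?"}


def chat_completions_body(history, new_turn, model="gpt-4o-mini"):
    # Stateless: every request carries the full transcript plus the new message.
    return {"model": model, "messages": history + [new_turn]}


def responses_body(previous_response_id, new_turn, model="gpt-4o-mini"):
    # Stateful: only the new input is sent; the server looks up earlier turns
    # through the response referenced by previous_response_id.
    return {
        "model": model,
        "input": [new_turn],
        "previous_response_id": previous_response_id,
    }


cc = chat_completions_body(history, new_turn)
rs = responses_body("resp_123", new_turn)  # placeholder response ID
print(len(cc["messages"]), len(rs["input"]))  # 3 1
```

The Chat Completions body grows with every turn, while the Responses body stays the same size no matter how long the conversation gets.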

It's also positioned as the successor to the Assistants API and as OpenAI's recommended starting point for new projects, while Chat Completions remains supported. OpenAI has already said it plans to deprecate the Assistants API in the first half of 2026, making the Responses API the clear path forward.

Key features of the OpenAI Responses API

The API isn't just about managing state; it comes with some powerful built-in features designed to help you build more capable AI agents right out of the box.

Simplified conversation state management

The headline feature is how it handles conversations. By using the "previous_response_id" parameter, you can string together multi-turn conversations without having to manually bundle up and resend the entire chat history. For more complicated setups, there's also a "conversation" object that gives you more structured control.

This is super handy, but there’s a catch that some devs are pointing out. When you let OpenAI manage the state on their servers, you're creating a bit of "vendor lock-in." Your conversation history lives with OpenAI, which could make it tricky to switch to another provider or an open-source model down the line without rebuilding your state management system. It's the classic trade-off between convenience and control.
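One way to soften that lock-in is to keep your own copy of each turn alongside the response ID you chain with. The sketch below is built on our own assumptions. `LocalTranscript` is a hypothetical helper, not part of any OpenAI SDK. It stores plain role/content messages, so you could later replay the conversation against a stateless API or a different provider.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LocalTranscript:
    """Hypothetical helper: mirrors a server-side conversation locally."""

    messages: list = field(default_factory=list)
    last_response_id: Optional[str] = None

    def record(self, user_text, assistant_text, response_id):
        # Keep both sides of the turn, plus the ID needed to chain the
        # next Responses API call via previous_response_id.
        self.messages.append({"role": "user", "content": user_text})
        self.messages.append({"role": "assistant", "content": assistant_text})
        self.last_response_id = response_id

    def as_messages(self):
        # Full history in the role/content shape that stateless chat APIs
        # expect, returned as a copy so callers can't mutate the log.
        return list(self.messages)


t = LocalTranscript()
t.record("Where is my order?", "It shipped yesterday.", "resp_abc")  # placeholder ID
print(t.last_response_id, len(t.as_messages()))  # resp_abc 2
```

You still get the convenience of server-side state for day-to-day calls. The local copy is your exit route if you ever need to move.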

Built-in tools for enhanced capabilities

One of the most exciting parts of the Responses API is its set of ready-made tools that give the model extra abilities.

- Web Search: This gives the model a live connection to the internet. Instead of being stuck with its training data, your AI can look up current events, find recent info, and give answers that are actually up-to-date.
- File Search: This is a huge deal for building knowledge-based agents. It lets the model search through documents you've uploaded to a vector store, so it can answer questions based on your company's internal docs, help articles, or any other private data.
- Code Interpreter: This tool provides a secure, sandboxed Python environment where the model can run code. It's perfect for tasks like data analysis, solving tough math problems, or even generating charts and files on the fly.

While these tools are great, they aren't exactly plug-and-play. You still have to configure them in your API calls and build the logic to handle what they return, which adds another layer to your development work.
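As a rough illustration of that configuration work, here is a sketch that assembles a `tools` list for a Responses API request and inspects a response's `output` for tool calls. The tool type names and fields ("web_search", "file_search" with "vector_store_ids", "code_interpreter" with a "container") reflect OpenAI's docs at the time of writing, so check the current reference before relying on them. `build_tools`, `tool_calls_in`, the store ID "vs_123", and `sample_output` are hypothetical. The sample output is a heavily trimmed stand-in, not real API data.

```python
def build_tools(vector_store_id=None, web=True, code=True):
    """Assemble a `tools` list for a Responses API request (no network call)."""
    tools = []
    if web:
        # Hosted web search ("web_search_preview" in earlier docs and examples).
        tools.append({"type": "web_search"})
    if vector_store_id:
        # Searches a vector store you have already created and filled with files.
        tools.append({"type": "file_search", "vector_store_ids": [vector_store_id]})
    if code:
        # "auto" lets OpenAI provision a sandbox container for the request.
        tools.append({"type": "code_interpreter", "container": {"type": "auto"}})
    return tools


def tool_calls_in(output_items):
    """Return the types of tool-call items found in a response's `output` list."""
    return [item["type"] for item in output_items if item["type"].endswith("_call")]


body = {
    "model": "gpt-4o",
    "input": "Summarize this week's outage reports and chart them by day.",
    "tools": build_tools(vector_store_id="vs_123"),  # placeholder store ID
}

# Hypothetical, trimmed `output` list shaped like the API's typed items.
sample_output = [
    {"type": "file_search_call"},
    {"type": "code_interpreter_call"},
    {"type": "message"},
]
print([tool["type"] for tool in body["tools"]])  # ['web_search', 'file_search', 'code_interpreter']
print(tool_calls_in(sample_output))  # ['file_search_call', 'code_interpreter_call']
```

In a real app, you would also read the citations, files, or charts attached to those items before showing the final message to a user.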

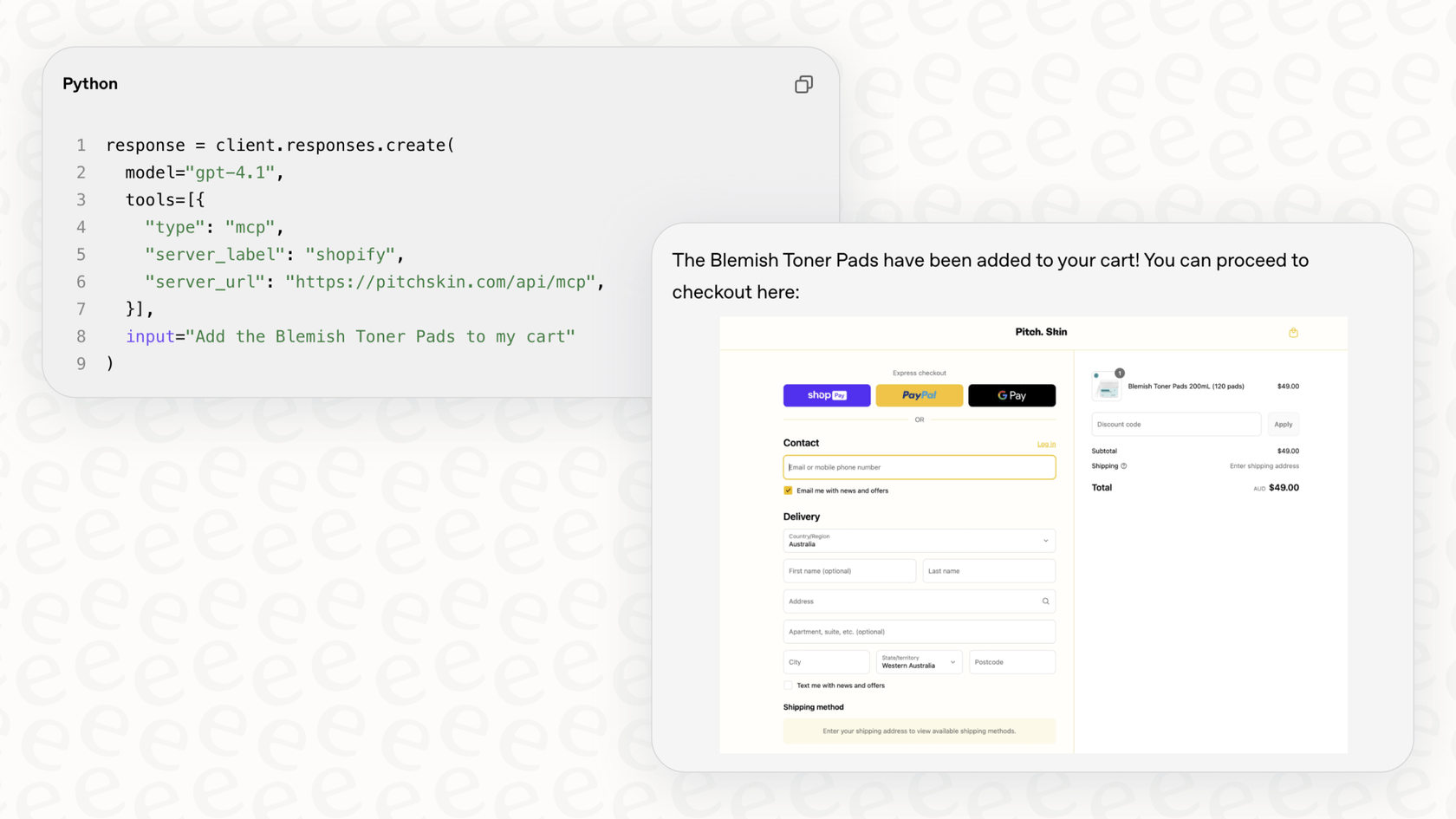

Advanced function calling and tool use

Beyond the built-in options, the API lets you define your own custom "function" tools. This is where you can really connect the AI to your own apps and services.

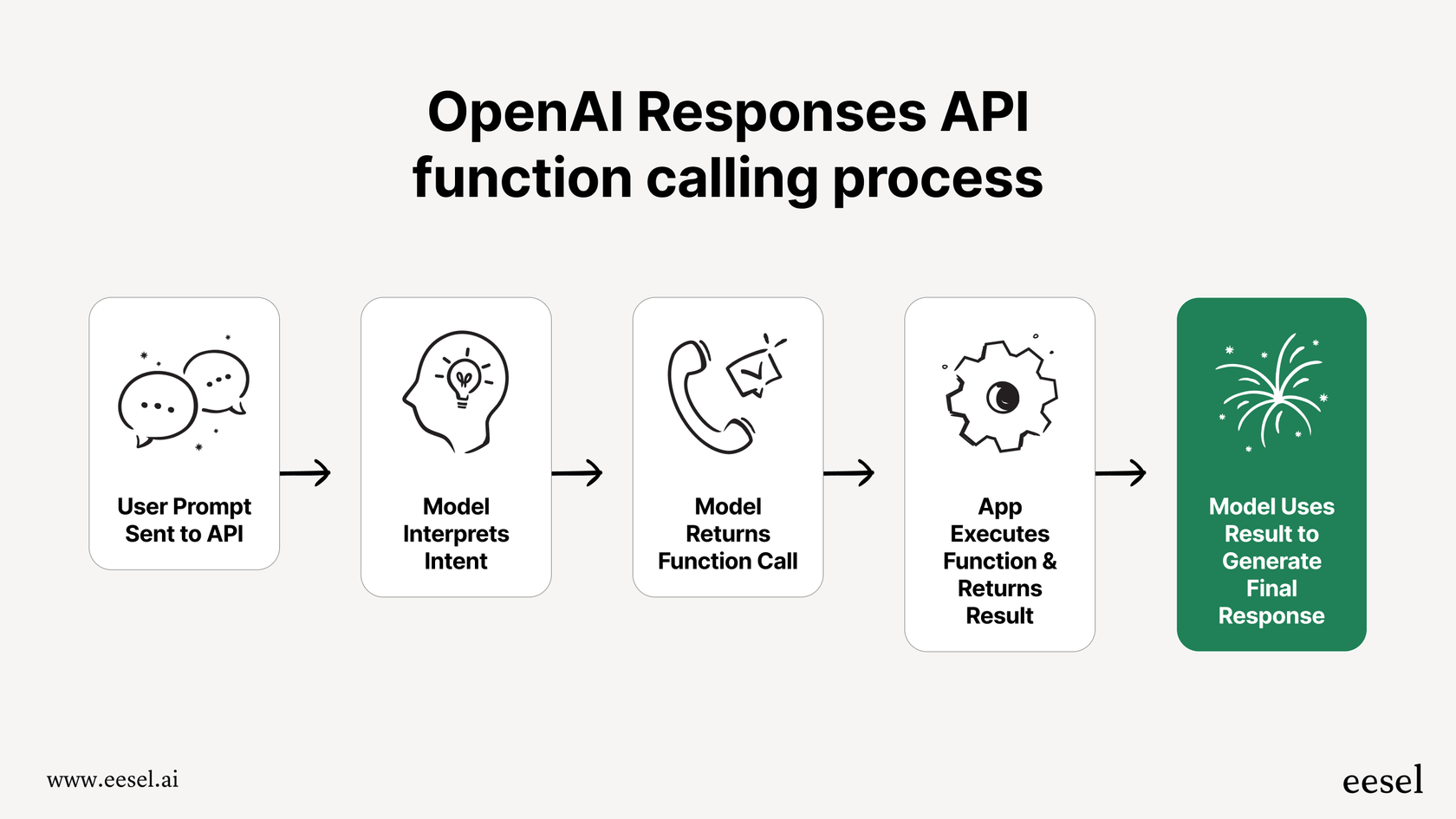

The process is pretty simple, but it's incredibly powerful:

- Your app sends a user's prompt to the model, along with a list of custom functions it's allowed to use.
- The model looks at the prompt and figures out whether one of your functions could help.
- If it finds a match, it doesn't answer the user directly. Instead, it returns a function call containing the name of the function to run and its arguments as JSON.
- Your app receives this, runs your actual function (like calling an internal API to get order details), and sends the result back to the model.
- Finally, the model uses that result to give the user a complete, helpful answer.

This lets you build agents that can take real action, like checking a customer's order history, processing a refund, or booking an appointment.
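Here is a minimal sketch of the app-side half of that loop, with the model's reply simulated so it runs offline. The tool schema uses the Responses API's flat function format ("type", "name", "description", "parameters"). The returned item types "function_call" and "function_call_output", with their "call_id" and "arguments" fields, follow OpenAI's function-calling guide. The function `get_order_status` and its fake data are hypothetical.

```python
import json

# Custom function tool in the Responses API's flat format.
get_order_tool = {
    "type": "function",
    "name": "get_order_status",  # hypothetical function name
    "description": "Look up the status of a customer's order by ID.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}


def get_order_status(order_id):
    # Hypothetical stand-in for a call to your internal order API.
    fake_db = {"A100": "shipped", "A101": "processing"}
    return {"order_id": order_id, "status": fake_db.get(order_id, "not found")}


LOCAL_FUNCTIONS = {"get_order_status": get_order_status}


def handle_function_calls(output_items):
    """Run each requested function and build the items to send back."""
    results = []
    for item in output_items:
        if item.get("type") != "function_call":
            continue  # ignore messages and other item types
        fn = LOCAL_FUNCTIONS[item["name"]]
        args = json.loads(item["arguments"])  # arguments arrive as a JSON string
        results.append({
            "type": "function_call_output",
            "call_id": item["call_id"],  # ties the result to the model's request
            "output": json.dumps(fn(**args)),
        })
    return results


# Simulated model reply asking the app to call the function.
model_output = [{
    "type": "function_call",
    "name": "get_order_status",
    "arguments": '{"order_id": "A100"}',
    "call_id": "call_1",
}]
follow_up = handle_function_calls(model_output)
print(follow_up[0]["output"])  # {"order_id": "A100", "status": "shipped"}
```

To finish the loop, you would send `follow_up` as the `input` of the next request, chained with "previous_response_id" and the same `tools`, so the model can write its final answer.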

OpenAI Responses API pricing

Alright, let's talk money. A big part of building with any API is understanding how much it's going to cost. OpenAI's pricing is mostly based on "tokens," which are basically pieces of words. You pay for the tokens you send in (input) and the tokens the model sends back (output).

The Responses API uses the same token-based pricing as the other APIs, but the exact cost depends on which model you use. Here’s a quick look at some of the popular models you can use with the Responses API, based on their standard rates per million tokens.

| Model | Input (per 1M tokens) | Output (per 1M tokens) |
|---|---|---|
| gpt-4o | $2.50 | $10.00 |
| gpt-4o-mini | $0.15 | $0.60 |
| gpt-5 | $1.25 | $10.00 |
| gpt-5-mini | $0.25 | $2.00 |

On top of the token costs, some of the built-in tools have their own fees.

- Code Interpreter: $0.03 per container session.
- File search storage: $0.10 per GB per day (the first 1 GB is free).
- Web search: $10.00 per 1,000 calls.

The stateful design spares you from resending and tracking history yourself, but don't count on it to cut your bill. OpenAI's documentation notes that earlier turns in a chained conversation are still billed as input tokens, and the built-in tools add their own fees on top. When you estimate costs, account for both token usage and tool fees. You can find the full details on the official OpenAI pricing page.
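For a quick back-of-the-envelope estimate, the arithmetic can be wrapped in a small function. This is a sketch under stated assumptions. The rates are copied from the tables in this section and are only a snapshot. The free storage allowance is applied as a flat 1 GB deduction per day. `estimate_cost` is our own hypothetical helper. It also ignores other fees, such as file search tool calls.

```python
# Snapshot of the rates listed in this article (USD); check the pricing page.
PRICES_PER_1M = {
    "gpt-4o": (2.50, 10.00),
    "gpt-4o-mini": (0.15, 0.60),
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
}
CODE_INTERPRETER_PER_SESSION = 0.03
WEB_SEARCH_PER_1K_CALLS = 10.00
FILE_STORAGE_PER_GB_DAY = 0.10
FREE_STORAGE_GB = 1.0  # assumption: deducted from stored GB every day


def estimate_cost(model, input_tokens, output_tokens, code_sessions=0,
                  web_search_calls=0, storage_gb=0.0, days=0):
    """Rough USD estimate. input_tokens should include everything billed as
    input, including earlier turns re-counted in chained conversations."""
    in_rate, out_rate = PRICES_PER_1M[model]
    token_cost = input_tokens / 1e6 * in_rate + output_tokens / 1e6 * out_rate
    tool_cost = (
        code_sessions * CODE_INTERPRETER_PER_SESSION
        + web_search_calls / 1000 * WEB_SEARCH_PER_1K_CALLS
        + max(storage_gb - FREE_STORAGE_GB, 0) * FILE_STORAGE_PER_GB_DAY * days
    )
    return round(token_cost + tool_cost, 2)


# 10M input and 2M output tokens on gpt-4o-mini, plus 100 code sessions,
# 500 web searches, and 3 GB of file storage kept for 30 days.
print(estimate_cost("gpt-4o-mini", 10_000_000, 2_000_000, code_sessions=100,
                    web_search_calls=500, storage_gb=3, days=30))  # 16.7
```

In this example, tools account for $14.00 of the $16.70 total, while tokens cost only $2.70. That is a good reminder that tool fees can easily outweigh token costs on a cheap model.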

OpenAI Responses API vs. Chat Completions vs. Assistants API

With three different APIs on the table, it can be tough to figure out which one you should be using. Here’s a simple breakdown of how they compare.

- Chat Completions API: This is the classic, stateless workhorse. It's simple, fast, and really flexible, which is why so many open-source tools and libraries are built for it. The main drawback is that you have to manage the entire conversation history yourself. There's also a more subtle problem: it can't preserve the "reasoning traces" of OpenAI's newest models, which can make them seem less smart than they actually are.
- Assistants API: This was OpenAI's first attempt at a stateful API for building agents. It introduced useful concepts like Threads and Runs to manage conversations. The feedback from many developers, though, was that it felt slow and a bit clunky. It's now on its way out to make room for the more flexible Responses API.
- OpenAI Responses API: This is the new flagship. It's designed to give you the best of both worlds, combining the state management of the Assistants API with a speed and flexibility that's closer to the Chat Completions API, all while adding those powerful new built-in tools.

Here’s a quick comparison table:

| Feature | Chat Completions API | Assistants API (Legacy) | OpenAI Responses API |
|---|---|---|---|
| State Management | Stateless (you handle it) | Stateful (via Threads) | Stateful (via "previous_response_id") |
| Speed | Fast | Slow | Fast |
| Built-in Tools | No | Code Interpreter, File Search (formerly Retrieval) | Web Search, File Search, Code Interpreter |
| Flexibility | High (widely supported format) | Lower (rigid structure) | High (combines simplicity & power) |
| Conversation History | Sent with each call | Managed by OpenAI | Managed by OpenAI (optional) |
| Future Support | Maintained | Deprecation planned | Actively Developed |

The challenges of building directly with the OpenAI Responses API

While the OpenAI Responses API gives you an amazing set of building blocks, let's be real: stringing together a few API calls is a world away from having a support bot you can actually trust with your customers.

Complexity and orchestration: The API gives you the tools, but you have to build all the logic that ties them together. For a support agent, that means creating some pretty complex workflows. When should it try to answer a question directly? When should it use a tool to look up an order? And most importantly, when should it give up and hand the conversation over to a human? That orchestration layer takes a ton of engineering time to build and get right.
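Those three questions boil down to a routing decision that your code has to make on every turn. Here is a deliberately tiny, hypothetical sketch of such a policy. `Turn`, `route`, the confidence score, and the 0.7 threshold are all our own illustrative assumptions, not OpenAI features. A production agent would need far richer signals, logging, and tuning.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """Hypothetical summary of what your app knows about the current message."""
    needs_account_data: bool       # e.g. the question mentions an order or refund
    answer_confidence: float       # your own score from 0.0 to 1.0, not an API field
    customer_asked_for_human: bool


def route(turn: Turn, confidence_threshold: float = 0.7) -> str:
    """Hypothetical policy: answer, call a tool, or hand off to a human."""
    if turn.customer_asked_for_human:
        return "handoff"
    if turn.needs_account_data:
        return "call_tool"  # e.g. a custom function such as an order lookup
    if turn.answer_confidence >= confidence_threshold:
        return "answer"
    return "handoff"  # low confidence: escalate rather than guess


print(route(Turn(False, 0.9, False)))  # answer
print(route(Turn(True, 0.9, False)))   # call_tool
print(route(Turn(False, 0.4, False)))  # handoff
```

Even this toy version raises design questions, such as where the confidence score comes from and what happens after a tool call fails. Those questions are exactly the engineering time the paragraph above warns about.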

Lack of a user-friendly interface: An API is just an API. To actually manage a support agent, your team needs a dashboard. They need a place to tweak prompts, manage knowledge sources, look at analytics, and see how the agent is doing. Building all of that internal tooling is like developing a whole separate product just to manage your first one.

Connecting and managing knowledge: The "file_search" tool is cool, but it means you have to upload and manage all your files and vector stores through the API. For most support teams, knowledge is spread out all over the place: in Google Docs, Confluence, and past tickets in Zendesk. Trying to manually gather, upload, and constantly sync all of that is a huge data management headache.

Testing and deployment risk: How do you know if your AI agent is ready for prime time? There's no built-in way to simulate how it would have handled thousands of your past support tickets. You either have to build a complicated testing setup from scratch or just cross your fingers and risk deploying an agent that gives bad answers and frustrates your customers.

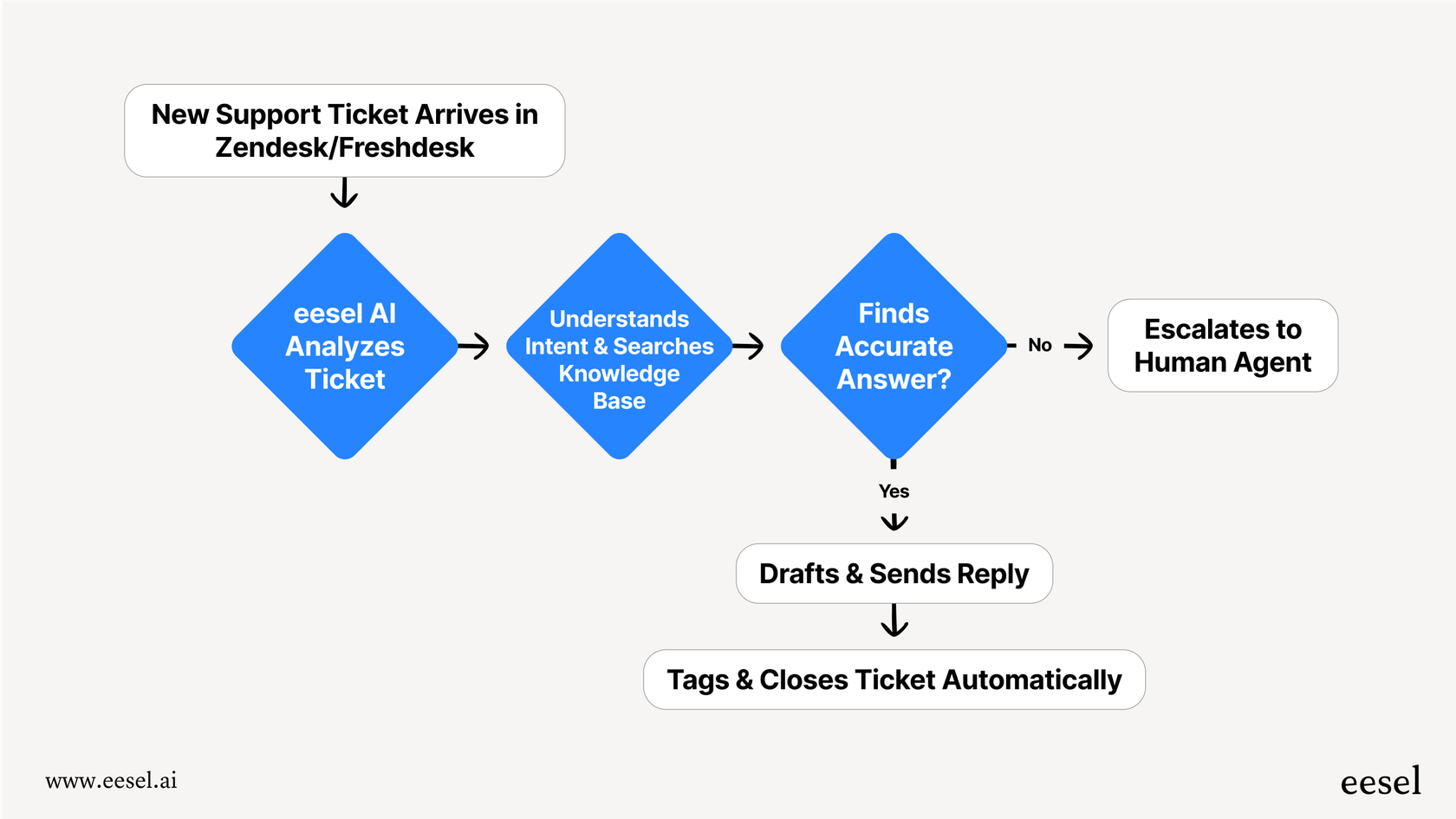

This is where a platform built specifically for support automation can make a world of difference. For instance, eesel AI uses powerful models like OpenAI's under the hood but gives you a self-serve platform that takes care of all this complexity for you.

How eesel AI simplifies building powerful AI support agents

Instead of wrestling with APIs, eesel AI offers a much smarter path to building and deploying AI agents for your support team. It’s not about replacing powerful models but about making them truly work for support teams without needing a dedicated crew of AI engineers.

Here’s how eesel AI handles the challenges of building from scratch:

- Instead of writing complex code for orchestration, eesel AI provides a visual, fully customizable workflow engine. You can set up rules and actions without writing a single line of code.
- Instead of managing files through an API, eesel AI offers one-click integrations. You can instantly connect and sync your knowledge from helpdesks like Zendesk and Freshdesk, wikis like Confluence, and collaboration tools like Slack.
- Instead of risky, untested launches, eesel AI's simulation mode lets you test your agent on thousands of your real historical tickets. You can see exactly how it would have performed and get a solid forecast of your automation rate before it ever talks to a single customer.

Final thoughts on the OpenAI Responses API

The OpenAI Responses API is a big step forward. It gives developers a stateful, tool-rich environment to build the next wave of AI agents, successfully blending the best aspects of the Chat Completions and Assistants APIs into one.

But the power of a raw API comes with the responsibility of building everything else around it. For a specialized job like customer support, the journey from a simple API call to a reliable, production-ready agent is packed with challenges in orchestration, knowledge management, and testing.

This is where platforms like eesel AI offer a real advantage. By handling the underlying complexity, they let teams deploy powerful, custom-trained AI support agents in minutes, not months.

Get started with AI support automation today

The OpenAI Responses API opens up a ton of possibilities. But you don't have to build it all from the ground up.

With eesel AI, you can deploy an AI agent trained on your company's knowledge and integrated with your helpdesk in minutes. You can even simulate its performance on your past tickets to see the potential ROI before you commit.

Start your free trial today or book a demo to see how it works.

Frequently asked questions

The OpenAI Responses API is OpenAI's new, advanced, stateful API designed for building more complex, multi-turn AI agents. It's significant because it's the successor to the Assistants API and OpenAI's recommended API for new projects, while Chat Completions remains supported.

The OpenAI Responses API simplifies history management by being "stateful." Instead of manually sending the entire conversation history with each request, you can pass a "previous_response_id" parameter, and OpenAI tracks the context on its servers. This saves engineering effort and keeps requests small, although earlier turns are still billed as input tokens.

The OpenAI Responses API offers powerful built-in tools such as Web Search for live internet access, File Search for searching private documents, and a Code Interpreter for running Python code in a sandboxed environment. These tools enhance the model's capabilities beyond its training data.

Pricing for the OpenAI Responses API is primarily token-based, meaning you pay for the input and output tokens based on the model used. Additionally, certain built-in tools like Code Interpreter, File Search storage, and Web Search have separate fees.

The OpenAI Responses API is stateful and manages conversation history for you, while the Chat Completions API is stateless, requiring you to manage history. The Responses API also includes powerful built-in tools and is actively being developed as the flagship API, whereas the Chat Completions API will be maintained but lacks these advanced features.

Building directly with the OpenAI Responses API can present challenges such as significant complexity in orchestration logic, the need to develop a custom user interface for management, difficulties in integrating and syncing knowledge from various sources, and the lack of built-in robust testing and deployment tools.

Platforms like eesel AI simplify the complexities of the OpenAI Responses API by providing visual workflow engines for orchestration, one-click integrations for knowledge management, and simulation modes for testing agent performance. This allows teams to deploy powerful AI agents quickly without extensive engineering.


Article by Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.