Typing questions into a chatbot feels pretty normal now, doesn't it? But the next big thing in AI isn't about typing; it's about talking. We're on the cusp of having real-time, conversational voice AI that can understand us, cope with interruptions, and respond just like another person would. It’s a shift that promises a much more natural way to interact with technology.

Powering this evolution are tools like the OpenAI Realtime API, which gives developers the building blocks for these fluid, voice-first experiences. But here’s the thing: while the tech itself is incredible, turning it into a polished business tool that’s ready for prime time is another story entirely. It's a journey that usually requires serious technical know-how, a lot of development hours, and a healthy dose of patience.

So, let's pull back the curtain on the OpenAI Realtime API, see what it can do, and talk honestly about what it takes to build with it.

What is the OpenAI Realtime API?

At its core, the OpenAI Realtime API is a tool that lets developers create applications with low-latency, speech-to-speech conversations. If you’ve ever used ChatGPT’s Advanced Voice Mode, this is the engine that makes that kind of interaction possible, but now it’s available for anyone to use.

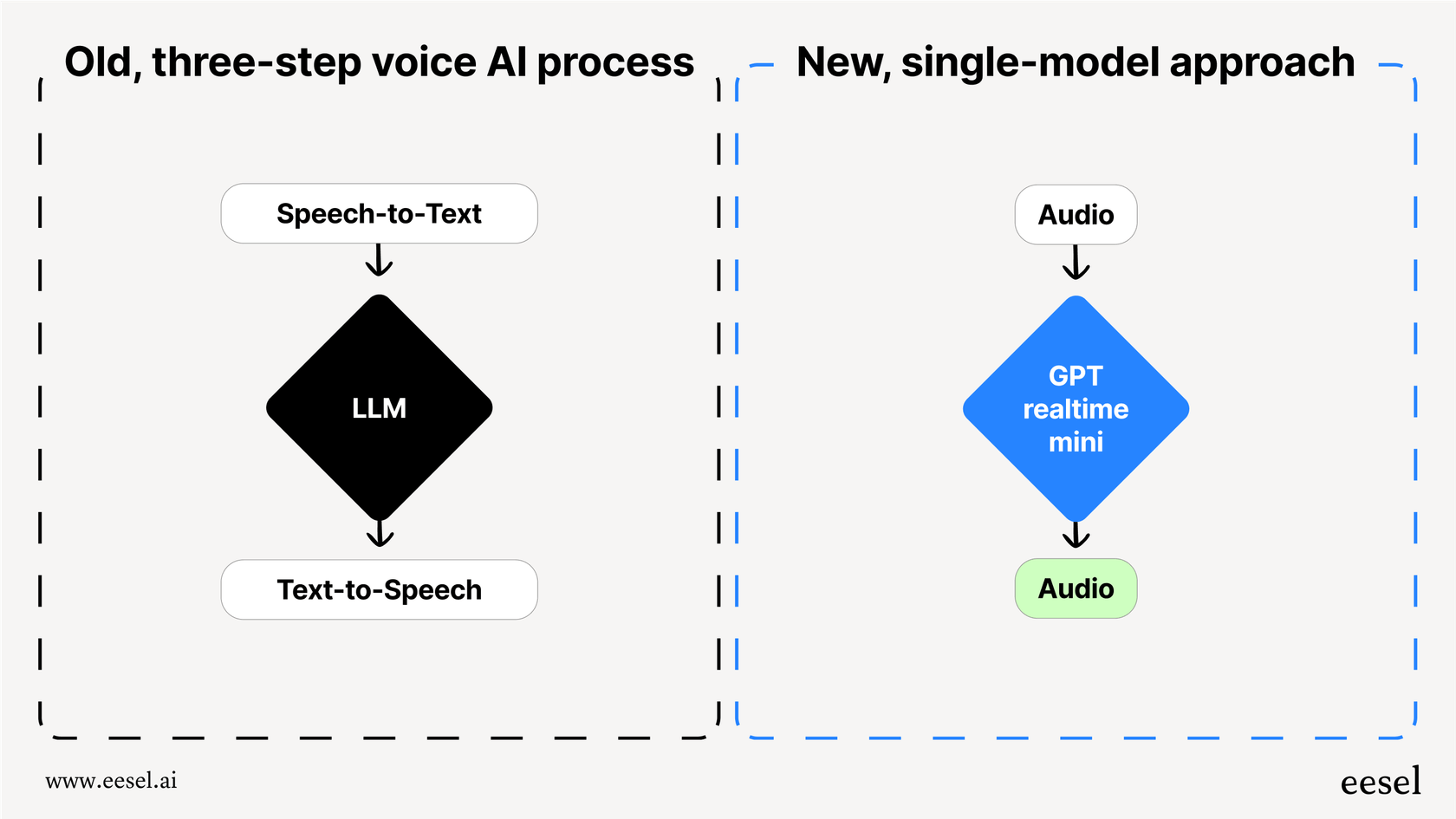

Before this API came along, building a voice agent was a clunky, multi-step headache. You had to string together several different APIs:

- Speech-to-Text (STT): First, you'd use an API like Whisper to turn the user's spoken words into text.
- Large Language Model (LLM): Next, you'd send that text to an API like GPT-4 to figure out what to say back.
- Text-to-Speech (TTS): Finally, you'd use another API to convert the text response back into audio.

This chain of events worked, but it was slow. Each handoff added a little delay, creating an awkward lag that made conversations feel stilted and unnatural. More importantly, it stripped away all the nuance of human speech. Things like tone, emotion, and emphasis were lost the moment audio became text, leaving you with a flat, robotic interaction.
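To see why the handoffs hurt, it helps to add the delays up. The sketch below models a strictly sequential pipeline where each stage must finish before the next one starts and every handoff between stages costs a fixed overhead. All latency figures are hypothetical, picked only to illustrate how the delays stack; they are not measurements of Whisper, GPT-4, or any TTS service.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    latency_ms: float  # hypothetical per-stage latency, not a measured figure


def pipeline_latency_ms(stages: list[Stage], handoff_ms: float) -> float:
    """Time before the user hears a reply when stages run strictly in sequence.

    Each stage must finish before the next starts, and every handoff between
    two stages adds a fixed overhead (network round trip, serialization).
    """
    if not stages:
        return 0.0
    handoffs = len(stages) - 1
    return float(sum(s.latency_ms for s in stages) + handoffs * handoff_ms)


# Hypothetical numbers, chosen only to show how the delays accumulate.
cascaded = [Stage("speech-to-text", 300), Stage("llm", 500), Stage("text-to-speech", 250)]
unified = [Stage("speech-to-speech", 500)]

print(pipeline_latency_ms(cascaded, handoff_ms=100))  # 1250.0
print(pipeline_latency_ms(unified, handoff_ms=100))   # 500.0
```

Even with generous numbers, the cascaded version pays for two extra handoffs on every single turn, which is exactly the lag users notice.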

The Realtime API completely changes this by using a single, unified model (like "gpt-realtime") that handles audio from start to finish. It listens to audio and generates an audio response directly. This slashes the latency and preserves the richness of speech, paving the way for faster, more expressive, and genuinely conversational AI.

How the OpenAI Realtime API works

The API isn’t just about voice; it’s designed for low-latency, multimodal communication. That’s a fancy way of saying it can juggle different types of information at once, making the agents you build much smarter and more aware of their surroundings.

Multimodal capabilities of the OpenAI Realtime API

The Realtime API runs on models like gpt-realtime and the earlier GPT-4o realtime previews, which are "natively multimodal." Think of it this way: the AI wasn't just taught to read text. It was trained from day one to understand and process a mix of audio, images, and text together. This allows for interactions that are far more dynamic than what a simple voice-only agent could ever manage.

Connection methods

To achieve that real-time speed, you need to keep a constant connection open to the API. OpenAI provides a few connection methods, and the right choice really depends on what you're building and your team's tech stack.

| Connection Method | Ideal Use Case | Technical Overhead |
|---|---|---|
| WebRTC | Browser and client-side apps that need the absolute lowest latency. | High (Involves managing peer connections and SDP offers/answers). |
| WebSocket | Server-side apps where low latency is still a priority. | Medium (Simpler than WebRTC but still requires managing a persistent connection). |
| SIP | Integrating with VoIP phone systems (like in a call center). | High (Requires knowledge of telephony protocols and infrastructure). |

Choosing and setting up the right connection method is no small task. It takes a good bit of technical planning and development effort, and it’s often one of the first hurdles teams run into when trying to build a custom voice agent.
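For the server-side WebSocket option, the setup boils down to a URL, an auth header, and a first `session.update` event. Here is a minimal sketch, assuming the GA endpoint `wss://api.openai.com/v1/realtime` with the model passed as a `model` query parameter, and assuming the GA session shape that includes `"type": "realtime"`. Preview versions of the API also expected an `OpenAI-Beta: realtime=v1` header, so check the current API reference before relying on these details. The helper names are hypothetical, and actually opening the socket is left to whichever WebSocket client library you use.

```python
import json
from urllib.parse import urlencode

REALTIME_WS_BASE = "wss://api.openai.com/v1/realtime"  # assumed GA endpoint


def realtime_ws_url(model: str = "gpt-realtime") -> str:
    # The model is selected with a query parameter on the WebSocket URL.
    return f"{REALTIME_WS_BASE}?{urlencode({'model': model})}"


def realtime_headers(api_key: str) -> dict[str, str]:
    # Server-side connections authenticate with a standard API key.
    # Never ship this key to a browser; client-side (WebRTC) apps are meant
    # to use short-lived credentials minted by your server instead.
    return {"Authorization": f"Bearer {api_key}"}


def session_update(instructions: str) -> str:
    # Typically the first client event after connecting: configure the session.
    # "type": "realtime" reflects the GA session shape (assumption; the preview
    # API did not use this field).
    return json.dumps({
        "type": "session.update",
        "session": {"type": "realtime", "instructions": instructions},
    })


print(realtime_ws_url())  # wss://api.openai.com/v1/realtime?model=gpt-realtime
```

Keeping these pieces as small pure functions makes them easy to unit-test separately from the long-lived connection itself, which is where most of the operational complexity lives.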

Key features of the OpenAI Realtime API

Beyond its core design, the Realtime API includes a set of features that make it a powerful toolkit for developers. These are the pieces you'll use to create smart and dynamic voice agents.

Speech-to-speech interaction

This is the main event. Because a model like "gpt-realtime" works with audio directly, it can pick up on subtle cues that get lost when speech is first transcribed to text, like laughter, sarcasm, or emotional shifts. It can then generate a response that sounds much more natural and expressive. With the API's general release, OpenAI also added two new voices, Marin and Cedar, which are available only in the Realtime API and sound remarkably realistic.
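In practice, "working with audio directly" means your app streams small chunks of raw audio to the API as the user speaks. The sketch below splits a PCM buffer into base64-encoded `input_audio_buffer.append` events. It assumes 16-bit mono PCM at 24 kHz (our understanding of the default input format) and a 100 ms chunk size chosen for illustration; `audio_append_events` is a hypothetical helper name.

```python
import base64
import json

SAMPLE_RATE_HZ = 24_000  # assumed input format: 16-bit mono PCM at 24 kHz
BYTES_PER_SAMPLE = 2


def audio_append_events(pcm: bytes, chunk_ms: int = 100) -> list[str]:
    """Split raw PCM16 audio into base64-encoded input_audio_buffer.append events."""
    # Bytes per chunk: samples per second * bytes per sample * seconds per chunk.
    chunk_bytes = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * chunk_ms // 1000
    events = []
    for start in range(0, len(pcm), chunk_bytes):
        chunk = pcm[start:start + chunk_bytes]
        events.append(json.dumps({
            "type": "input_audio_buffer.append",
            "audio": base64.b64encode(chunk).decode("ascii"),
        }))
    return events


# One second of silence becomes ten 100 ms events of 4,800 bytes each.
print(len(audio_append_events(bytes(48_000))))  # 10
```

Small chunks keep latency low because the model can start listening before the user finishes speaking, at the cost of more messages on the wire.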

Voice Activity Detection (VAD)

Voice Activity Detection is what makes an AI conversation feel less like a transaction and more like a real chat. It's the feature that lets the AI know when someone has started or stopped talking. This is absolutely essential for natural turn-taking. If a user wants to jump in and change the subject, they can just start talking. The agent will recognize it and adapt instead of plowing ahead or waiting for an awkward pause.
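The core idea behind turn-taking can be shown with a deliberately naive energy-based detector. This is not how the API implements VAD; it is a simplified illustration of the general technique. Speech "starts" on the first loud frame and only "stops" after several consecutive quiet frames, so short pauses between words don't end the user's turn. The threshold and frame counts are arbitrary assumptions.

```python
import math
from typing import Iterable, Iterator


def rms(frame: list[int]) -> float:
    """Root-mean-square amplitude of one frame of 16-bit samples."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def detect_turns(frames: Iterable[list[int]], threshold: float = 500.0,
                 silence_frames: int = 5) -> Iterator[tuple[str, int]]:
    """Yield ("speech_started", i) and ("speech_stopped", i) events.

    i is the index of the frame at which the event is emitted. Speech stops
    only after `silence_frames` consecutive quiet frames.
    """
    speaking = False
    quiet_run = 0
    for i, frame in enumerate(frames):
        loud = rms(frame) >= threshold
        if loud:
            quiet_run = 0
            if not speaking:
                speaking = True
                yield ("speech_started", i)
        elif speaking:
            quiet_run += 1
            if quiet_run >= silence_frames:
                speaking = False
                quiet_run = 0
                yield ("speech_stopped", i)


loud, quiet = [1000] * 10, [0] * 10
frames = [quiet] * 3 + [loud] * 4 + [quiet] * 2 + [loud] * 3 + [quiet] * 6
print(list(detect_turns(frames)))  # [('speech_started', 3), ('speech_stopped', 16)]
```

Note how the two-frame pause in the middle does not end the turn. With the Realtime API you normally configure this on the session instead (for example a `server_vad` turn-detection mode with a threshold and a silence duration) and react to the server's `input_audio_buffer.speech_started` and `input_audio_buffer.speech_stopped` events; check the current session reference for exact field names.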

Function calling and tools

A voice agent is only useful if it can actually do things. The Realtime API supports function calling, which allows the agent to connect to outside tools and data sources to fetch information or complete tasks. For instance, a support agent could use a function to look up a customer's order status in your system or process a refund on the spot. While this is incredibly powerful, it's up to you, the developer, to build, connect, and maintain every single one of these tool integrations.
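Here is roughly what one of those integrations looks like. The sketch defines a tool schema, and when the server reports a completed function call (a `response.function_call_arguments.done` event carrying `name`, `call_id`, and a JSON-string `arguments`), it runs the matching handler and returns the two client events that hand the result back: a `function_call_output` conversation item, then `response.create` so the model continues speaking. The tool `lookup_order_status` and the `FAKE_ORDERS` data are hypothetical stand-ins for your own systems, and the event shapes reflect our reading of the API docs, so verify them before use.

```python
import json
from typing import Any, Callable

# Hypothetical tool schema; register it via the session's "tools" list.
LOOKUP_ORDER_TOOL = {
    "type": "function",
    "name": "lookup_order_status",
    "description": "Look up the shipping status of a customer's order.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}

# Stand-in for your real order system (hypothetical data).
FAKE_ORDERS = {"A100": "shipped", "A101": "processing"}


def lookup_order_status(order_id: str) -> dict[str, Any]:
    return {"order_id": order_id, "status": FAKE_ORDERS.get(order_id, "not_found")}


HANDLERS: dict[str, Callable[..., dict[str, Any]]] = {
    "lookup_order_status": lookup_order_status,
}


def handle_function_call(event: dict[str, Any]) -> list[str]:
    """Run the requested tool and return the client events that report its result."""
    handler = HANDLERS.get(event["name"])
    if handler is None:
        result: dict[str, Any] = {"error": f"unknown tool {event['name']}"}
    else:
        result = handler(**json.loads(event["arguments"]))
    output_item = {
        "type": "conversation.item.create",
        "item": {
            "type": "function_call_output",
            "call_id": event["call_id"],
            "output": json.dumps(result),
        },
    }
    # Ask the model to continue now that it has the tool result.
    return [json.dumps(output_item), json.dumps({"type": "response.create"})]
```

Every real tool needs its own schema, handler, error handling, and permissions checks, which is where the maintenance burden described above comes from.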

Image and text inputs

Since the API is multimodal, users aren't stuck with just their voice. They can add other information to the conversation. A customer could be on a call with a support agent, send a screenshot of an error message, and ask, "What am I looking at here?" The agent can see the image, understand the context, and give a helpful spoken answer.
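Sending that screenshot amounts to adding a user message with an image part and a text part. The sketch below builds such a `conversation.item.create` event with the image embedded as a base64 data URL. The `input_image` / `image_url` content shape is based on our reading of the GA documentation (earlier preview models did not accept images), and `image_question_event` is a hypothetical helper name.

```python
import base64
import json


def image_question_event(png_bytes: bytes, question: str) -> str:
    """Build a user message that pairs a screenshot with a text question."""
    data_url = "data:image/png;base64," + base64.b64encode(png_bytes).decode("ascii")
    return json.dumps({
        "type": "conversation.item.create",
        "item": {
            "type": "message",
            "role": "user",
            "content": [
                {"type": "input_image", "image_url": data_url},  # assumed GA shape
                {"type": "input_text", "text": question},
            ],
        },
    })
```

The image then becomes part of the conversation context, so the spoken answer that follows can refer to what is on screen.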

Common OpenAI Realtime API use cases and limitations

The potential for low-latency voice AI is huge, but a few use cases have quickly become the most popular. It's also worth being realistic about the hurdles you'll face building these applications from scratch.

Use cases

- Customer support agents: Answering inbound calls, handling common questions, and routing trickier issues to the right human agent.
- Personal assistants: Helping with scheduling, setting reminders, and getting information hands-free.
- Language learning apps: Creating realistic conversation partners to help users practice speaking a new language.
- Educational tools: Building interactive tutors that can verbally explain complex topics and answer student questions.

Limitations of a DIY approach

Building a voice agent with the raw API might sound exciting, but it's a massive engineering project that's about much more than just calling an endpoint.

- The development lift is huge: You’re not just plugging in an API; you’re building an entire application. This means managing infrastructure, handling the state of the conversation, designing the logic, and making sure the whole system is reliable and can scale.
- No business workflows included: The API gives you the engine, but you have to build the car. All the business-specific logic for triaging tickets, escalating to the right team, tracking interactions, and reporting on how well it’s working has to be built from the ground up.
- No built-in analytics or testing: How do you know if your agent is actually any good? Without dedicated tools, there’s no easy way to test your agent on past conversations, measure its accuracy, or figure out where your knowledge base is falling short.

This is where the classic "build vs. buy" debate comes in. For teams that need a production-ready AI support solution without waiting months for development, a platform like eesel AI offers a much more direct path. It provides a no-code workflow engine, one-click helpdesk integrations, and powerful simulation tools, letting you go live in minutes, not months.

OpenAI Realtime API pricing

The API is priced based on audio tokens, which are calculated differently than text tokens. You’re billed for both the audio you send to the model (input) and the audio the model sends back to you (output). This can make it difficult to predict costs, since they depend on how long and complex each conversation is.

Here’s a quick look at the pricing for the "gpt-realtime" model (standard tier), which is 20% cheaper than the earlier gpt-4o-realtime-preview model:

| Token Type | Price per 1M tokens (USD) |
|---|---|
| Audio Input | $32.00 |
| Cached Audio Input | $0.40 |
| Audio Output | $64.00 |

(Pricing information based on OpenAI's pricing page.)
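The arithmetic behind a bill is simple once you know the token counts; the hard part is predicting those counts. This sketch applies the audio rates from the table above to a set of token totals (for example, summed from the usage figures the API reports per response). The month of traffic in the example is entirely hypothetical, and text tokens, which are billed separately, are left out.

```python
# Per-million-token audio prices (USD) from the table above;
# check OpenAI's pricing page for current rates.
PRICES_PER_MILLION = {
    "audio_input": 32.00,
    "cached_audio_input": 0.40,
    "audio_output": 64.00,
}


def estimate_cost_usd(audio_input: int, audio_output: int, cached_audio_input: int = 0) -> float:
    """Estimate audio cost from token counts: tokens / 1M * price per 1M."""
    usage = {
        "audio_input": audio_input,
        "cached_audio_input": cached_audio_input,
        "audio_output": audio_output,
    }
    total = sum(PRICES_PER_MILLION[k] * n / 1_000_000 for k, n in usage.items())
    return round(total, 6)


# Hypothetical month: 2M fresh input, 1M cached input, 1.5M output tokens.
print(estimate_cost_usd(audio_input=2_000_000, audio_output=1_500_000,
                        cached_audio_input=1_000_000))  # 160.4
```

Because output audio costs twice as much as input audio, long-winded agent replies move the total far more than long customer questions do.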

While token-based pricing is flexible for developers who are just experimenting, it can create unpredictable bills for businesses with high-volume support channels. One busy month could result in a surprisingly large invoice, making it tough to budget effectively.

The simpler alternative to the OpenAI Realtime API: AI support agents with eesel AI

Building directly with the OpenAI Realtime API is a fantastic option for developers creating brand-new applications from scratch. However, for businesses looking to automate customer support, improve their IT service management, or power internal Q&A, a dedicated platform is almost always the faster, more cost-effective, and more powerful choice right out of the box.

eesel AI is a complete AI support platform that uses the power of advanced models like those behind the Realtime API, but it saves you from having to write a single line of code for integrations or workflow management.

Here’s how it addresses the challenges of the DIY approach:

-

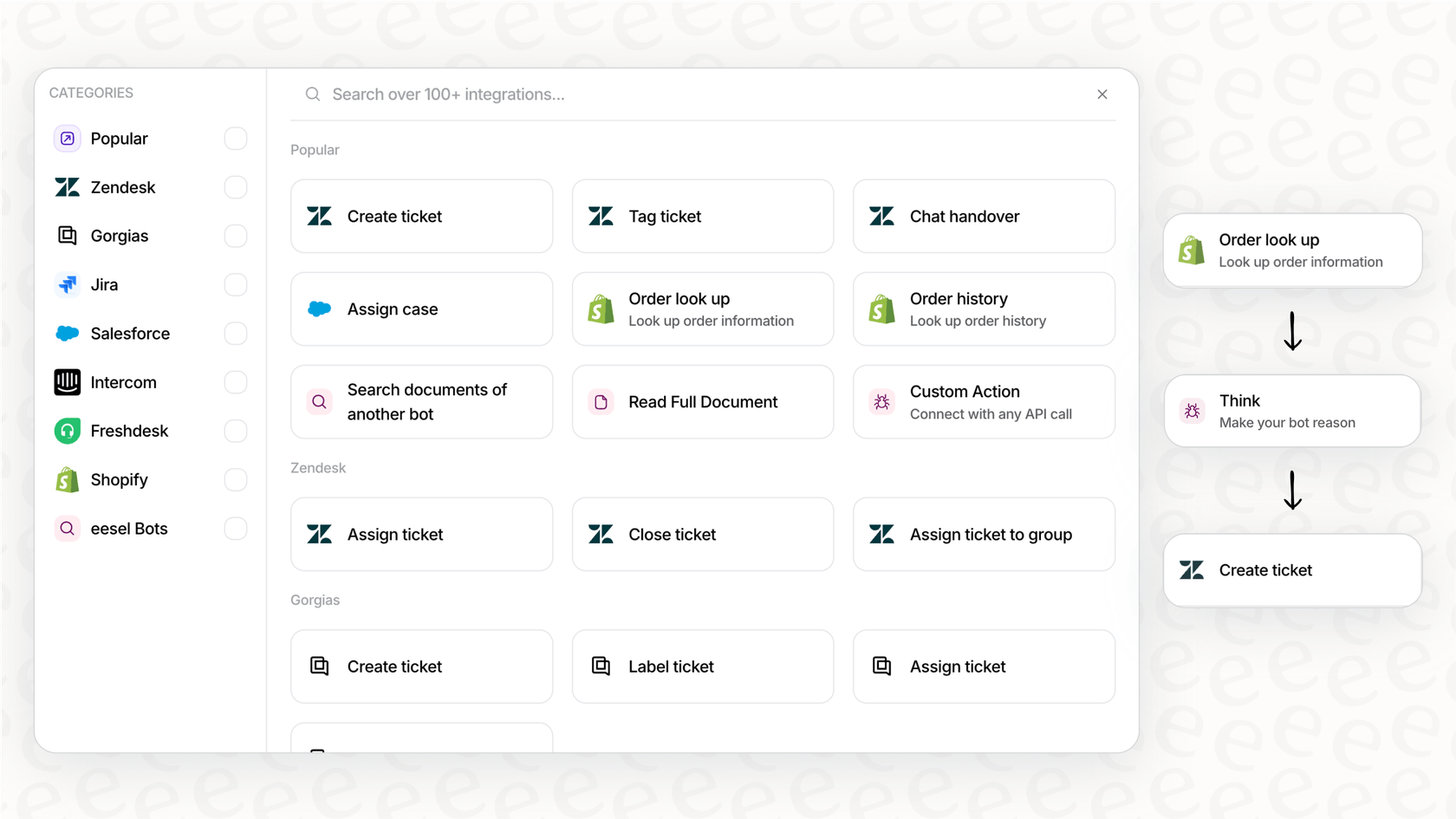

Go live in minutes: Instead of spending months wrestling with WebSockets and infrastructure, you can connect your helpdesk (like Zendesk, Freshdesk, or Intercom) and knowledge sources with a single click. Your AI agent can start learning from your past tickets, help center articles, and internal documents right away.

-

Test with confidence: eesel AI’s simulation mode lets you test your agent on thousands of your real historical tickets in a safe environment. You can see how it would have responded, tweak its behavior, and get accurate forecasts on resolution rates and cost savings before it ever interacts with a live customer.

-

Total control and customization: With a simple prompt editor and a no-code workflow engine, you decide exactly which tickets your AI handles and what actions it can take. You can set up rules to escalate complex issues, tag tickets automatically, or even call external APIs to get order information.

-

Predictable pricing: eesel AI plans are based on a fixed number of monthly AI interactions, with no surprise per-resolution fees. This makes your budget simple and transparent, getting rid of the guesswork that comes with a variable, token-based model.

Final thoughts on the OpenAI Realtime API

The OpenAI Realtime API is a genuinely impressive piece of technology. It’s closing the gap between how humans and machines talk, paving the way for a future where voice-first AI feels completely natural. It gives developers an incredibly powerful engine to build amazing things.

However, the road from an API key to a reliable, production-ready business tool is long and full of technical challenges. For most businesses, especially those focused on customer service and IT support, a platform built for that specific purpose just delivers value faster and more reliably. You get all the power of the underlying AI, but wrapped in a suite of tools designed for the exact job you need to do.

Ready to see what a purpose-built AI support agent can do for you? Start your free trial with eesel AI and automate your frontline support in minutes.

Frequently asked questions

The OpenAI Realtime API is designed to let developers build applications that support low-latency, speech-to-speech conversations. It utilizes a single, unified model to process audio directly, enabling fluid and natural voice-first experiences.

Unlike previous multi-step approaches, the OpenAI Realtime API handles audio from start to finish, significantly reducing latency and preserving subtle cues like tone and emotion. This unified processing leads to much more natural and expressive AI interactions.

To ensure real-time speed, the OpenAI Realtime API supports several connection methods. These include WebRTC for browser-based applications, WebSockets for server-side use, and SIP for integration with VoIP phone systems.

Yes, the OpenAI Realtime API supports function calling, which allows the AI agent to connect to external tools and data sources. Developers are responsible for building, connecting, and maintaining these integrations to enable specific tasks or information retrieval.

The OpenAI Realtime API is priced based on audio tokens for both input and output, which can make predicting costs challenging. For businesses with high-volume usage, this token-based model can lead to variable and potentially high monthly invoices.

The OpenAI Realtime API runs on natively multimodal models like GPT-4o, allowing it to process a mix of audio, images, and text. This means users can provide visual context, such as a screenshot, alongside their spoken questions for richer and more comprehensive interactions.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.