You're building something cool with AI. You’re solving a real problem, maybe even creating a tool that will change how your team works. Things are moving along, and then… you hit a wall. The dreaded "429: Too Many Requests" error. OpenAI Rate Limits are just a part of life when you're building at scale, but they can be a frustrating roadblock when you’re trying to create something reliable for your team or customers.

The good news is, they're completely manageable. This guide will walk you through what OpenAI’s Rate Limits are, why they’re there, and the practical steps you can take to work around them. And while you can build all the necessary plumbing yourself, you'll see how modern platforms are designed to handle this complexity for you, so you can get back to what you do best: building.

What are OpenAI Rate Limits and why do they matter?

Simply put, rate limits are caps on how many times you can call the OpenAI API in a given timeframe. Think of it as a speed limit for your app. These limits aren't there to arbitrarily slow you down; they actually serve a few important purposes.

According to OpenAI’s own documentation, they exist to:

- Prevent misuse: Capping requests helps stop bad actors from overwhelming the servers and causing problems for everyone.
- Ensure fair access: If one app could send a million requests a second, it would bog down the service for everyone else. Rate limits make sure everyone gets a fair shot.
- Manage the load: The demand for AI models is huge. Rate limits help OpenAI manage the immense traffic to their servers, keeping things stable for all users.

But when you do hit them, it hurts. It can lead to your application going down, a terrible user experience, and failed automations. If you’re using AI to power your customer support, a rate limit error could mean a customer's urgent question goes unanswered, which is the last thing anyone wants.

How OpenAI Rate Limits work

Working with OpenAI Rate Limits isn't as simple as watching a single number. The limits are measured in a couple of different ways, and you can hit any of them first. It’s a bit like a faucet with limits on both how fast the water can flow and how many times you can turn it on per minute.

Here are the two main metrics you need to get familiar with:

- RPM (Requests Per Minute): This is the total number of API calls you can make in a minute. It doesn't matter whether you’re asking for a one-word answer or a 1,000-word essay: each call to the API counts as one request.
- TPM (Tokens Per Minute): This is the total number of tokens your application can process in a minute. Tokens are small chunks of text (roughly four characters of English text on average), and they’re the currency you spend with large language models.

Here's the catch: TPM includes both your input (your prompt) and the output (the model's response). If you send a prompt with 1,000 tokens and get a 500-token response, you've just used 1,500 tokens from your limit.
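To see how either limit can be the one that stops you, here is a small back-of-the-envelope calculation. It is only a sketch: `max_requests_per_minute` is a hypothetical helper, and the limit values in the example are made-up placeholders, not OpenAI's actual numbers for any tier or model.

```python
def max_requests_per_minute(rpm_limit: int, tpm_limit: int,
                            prompt_tokens: int, output_tokens: int) -> int:
    """Estimate how many identical requests fit in one minute.

    Each request uses one unit of RPM and (prompt + output) tokens of TPM,
    so the effective ceiling is whichever limit runs out first.
    """
    tokens_per_request = prompt_tokens + output_tokens
    requests_allowed_by_tpm = tpm_limit // tokens_per_request
    return min(rpm_limit, requests_allowed_by_tpm)


# Hypothetical limits: 500 RPM and 200,000 TPM.
# Large requests (the 1,000-token prompt + 500-token response example):
print(max_requests_per_minute(500, 200_000, 1_000, 500))  # 133, TPM runs out first
# Small requests of 50 + 50 tokens:
print(max_requests_per_minute(500, 200_000, 50, 50))      # 500, RPM runs out first
```

With big prompts you exhaust tokens long before requests; with many tiny calls the request count is what bites.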

And here’s another detail that trips up a lot of developers: the "max_tokens" parameter you set in your request also counts toward your TPM limit, even if the model doesn't actually generate that many tokens. Setting this number way too high is a common way to burn through your TPM limit without realizing it.

Different models have different rate limits. A powerhouse model like GPT-4 will naturally have lower limits than a zippier, cheaper one. You can always see the specific limits for your account by heading over to the limits section in your OpenAI settings.

Understanding your usage tier and how to increase OpenAI Rate Limits

So, you need higher limits. How do you actually get them? The good news is that OpenAI has an automated system for this based on your usage history. As you use the API more and pay your invoices, you’ll automatically get bumped up to higher usage tiers, which come with bigger rate limits.

Here’s a rough breakdown of how the tiers work:

| Tier | Qualification (Paid History) | Typical Result |
|---|---|---|
| Free | $0 | Limited access |
| Tier 1 | $5+ paid | Increased RPM/TPM across most models |
| Tier 2 | $50+ paid & 7+ days since first successful payment | Further increases |
| Tier 3 | $100+ paid & 7+ days since first successful payment | Higher capacity for scaling |
| Tier 4 | $250+ paid & 14+ days since first successful payment | Production-level limits |
| Tier 5 | $1,000+ paid & 30+ days since first successful payment | Enterprise-level limits |
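The table boils down to one rule: you land in the highest tier whose payment threshold and waiting period you both meet. The sketch below encodes that rule for illustration only. `usage_tier` is a hypothetical helper (OpenAI assigns tiers automatically), and the thresholds are copied from the table above and may change, so treat the limits page in your account as the authoritative answer.

```python
# (tier name, minimum total paid in USD, minimum days since first payment),
# copied from the table above and checked from the highest tier down.
TIERS = [
    ("Tier 5", 1_000, 30),
    ("Tier 4", 250, 14),
    ("Tier 3", 100, 7),
    ("Tier 2", 50, 7),
    ("Tier 1", 5, 0),
]


def usage_tier(total_paid_usd: float, days_since_first_payment: int) -> str:
    """Return the highest tier whose payment and waiting-period rules are both met."""
    for name, min_paid, min_days in TIERS:
        if total_paid_usd >= min_paid and days_since_first_payment >= min_days:
            return name
    return "Free"


print(usage_tier(300, 10))  # Tier 3: enough paid for Tier 4, but not 14 days yet
print(usage_tier(0, 0))     # Free
```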

If you need a limit increase faster than the automated system provides, you can submit a request directly through your account. Just know that these requests are often prioritized for users who are already using a high percentage of their current quota.

Another path some developers take is the Azure OpenAI Service. It uses the same models but has a different way of handling quotas. This can give you more fine-grained control but also adds another layer of complexity to your setup.

Strategies for managing OpenAI Rate Limits

Alright, so what do you do when you see that "429" error pop up? Here are a few solid strategies for managing your API calls and keeping your application from falling over.

Implement retries with exponential backoff

When a request fails, your first instinct might be to just try again immediately. Don't. You can end up causing a "thundering herd" problem, where a stampede of retries hammers the API all at once, keeping you stuck in a rate-limited loop.

A much better way to handle this is with exponential backoff. The idea is pretty simple: when a request fails, you wait for a short, slightly randomized period before retrying. If it fails a second time, you double the waiting period, and so on. You keep doing this until the request goes through or you hit a max number of retries.
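Here is a minimal sketch of that retry loop, assuming your API client raises a distinguishable exception on a 429. `RateLimitError` below is a hypothetical stand-in defined for the example; recent versions of the official `openai` Python package expose their own `openai.RateLimitError` and already retry some failures by default (configurable via `max_retries`), so check its documentation before layering a loop like this on top. The `sleep` parameter only exists so the waits can be skipped or recorded in tests.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for the 429 error your API client raises."""


def call_with_backoff(request_fn, max_retries=5, base_delay=1.0,
                      max_delay=60.0, sleep=time.sleep):
    """Call request_fn, retrying on RateLimitError with exponential backoff.

    The wait doubles after each failure (base_delay, 2x, 4x, ...), capped at
    max_delay, and is scaled by a random factor (jitter) so that many clients
    retrying at once don't all hit the API at the same moment.
    """
    delay = base_delay
    for attempt in range(max_retries + 1):
        try:
            return request_fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            # Jitter: wait between 50% and 100% of the current delay.
            sleep(delay * random.uniform(0.5, 1.0))
            delay = min(delay * 2, max_delay)


# Usage: a fake request that is rate-limited twice, then succeeds.
attempts = {"n": 0}


def flaky_request():
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise RateLimitError("429: Too Many Requests")
    return "ok"


print(call_with_backoff(flaky_request, sleep=lambda seconds: None))  # ok
```

Capping the delay keeps a long outage from producing absurd waits, and re-raising after the last attempt lets the caller decide what the user sees instead of retrying forever.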

This strategy works so well because it helps your app gracefully recover from temporary traffic spikes without making the problem worse.

Optimize your token usage

Since TPM is often the first limit you’ll hit, it pays to be smart about your token use.

Batch your requests. If you have a lot of small, similar tasks, try bundling them into a single API call. For example, instead of sending 10 separate requests to summarize 10 customer comments, you could combine them into one. This helps you stay under your RPM limit, but just be aware that it will increase the token count for that single request.
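As a rough sketch of batching, the helpers below fold several comments into one numbered prompt and split the reply back apart. Both function names and the `<number>: <summary>` reply format are assumptions made for this example; models don't always follow a requested format exactly, so the parser leaves an empty string for any comment it can't match rather than failing.

```python
def build_batched_prompt(comments: list[str]) -> str:
    """Combine several short summarization tasks into a single prompt.

    Each comment is numbered so the answers can be matched back to inputs.
    """
    lines = [
        "Summarize each customer comment below in one sentence.",
        "Reply with one line per comment, in the form '<number>: <summary>'.",
        "",
    ]
    for i, comment in enumerate(comments, start=1):
        lines.append(f"{i}. {comment.strip()}")
    return "\n".join(lines)


def parse_batched_reply(reply: str, count: int) -> list[str]:
    """Split a '<number>: <summary>' reply back into one summary per comment."""
    summaries = [""] * count
    for line in reply.splitlines():
        number, sep, summary = line.partition(":")
        if sep and number.strip().isdigit():
            index = int(number.strip()) - 1
            if 0 <= index < count:
                summaries[index] = summary.strip()
    return summaries


comments = ["The app crashes on login.", "Love the new dashboard!"]
prompt = build_batched_prompt(comments)  # one request instead of two
reply = "1: Login crashes the app.\n2: Customer likes the dashboard."  # example reply
print(parse_batched_reply(reply, len(comments)))
# ['Login crashes the app.', 'Customer likes the dashboard.']
```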

Be realistic with "max_tokens". Always set the "max_tokens" parameter as close as you can to the actual length of the response you expect. Setting it way too high is like reserving a giant block of tokens you might not even use, which eats into your TPM limit for no reason.
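One way to find a realistic value is to look at how long your responses actually are. The sketch below is a hypothetical helper, not an OpenAI feature: it takes a high percentile of response lengths you have logged and adds some headroom. The 95th percentile, 20% margin and 16-token floor are arbitrary defaults. Responses longer than the cap get cut off (the API reports a `finish_reason` of `"length"`), so it's worth watching for that after you lower the value.

```python
def _ceil_div(a: int, b: int) -> int:
    """Integer ceiling of a / b for positive integers."""
    return -(-a // b)


def suggest_max_tokens(observed_output_tokens: list[int], percentile: int = 95,
                       headroom_percent: int = 20, floor: int = 16) -> int:
    """Suggest a max_tokens cap from response lengths you have already seen.

    Uses the nearest-rank percentile of past output lengths plus a safety
    margin, so most answers fit without reserving far more tokens than needed.
    """
    if not observed_output_tokens:
        raise ValueError("need at least one observed response length")
    ordered = sorted(observed_output_tokens)
    # Nearest-rank percentile: smallest value covering `percentile`% of samples.
    rank = max(1, _ceil_div(percentile * len(ordered), 100))
    typical_max = ordered[rank - 1]
    return max(floor, _ceil_div(typical_max * (100 + headroom_percent), 100))


lengths = [95, 110, 120, 130, 140, 150, 160, 170, 180, 900]
print(suggest_max_tokens(lengths, percentile=90))  # 216; the 900-token outlier is ignored
```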

Use a cache. If your application gets the same questions over and over, you can cache the answers. Instead of calling the API every time for a common query, you can just serve the saved response. It's faster for the user and saves you API costs and tokens.
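A minimal in-memory version of that idea looks like the sketch below. `ResponseCache` and `fake_api_call` are hypothetical names for illustration. It only matches prompts that are identical after trimming, lowercasing and collapsing whitespace, it never expires entries, and it lives in a single process; a production setup would typically use a shared store with an expiry time and avoid caching answers that depend on user-specific context.

```python
from typing import Callable


class ResponseCache:
    """Serve repeated prompts from memory instead of calling the API again."""

    def __init__(self, fetch: Callable[[str], str]):
        self._fetch = fetch              # function that actually calls the API
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivial variations share one entry.
        return " ".join(prompt.lower().split())

    def get(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        answer = self._fetch(prompt)     # only cache misses cost tokens
        self._store[key] = answer
        return answer


def fake_api_call(prompt: str) -> str:  # stand-in for a real API request
    return f"Answer to: {prompt}"


cache = ResponseCache(fake_api_call)
cache.get("How do I reset my password?")
cache.get("  how do I reset my   password? ")  # served from the cache
print(cache.hits, cache.misses)  # 1 1
```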

The hidden challenge of OpenAI Rate Limits: Scaling beyond the basics

Okay, so you’ve set up retries and you’re watching your tokens. You're all set, right? For a while, maybe. But as your application grows, you'll find that managing rate limits in a real production environment is about more than just a simple retry script.

You’ll start running into new, more complex problems, like:

- Building and maintaining custom logic for backoff, batching, and caching everywhere in your app.
- Trying to keep track of API usage across multiple keys, models, and different environments (like staging versus production).
- Having no central dashboard to see how your AI workflows are actually performing or to spot which ones are hitting limits.
- Guessing how your app will perform under a heavy load before you launch it to real customers.

This is usually the point where teams realize they need an AI integration platform. Instead of getting bogged down in infrastructure, you can use a tool that handles these operational headaches for you.

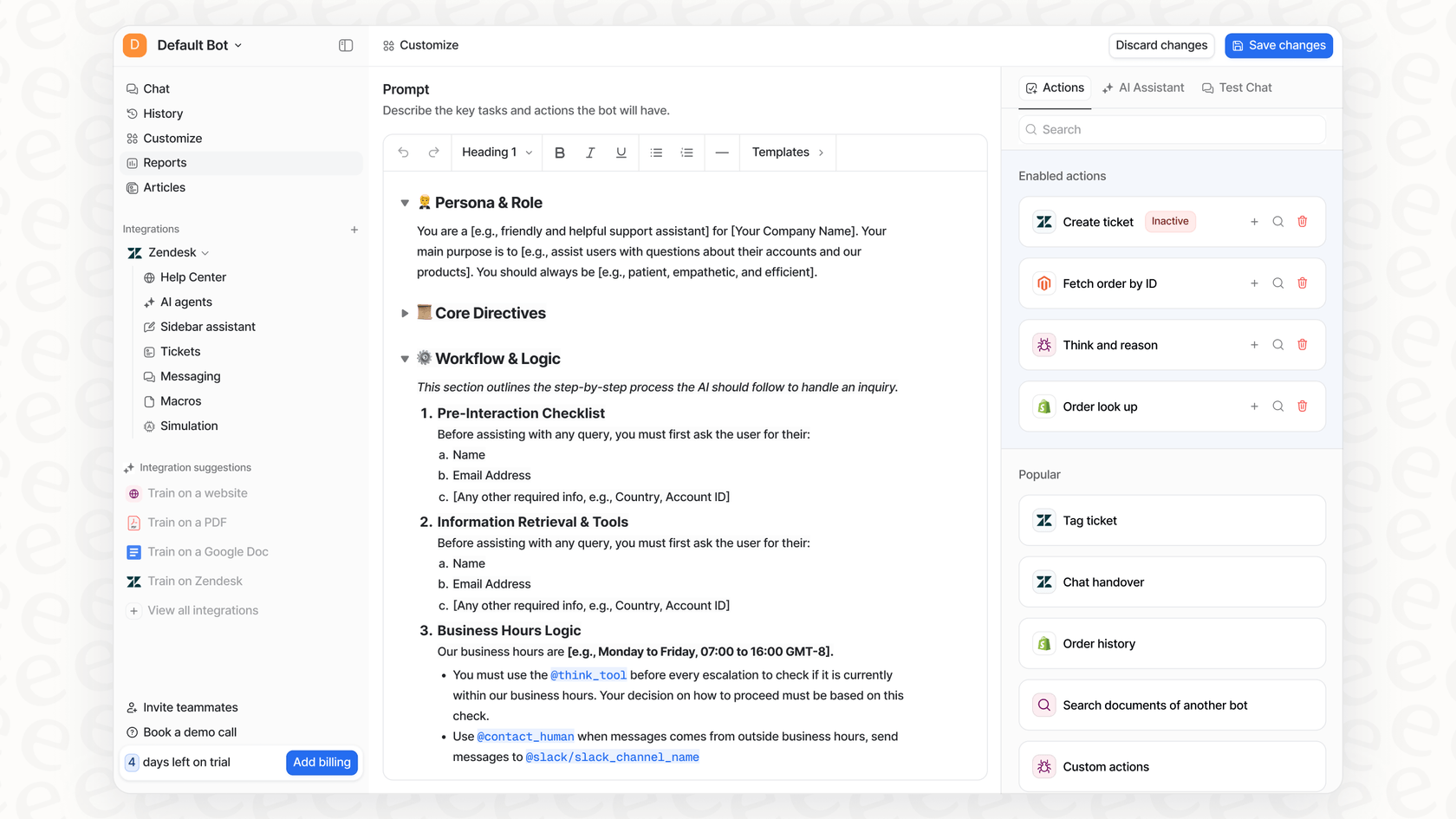

Platforms like eesel AI are designed to be an intelligent layer between your business tools and the AI models, managing the tricky parts of API calls, error handling, and scaling. Here’s how that helps:

- Go live in minutes, not months. With eesel AI, you can connect your helpdesk (like Zendesk or Freshdesk) and knowledge sources with just a click. All the gnarly API integration and rate limit logic is handled behind the scenes, so you can focus on what your AI should actually do.
- Test with confidence. eesel AI’s simulation mode lets you test your AI agent on thousands of your own historical tickets in a safe environment. You can see exactly how it will perform and forecast resolution rates before a single customer ever interacts with it. This takes the guesswork out of wondering if you’ll hit rate limits in production.
- Stay in control. Instead of writing low-level code to manage API calls, you manage high-level business rules. A simple dashboard lets you define exactly which tickets the AI should handle and what actions it can take, while eesel AI takes care of managing the API traffic efficiently.

Focus on your customers, not on OpenAI Rate Limits

OpenAI Rate Limits are a fundamental part of building with AI, and getting your head around them is important. You can definitely manage them on your own with techniques like exponential backoff and request batching, but this path often leads to a growing pile of technical chores that pull you away from what you should be focused on: building a great product.

The goal isn't to become an expert in managing API infrastructure; it's to solve real problems for your users. By using a platform that handles the complexities of scaling for you, you can stay focused on what really matters.

Ready to deploy powerful AI agents without worrying about rate limits and complex code? Try eesel AI for free and see how quickly you can get your support automation up and running.

Frequently asked questions

OpenAI Rate Limits are caps on how many API calls or tokens your application can process within a specific timeframe. They are crucial for preventing misuse, ensuring fair access to OpenAI's services for all users, and helping manage the overall server load. Hitting these limits can cause "429: Too Many Requests" errors, leading to application downtime and a poor user experience.

OpenAI Rate Limits are primarily measured in two ways: Requests Per Minute (RPM) and Tokens Per Minute (TPM). RPM counts the total number of API calls made, while TPM measures the total number of tokens processed, including both your input prompt and the model's generated response. Your application can hit either limit first.

Your OpenAI Rate Limits automatically increase as your account progresses through usage tiers, based on your paid API history and time since payment. For faster increases, you can submit a direct request through your OpenAI account. Alternatively, the Azure OpenAI Service offers different quota management options.

The most effective strategy for handling errors due to OpenAI Rate Limits is implementing retries with exponential backoff. This involves waiting a slightly randomized, increasing period before retrying a failed request, preventing your application from overwhelming the API during traffic spikes.

Yes, you can optimize usage by batching multiple small requests into a single API call, setting the "max_tokens" parameter realistically to avoid reserving unused tokens, and caching responses for frequently asked questions. These methods help conserve both RPM and TPM.

Yes, the "max_tokens" parameter directly affects your OpenAI Rate Limits, specifically your Tokens Per Minute (TPM). Even if the model doesn't generate that many tokens, the maximum value you set counts towards your TPM limit, so it's best to set it as close as possible to your expected response length.

Absolutely. Platforms like eesel AI act as an intelligent layer that automatically handles the complexities of API calls, including implementing retry logic, optimizing requests, and managing usage across various models. This allows you to focus on your application's core functionality rather than infrastructure challenges.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.