Trying to keep up with OpenAI's models can feel like a full-time job. Just when you’ve finally figured out GPT-4o, you hear whispers about a whole new family of models on the horizon. It’s one thing to know the names, but it’s another thing entirely to figure out which one is right for your project without accidentally racking up a massive bill.

If you’re nodding along, you’re in the right place. Think of this as your no-nonsense guide to the current OpenAI models list. We'll walk through what each model does, make sense of the (often confusing) pricing, and help you figure out what’s actually best for your team.

What are OpenAI models?

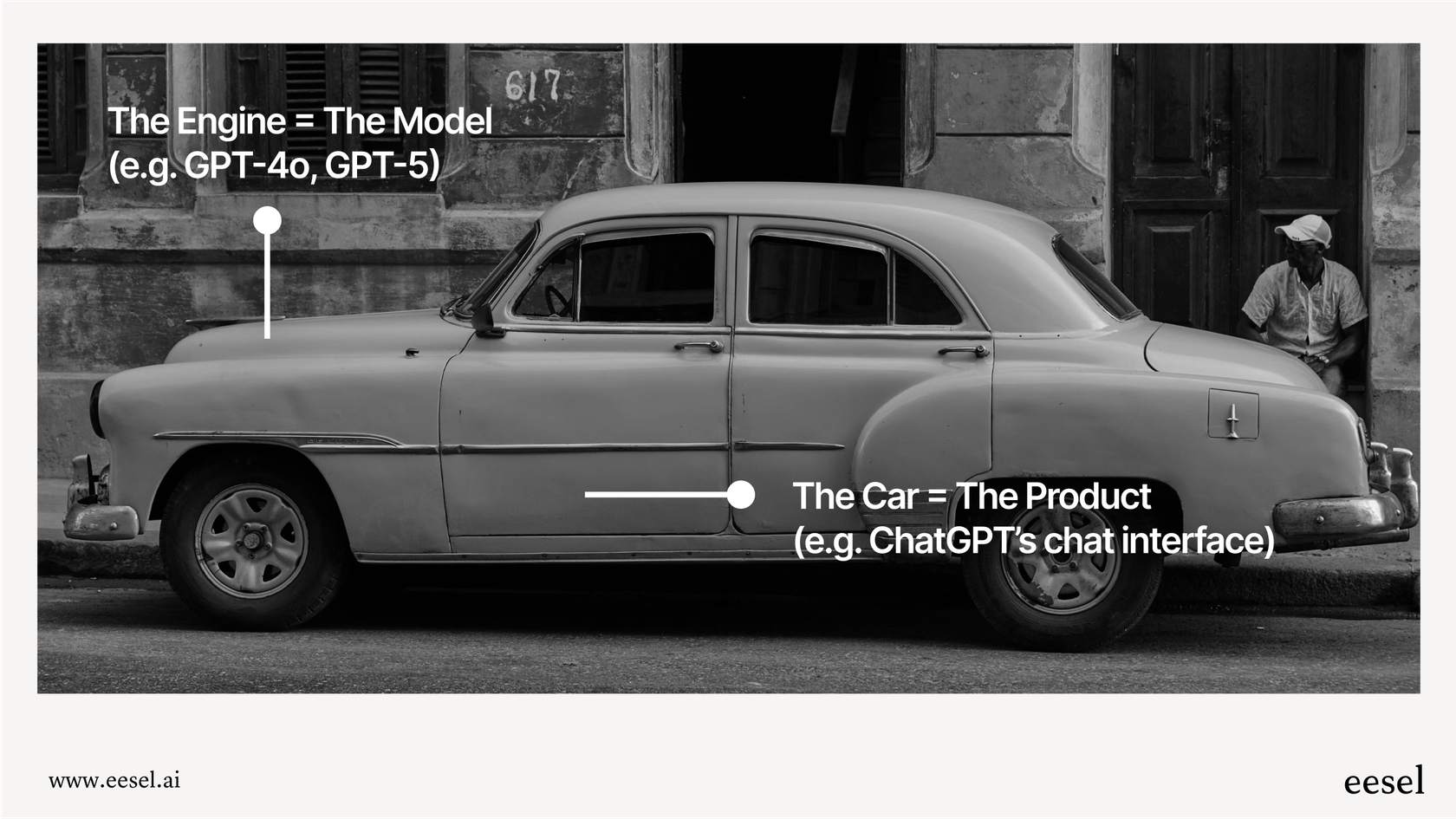

At their heart, most OpenAI models are a type of AI called a large language model (LLM). You can picture one as a super-smart engine that has been trained on a mind-boggling amount of text and data. This training allows it to understand what we say, generate human-like text, and hold a back-and-forth conversation with us.

But they're not all the same. OpenAI groups them into a few different buckets, each built for different kinds of jobs:

- Reasoning models: These are the heavy lifters. They’re built for complex, multi-step problem-solving, like writing and debugging code or digging into dense scientific problems.
- General-purpose models: These are your versatile all-rounders. They can handle a huge range of tasks involving text and images, from whipping up marketing copy to analyzing customer feedback.
- Specialized models: These models are fine-tuned for one specific task, and they do it incredibly well. Think generating images, creating video clips, or transcribing audio files with high accuracy.
- Open-weight models: For the tech-savvy folks, these are models you can actually download, customize, and run on your own computers. This gives you total control over how they work.

A breakdown of the current OpenAI models

The lineup is always evolving, but let's look at the main players you can work with through OpenAI’s API right now.

The GPT-5 family: For advanced reasoning and logic

This is OpenAI's top-shelf series, designed for tasks that need some serious brainpower, coding skills, and technical analysis. If you’re tackling really tricky problems, this is your starting point.

- GPT-5 & GPT-5 pro: These are OpenAI’s most capable models, and GPT-5 pro is by far the priciest option in the lineup. They're best for demanding, high-stakes work where accuracy matters most, like developing complex software or conducting deep research.
- GPT-5 mini: This one hits a sweet spot, giving you powerful reasoning capabilities at a much lower cost and a faster speed. It’s a fantastic choice for building smart internal tools, analyzing data, or powering chatbots that need to understand subtle conversations.
- GPT-5 nano: As the fastest and most affordable model in the GPT-5 family, GPT-5 nano is built for tasks you need to do over and over again. Think summarizing thousands of documents, classifying content, or running simple, logic-based workflows.

The GPT-4.1 family: Powerful and versatile

Think of this family as the default choice for most general business tasks. These models are more than capable of handling a huge variety of jobs and are often the perfect fit for anything that doesn't require the intense, step-by-step logic of the GPT-5 series.

- GPT-4.1: This is OpenAI’s most capable non-reasoning model, a true Swiss Army knife. It’s excellent at analyzing complex text, creating high-quality content, and even understanding what’s going on in an image.
- GPT-4.1 mini & nano: These are the workhorses that won’t break the bank. They offer a great mix of power, speed, and affordability. For a lot of businesses just getting started with AI, GPT-4.1 mini is the ideal place to begin, delivering solid results without the premium price.

Specialized and multimodal models

Sometimes you just need a tool that’s a master of one trade. These models are built for specific types of media that go beyond just text.

- GPT-4o & GPT-4o mini: While the GPT-4.1 family has largely taken over for text-based tasks, these older models still have one unique trick up their sleeve in the API: they can process and generate audio. This makes them the go-to for building voice-based apps, like real-time voice assistants or transcription tools.
- GPT Image 1 & Sora: These are OpenAI’s wizards for visual content. GPT Image 1 can create incredibly detailed images from a simple text description, while Sora generates high-quality video clips. At the time of writing, Sora isn't available for developers to use through the API.
- Whisper: This is a dedicated and super-affordable model with one job: transcribing and translating audio. If you need to turn speech into text, it’s an amazingly efficient and accurate choice.

Open-weight models

For teams that want to get their hands dirty and have full control, OpenAI offers open-weight models. This means you can download them, fine-tune them on your own private data, and run them on your own servers.

- gpt-oss-120b & gpt-oss-20b: Released with a friendly Apache 2.0 license, these models are for organizations that have the technical chops to build and run their own AI setup. They offer the ultimate flexibility for very specific or sensitive use cases.

How to choose the right OpenAI model for your business

Picking a model isn't a one-and-done decision. It's a constant balancing act between performance, cost, speed, and the hidden work of keeping everything running smoothly.

The classic tug-of-war: Power vs. price

The most powerful model isn't always the right answer. Using GPT-5 pro to summarize your internal meeting notes is like using a sledgehammer to crack a nut: it's expensive overkill. On the flip side, asking GPT-4.1 nano to analyze a complex legal document might not give you the detailed accuracy you need.
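One common way to act on this is to start with the cheapest model that plausibly fits a task and move up a tier only when the answer falls short. The sketch below is a minimal illustration under stated assumptions: the four `Complexity` tiers and their mapping onto the GPT-5 family are hypothetical, loosely following this article's descriptions, and deciding which tier a task belongs to (or when an answer is "not good enough" to escalate) is left to you.

```python
from enum import Enum
from typing import Optional


class Complexity(Enum):
    """Hypothetical task tiers, loosely based on the model descriptions above."""
    SIMPLE = 1    # e.g. summarizing meeting notes, classifying content
    MODERATE = 2  # e.g. internal tools, chatbots with nuanced conversations
    COMPLEX = 3   # e.g. multi-step coding, dense technical or legal analysis
    CRITICAL = 4  # e.g. high-stakes research where the extra cost is justified


# Cheapest-first ladder of GPT-5 family model IDs (an assumed ordering).
LADDER = ["gpt-5-nano", "gpt-5-mini", "gpt-5", "gpt-5-pro"]


def choose_model(complexity: Complexity) -> str:
    """Pick the starting model for a task tier."""
    return LADDER[complexity.value - 1]


def escalate(model: str) -> Optional[str]:
    """Return the next, more capable model, or None if already at the top."""
    index = LADDER.index(model)
    return LADDER[index + 1] if index + 1 < len(LADDER) else None


print(choose_model(Complexity.SIMPLE))  # gpt-5-nano
print(escalate("gpt-5-mini"))           # gpt-5
```

Keeping the ladder as a single ordered list means "use something stronger" is always one step up, rather than a jump straight to the most expensive option.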

Speed considerations: Don't make your users wait

For things that happen in real-time, like a customer-facing chatbot, speed is everything. A slow, lagging response is a surefire way to frustrate people. The "nano" and "mini" versions of these models are specifically built for these situations where a quick reply is essential.

The hidden cost of managing OpenAI models

Here’s the part that often gets overlooked: choosing, implementing, and monitoring these models isn't a "set it and forget it" project. The AI world moves incredibly fast. The best model for your needs today might be replaced by something better (and cheaper) tomorrow, forcing your developers to constantly tweak and maintain your setup.
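One way to soften this maintenance burden is to keep model names out of application code and resolve them from configuration, so swapping in a newer model is a config change rather than a code change. The sketch below assumes hypothetical task names and a hypothetical `MODEL_<TASK>` environment-variable convention; neither comes from OpenAI.

```python
import os

# Hypothetical task names mapped to default model IDs.
DEFAULT_MODELS = {
    "summarize": "gpt-5-nano",
    "support_chat": "gpt-5-mini",
    "code_review": "gpt-5",
}


def model_for(task: str) -> str:
    """Return the model for a task, letting an environment variable override the default.

    The MODEL_<TASK> variable name (e.g. MODEL_SUMMARIZE) is a convention assumed for this sketch.
    """
    if task not in DEFAULT_MODELS:
        raise KeyError(f"No model configured for task {task!r}")
    return os.environ.get(f"MODEL_{task.upper()}", DEFAULT_MODELS[task])


# Swap the summarization model without touching the code that calls model_for().
os.environ["MODEL_SUMMARIZE"] = "gpt-4.1-nano"
print(model_for("summarize"))     # gpt-4.1-nano
print(model_for("support_chat"))  # gpt-5-mini, unless MODEL_SUPPORT_CHAT is already set
```

The rest of the codebase only ever asks for "the summarization model", so when a better or cheaper option ships, one setting changes instead of every call site.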

Understanding OpenAI API pricing

This is where things can get a bit hairy. OpenAI’s pricing is based on "tokens," which are basically pieces of words. You pay for the number of tokens in your input (the question you ask the AI) and the number of tokens in the output (the AI's answer).
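To get a feel for what a token is, OpenAI's documentation offers a rule of thumb: one token is roughly four characters of common English text. The sketch below turns that rule into a quick, deliberately rough estimator. It is only an approximation; for exact counts you would use a real tokenizer such as OpenAI's open-source `tiktoken` library, and non-English text or code can tokenize quite differently.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token count using the ~4 characters per token rule of thumb.

    Rounds up so budget estimates err on the high side.
    """
    if not text:
        return 0
    return math.ceil(len(text) / chars_per_token)


prompt = "Summarize this customer ticket in two sentences."
print(len(prompt), estimate_tokens(prompt))  # 48 12
```

Remember that the model's answer is billed too, so a full estimate needs a guess at the output length as well as the prompt.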

Here’s a quick look at the pricing for the main models:

| Model | Input price (USD per 1M tokens) | Output price (USD per 1M tokens) | Best for |
|---|---|---|---|
| GPT-5 pro | $15.00 | $120.00 | Advanced, high-stakes reasoning |
| GPT-5 | $1.25 | $10.00 | Complex coding and logic tasks |
| GPT-5 mini | $0.25 | $2.00 | Affordable reasoning and chatbots |
| GPT-5 nano | $0.05 | $0.40 | Fast, low-cost summarization |
| GPT-4.1 | $2.00 | $8.00 | General high-quality text/image tasks |
| GPT-4.1 mini | $0.40 | $1.60 | Balanced performance and cost |
| GPT-4.1 nano | $0.10 | $0.40 | Speed- and cost-sensitive tasks |
| GPT-4o (text) | $2.50 | $10.00 | Multimodal tasks (legacy) |
| GPT-4o mini (text) | $0.15 | $0.60 | Budget multimodal tasks (legacy) |

Data compiled from OpenAI's API and pricing information.
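To see how these rates play out, the cost of a single request is input tokens times the input rate plus output tokens times the output rate, divided by one million. The sketch below applies that arithmetic to a subset of the prices in the table; the dictionary keys are the API model IDs, and the prices should be re-checked against OpenAI's pricing page, since they change over time.

```python
# USD per 1M tokens as (input, output), taken from the pricing table.
# Prices change, so check OpenAI's pricing page before relying on them.
PRICES = {
    "gpt-5-pro": (15.00, 120.00),
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
    "gpt-5-nano": (0.05, 0.40),
    "gpt-4.1": (2.00, 8.00),
    "gpt-4.1-mini": (0.40, 1.60),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request: each side is billed at its own per-1M-token rate."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Compare one request with a 2,000-token prompt and a 500-token answer.
for model in PRICES:
    print(f"{model:>13}: ${request_cost(model, 2_000, 500):.4f}")
```

With these numbers, the same request costs $0.0015 on GPT-5 mini and $0.09 on GPT-5 pro, a 60x difference. Note also that output tokens are priced several times higher than input tokens, so long answers drive cost more than long prompts.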

The real challenge here is that this system can lead to unpredictable costs. A sudden rush of customer questions or a new team member using the tool more than expected can lead to a surprisingly high bill at the end of the month. This makes it tough to budget properly.
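A quick projection makes the volatility concrete. The sketch below estimates a month's spend from average request volume and token counts; the workload (1,000 requests a day, 2,000 input and 500 output tokens each) is a hypothetical example, and the rates are GPT-5 mini's from the table.

```python
def monthly_cost(
    requests_per_day: int,
    avg_input_tokens: int,
    avg_output_tokens: int,
    input_price: float,
    output_price: float,
    days: int = 30,
) -> float:
    """Projected monthly spend in USD; prices are per 1M tokens."""
    per_request = (avg_input_tokens * input_price + avg_output_tokens * output_price) / 1_000_000
    return requests_per_day * days * per_request


# Hypothetical workload on GPT-5 mini ($0.25 input / $2.00 output per 1M tokens).
baseline = monthly_cost(1_000, 2_000, 500, 0.25, 2.00)
spike = monthly_cost(3_000, 2_000, 500, 0.25, 2.00)  # a busy month with 3x the traffic
print(f"Baseline: ${baseline:.2f}/month, 3x spike: ${spike:.2f}/month")
```

Because the bill scales linearly with volume, a month with triple the traffic costs triple: $45 becomes $135 in this example, even though nothing about the setup changed.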

This token-based pricing is flexible, but it can be volatile. For businesses that need predictable spending, platforms built on top of OpenAI are a great alternative. eesel AI, for instance, has clear, subscription-based plans. You pay one flat monthly fee and never get charged per ticket or resolution. This lines up your costs with your budget, not your customer support volume.

The smarter way: Focusing on outcomes, not models

For most businesses, the end goal isn't to become an expert on every single OpenAI model. The real goal is to solve a business problem, whether that's answering customer questions faster, automating tedious tasks, or giving your team instant access to the information they need.

Instead of getting tangled up in the details of APIs and models, it might make more sense to use an AI integration platform. These tools act as an intelligent layer on top of all this complex technology. They connect directly to your business data and workflows, and then use the right AI model behind the scenes to get the job done.

This is exactly where a tool like eesel AI comes in. It’s built from the ground up for support and internal knowledge teams, giving you a complete solution, not just a key to a raw AI model.

- Get started in minutes: It plugs into your existing helpdesk like Zendesk or Freshdesk with a single click. There's no heavy lifting with APIs or a long setup process.
- Unify your knowledge: It doesn't just use generic information. It learns from your actual past support tickets, help articles in Confluence, and internal notes from Google Docs to provide answers that are specific to how your business works.
- Test with confidence: Before you even show it to customers, you can run a simulation to see how the AI would have performed on thousands of your past tickets. This gives you a risk-free way to see how well it works and forecast its impact, a level of certainty you just can't get by playing with an API.

Move beyond the OpenAI models list

OpenAI has built an incredibly powerful suite of tools, but it's also complex and constantly changing. Getting a handle on the OpenAI models list is a great first step, but the real win for your business comes from putting this technology to work on real, meaningful problems.

Instead of getting stuck managing APIs, comparing models, and worrying about unpredictable bills, consider a platform that handles all that for you. When you focus on the outcome you want, you can get all the benefits of AI without the headaches.

Ready to see what AI can do for your support team, minus the technical hassle? Try eesel AI for free and you can have your first AI support agent running in minutes.

Frequently asked questions

OpenAI generally categorizes its models into reasoning, general-purpose, specialized, and open-weight models. Each category is designed for different types of tasks, from complex problem-solving to focused media generation.

You should balance performance requirements with cost and speed. For simple, repetitive tasks, a "nano" or "mini" model is often sufficient and more cost-effective, while complex problems might require a "GPT-5" series model.

Pricing is based on "tokens," which are pieces of words. You pay for both the input tokens you send to the AI and the output tokens it generates in response. This system can lead to variable costs depending on usage.

Yes, models like GPT Image 1 and Sora are designed for visual content, while Whisper is dedicated to audio transcription and translation. The GPT-4o family can also process and generate audio.

Open-weight models (like gpt-oss-120b) can be downloaded, customized, and run on your own servers. They are ideal for organizations with the technical expertise to manage their own AI setup for ultimate control, privacy, or highly specific use cases.

Not always. Using the most powerful models for simple tasks can be expensive overkill. It's crucial to match the model's capabilities to the task's complexity to optimize for both performance and cost-efficiency.

Rather than managing individual models, consider using an AI integration platform like eesel AI. These platforms abstract away the underlying model complexities, automatically using the best tool for the job and offering predictable pricing.


Article by Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.