We all know large language models (LLMs) can talk a big game. They can churn out emails, answer trivia, and even whip up a sonnet on command. But what about actually doing things? How do we get them to stop just talking and start interacting with the real world, like looking up an order, updating a support ticket, or querying a database?

That's the job of OpenAI Function Calling (which now sits under the broader "Tools" umbrella in OpenAI's API). It’s the piece of the puzzle that connects an AI's conversational skills to real-world action. It's what turns a simple Q&A bot into an agent that can actually work with your business software.

In this guide, we’ll walk through what function calling is, how it works, and some of the hidden complexities you'll run into if you try to build with it from scratch. More importantly, we'll look at how modern platforms can handle the heavy lifting, letting you build powerful AI agents without needing a dedicated engineering team on standby.

What is OpenAI Function Calling?

Put simply, OpenAI Function Calling is a feature that lets you tell an LLM about the tools you have available. You describe your functions to the model, and it can then analyze a user's request and ask to use one of them. It does this by spitting out a neat JSON object with all the details your app needs to run the function.

A common mix-up is thinking the model runs the code itself. It doesn't. It just tells your application what code it should run and what information to use.

Think of the LLM as a really smart assistant who knows your entire toolbox inside and out. When a task comes up, they can't use the tools themselves, but they can hand you the right one (the function name) and tell you exactly how to use it (the arguments). Your application is the one that actually gets its hands dirty and does the work.

In the API itself, OpenAI now groups functions under the broader "tools" parameter (the older "functions" parameter is deprecated), but most people still use the term "function calling" to describe the concept.
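To make "describing your functions" a bit more concrete, here's a rough sketch of what one tool description could look like in the shape the Chat Completions API expects for its "tools" parameter. The function name, description, and "order_id" parameter are made up for illustration; nothing here actually calls the API.

```python
import json

# Hypothetical tool description for an order-lookup function.
# The name, description, and parameter below are illustrative assumptions.
get_order_status_tool = {
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the current shipping status of a customer's order.",
        "parameters": {  # a JSON Schema object describing the arguments
            "type": "object",
            "properties": {
                "order_id": {
                    "type": "string",
                    "description": "The order number, without the leading '#'.",
                },
            },
            "required": ["order_id"],
        },
    },
}

# This list is what you would pass as the `tools` argument of a chat request.
tools = [get_order_status_tool]
print(json.dumps(tools, indent=2))
```

Notice that the description is plain English. That's the text the model reads when deciding whether this tool fits a request.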

How does the OpenAI Function Calling flow work?

Getting an AI to use a tool isn't a single API call; it’s more of a back-and-forth conversation between your app and the model. It’s a little dance that follows a pretty predictable pattern.

Here’s a step-by-step look at how it plays out:

- You show the model your tools. First, you give the model a list of the functions it can use. This includes each function's name, a clear description of what it does, and the specific parameters it needs to work (all laid out in a format called JSON Schema). The quality of your description is everything: it's how the model figures out when to suggest a tool.

- The user asks for something. A user types a request in plain English, like, "What's the status of my order, #12345?"

- The model picks a tool. The model reads the request. If it spots a match with one of your function descriptions, it responds with a special message asking your application to call that function. It also provides the arguments it pulled from the user’s prompt. In simplified form, that looks like {"function": "get_order_status", "arguments": {"order_id": "12345"}}.

- Your app does the work. Your code grabs this structured response and runs the actual "get_order_status" function. It might connect to your database or an external API to get the info, which in this case is "Shipped."

- You pass the result back to the model. Finally, you make one last API call to the model, this time including the function's result in the chat history. Now the model has the final piece of the puzzle and can give the user a friendly, natural answer, like, "Your order #12345 has been shipped."

This little round trip allows the AI to blend its conversational skills with real-time data and actions, making it a whole lot more useful.
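Here's a minimal sketch of that round trip from your application's side. To keep it runnable without an API key, the model's reply is hard-coded in the shape a Chat Completions tool call takes (the arguments arrive as a JSON string); the "get_order_status" implementation, its fake data, and the "call_1" ID are all assumptions for illustration.

```python
import json

def get_order_status(order_id: str) -> dict:
    """Stand-in for a real database or API lookup (hypothetical data)."""
    fake_orders = {"12345": "Shipped"}
    return {"order_id": order_id, "status": fake_orders.get(order_id, "Unknown")}

# Map the function names the model may ask for to real Python callables.
AVAILABLE_FUNCTIONS = {"get_order_status": get_order_status}

# The user asks for something.
messages = [{"role": "user", "content": "What's the status of my order, #12345?"}]

# The model picks a tool. This is a hard-coded stand-in for the API response.
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {
            "name": "get_order_status",
            "arguments": '{"order_id": "12345"}',  # a JSON string, not a dict
        },
    }],
}
messages.append(assistant_message)

# Your app does the work, then adds each result to the chat history.
for call in assistant_message["tool_calls"]:
    func = AVAILABLE_FUNCTIONS[call["function"]["name"]]
    args = json.loads(call["function"]["arguments"])
    result = func(**args)
    messages.append({
        "role": "tool",
        "tool_call_id": call["id"],  # ties the result to the request
        "content": json.dumps(result),
    })

print(messages[-1]["content"])
```

In a real app, the updated "messages" list would go into the next API call so the model can write the final, friendly answer.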

Common use cases for OpenAI Function Calling

Function calling is what takes a chatbot from a simple novelty to a powerful agent that can handle real business workflows. Here are a few of the most common ways people are putting it to work.

Plugging into your live data

This is the most straightforward use case. It lets an AI access real-time, private, or dynamic information that wasn't in its original training data. An AI can become an expert on your specific business operations almost instantly.

For example, a customer support AI could look up an order status in a Shopify database, check flight availability through an airline's API, or pull up account details from your internal CRM.

Getting things done in other apps

Beyond just fetching information, function calling lets an AI trigger workflows and make changes in other systems. It can become an active part of your business processes.

For instance, an IT support bot could get a request and automatically create a new ticket in Jira Service Management. Or, a sales assistant could take details from a conversation and add a new lead to your CRM without anyone having to do manual data entry.

Turning messy text into clean data

Sometimes, the goal isn't to answer a question but to reliably pull out and format information. Function calling is great for this because you can define a function's parameters as a blueprint you want the data to fit into.

A good example is processing an inbound support email. The AI can read a wall of text, pull out the user's name, company, issue type, and urgency level, and format it all into a JSON object that matches your schema. That object can then be used to create a new, correctly categorized ticket in your help desk.
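As a sketch of what such a "blueprint" might look like, the tool below describes a ticket with a few fields and restricts two of them to fixed values using JSON Schema's "enum". The field names, allowed values, and the sample arguments are assumptions for illustration, not a real help desk's format.

```python
import json

# Hypothetical blueprint for a support ticket. The function is never really
# "called" for its side effects here; its parameters just define the shape
# of the data we want the model to fill in.
create_ticket_tool = {
    "type": "function",
    "function": {
        "name": "create_ticket",
        "description": "Create a help desk ticket from an inbound support email.",
        "parameters": {
            "type": "object",
            "properties": {
                "name": {"type": "string"},
                "company": {"type": "string"},
                "issue_type": {
                    "type": "string",
                    "enum": ["billing", "bug", "how_to", "other"],
                },
                "urgency": {"type": "string", "enum": ["low", "medium", "high"]},
            },
            "required": ["name", "issue_type", "urgency"],
        },
    },
}

# Example of the arguments string a model might return for one email.
example_arguments = (
    '{"name": "Dana", "company": "Acme", '
    '"issue_type": "billing", "urgency": "high"}'
)

ticket = json.loads(example_arguments)
print(ticket["issue_type"], ticket["urgency"])
```

The "enum" lists are what keep categories consistent: instead of free text like "pretty urgent," the model is steered toward one of the values you defined.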

The hidden challenges of building with OpenAI Function Calling

Going from a cool demo to a production-ready application that uses function calling is a pretty big leap. Building directly with the API is powerful, but it comes with some serious engineering headaches that many teams don't see coming.

Orchestration challenges

What happens when a task needs multiple steps? To find a customer's order, you might first need to find their customer ID using their email, and then use that ID to look up their orders. The model only proposes the next call; it doesn't execute the sequence for you. You have to build and maintain a loop, or a full "state machine," in your own code to manage it.
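A bare-bones version of that orchestration code might look like the loop below: keep asking the model what to do next, run whatever it requests, and stop when it answers in plain text or a step limit is hit. The "scripted_model" function is a stand-in for the real API call so the sketch runs offline, and the two lookup functions and their data are hypothetical.

```python
import json

# Hypothetical back-end lookups; a real app would hit a database or an API.
def find_customer_id(email: str) -> dict:
    return {"customer_id": {"dana@example.com": "C-42"}.get(email)}

def list_orders(customer_id: str) -> dict:
    return {"orders": {"C-42": ["12345"]}.get(customer_id, [])}

FUNCTIONS = {"find_customer_id": find_customer_id, "list_orders": list_orders}

def tool_call(call_id: str, name: str, args: dict) -> dict:
    """Build an assistant message shaped like a Chat Completions tool call."""
    return {"role": "assistant", "content": None, "tool_calls": [
        {"id": call_id, "type": "function",
         "function": {"name": name, "arguments": json.dumps(args)}}]}

def scripted_model(messages: list) -> dict:
    """Stand-in for the real API: picks the next step from the history."""
    results = [json.loads(m["content"]) for m in messages if m["role"] == "tool"]
    if len(results) == 0:
        return tool_call("call_1", "find_customer_id", {"email": "dana@example.com"})
    if len(results) == 1:
        return tool_call("call_2", "list_orders",
                         {"customer_id": results[0]["customer_id"]})
    orders = ", ".join(results[1]["orders"])
    return {"role": "assistant", "content": f"I found these orders: {orders}."}

def run_agent(messages: list, model, max_steps: int = 5) -> str:
    """Loop until the model replies with text instead of a tool call."""
    for _ in range(max_steps):
        reply = model(messages)
        messages.append(reply)
        if not reply.get("tool_calls"):
            return reply["content"]
        for call in reply["tool_calls"]:
            func = FUNCTIONS[call["function"]["name"]]
            result = func(**json.loads(call["function"]["arguments"]))
            messages.append({"role": "tool", "tool_call_id": call["id"],
                             "content": json.dumps(result)})
    raise RuntimeError("No final answer within the step limit")

history = [{"role": "user", "content": "Show the orders for dana@example.com"}]
answer = run_agent(history, scripted_model)
print(answer)
```

The step limit is a small but important design choice: without it, a model that keeps requesting tools could keep your loop (and your API bill) running indefinitely.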

Reliability and error handling

Models don't always act the way you expect. They can sometimes "hallucinate" arguments that don't exist, call the wrong function, or just fail to call one when it's obviously needed. This means your team has to write a ton of validation, error-checking, and retry logic to make the system dependable.

Some developers even resort to practically yelling at the model in their system messages, writing things like "DO NOT ASSUME VALUES FOR PARAMETERS" in all caps, just to get it to behave. That’s hardly a scalable way to build a production system.
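Rather than leaning on prompt wording alone, one option is to check every requested call in code before running anything. The sketch below is one possible shape for that check, using only the standard library; the "KNOWN_TOOLS" registry and its format are assumptions for illustration.

```python
import json

# Hypothetical registry: function name -> allowed argument names.
KNOWN_TOOLS = {
    "get_order_status": {"required": {"order_id"}, "optional": set()},
}

def validate_tool_call(name: str, arguments_json: str):
    """Return (args, None) if the call looks usable, else (None, error)."""
    spec = KNOWN_TOOLS.get(name)
    if spec is None:
        return None, f"Unknown function: {name}"
    try:
        args = json.loads(arguments_json)
    except json.JSONDecodeError as exc:
        return None, f"Arguments are not valid JSON: {exc.msg}"
    if not isinstance(args, dict):
        return None, "Arguments must be a JSON object"
    missing = spec["required"] - set(args)
    if missing:
        return None, f"Missing arguments: {sorted(missing)}"
    unexpected = set(args) - spec["required"] - spec["optional"]
    if unexpected:
        return None, f"Unexpected arguments: {sorted(unexpected)}"
    return args, None

# A well-formed call passes; a made-up function or argument is rejected.
print(validate_tool_call("get_order_status", '{"order_id": "12345"}'))
print(validate_tool_call("delete_everything", "{}"))
print(validate_tool_call("get_order_status", '{"order_id": "1", "vip": true}'))
```

When a check fails, a common pattern is to send the error text back to the model as the function's result so it can correct itself, with a cap on retries so it can't loop forever.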

Chaining functions: A known weak spot

Even with the best prompts, models often struggle to plan and execute a task that requires several different function calls in a specific order. They're pretty good at picking the next best tool for a single step but can get lost in a more complex workflow. Again, this means you have to write custom, often complicated, code to manage the flow and guide the model from one step to the next.

Development and maintenance can be a real drag

Defining function schemas in JSON is tedious work and it's easy to make a syntax error. Every time you want to add a new tool or update an old one, you have to dive back into the code. Keeping this code in sync with your actual tools, managing different versions, and just keeping up with frequent changes to the OpenAI API becomes a constant drain on engineering time.
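One way teams reduce the sync problem is to generate the schema from the function itself instead of writing JSON by hand. The sketch below does this with Python's standard "inspect" and "typing" modules. The "tool_from_function" helper and its type mapping are assumptions for illustration, and it only covers a handful of simple types.

```python
import inspect
from typing import get_type_hints

# Minimal mapping from Python annotations to JSON Schema types. Real code
# would also need to handle lists, enums, nested objects, and so on.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def tool_from_function(func) -> dict:
    """Build a tool description from a function's signature and docstring."""
    hints = get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        # Fall back to "string" when a parameter has no known annotation.
        properties[name] = {"type": JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value means it's required
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": inspect.getdoc(func) or "",
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }

def get_order_status(order_id: str, include_history: bool = False) -> dict:
    """Look up the current shipping status of an order."""
    return {"order_id": order_id, "status": "Shipped"}

tool = tool_from_function(get_order_status)
print(tool["function"]["parameters"])
```

With this approach, renaming a parameter or adding a new one in the Python function updates the schema automatically, so there's one less place for the two to drift apart.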

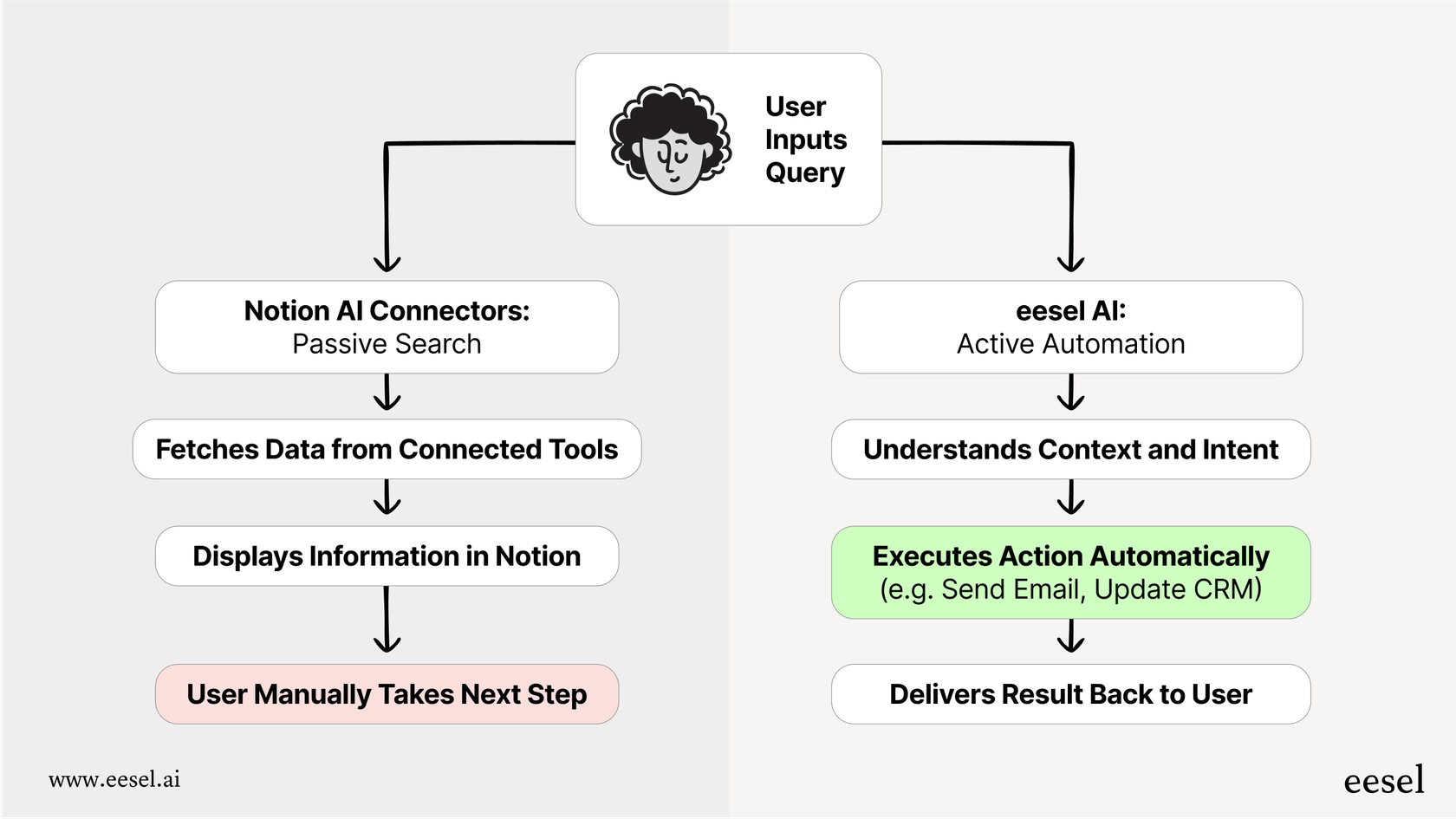

A simpler way to build AI agents

The DIY approach to function calling is powerful, but it isn't practical for most support and IT teams who need reliable solutions without a dedicated engineering crew. This is where platforms like eesel AI can make a huge difference. eesel AI handles all the messy, behind-the-scenes work of function calling through an intuitive interface, turning a difficult coding challenge into a simple workflow.

From complex code to a no-code workflow engine

Instead of writing Python scripts and state machines to manage function calls, eesel AI gives you a visual workflow builder. You can set up rules, escalation paths, and sequences of actions without writing a line of code. This gives you total control over your AI's behavior, letting you design sophisticated workflows that run reliably every single time. You decide exactly which tickets the AI should handle and what it should do, step by step.

Pre-built actions and easy custom connections

eesel AI comes with a library of ready-to-use actions for popular help desks like Zendesk and Freshdesk. Common tasks like "tag ticket," "add internal note," or "close ticket" are just a click away.

Need to connect to a custom tool or an internal API? eesel AI makes that simple, too. You can easily set up custom actions to look up information or trigger workflows in any external system, taking care of all the tedious JSON schema creation for you. This is how teams can go live in minutes, not months.

Test your implementation with confidence before you launch

One of the biggest worries with the DIY approach is the uncertainty. Will the agent actually work as expected on real customer issues? eesel AI solves this with its simulation mode. Instead of deploying code and crossing your fingers, you can run your AI agent on thousands of your past tickets in a safe environment. You can see exactly how it would have responded, what actions it would have taken, and what your automation rate would be. This lets you fine-tune everything and test with confidence before it ever interacts with a live customer.

Go beyond conversation and start doing

OpenAI Function Calling is the engine that lets AI do real work, but the raw API is really just a box of parts. Building a reliable, production-grade AI agent from scratch gives you ultimate flexibility, but it costs a lot in terms of complexity, unreliability, and ongoing maintenance.

Platforms like eesel AI deliver all the power of function calling without the engineering headache. They give teams the ability to deploy capable, custom AI agents that are tightly integrated with their business tools, finally closing the gap between just talking and actually doing.

Ready to build an AI agent that takes real action?

If you're ready to build an AI agent that connects to your tools and automates your support, sign up for eesel AI for free and launch your first AI agent in minutes. No coding required.

Frequently asked questions

What is OpenAI Function Calling, and what problem does it solve?

OpenAI Function Calling (now often called "Tools") is a feature that allows you to describe your available functions to an LLM. It solves the problem of connecting an AI's conversational abilities to real-world actions, enabling it to ask your application to perform tasks like looking up data or updating systems.

Does the LLM run my code?

No, the LLM does not run your code. It simply analyzes a user's request and, if appropriate, responds with a structured JSON object indicating which function your application should run and what arguments to use. Your application then executes that function.

How does the function calling flow work?

The process involves your app first describing its tools to the model. When a user makes a request, the model suggests a function and its arguments. Your application then runs that function, and finally, you pass the result back to the model so it can formulate a natural-language response to the user.

What are the common use cases?

Businesses commonly use it to connect AIs to live data, such as looking up order statuses in a database or account details in a CRM. It's also used to trigger actions in other apps, like creating a Jira ticket, or to extract and format specific data from messy text.

What are the main challenges of building with it from scratch?

Key challenges include orchestrating multi-step workflows, ensuring reliability with robust error handling, and the model's difficulty with chaining multiple functions. There are also significant development and maintenance overheads due to manual JSON schema definition and API changes.

How do platforms like eesel AI make this easier?

Platforms like eesel AI provide visual workflow builders and pre-built actions, abstracting away the complex coding, orchestration, and error handling. They simplify the definition of custom tools and offer simulation modes to test agents confidently before deployment.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.