So, you want to get your own documents into OpenAI's models. It's a pretty common goal: you want to build a customer support bot that actually knows your help docs, or fine-tune a model on your company's private data. The OpenAI Files API is your ticket to making that happen.

But here's the catch: working directly with the API can feel like you're building a ton of plumbing just to get the water running. It's a code-heavy process with a lot of steps, waiting around for things to process, and some tricky file management.

This guide will walk you through all of it, from the basic API calls to the common headaches developers run into. We'll cover how to upload, manage, and use files the DIY way, and then explore a much simpler path for businesses that just want to get the job done.

What is the OpenAI Files API?

The OpenAI Files API is a set of tools that lets you upload, manage, and delete files on OpenAI's servers. Think of it as a cloud drive specifically for the documents you want your AI applications to use.

When you upload a file, you have to give it a "purpose", which is just a way of telling OpenAI how you plan to use it. The main purposes you'll see are:

- "assistants": This is for giving documents to the Assistants API. It's often used with the "file_search" tool (which used to be called retrieval), letting an assistant look up information in your files to answer questions.
- "fine-tune": You'll use this when you're providing training data to create a custom, fine-tuned model.
- "vision": As you might guess, this is for uploading images for models like GPT-4o to analyze.
- "batch": This is for feeding input files to the Batch API, which is handy for running large jobs without waiting for them in real-time.

Basically, the Files API is the first step to building AI that knows more than just what it learned from the public internet.
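To make the "purpose" idea concrete, here's a tiny sketch that checks a purpose string before any upload is attempted, so a typo fails early. `KNOWN_PURPOSES` and `check_purpose` are hypothetical names for illustration, not part of the OpenAI SDK, and the set only covers the four purposes listed above (the API may accept others).

```python
# Purposes covered in this guide; the API itself may accept others.
KNOWN_PURPOSES = {
    "assistants": "documents for the Assistants API and file_search",
    "fine-tune": "training data for a fine-tuned model",
    "vision": "images for a model to analyze",
    "batch": "input files for the Batch API",
}


def check_purpose(purpose: str) -> str:
    """Return the purpose unchanged, or raise if it is not one listed above."""
    if purpose not in KNOWN_PURPOSES:
        allowed = ", ".join(sorted(KNOWN_PURPOSES))
        raise ValueError(f"Unknown purpose {purpose!r}; expected one of: {allowed}")
    return purpose
```

Calling `check_purpose("fine_tune")` (underscore instead of hyphen) raises a `ValueError` that lists the accepted values.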

How to use the OpenAI Files API (the developer-heavy way)

Going directly through the API gives you total control, but it also means you're responsible for writing the code to manage everything. Let's break down what that looks like for a couple of common scenarios.

Uploading and managing files programmatically

The core of the API comes down to a few main commands. Before you start, you'll need the OpenAI SDK for your language of choice. For Python, that's just a quick "pip install openai".

Alright, let's get our hands dirty with some code. Here's what the basic workflow looks like:

- Uploading a file: First, you open a file from your computer and upload it, telling OpenAI what its purpose is.

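    from openai import OpenAI

    # The client reads your API key from the OPENAI_API_KEY environment variable
    client = OpenAI()

    # Upload a file with an "assistants" purpose; the with-block closes it afterwards
    with open("my-knowledge-base.pdf", "rb") as pdf:
        file = client.files.create(file=pdf, purpose="assistants")

    # Keep the ID: every later call refers to the file by it
    print(file.id)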

- Listing your files: Need to see everything you've uploaded? You can pull a list of all the files in your organization.

    # Looping over the result walks through every file, page by page
    for f in client.files.list():
        print(f.id, f.filename, f.purpose)

- Checking on a file: After you upload a file, you can check its metadata to see its status, which will be "uploaded", "processed", or "error".

    # Fetch the file's metadata by ID and inspect its status
    file_info = client.files.retrieve("YOUR_FILE_ID")
    print(file_info.status)

- Deleting a file: If you don't need a file anymore, you can remove it to clean up your storage.

    client.files.delete("YOUR_FILE_ID")

These steps look simple enough for one or two files. But what if you have hundreds? Or thousands? The API doesn't have any bulk operations, so you'd have to build your own scripts to loop through every single file. This is where the developer-led approach starts to feel like a real chore.
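Here's roughly what such a script could look like for uploads. It's a minimal sketch, not an official pattern: `upload_directory` is a hypothetical helper, the `*.pdf` pattern is just an example, and `client` is assumed to be an `OpenAI()` instance like the one created earlier. It sends one request per file and keeps going when a single upload fails.

```python
from pathlib import Path


def upload_directory(client, directory, purpose="assistants", pattern="*.pdf"):
    """Upload every matching file in a directory, one request per file.

    `client` is assumed to be an `openai.OpenAI()` instance (or anything
    exposing the same `files.create` method). Returns (uploaded, failed):
    a dict of filename -> file ID and a dict of filename -> error message.
    """
    uploaded, failed = {}, {}
    for path in sorted(Path(directory).glob(pattern)):
        try:
            with path.open("rb") as fh:
                result = client.files.create(file=fh, purpose=purpose)
            uploaded[path.name] = result.id
        except Exception as exc:  # keep going so one bad file doesn't stop the run
            failed[path.name] = str(exc)
    return uploaded, failed
```

You'd call it as `uploaded, failed = upload_directory(client, "help-docs")` and then decide what to do with anything in `failed`, such as retrying or logging it.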

Using the API with assistants and file search

Strap in, because this next part is a multi-step dance. If you want an Assistant to actually use one of your uploaded files to answer questions, you have to work with something called Vector Stores. A vector store is basically a processed and indexed collection of your files that’s optimized for fast searching.

Here’s the typical workflow you'd have to build from scratch:

- Upload your file(s) with the Files API.
- Create a Vector Store.
- Add your uploaded file(s) to that new Vector Store.
- Wait. And keep checking (or "polling") the file's status until it's fully processed.
- Create an Assistant and make sure to enable the "file_search" tool.
- Attach your Vector Store to the Assistant.
- Create a Thread to start a conversation.
- Add the user's question to the Thread.
- Create a "Run" to get the Assistant to process the question.
- Poll the Run's status until it finally shows as "completed".
- And at last, you can grab the Assistant's answer from the Thread.
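Strung together, that workflow might look something like the sketch below. Treat it as an illustration under a few assumptions rather than a reference implementation: `answer_from_file` is a hypothetical helper, the store name, instructions, and default model are placeholders, and it leans on the Python SDK's `create_and_poll` helpers to do the status checks. Depending on your SDK version, vector stores live under `client.vector_stores` or `client.beta.vector_stores`, so the code tries both.

```python
def answer_from_file(client, path, question, model="gpt-4o"):
    """Upload one document and ask an Assistant a question about it."""
    # Upload the document with the "assistants" purpose.
    with open(path, "rb") as fh:
        uploaded = client.files.create(file=fh, purpose="assistants")

    # Create a vector store, add the file, and wait until it is indexed.
    # Assumption: newer SDKs expose client.vector_stores, older ones
    # keep it under client.beta.vector_stores.
    stores = getattr(client, "vector_stores", None) or client.beta.vector_stores
    store = stores.create(name="knowledge-base")
    stores.files.create_and_poll(vector_store_id=store.id, file_id=uploaded.id)

    # Create an Assistant with file_search enabled and the store attached.
    assistant = client.beta.assistants.create(
        model=model,
        instructions="Answer using the attached documents.",
        tools=[{"type": "file_search"}],
        tool_resources={"file_search": {"vector_store_ids": [store.id]}},
    )

    # Start a Thread and add the user's question to it.
    thread = client.beta.threads.create()
    client.beta.threads.messages.create(
        thread_id=thread.id, role="user", content=question
    )

    # Create a Run and block until it reaches a terminal status.
    run = client.beta.threads.runs.create_and_poll(
        thread_id=thread.id, assistant_id=assistant.id
    )
    if run.status != "completed":
        raise RuntimeError(f"Run ended with status {run.status!r}")

    # Messages come back newest first, so the first one is the reply.
    messages = client.beta.threads.messages.list(thread_id=thread.id)
    return messages.data[0].content[0].text.value
```

Even in this compressed form, a single question touches five different resources (file, vector store, assistant, thread, run), and a real application would also need to reuse or clean them up between questions.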

Notice all the polling? After you add a file to a vector store, you can't use it right away. Your application has to keep asking the API, "Is it done yet?" This adds a layer of complexity and means you have to build solid error handling and state management just to get it working reliably.
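If you do the polling by hand, the loop usually ends up looking something like this. It's a generic sketch: `poll_until` is a hypothetical helper, and the interval and timeout defaults are arbitrary values you'd tune for your own app.

```python
import time


def poll_until(fetch, is_done, interval=2.0, timeout=300.0):
    """Call fetch() repeatedly until is_done(result) is true or time runs out."""
    deadline = time.monotonic() + timeout
    while True:
        result = fetch()
        if is_done(result):
            return result
        # Stop if the next sleep would take us past the deadline.
        if time.monotonic() + interval > deadline:
            raise TimeoutError(f"Gave up after {timeout} seconds")
        time.sleep(interval)
```

For a file, that could be `poll_until(lambda: client.files.retrieve("YOUR_FILE_ID"), lambda f: f.status in ("processed", "error"))`. Note that "error" counts as done here, so the caller still has to check which of the two statuses came back.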

For teams that don't have dedicated AI engineers, building and maintaining this whole system is a pretty big ask. It's a common complaint in developer communities, where people are often surprised by how much work it takes to do something that sounds simple: "answer questions from my documents."

OpenAI Files API pricing and limitations

Before you dive in and start building, it's a good idea to know what you're getting into with costs and limitations.

How much does using the OpenAI Files API cost?

When you use the Files API, you're not just paying for the model's tokens. You also get billed for the storage your files take up.

- Storage Cost: OpenAI charges for storing your files and their indexed versions in vector stores. The rate at the time of writing is $0.10 per GB per day, though you do get the first 1 GB for free.
- Token Costs: When an assistant uses "file_search" to find an answer, the relevant bits of your document are inserted into the model's context window. This uses up prompt tokens, which you get billed for.

This two-part pricing can make it tricky to predict your monthly bill, since it depends on how much data you store and how often your assistant uses it.
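A quick back-of-the-envelope calculation can help here. The sketch below uses the storage rate and free tier quoted above; everything else (the function name, the search volume, the tokens pulled in per search, and the per-token price) is a made-up input you'd replace with your own numbers and your model's actual pricing.

```python
def estimate_monthly_cost(
    stored_gb,
    searches_per_day,
    tokens_per_search,
    price_per_million_tokens,
    days=30,
    storage_rate=0.10,  # USD per GB per day, as quoted above
    free_gb=1.0,        # the first GB is free, as quoted above
):
    """Rough estimate in USD: (storage cost, file_search prompt-token cost)."""
    billable_gb = max(stored_gb - free_gb, 0.0)
    storage = billable_gb * storage_rate * days
    tokens = searches_per_day * tokens_per_search * days
    token_cost = tokens / 1_000_000 * price_per_million_tokens
    return round(storage, 2), round(token_cost, 2)
```

For example, with 5 GB stored, 200 searches a day, 4,000 prompt tokens per search, and a hypothetical price of $2.50 per million prompt tokens, `estimate_monthly_cost(5, 200, 4000, 2.50)` returns `(12.0, 60.0)`: $12 for storage (4 billable GB x $0.10 x 30 days) and $60 for tokens (24 million tokens x $2.50 per million).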

Common challenges and limitations

The raw API is powerful, but it has a few quirks that can slow you down.

- You can't do things in bulk: The API doesn't support uploading or deleting multiple files at once. You have to write a script to loop through them one by one, which can be painfully slow.
- There's no visual interface: You can't just log into a dashboard to see all your uploaded files, check their status, or figure out which assistants are using them. This makes managing and debugging a real headache.
- You have to wait... and check... and wait some more: As we mentioned, file processing is asynchronous. Your code has to constantly check in with the API to see if a file is ready, which complicates your logic and can introduce delays.
- Knowledge management gets messy: Connecting specific files to specific assistants involves juggling "Vector Store" IDs and "tool_resources" objects. There isn't an easy way to just say, "Hey, answer this question using only these three documents," without some careful coding.
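To give a feel for the housekeeping involved, here's a sketch of a cleanup script that removes files one at a time. `delete_files` is a hypothetical helper, `client` is assumed to be an `OpenAI()` instance, and the dry-run default is a design choice so nothing gets deleted until you've looked at the list.

```python
def delete_files(client, purpose=None, dry_run=True):
    """Delete files one by one, optionally only those with a given purpose.

    With dry_run=True (the default) nothing is deleted; the function only
    returns the IDs that would be removed.
    """
    listing = client.files.list(purpose=purpose) if purpose else client.files.list()
    # Collect the IDs first so deletions don't interfere with paging.
    targets = [f.id for f in listing]
    if not dry_run:
        for file_id in targets:
            client.files.delete(file_id)
    return targets
```

A cautious run would be `delete_files(client, purpose="assistants")` to preview the IDs, followed by the same call with `dry_run=False` once you're sure.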

These limitations often mean a project that seems simple at first can balloon into a major engineering effort. You end up spending more time building basic file management tools than you do on your actual application.

The easier way: Managing your knowledge with an integrated platform

Building everything yourself on the OpenAI Files API gives you flexibility, but it's a huge time sink. For most businesses, especially those in customer support or IT, a ready-made platform that hides all that complexity is a much better fit.

This is where a solution like eesel AI comes in. It's built to connect your company's existing knowledge to an AI agent, without you having to write a single line of API code.

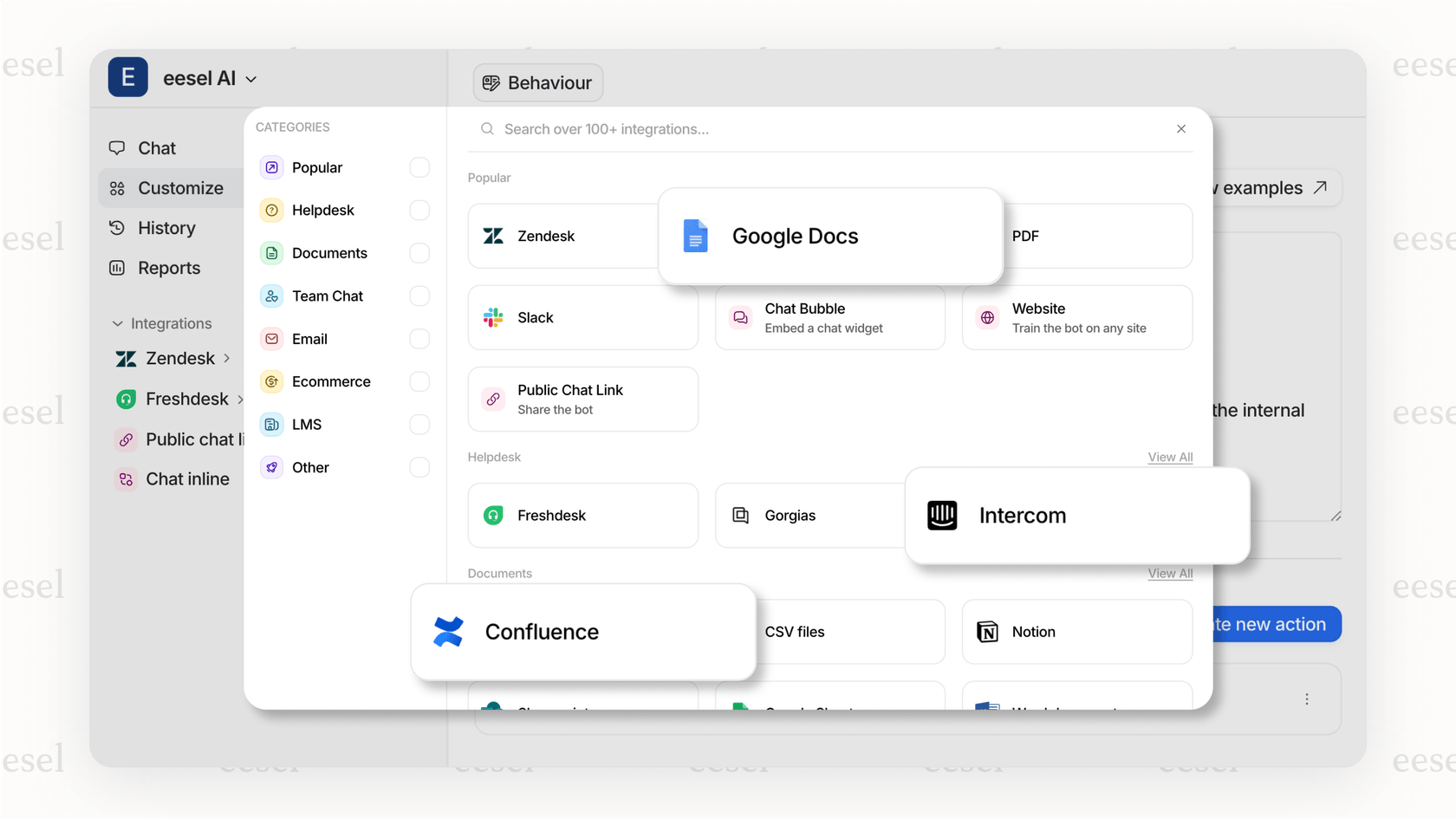

Go live in minutes with one-click integrations

Instead of wrestling with scripts to upload files one by one, eesel AI has simple integrations for the tools you already use. You can connect your help desk (like Zendesk or Freshdesk), your knowledge base (like Confluence or Notion), and your shared documents (like Google Docs) in just a few clicks. eesel AI handles all the file syncing, processing, and indexing behind the scenes.

Unify all your knowledge without the manual labor

The OpenAI Files API makes you upload and organize every single document. With eesel AI, your knowledge is unified from the start. It can even learn from your team's past support tickets to pick up on your brand voice and common customer problems. That means your AI agent is trained on your specific business context from day one, without you having to manually prep and upload thousands of files.

Test with confidence before you launch

One of the scariest parts of building on the raw API is not knowing how your AI will actually perform with real questions. eesel AI's simulation mode takes care of that by testing your AI agent on thousands of your historical support tickets. You can see how it would have responded and get solid forecasts on how many tickets it can resolve and how much money you'll save. You can even start by automating just one category of tickets and expand from there, keeping you in full control.

Final thoughts

The OpenAI Files API is a fundamental piece of the puzzle for creating AI that understands your unique data. For developers who need to build something completely custom, it offers all the power and control you could want.

But for most businesses, the goal isn't to become experts in API orchestration. It's to solve a problem, like freeing up your support team from repetitive questions. The complexity of managing files, polling for statuses, and the lack of a user interface make the direct API a tough road for that.

Platforms like eesel AI handle all that mess for you, offering a dead-simple, self-serve way to connect your knowledge and automate support. Instead of spending months building infrastructure, you can get a solution up and running in minutes and focus on what really matters: making your customers happy.

Ready to put your company's knowledge to work without the engineering headache? Get started with eesel AI for free.

Frequently asked questions

The OpenAI Files API is designed to let you upload, manage, and delete files directly on OpenAI's servers. These files serve as the data source for various AI applications, such as assistants, fine-tuning models, or processing large batches.

To upload a document for an AI assistant, you call client.files.create with purpose="assistants". After uploading, you then need to create a Vector Store, add your file(s) to it, and attach that Vector Store to your Assistant for "file_search" functionality.

The OpenAI Files API does not natively support bulk operations for uploading or deleting files. Developers must write custom scripts to loop through and manage files one by one, which can be time-consuming for large datasets.

Costs for using the OpenAI Files API include both storage and token usage. You are charged $0.10 per GB per day for file storage (with the first 1 GB free), and also for the prompt tokens consumed when an assistant uses "file_search" to access your documents.

Common limitations include the lack of bulk operations, the absence of a visual management interface, and the asynchronous nature of file processing, which requires constant polling. Additionally, managing knowledge by linking specific files to assistants can become complex.

Currently, there is no direct visual interface or dashboard provided by OpenAI to manage files uploaded via the OpenAI Files API. All file management (uploading, listing, checking status, and deleting) must be done programmatically through API calls.

Integrated platforms like eesel AI simplify the process by abstracting away the direct API complexity. They offer one-click integrations with existing knowledge sources, handle file syncing and indexing automatically, and provide tools for testing and deployment without manual API coding.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.