A practical guide to OpenAI evaluation best practices for support teams

Stevia Putri

Katelin Teen

Last edited November 14, 2025


So, you’ve brought an AI support agent onto the team. That's a big step. But how do you really know if it's helping your customers or just creating more headaches for human agents? Going with your "gut feeling" or spot-checking a few conversations isn't going to cut it. Without a solid way to measure performance, you're essentially flying blind. You need real data to feel confident that your AI is accurate, helpful, and staying on-brand.

This guide is here to clear up the confusion around OpenAI Evaluation Best Practices. We'll translate the developer-heavy concepts into a framework that actually makes sense for business and support leaders. We'll walk through the core ideas of AI evaluation and then show you a much more practical way to test and deploy AI confidently, right from your helpdesk.

What are OpenAI evaluation best practices?

Let's break it down. "Evals" are just structured tests to see how well an AI model is doing a specific job. Think of it as a report card for your AI, grading it on things like accuracy, relevance, and reliability.

According to OpenAI’s own documentation, running these evals is essential for improving any app that uses a large language model (LLM). It’s how you stop the AI from sending weird or wrong answers to customers, keep quality consistent, and track whether things are getting better over time, especially when the underlying models are updated.

But here’s the thing: frameworks like the OpenAI Evals API are built for developers. They involve writing code, formatting data in special files (like JSONL), and analyzing the results with scripts. For a business leader, the goal isn't to learn how to code. It's to move from "I think it's working" to "I have the data that proves our AI is hitting our goals and keeping customers happy."

The core evaluation process

If you look at the guidelines from folks like OpenAI and Microsoft, a good evaluation process usually has four main steps. Following this cycle helps make sure your tests are actually useful and lead to real improvements.

Figure: the evaluation cycle. 1. Define goal → 2. Gather data → 3. Choose metrics → 4. Test and iterate → back to 1.

1. Define your goal

First, you need to decide what "success" looks like for a specific task. And you have to be specific. "Answers questions well" is too vague. A better goal would be, "The AI should accurately explain our 30-day return policy by referencing the official help center article." Now that’s something you can actually measure.

2. Gather your data

To test your AI, you need a "ground truth" dataset. This is just a fancy term for a collection of questions paired with perfect, expert-approved answers. This data should look like the real questions your customers ask, covering the common stuff, the weird edge cases, and everything in between.
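To make this concrete, here is a minimal sketch of a ground truth dataset stored as JSONL, which is simply one JSON object per line. The field names (`question`, `ideal_answer`, `category`) and the sample rows are assumptions made up for illustration, not a schema that OpenAI or any helpdesk requires.

```python
import json

# Hypothetical examples; real rows would come from your own tickets.
GROUND_TRUTH = [
    {
        "question": "Can I return shoes after three weeks?",
        "ideal_answer": "Yes. Items can be returned within 30 days.",
        "category": "returns",
    },
    {
        "question": "I was charged twice, what now??",
        "ideal_answer": "We will refund the duplicate charge once it is confirmed.",
        "category": "billing",
    },
]


def to_jsonl(rows):
    """Serialize rows as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(row, ensure_ascii=False) for row in rows)


def from_jsonl(text):
    """Parse JSONL text back into a list of dicts, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


jsonl_text = to_jsonl(GROUND_TRUTH)
print(jsonl_text)
```

Keeping a `category` on every row is a design choice for this sketch: it lets you check later whether the test set covers the same mix of topics your customers actually write in about.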

3. Choose your metrics

How are you going to score the AI's answers? It could be a simple pass/fail on whether the information is correct, a rating for how well it matches your brand's tone of voice, or checking if it did something specific, like tagging a ticket correctly. Whatever you choose, it should tie directly back to the goal you set in step one.
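The three kinds of checks described above could be sketched roughly like this. Everything here is hypothetical: the `EvalResult` fields, the `grade` and `passed` helpers, and the tone threshold of 4 are invented for illustration, and the substring check is a deliberately crude stand-in for a real correctness check.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """One graded answer; all field names are illustrative."""
    contains_required_fact: bool
    tone_rating: int  # 1 (off-brand) to 5 (on-brand), supplied by a reviewer
    tag_correct: bool


def grade(answer, applied_tag, tone_rating, required_phrase, expected_tag):
    """Score one AI answer against the goal defined in step one."""
    return EvalResult(
        contains_required_fact=required_phrase.lower() in answer.lower(),
        tone_rating=tone_rating,
        tag_correct=(applied_tag == expected_tag),
    )


def passed(result, min_tone=4):
    """Overall pass: the fact is present, the tag is right, the tone is acceptable."""
    return (
        result.contains_required_fact
        and result.tag_correct
        and result.tone_rating >= min_tone
    )


result = grade(
    answer="You can send it back within 30 days.",
    applied_tag="returns",
    tone_rating=5,
    required_phrase="30 days",
    expected_tag="returns",
)
print(result, passed(result))
```

Keeping the three checks as separate fields, rather than collapsing them into one score straight away, makes it easier to see why an answer failed.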

4. Test, check, and repeat

The last step is to run your tests, look at the results, and use what you learn to tweak your AI. Maybe you need to adjust a prompt, point it to a better knowledge source, or change a workflow rule. Evaluation isn't something you do once; it's a loop of testing and improving that keeps your AI performing at its best.
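One simple way to picture the loop is to compare the pass rate of a new run against the previous one after each change. The sketch below assumes each test case has already been reduced to pass or fail. The function names and the 2-point tolerance are arbitrary choices for illustration.

```python
def pass_rate(outcomes):
    """Fraction of test cases that passed (outcomes is a list of booleans)."""
    if not outcomes:
        raise ValueError("no test cases to score")
    return sum(outcomes) / len(outcomes)


def compare_runs(baseline, candidate, tolerance=0.02):
    """Label a candidate run relative to the baseline run."""
    delta = pass_rate(candidate) - pass_rate(baseline)
    if delta > tolerance:
        return "improved"
    if delta < -tolerance:
        return "regressed"
    return "unchanged"


before_prompt_change = [True, True, False, False, True]  # 3 of 5 passed
after_prompt_change = [True, True, True, False, True]    # 4 of 5 passed
print(compare_runs(before_prompt_change, after_prompt_change))
```

With only five test cases a single flipped result moves the pass rate by 20 points, so a comparison like this only means something on a reasonably large test set.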

Key evaluation strategies and metrics

There are a few different ways to grade an AI's performance, and each has its ups and downs. Knowing the options helps you pick the right tool for the job.

Human evaluation

This is the gold standard for quality. You have a human expert read the AI's response and grade it against a set of criteria. It’s fantastic for judging nuanced things like empathy or tone, but it's also incredibly slow, expensive, and a pain to scale. For everyday use, it’s just not practical.

Traditional metric-based evaluation (ROUGE/BLEU)

These are automated systems that score an AI's answer by comparing its text to a "perfect" reference answer. They basically count how many words and phrases overlap.

The catch: As many in the industry point out, these metrics don't capture meaning well. An AI might give a perfectly correct answer using different words, and a ROUGE or BLEU score would still come out low. That rigidity makes them less useful for judging conversational AI.
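To see the problem in miniature, here is a simplified word-overlap score in the spirit of ROUGE-1 recall. It is not the real ROUGE implementation (there is no stemming and no handling of longer phrases), and the example sentences are made up.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def unigram_recall(reference, candidate):
    """Share of reference words that also appear in the candidate.

    This mirrors the idea behind ROUGE-1 recall, without the
    refinements of real implementations.
    """
    ref_counts = Counter(tokenize(reference))
    cand_counts = Counter(tokenize(candidate))
    overlap = sum(min(count, cand_counts[word]) for word, count in ref_counts.items())
    total = sum(ref_counts.values())
    return overlap / total if total else 0.0


reference = "You can return items within 30 days of delivery."
near_copy = "You can return items within 30 days."
paraphrase = "Refunds are accepted for a month after your order arrives."

print(round(unigram_recall(reference, near_copy), 2))
print(round(unigram_recall(reference, paraphrase), 2))
```

The near copy shares 7 of the reference's 9 words and scores about 0.78, while the paraphrase shares none and scores 0.0, even though a customer would read both as the same policy.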

LLM-as-a-judge

This is a newer approach where you use a powerful AI model (like GPT-4) to act as a "judge" and grade the output of your support AI. It's faster and cheaper than using people, and it understands context way better than simple text-matching tools.

The catch: This method can have its own biases (for example, it sometimes prefers longer answers for no good reason) and still needs some careful setup to work well. It's a definite improvement, but it isn't a silver bullet and often still needs a technical eye on it.
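A rough sketch of that "careful setup" might look like the following. The prompt wording, the PASS/FAIL format, and every function name are assumptions for illustration. `call_model` stands for whatever function sends a prompt to your chosen model and returns its text reply, and `fake_model` is a stand-in so the example runs without any API access.

```python
JUDGE_TEMPLATE = """You are grading a customer support answer.
Question: {question}
Reference answer: {reference}
Answer to grade: {answer}
Judge only factual agreement with the reference. Do not reward length.
Reply with exactly one word: PASS or FAIL."""


def build_judge_prompt(question, reference, answer):
    """Fill the grading template for one test case."""
    return JUDGE_TEMPLATE.format(question=question, reference=reference, answer=answer)


def parse_verdict(raw_reply):
    """Map the judge's reply to True or False; None means 'needs human review'."""
    word = raw_reply.strip().upper().rstrip(".")
    if word == "PASS":
        return True
    if word == "FAIL":
        return False
    return None


def judge(question, reference, answer, call_model):
    """Grade one answer. `call_model` is any function mapping prompt text to reply text."""
    return parse_verdict(call_model(build_judge_prompt(question, reference, answer)))


def fake_model(prompt):
    """Stand-in for a real LLM call so the sketch runs offline."""
    graded_part = prompt.split("Answer to grade:")[1]
    return "PASS" if "30 days" in graded_part else "FAIL"


verdict = judge(
    "Can I return shoes?",
    "Returns are accepted within 30 days.",
    "Yes, within 30 days.",
    fake_model,
)
print(verdict)
```

Two choices here aim at the weaknesses mentioned above: the prompt explicitly tells the judge not to reward length, and any reply that is not a clean PASS or FAIL is routed to a person instead of being guessed at.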

| Evaluation Method | Speed | Cost | Scalability | Nuance |
|---|---|---|---|---|
| Human Evaluation | Slow | High | Low | High |
| Metric-based (ROUGE) | Fast | Low | High | Low |
| LLM-as-a-Judge | Fast | Medium | High | Medium |

Practical limitations of developer-focused OpenAI evaluation

While the theory behind OpenAI Evaluation Best Practices is solid, the tools themselves are often a poor fit for a busy support team. Here’s where the textbook approach tends to fall apart in the real world.

Requires developer expertise

To run evals with the standard frameworks, you have to be comfortable with APIs, command-line tools, and formatting data in JSONL. That's just not realistic for most support leaders, who need tools they can manage themselves without filing a ticket with the engineering team and waiting.

The process is slow and disconnected

The typical workflow involves pulling data out of your helpdesk, running tests in a completely separate place, and then trying to make sense of the results. It's clunky and doesn't give you feedback where you actually work: inside your helpdesk. This creates a gap between testing and actually running your support operations.

Test datasets are often too small or generic

Building a good set of test data is tough. A lot of teams end up either testing on a handful of examples they wrote themselves or using generic industry benchmarks. Neither one really captures the unique, and often messy, variety of your real customer conversations, which can give you a false sense of security.
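One way to spot this kind of gap is to compare the topic mix of your test set against the topic mix of your real tickets. The sketch below assumes every ticket and test case already carries a category label. The function names, the sample labels, and the 0.5 ratio are invented for illustration.

```python
from collections import Counter


def category_shares(categories):
    """Map each category to its share of the list."""
    counts = Counter(categories)
    total = sum(counts.values())
    return {name: count / total for name, count in counts.items()}


def coverage_gaps(ticket_categories, test_categories, min_ratio=0.5):
    """Return categories whose share of the test set is less than
    `min_ratio` times their share of real tickets."""
    real = category_shares(ticket_categories)
    tested = category_shares(test_categories)
    return sorted(
        name for name, share in real.items()
        if tested.get(name, 0.0) < min_ratio * share
    )


# Hypothetical category labels for 10 real tickets and 4 test cases.
real_tickets = ["returns"] * 4 + ["billing"] * 3 + ["shipping"] * 2 + ["login"]
test_set = ["returns", "returns", "returns", "billing"]
print(coverage_gaps(real_tickets, test_set))
```

In this made-up example, shipping and login questions make up 30% of real tickets but never appear in the test set, so a high score on that test set would say nothing about them.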

A better approach: Business-focused evaluation with eesel AI

Instead of making you learn a developer's toolkit, some platforms build evaluation right into a simple workflow that anyone can use. eesel AI was designed from the ground up to solve these practical problems for support teams.

Get started in minutes: No-code evaluation

Forget about complicated setups. eesel AI is a truly self-serve platform with one-click helpdesk integrations. You can connect your knowledge from places like Zendesk or Confluence and start evaluating your AI's potential without writing a single line of code or sitting through a sales demo.

Test with confidence: Use past tickets for evaluation

This is where it gets really powerful. eesel AI's simulation mode can run your AI setup on thousands of your real, historical tickets. This gives you an accurate, data-backed forecast of how your AI would have performed on real customer issues. No more guessing and no more building test datasets by hand.

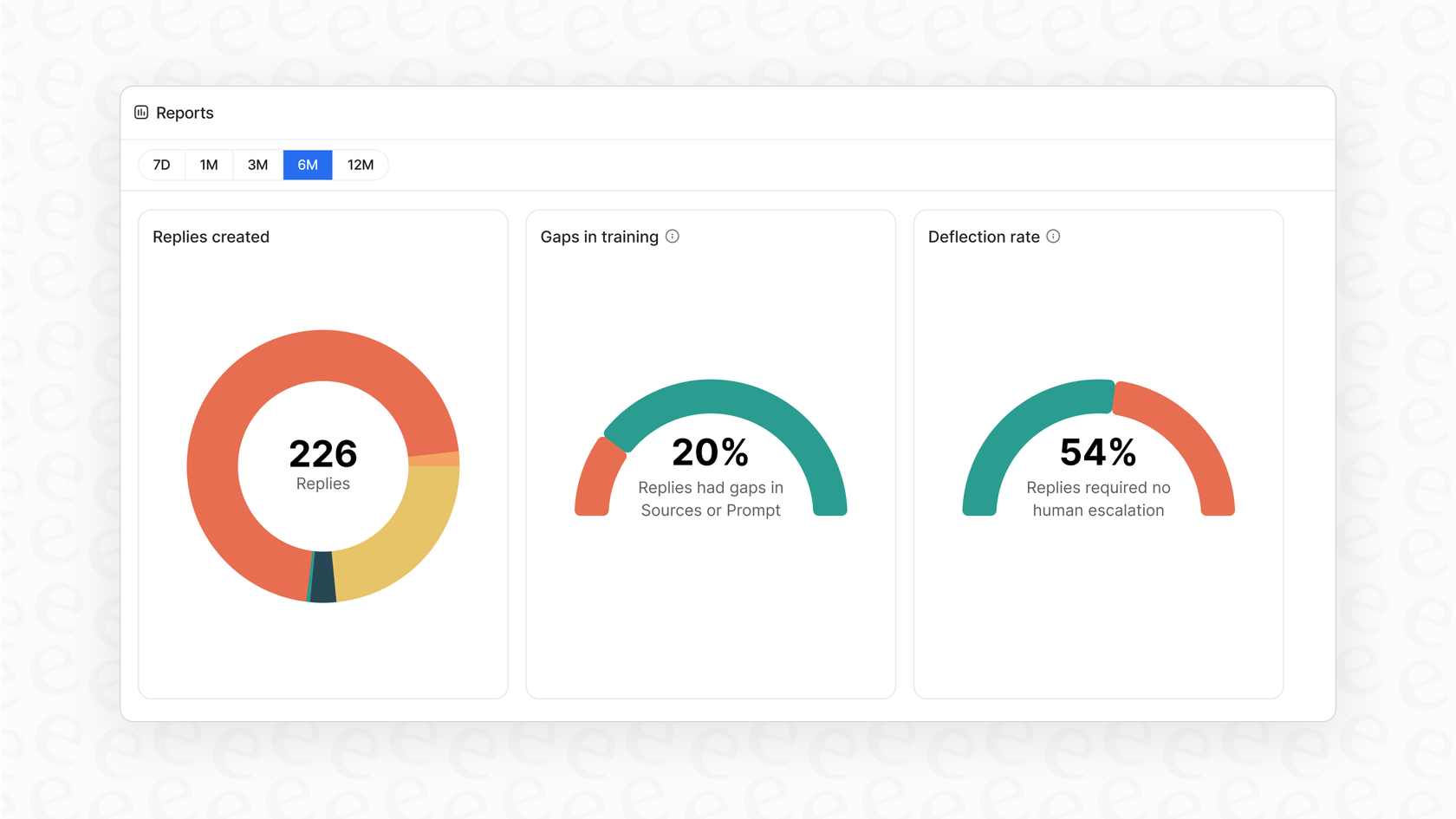

Get clear next steps, not just a score

The actionable reporting in eesel AI does more than give you a pass/fail grade. It analyzes the simulation to show you which topics are ripe for automation. Even better, it points out the gaps in your knowledge base, giving you a clear to-do list of which help articles to write next, all based on real customer questions.

Roll out gradually and safely

With eesel AI, you can launch without the risk. After running a simulation, you can choose to automate just a small slice of tickets, like only inquiries about "order status." You can watch how it performs in real time and expand the scope as you get more comfortable. This kind of careful control gives you a smooth, safe rollout that you just can't get with platforms that demand an all-or-nothing approach.
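As a generic illustration of the idea, and not a description of how eesel AI is configured or implemented, a gradual rollout boils down to two rules: only hand the AI tickets from an approved slice, and only widen that slice once the results justify it. The topic set, function names, and thresholds below are all hypothetical.

```python
AUTOMATED_TOPICS = {"order status"}  # start with one narrow slice


def route_ticket(topic, automated_topics=AUTOMATED_TOPICS):
    """Send a ticket to the AI only if its topic is in the approved slice."""
    return "ai" if topic.strip().lower() in automated_topics else "human"


def ready_to_expand(resolved_by_ai, handled_by_ai, min_rate=0.9, min_volume=50):
    """Suggest widening the slice only after enough tickets at a high success rate."""
    if handled_by_ai < min_volume:
        return False
    return resolved_by_ai / handled_by_ai >= min_rate


print(route_ticket("Order Status"))
print(route_ticket("Refund request"))
print(ready_to_expand(resolved_by_ai=95, handled_by_ai=100))
```

The volume check matters as much as the success rate: nine good answers out of ten tickets is not yet enough evidence to hand over a new topic.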

Stop guessing, start measuring

Putting AI to work in customer support isn't a matter of if anymore, but how. A huge part of the "how" is having a dependable way to evaluate it. While the concepts behind OpenAI Evaluation Best Practices point us in the right direction, the standard tools are often too technical and disconnected for business teams.

The right platform makes sophisticated evaluation a simple, built-in part of your operations. By embedding simulation and reporting directly into a self-serve workflow, eesel AI lets you test on your own data and deploy with confidence. You can finally stop hoping your AI works and start proving it.

Frequently asked questions

OpenAI Evaluation Best Practices center on running structured tests ("evals") to measure an AI model's performance on specific tasks, like answering customer questions. They are crucial for ensuring your AI support agent is accurate, reliable, and consistent, which prevents poor customer experiences and builds trust.

While many frameworks are developer-focused, platforms like eesel AI offer no-code solutions. These tools integrate directly with your helpdesk, allowing you to simulate AI performance on historical tickets and get actionable insights without technical expertise.

Standard OpenAI Evaluation Best Practices often require coding skills, involve slow and disconnected workflows, and rely on potentially small or generic test datasets. These limitations make them challenging for busy support teams without dedicated developer resources.

To apply OpenAI Evaluation Best Practices, you need a "ground truth" dataset. This consists of real customer questions paired with expert-approved, perfect answers, reflecting the diverse inquiries your customers typically ask.

Yes, human evaluation is the gold standard within OpenAI Evaluation Best Practices for nuanced judgments like tone or empathy. However, it is slow, expensive, and difficult to scale for continuous, large-volume testing.

LLM-as-a-Judge is a contemporary method within OpenAI Evaluation Best Practices where a powerful AI grades your support AI's output. It's faster and understands context better than traditional metrics, though it can have biases and requires careful setup.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.