Let's be honest, voice is the new keyboard. We're all talking to our devices constantly, whether it's asking a smart speaker for a recipe or getting stuck in a customer support phone menu. But if you've ever actually tried to build an app with voice features, you know it can be a real headache: complex and often expensive.

The OpenAI Audio Speech API is changing that. It’s the same tech that powers cool stuff like ChatGPT’s voice mode, and it gives you a solid toolkit to bring voice into your own products without pulling your hair out.

In this guide, I'll break down everything you need to know. We'll look at its two main tricks (turning text into speech and speech into text), check out its features, see what people are building with it, and talk about pricing. Most importantly, we'll cover the gotchas you should know about before you write a single line of code.

What is the OpenAI Audio Speech API?

So, what is this thing, really? The OpenAI Audio Speech API isn't just one tool; it's a whole suite of models designed to both understand what we say and speak back like a human. Think of it as having two main jobs that work together to create conversational experiences.

Turning text into lifelike speech

This is the text-to-speech (TTS) side of things. You give it some written text, and it spits out natural-sounding audio. OpenAI has a few models for this, like the newer "gpt-4o-mini-tts" and older ones like "tts-1-hd" if you need top-tier audio quality. It also comes with a handful of preset voices (Alloy, Echo, Nova, and more) so you can pick a personality that fits your app.
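To make that concrete, here's a minimal Python sketch of a text-to-speech call. It assumes the official `openai` Python package (v1 or later) and an API key in your environment. The helper names `build_speech_request` and `synthesize_to_file` are made up for this example, and the voice list is what I believe the built-in set to be, so check it against the current docs.

```python
# Voices believed to be built in at the time of writing (an assumption; verify in the docs).
KNOWN_VOICES = {"alloy", "ash", "ballad", "coral", "echo", "fable",
                "nova", "onyx", "sage", "shimmer", "verse"}


def build_speech_request(text, voice="alloy", model="gpt-4o-mini-tts",
                         response_format="mp3"):
    """Return keyword arguments for client.audio.speech.create()."""
    if not text.strip():
        raise ValueError("text must not be empty")
    if voice not in KNOWN_VOICES:
        raise ValueError(f"unknown voice: {voice}")
    return {"model": model, "voice": voice, "input": text,
            "response_format": response_format}


def synthesize_to_file(client, text, out_path, **options):
    """Stream the generated audio to out_path using an OpenAI client."""
    request = build_speech_request(text, **options)
    with client.audio.speech.with_streaming_response.create(**request) as response:
        response.stream_to_file(out_path)
    return out_path


# Example usage (needs `pip install openai` and OPENAI_API_KEY set):
# from openai import OpenAI
# synthesize_to_file(OpenAI(), "Your order has shipped.", "reply.mp3", voice="nova")
```

Keeping the request builder separate from the network call makes it easy to validate inputs in tests without spending any API credits.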

Converting audio into accurate text

On the flip side, you have speech-to-text (STT), which does the opposite. You feed it an audio file, and it transcribes what was said into written text. This is handled by models like "whisper-1" (built on the well-known open-source Whisper model) and newer versions like "gpt-4o-transcribe". And it's not just for English; it can transcribe audio in dozens of languages or even translate foreign audio directly into English, which is incredibly handy.
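Here's a rough Python sketch of both jobs, transcription and translation into English. It assumes the official `openai` package (v1 or later); the function names are my own, and my understanding is that the translation endpoint runs on "whisper-1", so treat that as an assumption to verify.

```python
def transcribe_file(client, path, model="gpt-4o-transcribe"):
    """Transcribe an audio file in the language it was spoken in."""
    with open(path, "rb") as audio:
        result = client.audio.transcriptions.create(model=model, file=audio)
    return result.text


def translate_file_to_english(client, path):
    """Turn non-English speech into English text."""
    with open(path, "rb") as audio:
        # Assumption: translations are served by the whisper-1 model.
        result = client.audio.translations.create(model="whisper-1", file=audio)
    return result.text


# Example usage (needs `pip install openai` and OPENAI_API_KEY set):
# from openai import OpenAI
# print(transcribe_file(OpenAI(), "support_call.mp3"))
```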

Key features and models of the OpenAI Audio Speech API

The real magic of the OpenAI Audio Speech API is how flexible it is. Whether you're analyzing recorded calls after the fact or building a voice assistant that needs to think on its feet, the API has you covered.

Realtime vs. standard processing

You have two main ways to handle audio. For standard processing, you just upload an audio file (up to 25 MB) and wait for the transcription to come back. This works perfectly for things like getting transcripts of meetings or reviewing customer support calls.
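If a recording is bigger than the upload limit, you'll need to split it first. The sketch below only does the planning arithmetic; it assumes "25 MB" means 25 × 1024 × 1024 bytes and that the file has a roughly constant bitrate. `plan_chunks` is a hypothetical helper, not part of any SDK.

```python
import math

# Assumption: the 25 MB limit is interpreted as 25 MiB. Verify against the docs.
MAX_UPLOAD_BYTES = 25 * 1024 * 1024


def plan_chunks(size_bytes, duration_seconds, limit=MAX_UPLOAD_BYTES):
    """Return (chunk_count, seconds_per_chunk) so each chunk fits under the limit.

    Assumes size scales linearly with duration (constant bitrate).
    """
    if size_bytes <= 0 or duration_seconds <= 0:
        raise ValueError("size and duration must be positive")
    chunk_count = max(1, math.ceil(size_bytes / limit))
    return chunk_count, duration_seconds / chunk_count
```

For example, a one-hour, 60 MiB recording works out to three 20-minute chunks. The actual cutting needs an audio tool, and it's worth cutting at pauses so you don't split a word in half.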

For more interactive apps, you’ll want to use realtime streaming. This is done through the Realtime API and uses WebSockets to transcribe audio as it's being spoken. This snappy, low-latency approach is what you need if you're building a voice agent that has to understand and reply in the moment, just like a real conversation.
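Under the hood, streaming means sending small slices of audio as JSON messages over the socket. The sketch below builds those messages without opening a connection. The `input_audio_buffer.append` event type comes from the Realtime API; the chunk size, the 24 kHz 16-bit mono assumption, and the helper name are illustrative choices of mine.

```python
import base64
import json


def audio_append_events(pcm_bytes, chunk_size=4800):
    """Yield JSON strings that append raw audio to the Realtime input buffer.

    chunk_size=4800 bytes is about 100 ms of 16-bit mono audio at 24 kHz
    (an illustrative choice, not a documented requirement).
    """
    for start in range(0, len(pcm_bytes), chunk_size):
        chunk = pcm_bytes[start:start + chunk_size]
        yield json.dumps({
            "type": "input_audio_buffer.append",
            "audio": base64.b64encode(chunk).decode("ascii"),
        })
```

Each string would be sent over an already-open WebSocket with whatever client library you use; session setup and authentication are left out of this sketch.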

Voice, language, and format customization

Customization is a big deal here. For text-to-speech, you can pick from 11 built-in voices. They’re mainly tuned for English, but they can handle a bunch of other languages pretty well. If you're curious, you can give them a listen on OpenAI.fm, OpenAI's interactive demo. On the speech-to-text side, Whisper was trained on 98 languages, so the language support is seriously impressive.

You also get control over the file formats. TTS can create audio in MP3, Opus, AAC, FLAC, WAV, and raw PCM. Each has its use; WAV, for example, suits low-latency apps because it's uncompressed and skips the decoding step. For speech-to-text, you can get your transcript back as plain text, a JSON object, or even an SRT file if you need subtitles for a video.
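If you ask for SRT output, you get plain subtitle text back, which is easy to work with. Here's a small sketch that parses SRT into start time, end time, and text. It relies only on the standard SRT layout; `parse_srt` is a name I've made up.

```python
import re

_TIME = re.compile(r"(\d+):(\d{2}):(\d{2})[,.](\d{3})")


def _to_seconds(stamp):
    """Convert an 'HH:MM:SS,mmm' timestamp to seconds."""
    match = _TIME.fullmatch(stamp.strip())
    if match is None:
        raise ValueError(f"bad timestamp: {stamp!r}")
    hours, minutes, seconds, millis = map(int, match.groups())
    return hours * 3600 + minutes * 60 + seconds + millis / 1000


def parse_srt(srt_text):
    """Parse SRT subtitle text into (start_seconds, end_seconds, text) tuples."""
    cues = []
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = block.splitlines()
        if len(lines) < 3 or "-->" not in lines[1]:
            continue  # skip malformed blocks
        start, end = lines[1].split("-->")
        cues.append((_to_seconds(start), _to_seconds(end), " ".join(lines[2:])))
    return cues
```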

Advanced options: Prompting and timestamps

Two of the most useful features for getting better transcriptions are prompting and timestamps.

The "prompt" parameter lets you give the model a cheat sheet. If your audio has specific jargon, company names, or acronyms, you can list them in the prompt to help the model catch them correctly. For example, a prompt can help it transcribe "DALL·E" instead of hearing it as "DALI."
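As a sketch of how you might build that cheat sheet, the helper below turns a list of terms into a prompt string and passes it along with the transcription request. It assumes the official `openai` package (v1 or later); the "Glossary:" wording and both function names are my own choices, not anything the API requires.

```python
def build_glossary_prompt(terms):
    """Join domain terms into a short prompt, dropping blanks and duplicates."""
    unique = []
    for term in terms:
        term = term.strip()
        if term and term not in unique:
            unique.append(term)
    if not unique:
        return ""
    return "Glossary: " + ", ".join(unique)


def transcribe_with_glossary(client, path, terms, model="whisper-1"):
    """Transcribe a file, passing the glossary through the prompt parameter."""
    extra = {}
    prompt = build_glossary_prompt(terms)
    if prompt:
        extra["prompt"] = prompt  # only send a prompt when there is one
    with open(path, "rb") as audio:
        result = client.audio.transcriptions.create(model=model, file=audio, **extra)
    return result.text
```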

For really detailed analysis, the "timestamp_granularities" parameter (on the "whisper-1" model, with "response_format" set to "verbose_json") can give you word-by-word timestamps. This is a lifesaver for support teams reviewing calls, as they can click to the exact moment a specific word was said.
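Once you have word-level timestamps, jumping to a word is a simple lookup. The sketch below assumes each word arrives as a dict with "word", "start", and "end" keys, which is the shape I'd expect after converting the response to plain JSON; `find_word_times` is a hypothetical helper.

```python
# The request itself would look roughly like this (openai v1 SDK assumed):
# transcript = client.audio.transcriptions.create(
#     model="whisper-1", file=audio, response_format="verbose_json",
#     timestamp_granularities=["word"])


def find_word_times(words, target):
    """Return (start, end) second pairs for every occurrence of target.

    `words` is a list of dicts such as {"word": "refund", "start": 12.4, "end": 12.9}.
    Matching ignores case and surrounding punctuation.
    """
    wanted = target.casefold()
    return [(w["start"], w["end"]) for w in words
            if w["word"].strip(" .,!?").casefold() == wanted]
```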

| Feature | "whisper-1" | "gpt-4o-transcribe" & "gpt-4o-mini-transcribe" |
|---|---|---|
| Primary Use Case | General-purpose transcription based on the open-source Whisper model. | Higher quality, integrated with GPT-4o architecture. |
| Output Formats | "json", "text", "srt", "verbose_json", "vtt" | "json" or "text" only. |
| Timestamps | Supported at segment and word level (with "verbose_json"). | Not supported, since timestamps require "verbose_json". |
| Streaming | Not supported for completed files. | Supported with "stream=True". |
| Realtime Streaming | No | Yes, via the Realtime API. |
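With "stream=True", the transcript arrives as a series of events rather than one response. Here's a hedged sketch of stitching the pieces together. I'm assuming the text chunks arrive as events whose type is "transcript.text.delta" with a `delta` attribute, so verify the event names against the current API reference; `collect_stream_text` is my own helper.

```python
def collect_stream_text(events, on_delta=None):
    """Concatenate text deltas from a streamed transcription.

    Assumption: incremental text arrives in events with
    type == "transcript.text.delta" and a `delta` attribute.
    """
    parts = []
    for event in events:
        if getattr(event, "type", None) == "transcript.text.delta":
            parts.append(event.delta)
            if on_delta:
                on_delta(event.delta)  # e.g. update a live caption
    return "".join(parts)


# Example usage (openai v1 SDK assumed):
# stream = client.audio.transcriptions.create(
#     model="gpt-4o-mini-transcribe", file=audio, stream=True)
# text = collect_stream_text(stream, on_delta=print)
```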

Common OpenAI Audio Speech API use cases in customer support and beyond

While you could use the OpenAI Audio Speech API for almost anything, it's a real game-changer for customer support and business communication. Here are a few ways people are using it.

Building interactive voice agents (IVAs)

The coolest use case is probably building interactive voice agents (IVAs) that can handle customer calls. A customer rings up, the Realtime API transcribes what they're saying instantly, an LLM figures out what they want, and the TTS API speaks back with a human-like voice. This allows you to offer 24/7 support and give immediate answers to simple questions like, "Where's my package?" or "How do I reset my password?"
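The loop described above can be sketched in a few lines. This version is deliberately abstract: `transcribe`, `generate_reply`, and `synthesize` are placeholders you'd wire up to the speech-to-text API, your LLM, and the TTS API, and none of the names come from OpenAI's SDK.

```python
def handle_turn(audio_in, history, transcribe, generate_reply, synthesize):
    """Run one caller turn: speech -> text -> reply text -> speech.

    `history` is a list of {"role", "content"} dicts that is updated in place
    so the next turn keeps the conversation's context.
    """
    user_text = transcribe(audio_in)
    history.append({"role": "user", "content": user_text})
    reply_text = generate_reply(history)
    history.append({"role": "assistant", "content": reply_text})
    return synthesize(reply_text)
```

Passing the three steps in as functions keeps the turn logic testable with stubs, and lets you swap models without touching the loop. A real agent would also need to handle interruptions and errors, which this sketch skips.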

Transcribing and analyzing support calls

For any business with a call center, being able to transcribe and analyze calls is like striking gold. With the speech-to-text API, you can get a written record of every single conversation automatically. This is amazing for quality control, training new agents, and making sure you're staying compliant. By scanning transcripts for keywords or overall sentiment, you can get a much better feel for what your customers are happy (or unhappy) about.
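Keyword scanning is the easy half of that, and a toy version fits in a few lines of Python. This sketch only counts single-word keywords, ignoring case; sentiment analysis would need a separate model and isn't shown. `keyword_counts` is a made-up helper name.

```python
import re
from collections import Counter


def keyword_counts(transcript, keywords):
    """Count whole-word, case-insensitive occurrences of each single-word keyword."""
    words = Counter(re.findall(r"[a-z']+", transcript.lower()))
    return {kw: words[kw.lower()] for kw in keywords}
```

Run across a day's transcripts, counts like these give you a quick signal on which topics (refunds, cancellations, and so on) are coming up most.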

Creating accessible and multi-format content

The TTS API makes it super easy to turn your written content into audio. You can create audio versions of your help center articles, blog posts, and product docs. This makes your content accessible to people with visual impairments or anyone who just likes to listen to articles while they're driving or doing chores.
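Long articles usually have to be sent in pieces, since the speech endpoint caps how much text one request can take. The sketch below splits text at sentence boundaries. The 4,096-character default reflects the input limit as I understand it from the API reference, so treat it as an assumption; `split_for_tts` is a hypothetical helper.

```python
import re


def split_for_tts(text, max_chars=4096):
    """Split text into chunks of at most max_chars, preferring sentence boundaries."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        # Hard-split any single sentence that is itself too long.
        while len(sentence) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:max_chars])
            sentence = sentence[max_chars:]
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks
```

Each chunk can then go through its own TTS request, and the resulting audio files can be played back to back or joined with an audio tool.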

Practical limitations of building with the OpenAI Audio Speech API

So, while the API gives you the raw power, building a truly polished AI agent that's ready for real customers has a few hidden hurdles. It's good to know about these before you go all-in.

Implementation complexity

Making a few API calls is easy. But building a voice agent that doesn't feel clunky? That's a whole different story. You have to juggle real-time connections, figure out how to handle interruptions when a customer talks over the AI, keep track of the conversation's context, and have developers on hand to fix things when they break. It adds up.

This is why a lot of teams use a platform like eesel AI. It takes care of all that messy backend stuff for you. You can get a voice agent up and running in minutes and focus on what the conversation should be, not why your WebSockets are dropping.

The knowledge and workflow gap

The OpenAI Audio Speech API is great at understanding words, but it doesn't know the first thing about your business. To answer a customer's question, it needs access to your company's knowledge. This usually means you have to build a whole separate Retrieval-Augmented Generation (RAG) system to pipe in information from your helpdesk, internal wikis, and other docs.
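To give a feel for what the retrieval step does, here's a deliberately tiny Python sketch. Real RAG systems typically use embeddings and a vector store; this toy version swaps in simple word overlap so it runs with no dependencies, and all names in it are made up.

```python
import re


def _tokens(text):
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Rank documents by how many question words they share (toy retrieval)."""
    query = _tokens(question)
    scored = [(len(query & _tokens(doc)), doc) for doc in documents]
    ranked = sorted((s for s in scored if s[0] > 0),
                    key=lambda s: s[0], reverse=True)
    return [doc for _, doc in ranked[:top_k]]


def build_grounded_prompt(question, documents):
    """Combine the best-matching snippets with the question for an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

The transcribed customer question goes in as `question`, and the resulting prompt goes to the LLM before the answer is spoken back.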

An integrated platform sidesteps this whole problem. eesel AI connects to all your knowledge sources, from tickets in Zendesk to articles in Confluence and even files in Google Docs, to give your AI agent the context it needs to provide smart, accurate answers right away.

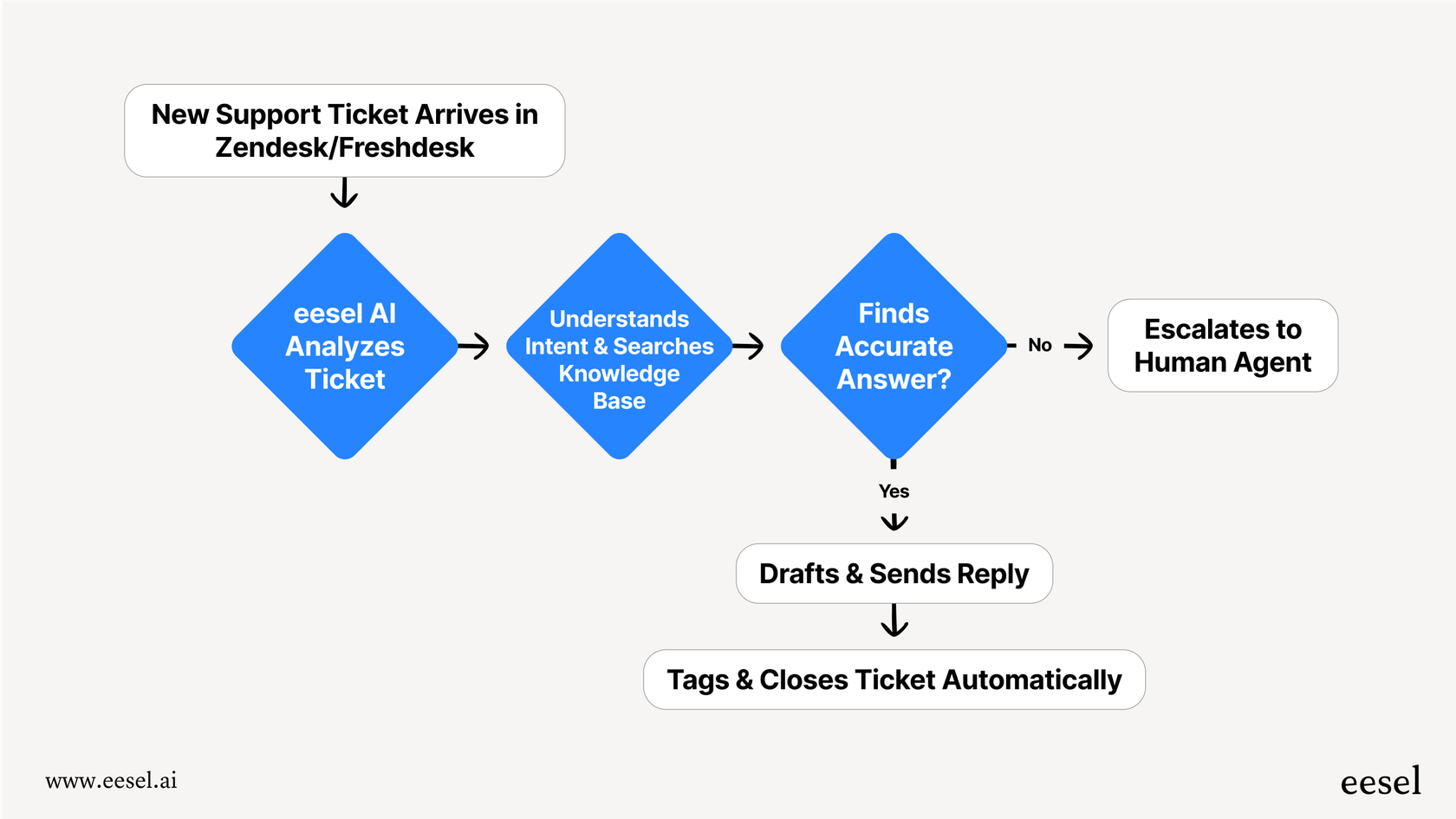

Lack of support-specific features

A good support agent does more than just talk. It needs to be able to do things like triage tickets, escalate tricky issues to a human agent, tag conversations, or look up order information in a platform like Shopify. The raw API doesn't have any of this logic built-in; you'd have to code all of those workflows from scratch.

In contrast, eesel AI comes with a workflow engine that lets you customize exactly how your agent behaves. It includes pre-built actions for common support tasks, giving you full control without needing to write a bunch of code.

OpenAI Audio Speech API pricing

OpenAI’s pricing is split up by model and how you use it. Here’s a quick look at what you can expect to pay for the different audio services.

| Model / API | Service | Price |
|---|---|---|
| Text-to-Speech | "tts-1" (Standard) | $0.015 / 1,000 characters |
| Text-to-Speech | "tts-1-hd" (HD) | $0.030 / 1,000 characters |
| Speech-to-Text | "whisper-1" | $0.006 / minute (rounded to nearest second) |
| Realtime API (Audio) | Audio Input | ~$0.06 / minute ($100 / 1M tokens) |
| Realtime API (Audio) | Audio Output | ~$0.24 / minute ($200 / 1M tokens) |

Note: This pricing is based on the latest info from OpenAI and could change. Always check the official OpenAI pricing page for the most current numbers.
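For quick budgeting, the arithmetic is simple enough to script. This sketch hard-codes the per-unit prices quoted in this section, which may well be out of date by the time you read this; the function names are my own.

```python
# Prices as quoted in this article (USD); check the official pricing page.
TTS_PRICE_PER_1K_CHARS = {"tts-1": 0.015, "tts-1-hd": 0.030}
WHISPER_PRICE_PER_MINUTE = 0.006


def tts_cost(char_count, model="tts-1"):
    """Estimated cost of converting char_count characters to speech."""
    return char_count / 1000 * TTS_PRICE_PER_1K_CHARS[model]


def whisper_cost(duration_seconds):
    """Estimated cost of transcribing duration_seconds of audio."""
    return duration_seconds / 60 * WHISPER_PRICE_PER_MINUTE
```

At these rates, a 10,000-character help article costs about $0.15 to voice with "tts-1" (or $0.30 with "tts-1-hd"), and a 10-minute support call costs about $0.06 to transcribe with "whisper-1".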

The OpenAI Audio Speech API: Powerful tools, but only part of the puzzle

There's no question that the OpenAI Audio Speech API gives you incredibly powerful and affordable tools for building voice-enabled apps. It's lowered the barrier to entry in a huge way.

But it’s important to remember that these APIs are just the building blocks, not a finished house. Turning them into a smart, context-aware AI support agent that can actually solve customer problems takes a lot more work to connect knowledge, build workflows, and manage all the infrastructure.

Putting it all together with eesel AI

This is exactly where eesel AI fits in. While OpenAI provides the powerful engine, eesel AI gives you the whole car, ready to drive.

Instead of spending months building out custom infrastructure, you can use eesel AI to launch a powerful AI agent that plugs right into your existing helpdesk and instantly learns from all your company knowledge. You get all the benefits of advanced models like GPT-4o without the development headaches.

Ready to see how simple it can be? Start your free trial and you can have your first AI agent live in just a few minutes.

Frequently asked questions

The OpenAI Audio Speech API offers two main capabilities: text-to-speech (TTS), which converts written text into natural-sounding audio, and speech-to-text (STT), which transcribes spoken audio into written text. These functions allow for the creation of engaging and interactive voice-enabled applications.

The API supports real-time streaming via its Realtime API, using WebSockets for low-latency transcription as audio is being spoken. This allows voice agents to understand and respond instantly, crucial for interactive voice applications and conversational AI.

In customer support, it's highly impactful for building interactive voice agents (IVAs) that handle immediate customer queries. It's also excellent for transcribing and analyzing support calls for quality control and training, and for creating accessible audio versions of content.

While the API provides core functionality, implementing a robust voice agent involves managing real-time connections, handling interruptions, maintaining conversational context, and extensive custom development. These complexities often require significant engineering effort beyond just API calls.

The raw OpenAI Audio Speech API only handles audio processing; it doesn't inherently connect to your business knowledge. To enable smart answers, you typically need to integrate a separate Retrieval-Augmented Generation (RAG) system that feeds relevant company information to an LLM.

Pricing for the OpenAI Audio Speech API is usage-based and varies by model and service. Text-to-speech is typically charged per 1,000 characters, while speech-to-text (Whisper) is charged per minute of audio. Realtime API usage has separate charges for audio input and output.

For text-to-speech, you can choose from 11 distinct built-in voices, primarily tuned for English but capable of other languages. For speech-to-text, the Whisper model supports transcription in 98 languages, and you can also specify output formats like plain text, JSON, or SRT.

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.