So, AI agents are everywhere now. And if you're thinking about using one (or already have), you’ve probably hit the big, looming question: "How do we actually know if this thing is working?" It’s easy enough to get a bot up and running, but trusting it to handle customer issues correctly, stick to your brand voice, and not quietly set things on fire is a whole different ballgame.

This is the exact problem OpenAI is trying to solve with a toolkit called OpenAI Agent Evals. It’s designed to help developers test and tune their agents. But what does that really mean for you?

Let's cut through the jargon. This guide gives you a straight-up, practical look at OpenAI Agent Evals: what it is, what’s inside, who it’s for, and where it falls short. It's written especially for busy customer support and IT teams who just need something that gets the job done without a six-month engineering project.

What are OpenAI Agent Evals?

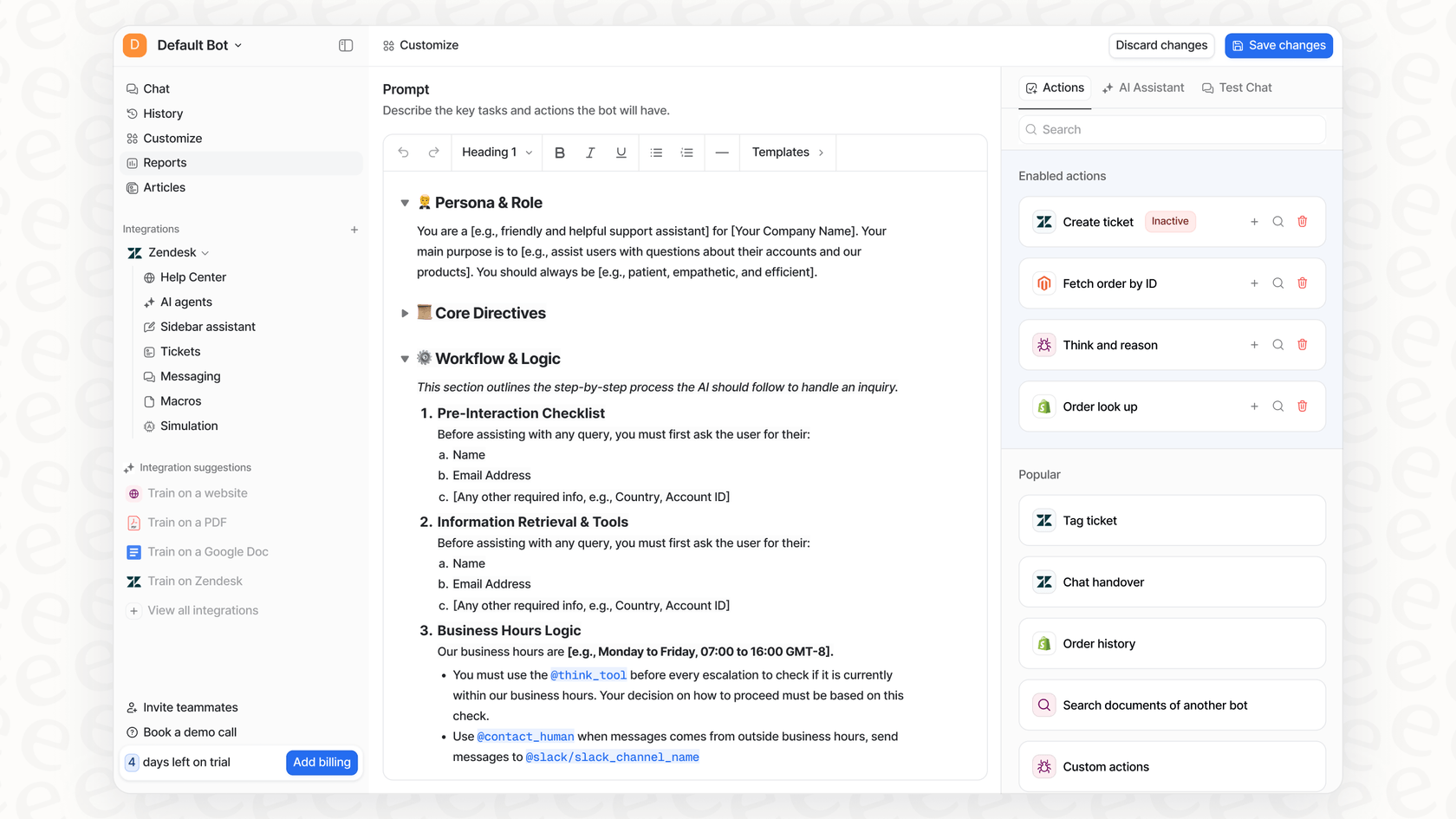

Simply put, OpenAI Agent Evals is a specialized set of tools for developers. It lives inside OpenAI’s broader developer platform, AgentKit, and its whole purpose is to help you test and verify the behavior of an AI agent you’ve built yourself.

Think of it less like a polished performance dashboard and more like a box of high-tech LEGOs for QA testing. It doesn't give you an AI agent. It gives you the low-level building blocks to create your own testing system for an agent you've coded from the ground up using OpenAI's APIs.

The main goal here is to let developers write code to check if their agents are following instructions, using the right tools, and hitting certain quality benchmarks. It’s a powerful setup if you’re building something truly unique, but it’s a "bring-your-own-agent" party. You have to build the agent, and then you have to build the entire system to test it, too.

The core components of the OpenAI Agent Evals framework

The framework isn’t one single thing you can click on. It’s a collection of tools for developers that work together to create a testing cycle. Once you see how the pieces fit together, it becomes pretty clear why this is a tool for engineers, not for the average support manager.

Building test cases with datasets in OpenAI Agent Evals

Everything starts with good test data. In the OpenAI world, this means creating a "dataset." These are usually JSONL files: plain text files where each line is a self-contained test case written as a single JSON object. Each line might have an input, like a customer email, and a "ground truth," which is the expected correct outcome, like the right ticket tag or the perfect reply.
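To make that concrete, here is a minimal sketch of what building and reading such a file could look like. The field names `input` and `ground_truth` and the ticket tags are assumptions chosen for illustration, not a schema that OpenAI requires.

```python
import json

# Hypothetical test cases: the "input" and "ground_truth" field names are
# illustrative, not a schema required by OpenAI.
test_cases = [
    {"input": "I was charged twice for my subscription.", "ground_truth": "billing"},
    {"input": "I can't log in after resetting my password.", "ground_truth": "account_access"},
]


def to_jsonl(cases):
    """Serialize each test case as one JSON object per line."""
    return "\n".join(json.dumps(case) for case in cases)


def from_jsonl(text):
    """Parse JSONL text back into a list of dicts, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


jsonl_text = to_jsonl(test_cases)
print(jsonl_text)
```

Each printed line is one complete test case, which is why a single malformed line can break a whole run.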

Here’s the catch: creating, formatting, and updating these datasets is a completely manual and technical job. You can't just upload a spreadsheet. An engineer has to sit down and carefully craft these files, making sure they cover all the scenarios your agent is likely to face. If your test data is bad, your tests are useless. It takes a ton of planning and coding just to get to the starting line.

This is a world away from a platform like eesel AI, which connects to your help desk and automatically trains on thousands of your past support tickets. It learns your tone of voice, understands common problems, and sees what successful resolutions look like, all without you having to manually create a single test case.

Running programmatic evals and trace grading with OpenAI Agent Evals

Once you have a dataset, you can start running tests using the Evals API. A really neat feature here is "trace grading." It doesn’t just tell you if the agent got the final answer right or wrong; it shows you the agent's step-by-step thought process. You can see exactly which tools it decided to use, in what order, and what information it passed between steps. It’s like getting a full diagnostic report on every single test run.

But again, this all happens in code. You have to write scripts to kick off the tests, make API calls, and then parse the complex JSON responses that come back to figure out what went wrong. It's an incredibly powerful way to debug, but it’s a workflow designed for someone who lives in a code editor, not for a team lead who just needs to see if their bot is ready for prime time.
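As a rough illustration of the idea behind trace grading, the sketch below checks both the final answer and the order of tool calls for one test run. The trace shape, the tool names, and the `grade_trace` helper are all hypothetical and run entirely locally; this is not the format the Evals API returns.

```python
import json

# Hypothetical trace for one test run. This shape is an assumption made for
# illustration; it is not the format OpenAI returns.
raw_trace = json.dumps({
    "test_id": "refund-001",
    "steps": [
        {"tool": "lookup_order", "output": {"order_id": "A123"}},
        {"tool": "check_refund_policy", "output": {"eligible": True}},
        {"tool": "send_reply", "output": {"sent": True}},
    ],
    "final_answer": "refund_approved",
})


def grade_trace(trace, expected_tools, expected_answer):
    """Grade the final answer and the order of tool calls separately."""
    used_tools = [step["tool"] for step in trace["steps"]]
    return {
        "test_id": trace["test_id"],
        "answer_correct": trace["final_answer"] == expected_answer,
        "tools_correct": used_tools == expected_tools,
        "used_tools": used_tools,
    }


result = grade_trace(
    json.loads(raw_trace),
    expected_tools=["lookup_order", "check_refund_policy", "send_reply"],
    expected_answer="refund_approved",
)
print(result)
```

Grading the two separately matters because an agent can land on the right answer while skipping a step, such as the policy check, that you need it to perform.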

Contrast that with the simulation mode in eesel AI. Instead of writing code, you can test your AI agent against thousands of your real historical tickets in a safe sandbox environment. With a few clicks, you can see exactly how it would have replied, review its logic in plain English, and get a clear forecast of its performance. No programming degree required.

Using automated prompt optimization in OpenAI Agent Evals

The Evals toolkit also includes a feature for automated prompt optimization. After a test run, the system can analyze the failures and suggest changes to your prompts (the core instructions you give the agent) to make it perform better. It’s a clever way to help you fine-tune the agent's internal logic by trying out different ways of phrasing your instructions.

While that sounds helpful, it’s just one piece of a very technical, rinse-and-repeat development cycle. Your engineer runs the eval, digs through the results, gets a prompt suggestion, writes new code to implement it, and then runs the whole thing all over again. It’s a continuous loop that requires constant attention from your dev team.
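Stripped down, that loop looks something like the sketch below. It is a toy under stated assumptions: `toy_agent` is a keyword-matching stand-in for a real agent, the candidate prompts are written by hand rather than suggested by OpenAI's optimizer, and the dataset fields are hypothetical.

```python
def toy_agent(prompt, message):
    """Stand-in for a real agent: it can only use tags named in its prompt."""
    text = message.lower()
    if "billing" in prompt and "charged" in text:
        return "billing"
    if "account_access" in prompt and "log in" in text:
        return "account_access"
    return "other"


# Hypothetical dataset with the expected tag for each message.
dataset = [
    {"input": "I was charged twice this month.", "ground_truth": "billing"},
    {"input": "I can't log in to my account.", "ground_truth": "account_access"},
]


def pass_rate(prompt):
    """Run every test case with the given prompt and return the share that pass."""
    passed = sum(toy_agent(prompt, case["input"]) == case["ground_truth"] for case in dataset)
    return passed / len(dataset)


# Hand-written prompt variants standing in for optimizer suggestions.
candidate_prompts = [
    "Tag each ticket as billing or other.",
    "Tag each ticket as billing, account_access, or other.",
]

# One iteration of the cycle: score each variant, then keep the best one.
scores = {prompt: pass_rate(prompt) for prompt in candidate_prompts}
best_prompt = max(scores, key=scores.get)
print(scores)
print("Best prompt:", best_prompt)
```

In a real project every call to `pass_rate` would be a full eval run against the API, so each pass through the loop costs both engineering time and tokens.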

With eesel AI, tweaking your AI’s behavior is as simple as typing in a text box. You can adjust its personality, define when it should escalate a ticket, or tell it how to handle specific situations, all in plain language. You can then instantly run a new simulation to see the impact of your changes. It makes tuning your agent fast, easy, and accessible to anyone on the team.

Who should (and shouldn't) use OpenAI Agent Evals?

This toolkit is seriously powerful, but it’s built for a very specific crowd. For most support and IT teams, using OpenAI Agent Evals is like being handed a car engine and a box of tools when all you wanted to do was drive to the store.

The ideal OpenAI Agent Evals user: AI developers building from scratch

The people who will love OpenAI Agent Evals are teams of AI engineers and developers building complex, one-of-a-kind agent systems from the ground up.

We’re talking about teams trying to replicate complex AI behaviors from academic research papers, or those creating brand new workflows that don't fit into any existing product. These users need absolute, granular control over every tiny detail of their agent's logic, and they are perfectly happy to spend their days writing and debugging code.

The challenge of OpenAI Agent Evals for customer support and ITSM teams

The day-to-day reality for a support or IT manager couldn't be more different. Your goals are practical and immediate: cut down on repetitive tickets, help your team work faster, and keep customers happy. You likely don't have the time, the budget, or a dedicated team of AI engineers to spend months building a custom solution.

OpenAI Agent Evals gives you the engine parts, but you’re still on the hook for building the car, the dashboard, the seats, and the steering wheel. You have to create the agent, build the integrations with your help desk, design a user-friendly reporting interface, and then use the Evals framework to test it all.

This is exactly the problem that platforms like eesel AI were built to solve. It’s an end-to-end solution that gets you up and running in minutes. You get a powerful AI agent right out of the box, seamless one-click integrations with tools like Zendesk, Freshdesk, and Slack, and evaluation tools that are actually designed for support managers, not programmers.

| Feature | DIY with OpenAI Agent Evals | Ready-to-Go with eesel AI |
|---|---|---|
| Setup Time | Weeks, more likely months | Under 5 minutes |
| Technical Skill | You'll need a team of developers | Anyone can do it, no code needed |
| Core Task | Building an AI agent from scratch | Configuring a powerful, pre-built agent |
| Evaluation | Writing code to run programmatic tests | One-click simulations & clear dashboards |
| Integrations | Must be custom-built and maintained | 100+ one-click integrations ready to go |

Understanding OpenAI Agent Evals pricing

One of the trickiest parts of the do-it-yourself approach is the unpredictable pricing. While the "Evals" feature itself doesn't have a separate line item on your bill, you pay for all the underlying API usage needed to run your tests. And those costs can sneak up on you fast.

According to OpenAI's API pricing, your bill is broken down into a few moving parts:

- Model Token Usage: This is the big one. You pay for every single "token" (think of them as pieces of words) that goes into and comes out of the model during a test run. If you're running thousands of tests against a large dataset with a powerful model like GPT-4o, this gets expensive. For context, the standard GPT-4o model costs $5.00 per million input tokens and a whopping $15.00 per million output tokens.
- Tool Usage Costs: If you’ve built your agent to use OpenAI’s built-in tools like "File search" or "Web search", those have their own separate fees. A web search, for example, could add another $10.00 for every 1,000 times your agent uses it during testing.
- Upcoming AgentKit Fees: OpenAI has mentioned that it will start billing for other AgentKit components, like file storage, in late 2025. This just adds another layer of cost complexity to budget for.
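To see how those line items add up, here is a back-of-the-envelope estimate using the prices quoted above. The workload numbers (5,000 test cases, 2,000 input tokens, 500 output tokens, and one web search per test) are hypothetical, and the prices are only the figures cited in this article, so check OpenAI's pricing page before budgeting.

```python
# Prices quoted in this article, in USD. Treat them as assumptions and
# confirm current values on OpenAI's pricing page.
INPUT_PRICE_PER_MILLION = 5.00
OUTPUT_PRICE_PER_MILLION = 15.00
WEB_SEARCH_PRICE_PER_THOUSAND = 10.00


def estimate_eval_cost(num_tests, input_tokens_per_test, output_tokens_per_test,
                       web_searches_per_test=0):
    """Estimate the cost in USD of one full pass over a test dataset."""
    input_cost = num_tests * input_tokens_per_test / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = num_tests * output_tokens_per_test / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    search_cost = num_tests * web_searches_per_test / 1_000 * WEB_SEARCH_PRICE_PER_THOUSAND
    return round(input_cost + output_cost + search_cost, 2)


# Hypothetical workload: one pass over 5,000 test cases.
cost = estimate_eval_cost(
    num_tests=5_000,
    input_tokens_per_test=2_000,
    output_tokens_per_test=500,
    web_searches_per_test=1,
)
print(f"Estimated cost per full run: ${cost:.2f}")  # $50.00 + $37.50 + $50.00 = $137.50
```

That figure is for a single pass. Rerun the suite after every prompt tweak and the total grows in step with the number of runs.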

This usage-based model makes financial planning a nightmare. A single month of heavy testing and refinement could result in a surprisingly large bill. You're essentially punished for being thorough.

This is a huge reason why so many teams prefer the clear, predictable costs of eesel AI's pricing. Our plans are based on a fixed number of AI interactions per month. You get everything (unlimited simulations, reporting, and all integrations) included in one flat fee. There are no hidden per-resolution charges or scary token costs. What you see is what you pay.

Is OpenAI Agent Evals the right tool for the job?

Look, OpenAI Agent Evals is a fantastic and flexible toolkit for highly technical teams building the next big thing in AI. It offers the kind of deep, code-level control you need when you're exploring the absolute limits of what artificial intelligence can do.

But that control comes with a hefty price tag in the form of complexity, time, and a whole lot of engineering hours. For most businesses, especially those in customer support and IT, the mission isn't to conduct a science experiment. It's to solve real business problems, quickly and reliably.

That’s where a practical, all-in-one solution is simply the smarter path. eesel AI handles all the low-level complexity of building, connecting, and testing an AI agent for you. It gives you a business-focused platform with straightforward tools like simulation mode and clear reporting, so you can deploy a trustworthy AI agent in minutes, not months.

Ready to see how easy and safe it can be to launch an AI support agent? Sign up for eesel AI for free and run a simulation on your past tickets. You can see your potential resolution rate and cost savings today.

Frequently asked questions

OpenAI Agent Evals is a specialized toolkit for developers to test and verify the behavior of custom-built AI agents. Its purpose is to provide the foundational tools needed to create a testing system that checks whether an agent consistently follows instructions and meets specific quality standards.

The ideal users for OpenAI Agent Evals are AI engineers and development teams who are building complex, unique agent systems from scratch. These users typically require deep, granular control over their agent's logic and are proficient in coding and debugging.

Building test cases with OpenAI Agent Evals is a highly technical and manual process. It requires engineers to carefully craft "datasets" using JSONL files, creating each test case with an input and the expected "ground truth" outcome.

For most customer support and ITSM teams, OpenAI Agent Evals is generally not a good fit, because it is designed for engineers. A dedicated development team is needed to build the agent, the integrations, and the entire testing infrastructure.

When using OpenAI Agent Evals, the primary cost drivers are underlying API usage, specifically model token usage (for both input and output), and tool usage costs. Heavy testing with advanced models can quickly accumulate unpredictable expenses due to this usage-based pricing.

OpenAI Agent Evals offers "trace grading," a powerful debugging feature that goes beyond simple pass/fail results. It provides a step-by-step diagnostic report of the agent's thought process, showing which tools were used, in what order, and what information was exchanged.

OpenAI Agent Evals includes automated prompt optimization, which analyzes test failures and suggests changes to the agent's core instructions or "prompts." This feature helps developers fine-tune the agent's internal logic for improved performance in subsequent runs.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.