If you’re keeping an eye on the AI space, you’ve probably heard the name Mistral AI popping up everywhere. They've been consistently releasing impressive models that give the big players a run for their money. And with their latest announcements, they're not hitting the brakes.

So, let's cut through the hype. This guide is all about the practical side of the new Mistral AI models. We'll look at what's new, figure out the difference between their open-source and paid stuff, and explore how you can actually use them for your business, no developer army required.

According to Mistral, its new Medium 3 model competes with the likes of GPT-4o and Claude 3.7 at a fraction of the cost.

What is Mistral AI?

You can think of Mistral AI as the European challenger in a field that's been mostly dominated by American giants like OpenAI and Google. They were founded in 2023 by a few folks who used to work at Google DeepMind and Meta, and they've grown incredibly fast, recently hitting a valuation of around $6 billion.

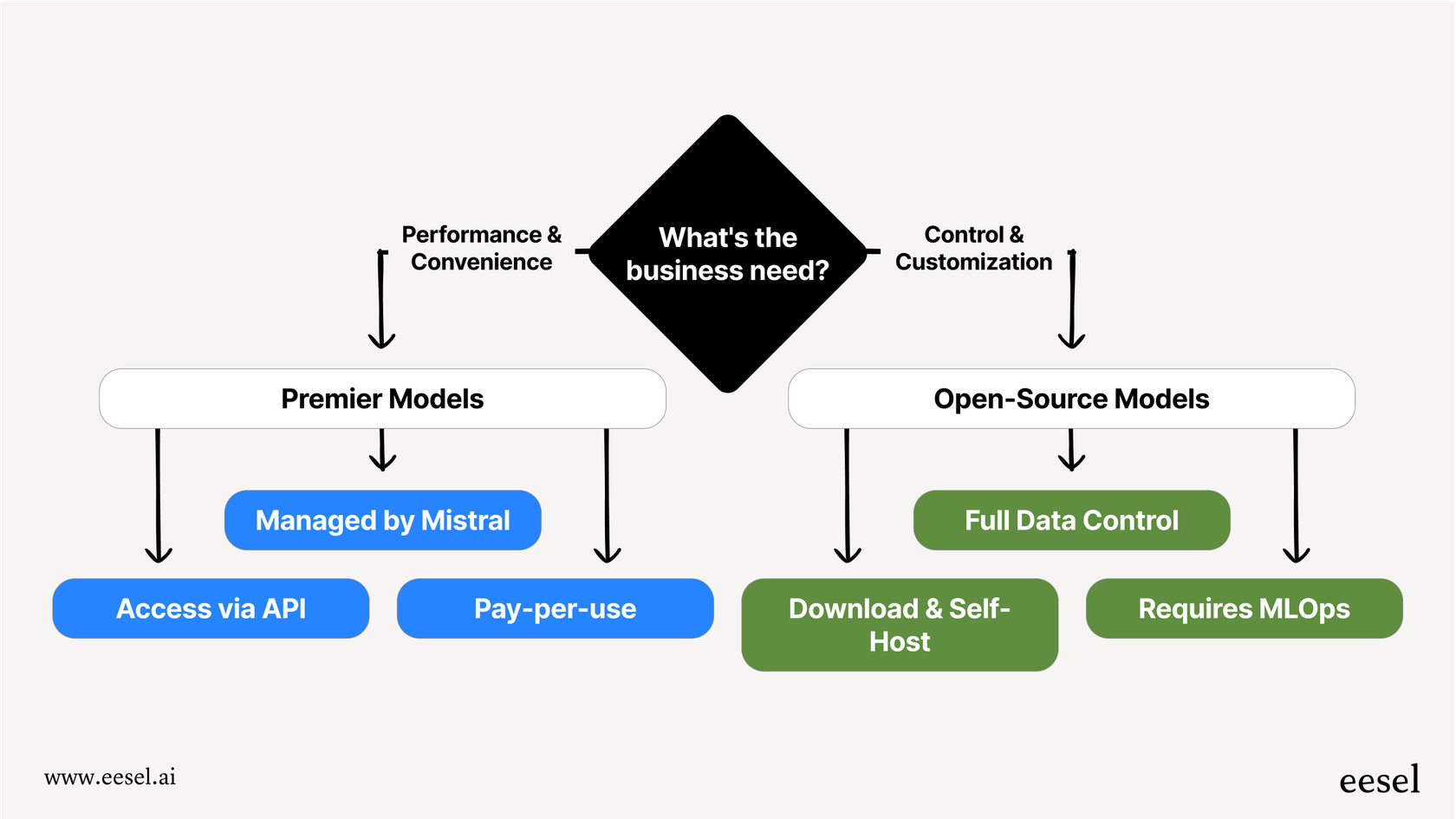

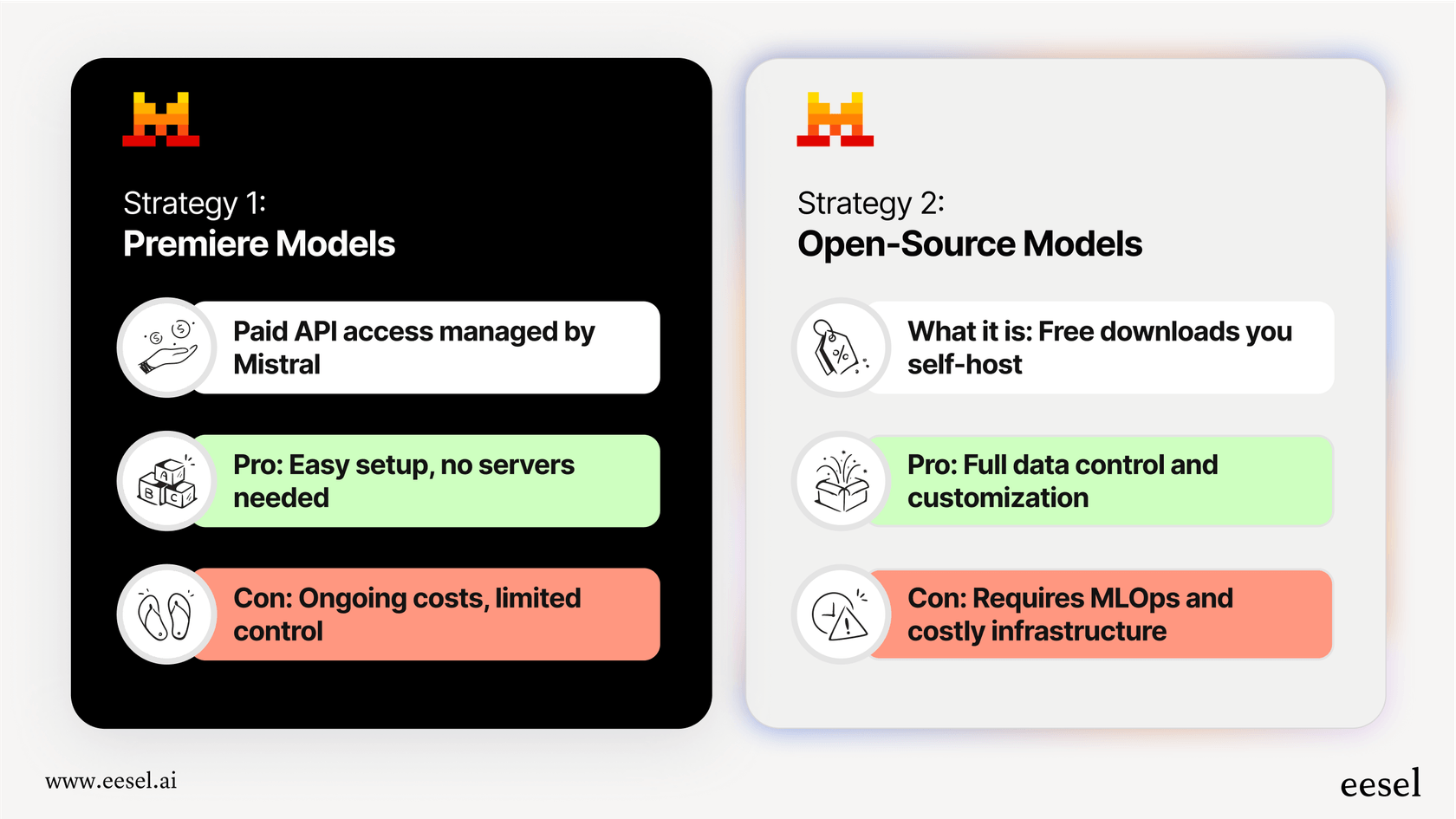

What makes Mistral really interesting is its two-pronged strategy. On one side, they have "premier" models that you pay to use, which are tuned for the best possible performance. On the other side, they release powerful open-source models that anyone can download, tweak, and run on their own hardware. This gives businesses a lot of flexibility, but it also brings up a big question: which option is right for you?

An overview of the new Mistral AI models

Mistral is putting together a whole family of models, with each one designed for a specific job. Instead of a single model that tries to do everything, they’re building specialized tools for tasks ranging from general problem-solving to heavy-duty coding. Let's meet the latest lineup.

The premier Mistral AI new models: Performance and convenience

These are Mistral's commercial models, which you can access through an API. They're built for businesses that need top-tier results without the headache of managing their own servers and hardware.

- Mistral Medium 3: This is their new go-to model. The goal here is to give you top-of-the-line performance without the eye-watering price tag you might see elsewhere. It’s especially good at coding and understanding things in multiple formats (like text and images), making it a solid all-around choice for a lot of business tasks.

- Magistral Medium: If you’ve ever wanted an AI that can "show its work," this is it. Magistral is a model that specializes in reasoning, built for jobs that need a clear, step-by-step logical trail. It’s perfect for things like legal research or financial modeling, where you need to be able to double-check how the AI got its answer.

- Devstral Medium: This one is all about what Mistral has dubbed "agentic coding." It doesn't just write a line of code here and there; it’s designed to act like a software engineering agent that can dig through an entire codebase, edit files, and handle pretty complex development work.

- Codestral 25.01: The name gives it away. This is a specialty model built from the ground up for coding. It's super fast for things like auto-completing code, fixing bugs, and writing tests. Plus, it speaks over 80 different programming languages.
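Since the premier models are reached through an API, it can help to see roughly what a request looks like. The Python sketch below builds a chat request but deliberately does not send it. The endpoint URL and the `mistral-medium-latest` model alias are assumptions to verify against Mistral's current API documentation, and `build_chat_request` is a hypothetical helper name used only for illustration.

```python
import json
import os
import urllib.request

# Assumed endpoint and model alias; verify both against Mistral's API docs.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-medium-latest"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request(
        "Summarize our refund policy in two sentences.",
        os.environ.get("MISTRAL_API_KEY", "YOUR_API_KEY"),
    )
    # Sending is commented out so the sketch runs without a key or network access.
    # with urllib.request.urlopen(request) as response:
    #     reply = json.load(response)
    #     print(reply["choices"][0]["message"]["content"])
    print(request.full_url)
```

Everything around this call is still yours to build: authentication, retries, logging, and deciding what to do with the answer.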

The open Mistral AI new models: Customization and control

These are the models that Mistral gives away for free, often with flexible licenses like Apache 2.0. This means you can download them, modify them, and run them wherever you want.

- Devstral Small 1.1: This is the open-source version of their agentic coding model. It's considered one of the best open models out there for this kind of work. Because it’s released under the Apache 2.0 license, you could fine-tune it on your company's private code to create a developer tool that’s perfectly tailored to your team.

- Magistral Small: The open-source counterpart to Magistral Medium. It gives developers a powerful and transparent reasoning engine they can build on for free.

- Ministral 3B & 8B: These are what Mistral calls its "edge" models. They're tiny and incredibly efficient, which makes them great for running on devices that don't have a ton of power, like a smartphone or a laptop, without needing to be connected to the internet. In fact, Qualcomm is already working with Mistral to get these models onto devices with Snapdragon chips.

A quick summary of the new Mistral AI models

Here’s a simple table to help you keep everything straight.

| Model Name | Type | Primary Use Case | Key Feature |
|---|---|---|---|
| Mistral Medium 3 | Premier | High-performance enterprise tasks | Balances top performance with lower cost |
| Magistral Medium | Premier | Complex, transparent reasoning | Verifiable, step-by-step logic |
| Devstral Medium | Premier | Advanced software engineering agents | Great at exploring and editing codebases |
| Devstral Small 1.1 | Open-Source | Customizable code agents | Top-performing open model on SWE-Bench |
| Codestral 25.01 | Premier | Code generation & completion | Supports 80+ programming languages |
| Ministral 8B | Premier | On-device / Edge AI | Made for local use (like on a mobile phone) |

The business impact of choosing between open-source and premier Mistral AI new models

Mistral's approach gives you a genuine choice, and figuring out the pros and cons is the key to getting it right for your business.

The appeal of open-source Mistral AI new models

On the surface, open-source looks like a slam dunk. The models don't cost anything to download, and they come with some huge perks.

- Control & Privacy: When you host a model yourself, you’re in complete control. Your data stays within your four walls (digital or physical), which is a massive relief for any business that deals with sensitive customer info or operates in a regulated industry.

- Customization: This is the real killer feature. You can take an open-source model and train it on your company’s private data, whether that’s your internal documentation, your codebase, or all your past customer support chats. This lets you build a specialist AI that understands your business inside and out, giving you a real leg up on the competition.

- No Vendor Lock-in: You aren't stuck with one company's API, pricing plans, or rules. You have the freedom to change, deploy, and scale the model however you want.

The reality check: Challenges of self-deploying Mistral AI new models

While the benefits are tempting, the "free" part of open-source can be a bit of a mirage. Going the DIY route has some major strings attached.

- Technical Overhead: Running these models is not like installing an app. It takes a team of skilled engineers who know their way around machine learning operations (MLOps). You also need access to a lot of powerful GPUs, which are expensive and hard to get, not to mention a solid plan for keeping everything updated and maintained.

- Hidden Costs: The model might be free, but the server bill to run it definitely isn't. The costs for hosting, inference (the process of getting an answer from the model), and general upkeep can quickly balloon into thousands, or even tens of thousands, of dollars every month.

- Lack of Enterprise Guardrails: A raw model is basically just a brain in a jar. It doesn't have any of the business sense needed for real-world use. It doesn’t know when to pass a tricky question to a human, how to connect to your help desk, or how to adopt your company's specific tone of voice. You have to build all of that logic yourself.
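To put the hidden-costs point into numbers, here is a back-of-the-envelope estimate in Python. Every figure in it (GPU count, hourly rate, overhead) is a hypothetical placeholder rather than a real quote, and it leaves out engineering salaries entirely, so treat it as a way to plug in your own numbers.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


@dataclass
class HostingPlan:
    gpu_count: int
    gpu_hourly_usd: float        # hypothetical cloud rate per GPU
    monthly_overhead_usd: float  # hypothetical storage, monitoring, etc.


def monthly_cost(plan: HostingPlan) -> float:
    """Always-on GPU spend plus fixed overhead for one month."""
    gpu_spend = plan.gpu_count * plan.gpu_hourly_usd * HOURS_PER_MONTH
    return gpu_spend + plan.monthly_overhead_usd


# Illustrative numbers only: 4 GPUs at $2.50/hour plus $500 of overhead.
plan = HostingPlan(gpu_count=4, gpu_hourly_usd=2.50, monthly_overhead_usd=500.0)
print(f"${monthly_cost(plan):,.2f} per month")  # prints "$7,800.00 per month"
```

Even this modest made-up setup lands in the thousands per month before anyone is paid to keep it running.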

How to apply Mistral AI new models to your support team (the smart way)

So, how do you tap into the power of something like Mistral Medium 3 without having to hire a whole new engineering team? This is where an AI integration platform becomes your best friend, especially for customer support.

The challenge of using raw Mistral AI new models for support

Let's be honest. If you just point a raw AI model at your public help center and cross your fingers, you're probably going to have a bad time. The model has no idea about your internal processes, how to check a customer's order status, or what to do when someone starts getting frustrated.

To make it work, you'd end up building a tangled web of custom code just to manage the basics.

This DIY setup is slow, expensive to put together, and an absolute pain to maintain. Every time you add a new tool or change a process, it means more custom coding.
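For a taste of that custom code, here is a minimal sketch of a single rule: deciding whether a drafted reply should be sent or handed to a human. The trigger phrases, the confidence threshold, and the `DraftReply` and `route` names are all hypothetical, and the confidence score is assumed to come from a scoring step you would have to build yourself.

```python
from dataclasses import dataclass

# Hypothetical trigger phrases and threshold, chosen only for illustration.
FRUSTRATION_PHRASES = ("speak to a human", "this is ridiculous", "cancel my account")
MIN_CONFIDENCE = 0.7


@dataclass
class DraftReply:
    customer_message: str
    answer: str
    confidence: float  # assumed output of your own scoring step, 0.0 to 1.0


def route(draft: DraftReply) -> str:
    """Return 'handoff' when a person should take over, else 'send'."""
    message = draft.customer_message.lower()
    if any(phrase in message for phrase in FRUSTRATION_PHRASES):
        return "handoff"
    if draft.confidence < MIN_CONFIDENCE:
        return "handoff"
    return "send"


draft = DraftReply("This is ridiculous, where is my order?", "It ships Friday.", 0.9)
print(route(draft))  # prints "handoff"
```

And that is one rule. Order lookups, tagging, tone of voice, and help desk connections each need their own version of this, plus someone to keep them all in sync.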

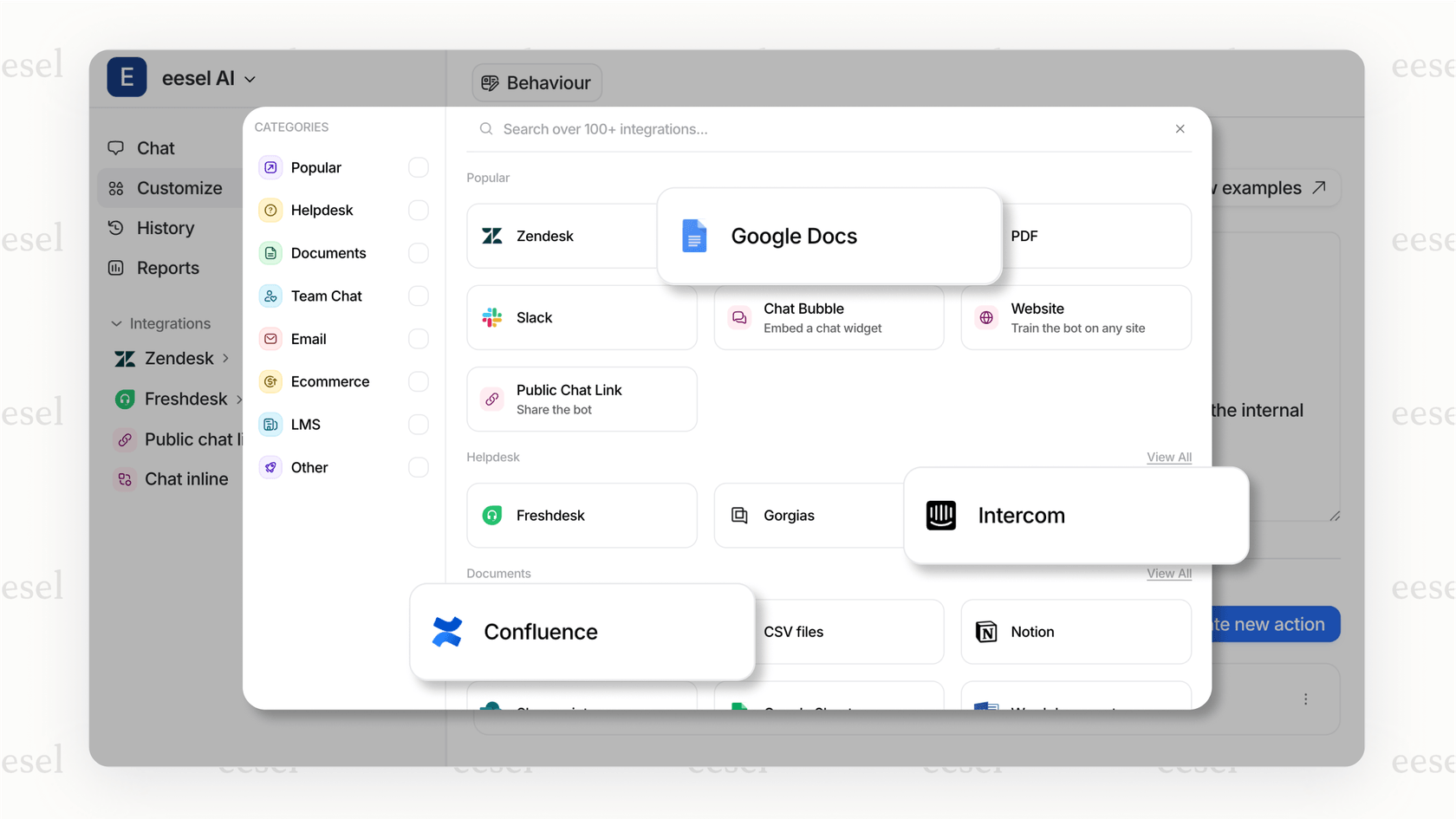

The platform approach: Why an integration layer is crucial

A much saner way to do this is to use a platform that sits on top of powerful models like Mistral's and handles all the messy parts for you. This is exactly what a tool like eesel AI is for. It manages all the complex integrations, business rules, and safety checks, so you can focus on making your customers happy instead of writing code.

Here’s how it sidesteps the problems of the DIY method:

- One-Click Integrations: Instead of spending weeks writing custom API connections, you can link your Zendesk, Freshdesk, or Intercom help desk in a few minutes. You can also have it learn from knowledge sources like Confluence, Google Docs, and Slack just as easily.

- Trains on Your Real Content: eesel AI goes way beyond your public FAQ page. It learns from the data that really matters: your team's past tickets, saved replies (macros), and internal-only documents. This makes sure the answers it gives are accurate, sound like your brand, and actually reflect how your best agents get things done.

- Human-in-the-Loop Controls: You don't have to be a coder to set the rules of engagement. You can use plain English to tell your AI agent when it should reply, when it needs to hand off to a human, and what actions it's allowed to take, like tagging, routing, or closing tickets.

- Simulation Before Scale: Nervous about unleashing an AI on your customers? I don't blame you. The simulation feature in eesel AI lets you test your agent on thousands of your past tickets in a safe environment. You can check its accuracy, see where it gets stuck, and calculate how much time and money it could save you before it ever interacts with a real customer.
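To show the kind of math a simulation run boils down to, here is a small Python sketch that turns a batch of replayed tickets into a resolution rate and an estimated saving. It is not eesel AI's implementation. The `PastTicket` fields, the `summarize` helper, and the hourly cost are hypothetical, and it assumes each replayed ticket has already been marked as resolved or not.

```python
from dataclasses import dataclass


@dataclass
class PastTicket:
    question: str
    resolved_by_ai: bool     # hypothetical label: did the simulated answer pass review?
    handling_minutes: float  # time a human agent originally spent on the ticket


def summarize(tickets: list[PastTicket], hourly_cost_usd: float) -> dict[str, float]:
    """Estimate resolution rate and savings from a batch of replayed tickets."""
    if not tickets:
        return {"resolution_rate": 0.0, "hours_saved": 0.0, "usd_saved": 0.0}
    resolved = [t for t in tickets if t.resolved_by_ai]
    hours_saved = sum(t.handling_minutes for t in resolved) / 60
    return {
        "resolution_rate": len(resolved) / len(tickets),
        "hours_saved": hours_saved,
        "usd_saved": hours_saved * hourly_cost_usd,
    }


# Made-up sample data for illustration.
history = [
    PastTicket("Where is my order?", True, 10),
    PastTicket("How do I reset my password?", True, 20),
    PastTicket("Can I change my plan?", True, 30),
    PastTicket("I was charged twice and I'm furious", False, 45),
]
print(summarize(history, hourly_cost_usd=30.0))
```

The tickets that did not resolve are just as useful as the headline numbers, because they show where the agent needs more content or a handoff rule.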

Choosing the right path for your business

The new models from Mistral AI are genuinely impressive. They're giving businesses some incredible tools and a real choice, whether that's a convenient premier model or a fully customizable open-source one.

But at the end of the day, the magic isn't in the raw brainpower of the model itself. It's in how well you can plug that intelligence into your daily work. For most teams, especially in customer support, the smartest way to leverage this power, without getting bogged down in a massive engineering project, is to use a dedicated AI integration platform.

If you want to see how you can harness the power of the latest AI models for your support team, check out how eesel AI can help you get started in minutes, not months. Start your free trial or book a demo today.

Frequently asked questions

The open-source models like Devstral Small or Magistral Small are free to download, making them the most cost-effective starting point. However, remember to factor in the technical expertise and server costs required to run them yourself, which can be significant.

Mistral has several models focused on coding. Codestral 25.01 is a specialist for tasks like autocompletion and bug fixing, while the Devstral models (Medium and Small) are designed for more complex "agentic coding" where the AI can work across an entire codebase.

For maximum privacy, you should use the open-source models and host them on your own servers. This ensures your sensitive data never leaves your control, unlike using the premier models through an API, where your data is processed by Mistral.

Directly using the models requires technical skill, either through an API (for premier models) or self-hosting (for open-source). This is why the article recommends using an integration platform, which handles all the technical complexity so you can connect the AI to your business tools without writing code.

Generally, the premier models offer higher out-of-the-box performance for a wider range of tasks. The open-source models are incredibly powerful but may require fine-tuning on your own data to achieve peak performance for your specific use case.

Running models like Ministral on-device means they can function without an internet connection, offering faster response times and enhanced privacy. This is ideal for mobile apps or devices where constant cloud access isn't guaranteed or desired.

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.