If you've dipped your toes into the world of AI for customer support, you've almost certainly heard about containment rate. It's often touted as the ultimate metric for chatbot success, promising a simple way to see your cost savings pile up. The logic seems solid: the more chats your bot handles solo, the less you spend on human agents.

But here's the catch: a high containment rate doesn't always mean you have happy customers or a winning AI strategy. It can easily hide user frustration, unresolved problems, and customers who just give up and quietly find another way to get help. When you chase this one number, you can end up optimizing for the wrong things, hurting the very customer experience you're trying to improve.

This guide is about looking beyond that single, often misleading, metric. We'll walk through a better way of measuring AI containment rate and escalation quality together, so you can be confident your AI is actually helping, not hindering.

What is AI containment rate? (And why it's not enough)

First, let's get on the same page. The AI containment rate is the percentage of customer chats that an AI agent handles from start to finish without handing off to a human.

The standard formula looks something like this:

"Containment Rate = (Total Interactions - Escalated Interactions) / Total Interactions * 100"

So, if your bot handles 1,000 chats and only 200 get passed to a human agent, you’ve got an 80% containment rate. Looks great on a dashboard, right? Not so fast.
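As a quick sanity check, the formula above can be written in a few lines of Python. This is a minimal sketch: the function name `containment_rate` and its parameters are illustrative and don't come from any particular analytics tool.

```python
def containment_rate(total_interactions: int, escalated_interactions: int) -> float:
    """Return the share of interactions that were not escalated, as a percentage."""
    if total_interactions <= 0:
        raise ValueError("total_interactions must be positive")
    if not 0 <= escalated_interactions <= total_interactions:
        raise ValueError("escalated_interactions must be between 0 and total_interactions")
    # Multiply before dividing so whole-number inputs give an exact result.
    return 100 * (total_interactions - escalated_interactions) / total_interactions


# The example from the text: 1,000 chats, 200 passed to a human.
print(containment_rate(1000, 200))  # 80.0
```

Notice that nothing in this calculation knows whether the 800 "contained" chats actually ended well. That blind spot is what the rest of this guide is about.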

The biggest flaw here is the assumption that any chat that isn't escalated is a success. This leads to what's known as "bad containment," where the numbers look fantastic, but the reality for your customers is anything but. Bad containment is what happens when:

- A customer gets so annoyed with the bot that they just close the chat window in frustration.
- The AI gives a wrong or unhelpful answer, but the conversation just fizzles out.
- The customer gets stuck in a loop, gives up, and then immediately sends an email or calls. That channel switch doesn't get counted as an escalation.

When you only look at the standard containment rate, you’re missing the full story. It’s an incomplete, and often flattering, picture of your AI's actual performance.

The problem with chasing a high containment rate

Focusing all your energy on pushing that containment number higher can backfire. It creates hidden problems and completely ignores the fact that sometimes, an escalation is the best possible outcome.

The hidden costs of "bad containment"

When your chatbot feels more like a roadblock than a helpful guide, there are real consequences for your business.

- Customer frustration and churn: A clunky, unhelpful bot experience can be way more damaging than making a customer wait a few minutes for a human. It chips away at trust and can send people looking for your competitors.
- More repeat contacts: If a problem isn't really solved, that customer is coming back, and they probably won't be in a great mood. This just inflates your ticket volume and agent workload, which is the exact opposite of what you wanted from automation.
- Misleading performance data: If your team is high-fiving over an 80% containment rate, but half of those chats were actually unresolved issues, your ROI numbers are basically made up. You risk making big decisions based on bad data.

When escalations are a good thing

It's a common myth that every escalation is a failure. In reality, a smart escalation strategy is a sign that your AI system is working exactly as it should.

- Complex or sensitive issues: Some problems just need a human. Things like empathy, creative problem-solving, and nuance aren't really in an AI's wheelhouse yet. A quick, smooth handoff for these issues isn't a failure; it's a win for the customer.
- High-value customers or sales opportunities: Your AI should be smart enough to spot a high-stakes conversation. Whether it's a key customer about to churn or a warm sales lead, getting them to the right person right away is a huge advantage.
- Building relationships: A great interaction with a skilled agent can turn a frustrated customer into a lifelong fan. Forcing them to battle a bot that can't help them achieves the complete opposite.

What most analytics tools don't tell you

The biggest issue with a lot of AI analytics dashboards is that they tell you what happened, but they have no idea why. You might see a 20% escalation rate, but you don't know anything about how the customer was feeling, if they were showing signs of frustration (like rephrasing a question five times), or why they abandoned the chat. Many platforms just don't give you the tools to dig deeper, leaving you to guess about the quality of it all.
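If your platform lets you export chat transcripts, you can approximate one of these frustration signals yourself. The sketch below counts how often a customer sends a message that closely resembles their previous one, using `difflib.SequenceMatcher` from Python's standard library. The function names and both thresholds are assumptions for illustration; you would want to tune them against your own transcripts.

```python
from difflib import SequenceMatcher


def count_rephrasings(customer_messages: list[str], threshold: float = 0.6) -> int:
    """Count customer messages that closely resemble the message right before them."""
    normalized = [message.lower().strip() for message in customer_messages]
    return sum(
        1
        for previous, current in zip(normalized, normalized[1:])
        if SequenceMatcher(None, previous, current).ratio() >= threshold
    )


def looks_frustrated(
    customer_messages: list[str], max_rephrasings: int = 2, threshold: float = 0.6
) -> bool:
    """Flag a chat once the customer has repeated themselves max_rephrasings times."""
    return count_rephrasings(customer_messages, threshold) >= max_rephrasings


chat = ["Where is my order?", "where is my order?", "WHERE IS MY ORDER?"]
print(count_rephrasings(chat))  # 2
print(looks_frustrated(chat))   # True
```

Character-level similarity is a crude proxy. It catches near-verbatim repeats but misses a customer who rewords the question entirely, so treat a flag as a prompt to read the transcript, not as a verdict.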

A better way of measuring AI containment rate and escalation quality

To get a real feel for how your AI is doing, you need to look at more than one number. It’s time to shift from a simple containment metric to a framework that measures true resolution and the quality of every handoff.

Key metrics for "true resolution"

Let’s talk about a new concept: True Resolution Rate. This metric tries to answer the question that really matters. Not "Did the chat end without anyone hitting the escalate button?" but "Was the customer's problem actually solved?"

To get the full picture, you should track a few things together.

| Metric | What it Measures | Why it Matters |
|---|---|---|
| True Resolution Rate | The percentage of issues fully solved by the AI, which you can often check with post-chat surveys or by seeing if the same customer contacts you again. | This separates actual successes from frustrating dead ends. It's the real measure of your AI's effectiveness. |
| CSAT/NPS on AI Interactions | Customer satisfaction scores specifically for chats that were handled only by the AI. | A high containment rate with a low CSAT score is a huge red flag. It means your bot is annoying people. |
| First Contact Resolution (FCR) | Whether the customer's issue was solved in a single interaction, whether that was with the AI or a human agent. | Your AI should help your overall FCR, not create complicated, multi-step support journeys for your customers. |
| Repeat Contact Rate | How often a customer gets in touch again about the same issue within a short period (like 48 hours). | A jump in repeat contacts after an AI chat is a clear sign that the first "resolution" didn't stick. |
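To make two of these metrics concrete, here is a rough sketch of how Repeat Contact Rate and True Resolution Rate could be computed from a contact log. Everything here is an assumption for illustration: the `Contact` fields, the 48-hour window, and the choice to count an AI-only chat as "truly resolved" when the same customer doesn't come back about the same topic within that window. If you run post-chat surveys, you could use those answers instead of, or alongside, the repeat-contact check.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Contact:
    customer_id: str
    topic: str
    started_at: datetime
    escalated: bool = False


def has_repeat(contact: Contact, contacts: list[Contact], window: timedelta) -> bool:
    """True if the same customer contacts again about the same topic within the window."""
    return any(
        other.customer_id == contact.customer_id
        and other.topic == contact.topic
        and timedelta(0) < other.started_at - contact.started_at <= window
        for other in contacts
    )


def repeat_contact_rate(contacts: list[Contact], window: timedelta = timedelta(hours=48)) -> float:
    """Percentage of contacts that were followed by a repeat contact."""
    if not contacts:
        return 0.0
    repeats = sum(has_repeat(c, contacts, window) for c in contacts)
    return 100 * repeats / len(contacts)


def true_resolution_rate(contacts: list[Contact], window: timedelta = timedelta(hours=48)) -> float:
    """Percentage of contacts handled by the AI alone with no repeat contact afterwards."""
    if not contacts:
        return 0.0
    resolved = sum(
        1 for c in contacts if not c.escalated and not has_repeat(c, contacts, window)
    )
    return 100 * resolved / len(contacts)


t0 = datetime(2024, 1, 1, 9, 0)
contacts = [
    Contact("A", "refund", t0),                                       # "contained", but A comes back
    Contact("A", "refund", t0 + timedelta(hours=5), escalated=True),  # the repeat contact, escalated
    Contact("B", "shipping", t0),                                     # solved by the AI
    Contact("C", "login", t0),                                        # solved by the AI
    Contact("C", "login", t0 + timedelta(hours=72)),                  # outside the 48-hour window
]
print(true_resolution_rate(contacts))  # 60.0
print(repeat_contact_rate(contacts))   # 20.0
```

In this sample, four of five contacts were never escalated, so the standard containment rate would read 80%. The true resolution rate is only 60%, because customer A's first chat was "contained" and still left the problem unsolved.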

How to measure escalation quality

Instead of just trying to force the number of escalations down, change your focus to improving the ones that do happen. A great escalation should be a smooth, positive experience for both the customer and your agent.

Here are the key things to track for escalation quality:

- Context Pass-Through: Does the human agent get the full chat history and customer info? Nothing makes a customer angrier than having to repeat their whole story.
- Agent Handle Time (Post-Escalation): If the AI did a good job of gathering info upfront, the agent's handle time on that ticket should be shorter than usual.
- CSAT on Escalated Interactions: A high CSAT score here is a fantastic sign. It means the handoff was seamless and the agent had what they needed to solve the problem fast.
- Agent Re-escalation Rate: How often does the first agent who takes an escalation have to pass it to someone else? A high rate could mean your AI isn't triaging issues correctly.
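The four escalation measures above can be rolled into one small summary. The sketch below assumes you can export one record per escalated ticket; the `Escalation` fields, the `escalation_quality` function, and the baseline handle time you compare against are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Escalation:
    context_passed: bool         # did the agent receive the chat history and customer info?
    handle_time_minutes: float   # agent handle time after the handoff
    re_escalated: bool           # did the first agent have to pass it on again?
    csat: Optional[int] = None   # survey score, if the customer answered


def escalation_quality(escalations: list[Escalation], baseline_handle_time_minutes: float) -> dict:
    """Summarize escalation quality for a batch of escalated tickets."""
    if not escalations:
        raise ValueError("escalations must not be empty")
    total = len(escalations)
    scores = [e.csat for e in escalations if e.csat is not None]
    avg_handle_time = mean(e.handle_time_minutes for e in escalations)
    return {
        "context_pass_through_pct": 100 * sum(e.context_passed for e in escalations) / total,
        "avg_handle_time_minutes": avg_handle_time,
        # Negative means escalated tickets are handled faster than your usual tickets.
        "handle_time_vs_baseline_minutes": avg_handle_time - baseline_handle_time_minutes,
        "avg_csat": mean(scores) if scores else None,
        "re_escalation_pct": 100 * sum(e.re_escalated for e in escalations) / total,
    }


sample = [
    Escalation(context_passed=True, handle_time_minutes=6.0, re_escalated=False, csat=5),
    Escalation(context_passed=True, handle_time_minutes=8.0, re_escalated=False, csat=4),
    Escalation(context_passed=True, handle_time_minutes=10.0, re_escalated=False),
    Escalation(context_passed=False, handle_time_minutes=12.0, re_escalated=True, csat=3),
]
print(escalation_quality(sample, baseline_handle_time_minutes=12.0))
```

CSAT is averaged only over customers who answered the survey, so a low response rate can skew it. It's worth reporting the number of responses next to the score.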

How to improve both containment and escalations

Building a system that delivers both real resolutions and high-quality escalations takes more than just a basic chatbot. You need a platform that’s built on a unified knowledge foundation and gives you robust tools for testing and flexible controls.

Start with a single source of truth

An AI is only as good as the information it can access. When your company knowledge is scattered everywhere, your bot can only give partial answers, which leads to a lot of escalations that could have been avoided.

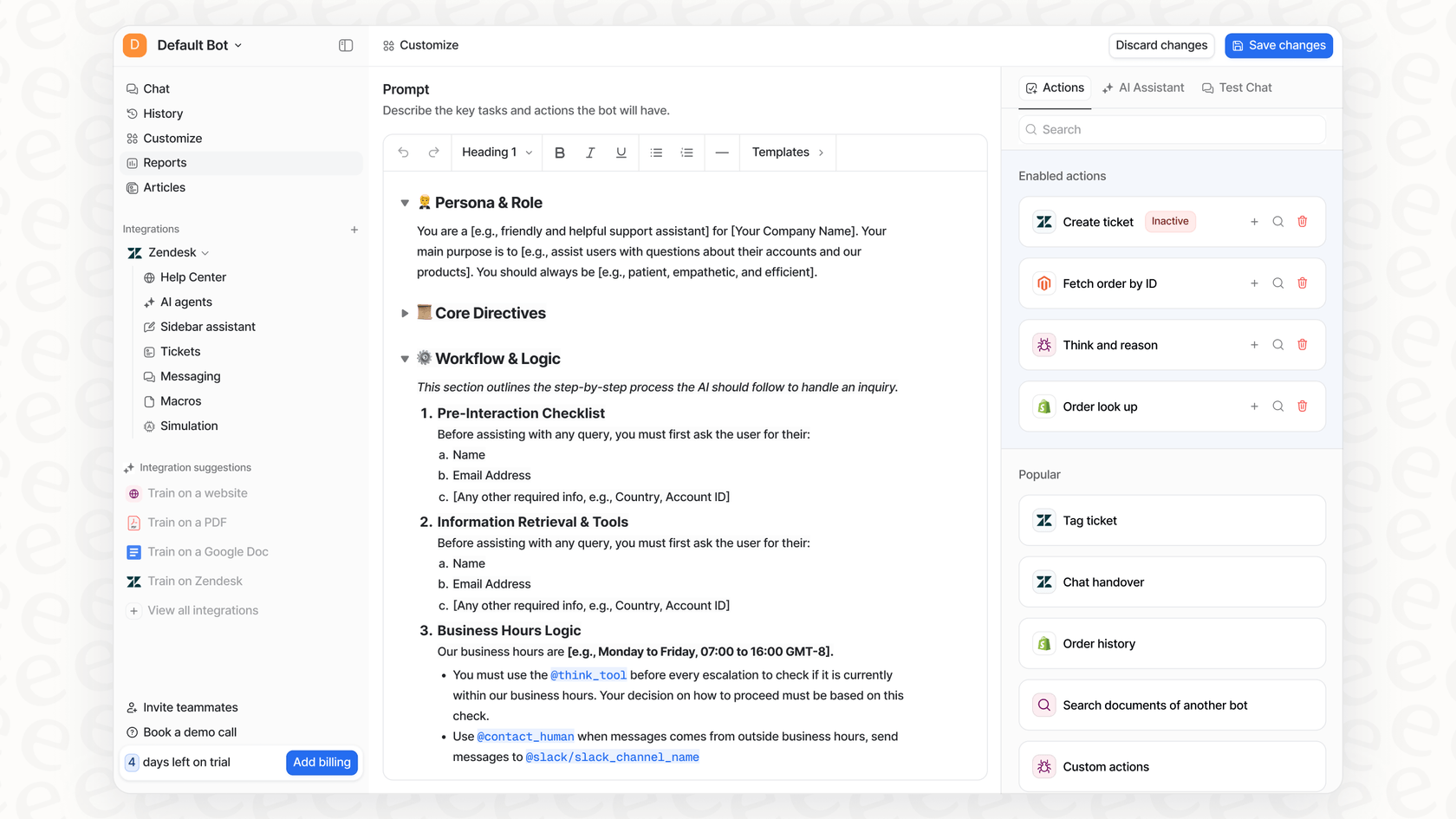

Most AI tools need you to create content from scratch or can only pull from a single help center. With a tool like eesel AI, you can instantly connect all of your knowledge sources, from past tickets and your Confluence pages to Google Docs and Notion. Our AI learns directly from your team's successful resolutions, so its answers are always accurate and sound like you. It even helps you find and fix gaps in your knowledge by suggesting new articles based on what customers are actually asking.

Test and simulate before you go live

Launching a new AI tool without knowing how it will actually perform is a massive gamble. Many platforms pretty much force you to go live to start gathering data, which means your customers are the guinea pigs.

You wouldn't launch a new website without testing it, right? The same goes for your AI. The simulation mode in eesel AI lets you test your setup on thousands of your own past tickets in a safe environment. You get a solid forecast of your resolution rate and can tweak the AI's responses before a single customer ever talks to it. This takes the risk out of the whole process and helps you launch with results you can count on.

Roll out with control

Rigid, all-or-nothing automation doesn't work for today's support teams. You need the freedom to choose what gets automated and how escalations are handled.

Unlike platforms that lock you into one way of doing things, eesel AI puts you in the driver's seat.

- Selective Automation: Use our workflow engine to decide exactly which kinds of tickets the AI should handle. You can start small, with simple and repetitive questions, and have it automatically escalate everything else.
- Custom Actions: Go beyond just answering questions. You can set up your AI to do things like look up order info from Shopify, update ticket fields in Zendesk, or triage issues based on what the customer wrote. This is how you really boost your "true resolution" rate and make sure that when an escalation is needed, it goes to the right team with all the right information.

Why measuring AI containment rate and escalation quality means focusing on outcomes

Measuring AI containment rate and escalation quality together is the only way to really understand the true impact of your automation strategy. A high containment rate means nothing if your customers are walking away unhappy and your agents are left cleaning up the mess.

A great AI doesn't just close chats. It solves problems effectively, makes customers' lives easier, and empowers your human agents by taking care of the routine stuff. This frees them up to use their expertise on the complex issues, armed with all the context they need. The goal isn't to get rid of human contact, but to make every single interaction, whether it's with a bot or a person, as helpful and efficient as possible.

Ready to measure what really matters?

Trying to improve your support with AI can feel like you're flying blind if you don't have the right analytics and controls. eesel AI gives you the simulation tools, detailed controls, and unified knowledge base you need to confidently automate support and improve both your resolution rates and escalation quality.

See how it works or book a demo to see our simulation mode in action.

Frequently asked questions

Focusing solely on containment can hide customer frustration and unresolved issues, leading to "bad containment." Combining it with escalation quality provides a comprehensive view of actual customer experience and true problem resolution, revealing the real impact of your AI.

Ignoring escalation quality while chasing a high containment rate can lead to increased customer churn due to frustration, more repeat contacts for unresolved issues, and misleading performance data that distorts your AI's true ROI and effectiveness.

Start by tracking "True Resolution Rate" using post-chat surveys or repeat contact rates. For escalations, focus on metrics like context pass-through, agent handle time after escalation, and CSAT specifically for escalated interactions to gauge their quality.

Key metrics include True Resolution Rate, CSAT/NPS specifically for AI-only interactions, and First Contact Resolution (FCR). For escalation quality, prioritize Context Pass-Through to agents and CSAT scores for escalated conversations.

A unified knowledge base ensures the AI has access to accurate and comprehensive information, allowing it to provide better answers and reduce unnecessary escalations. It also ensures that when escalations are necessary, agents receive full context, improving quality.

Yes, using simulation tools allows you to test your AI against historical customer tickets in a safe environment. This helps predict resolution rates and refine AI responses, ensuring better performance and higher quality outcomes before going live.

Selective automation enables you to introduce AI gradually, handling simple, repetitive questions first. This approach ensures the AI performs reliably where it's most capable, while allowing for proper escalation of complex issues with full context, improving both metrics over time.

Article by Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.