So, you’ve set up an AI to help with ticket tagging. The dream was a perfectly organized queue where every customer issue gets sorted automatically. The reality? Well, it might be a bit of a mess. When your AI starts slapping the wrong tags on tickets, it’s not just a small hiccup; it’s a recipe for chaos. You end up with misrouted tickets, agents wasting time fixing the AI’s mistakes, and customers waiting longer for a response.

This guide is a straightforward plan for figuring out why your AI is getting it wrong and how to fix it. We’ll walk through how to clean up your data, decide what "good enough" actually looks like, and use the right tools to build a tagging system you don't have to constantly second-guess.

What you'll need to get started

Before we jump into fixing things, let's make sure you have a few things handy. You don't need a PhD in data science, but having the right information and tools makes all the difference.

- Your helpdesk history: You'll want access to a backlog of tickets from your platform, whether it's Zendesk, Freshdesk, or something else. This is where you'll find the patterns.
- An AI tool you can actually control: If your AI is a "black box" with no settings to tweak, you're going to have a bad time. You need a tool that lets you fine-tune how it behaves.
- A clear idea of your goals: Why are you using these tags in the first place? Are they for routing tickets, spotting urgent problems, or running reports on bugs? Knowing the "why" is half the battle.
- A basic handle on accuracy: You can't fix what you can't measure. We'll cover the simple difference between prioritizing fewer wrong tags (precision) and catching every relevant ticket (recall).

Step-by-step: How to reduce AI false positives in ticket tagging

Alright, let's get our hands dirty. Fixing false positives is more of a tuning process than a one-and-done fix. Follow these steps to make your AI tagging more reliable.

Step 1: Define what a ‘false positive’ means for your team

The term "false positive" sounds a bit technical, but for a support team it’s a very real headache. It simply means the AI applied a tag that doesn't belong on a ticket. Before you can reduce these mistakes, your team needs to agree on which ones hurt the most.

For instance, a false positive could be:

- A simple pricing question gets tagged as a ‘Refund Request,’ sending it to the wrong team and causing a minor panic.
- A casual feature idea gets labeled ‘Urgent Bug,’ pulling developers away from genuine emergencies.
- A new customer’s login issue is marked as ‘Spam,’ which is a pretty awful first impression to make.

Your first move is to sit down and list the top 5-10 tagging mistakes that cause the most trouble. This gives you a clear target. A good AI platform should make these errors easy to spot. For example, a tool like eesel AI lets you run a simulation on your past tickets. This shows you exactly how the AI would have tagged them, so you can catch these kinds of mistakes before you ever let it loose on live customer conversations.
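If you can export a sample of tickets where agents corrected the AI's tags, a few lines of Python turn that into a ranked list of the worst offenders. This is a minimal sketch, assuming each ticket record carries both the AI's tags and the tags a human kept; the field names are hypothetical, so map them to whatever your helpdesk export actually uses.

```python
from collections import Counter


def rank_false_positives(reviewed, top_n=10):
    """Count, per tag, how often the AI applied a tag a human reviewer removed.

    `reviewed` is a list of dicts with the hypothetical keys "ai_tags" and
    "human_tags" (iterables of tag names), e.g. from a helpdesk export.
    """
    misfires = Counter()
    for ticket in reviewed:
        # Tags the AI added that the human did not keep count as false positives.
        for tag in set(ticket["ai_tags"]) - set(ticket["human_tags"]):
            misfires[tag] += 1
    return misfires.most_common(top_n)


# Made-up sample data mirroring the examples above.
reviewed = [
    {"ai_tags": {"Refund Request"}, "human_tags": {"Pricing"}},
    {"ai_tags": {"Urgent Bug"}, "human_tags": {"Feature Request"}},
    {"ai_tags": {"Refund Request"}, "human_tags": {"Refund Request"}},
    {"ai_tags": {"Spam"}, "human_tags": {"Login Issue"}},
    {"ai_tags": {"Refund Request", "Urgent Bug"}, "human_tags": {"Pricing"}},
]
print(rank_false_positives(reviewed, top_n=3))
```

The output pairs each tag with its false-positive count, most frequent first, which gives you the top of your "worst mistakes" list.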

Step 2: Analyze your knowledge sources for conflicts and gaps

Think of your AI as a new trainee. If you hand them a stack of confusing, outdated, or contradictory training manuals, you can't be surprised when they make mistakes. Your AI’s performance is a direct reflection of the data it learns from, and false positives are often a sign that its "brain" is a bit cluttered.

Here’s a quick health check you can run on your AI's knowledge:

- Look at your public help center: Do you have articles with conflicting info, like an old pricing page still floating around? Are some instructions just plain confusing?
- Check your internal docs: What about your macros, canned responses, and internal wikis? Are agents all on the same page, or is everyone doing their own thing?
- Dig into old tickets: This is where the real magic is. See how your human agents have been applying tags. You'll often find unwritten rules and inconsistencies that your AI has been trying to learn from.
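One quick way to surface those unwritten rules is to compare how often each agent applies a given tag. A minimal sketch, assuming a ticket export with hypothetical "agent" and "tags" fields; the 0.2 tolerance is an arbitrary starting point.

```python
from collections import defaultdict


def agent_tag_rates(tickets, tag):
    """Return the share of each agent's tickets that carry `tag`.

    `tickets` is a list of dicts with the hypothetical keys "agent" and "tags".
    """
    totals = defaultdict(int)
    tagged = defaultdict(int)
    for ticket in tickets:
        totals[ticket["agent"]] += 1
        if tag in ticket["tags"]:
            tagged[ticket["agent"]] += 1
    return {agent: tagged[agent] / totals[agent] for agent in totals}


def flag_outliers(rates, tolerance=0.2):
    """Agents whose rate differs from the mean of all agents' rates by more than `tolerance`."""
    if not rates:
        return []
    mean_rate = sum(rates.values()) / len(rates)
    return sorted(agent for agent, rate in rates.items() if abs(rate - mean_rate) > tolerance)


# Made-up export: three agents, two tickets each.
tickets = [
    {"agent": "alice", "tags": ["Urgent"]},
    {"agent": "alice", "tags": ["Urgent"]},
    {"agent": "bob", "tags": []},
    {"agent": "bob", "tags": ["Billing"]},
    {"agent": "cara", "tags": ["Urgent"]},
    {"agent": "cara", "tags": []},
]
rates = agent_tag_rates(tickets, "Urgent")
print(rates)                 # {'alice': 1.0, 'bob': 0.0, 'cara': 0.5}
print(flag_outliers(rates))  # ['alice', 'bob']
```

An outlier is a prompt for a conversation, not proof of an error: agents who work different queues will legitimately see different mixes of tickets.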

The idea of manually cleaning up years of accumulated knowledge is enough to make anyone want to give up. It’s a huge project and a common reason teams get stuck. This is where newer AI tools can save you a ton of time. Instead of you doing all the heavy lifting, a platform like eesel AI can connect to all your different knowledge sources, from Confluence and Google Docs to past ticket conversations, and unify them automatically. It can even spot gaps in your documentation and help you write new articles based on what it learns from successful tickets, which helps the AI improve its own source material over time.

Step 3: Use precision and recall to set the right accuracy goals

You don't need to be a statistician to get this right. It all boils down to one simple question: which type of mistake is worse for us?

- Focusing on Precision (Fewer False Positives): This is like your email spam filter. You'd probably rather have a stray spam message land in your inbox (a miss) than have an important email from your boss get buried in the spam folder (a false positive). For critical tags like ‘Billing Error’ or ‘Security Concern,’ you need high precision. When the AI uses these tags, it had better be right.
- Focusing on Recall (Catching Every Potential Case): Now, think about a bank’s fraud detection. They would much rather flag a legitimate purchase by mistake and have you confirm it (a false positive) than let a fraudulent charge slip through (a miss). For tags like ‘Churn Risk’ or ‘VIP Customer,’ you might want the AI to be a little over-cautious, even if it means agents have to clear a few incorrect flags. Missing even one of these tickets is too expensive.
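In code, both measures come from three counts for a single tag: true positives (the AI applied the tag and a human agreed), false positives (the AI applied it and a human disagreed), and false negatives (a human applied it and the AI missed it). Precision is TP / (TP + FP) and recall is TP / (TP + FN). The sketch below assumes you have the set of ticket IDs the AI tagged and the set humans confirmed; returning 0.0 when a denominator is zero is one convention among several.

```python
def precision_recall(predicted, actual):
    """Return (precision, recall) for one tag.

    `predicted`: set of ticket IDs the AI tagged.
    `actual`: set of ticket IDs humans confirmed should carry the tag.
    """
    true_pos = len(predicted & actual)    # AI tagged, human agreed
    false_pos = len(predicted - actual)   # AI tagged, human disagreed
    false_neg = len(actual - predicted)   # human tagged, AI missed it
    precision = true_pos / (true_pos + false_pos) if predicted else 0.0
    recall = true_pos / (true_pos + false_neg) if actual else 0.0
    return precision, recall


# Made-up example: the AI tagged tickets 1-4, humans confirmed 2, 3, and 5.
p, r = precision_recall({1, 2, 3, 4}, {2, 3, 5})
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.50 recall=0.67
```

Here half of the AI's tags were wrong (low precision), while it still caught two of the three real cases (moderate recall).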

Your next task is to sort your most important tags into two buckets: "High Precision Needed" and "High Recall Needed." This simple exercise helps you tell your AI system what you care about most.
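If your AI tool exposes a confidence score for each suggested tag (many do, but not all, so treat this as an assumption), the two buckets map naturally onto two confidence thresholds: a strict one for high-precision tags and a looser one for high-recall tags. The threshold values and the tag-to-bucket mapping below are hypothetical placeholders to tune against your own data.

```python
# Hypothetical thresholds; tune them against your own historical tickets.
THRESHOLDS = {"high_precision": 0.9, "high_recall": 0.5}

# Hypothetical bucket assignments based on the examples above.
TAG_BUCKETS = {
    "Billing Error": "high_precision",
    "Security Concern": "high_precision",
    "Churn Risk": "high_recall",
    "VIP Customer": "high_recall",
}


def apply_tags(scores, default_bucket="high_precision"):
    """Keep only the tags whose confidence clears their bucket's threshold.

    `scores` maps tag name -> confidence in [0, 1], assumed to come from the
    AI tool. Tags missing from TAG_BUCKETS use `default_bucket`.
    """
    applied = []
    for tag, confidence in scores.items():
        bucket = TAG_BUCKETS.get(tag, default_bucket)
        if confidence >= THRESHOLDS[bucket]:
            applied.append(tag)
    return sorted(applied)


print(apply_tags({
    "Billing Error": 0.8,      # below 0.9, so not applied
    "Churn Risk": 0.6,         # above 0.5, so applied
    "Security Concern": 0.95,  # above 0.9, so applied
    "VIP Customer": 0.3,       # below 0.5, so not applied
}))  # ['Churn Risk', 'Security Concern']
```

Unlisted tags fall back to the stricter bucket here, on the reasoning that an unknown tag should have to earn its place.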

Step 4: Fine-tune your AI with better instructions and scoped knowledge

Once you know your goals, you can start giving your AI better directions. A lot of AI systems fail because they’re black boxes; you put data in, tags come out, and you have no idea why. To really cut down on false positives, you need to be in the driver's seat.

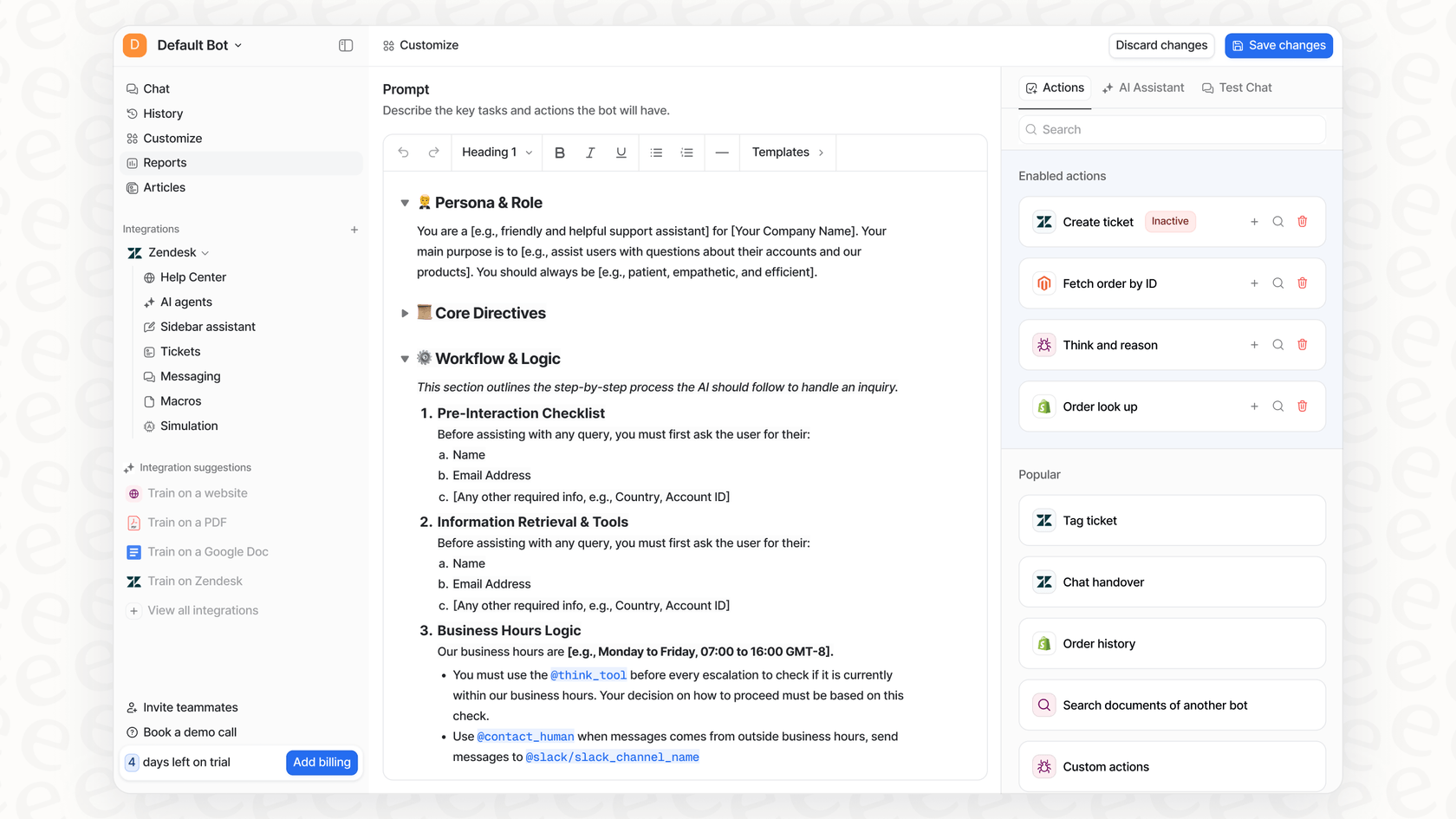

This is where having a customizable workflow makes a huge difference. Instead of being stuck with a one-size-fits-all model, a platform like eesel AI lets you take control.

- Customize the prompt: You can use a simple editor to tell the AI exactly what each tag means. Give it examples. Give it rules for when not to use a tag. For instance: "Do not tag a ticket as 'Urgent' unless the customer uses words like 'down,' 'outage,' or 'cannot access'."
- Scope the knowledge: This is a huge lever for improving accuracy. You can tell the AI to only use a specific set of articles for certain topics. For a billing question, you can restrict the AI to your five official billing articles and nothing else. This stops it from getting confused by old or irrelevant information, which cuts down on mistakes.
- Control the rollout: You don't have to go all-in at once. Start by having the AI tag tickets for just one team or for a single topic. This lets you build confidence and prove it works before you expand.
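Prompt rules are the first line of defense, but if your platform lets you run your own logic on the AI's output (an assumption; not every tool does), the 'Urgent' rule above can also be enforced as a deterministic check. This sketch uses the trigger phrases from that example, plus word boundaries so that, say, "download" does not count as "down". The function name is hypothetical.

```python
import re

# Trigger phrases from the example rule above; \b word boundaries stop
# "download" from matching "down". Matching is case-insensitive.
URGENT_TRIGGERS = re.compile(r"\b(?:down|outage|cannot access)\b", re.IGNORECASE)


def enforce_urgent_rule(ticket_text, tags):
    """Drop an AI-suggested 'Urgent' tag unless the ticket contains a trigger phrase."""
    if "Urgent" in tags and not URGENT_TRIGGERS.search(ticket_text):
        return [tag for tag in tags if tag != "Urgent"]
    return list(tags)


print(enforce_urgent_rule("The dashboard is down for everyone", ["Urgent"]))  # ['Urgent']
print(enforce_urgent_rule("I can't download my invoice", ["Urgent", "Billing"]))  # ['Billing']
```

Keyword checks are blunt: a customer who writes "can't access" instead of "cannot access" would not match this pattern, which is why a rule like this works best as a backstop to the prompt rather than a replacement for it.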

This kind of control makes the AI's behavior predictable and reliable because it’s operating within the guardrails you’ve set.
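Knowledge scoping and a staged rollout both boil down to allowlists. The sketch below models them as a small configuration object; the class, topic names, article IDs, and team names are all hypothetical, since each platform exposes these controls in its own way.

```python
from dataclasses import dataclass, field


@dataclass
class TaggingScope:
    """Hypothetical config: which articles the AI may consult per topic, and
    which teams it may auto-tag tickets for during a staged rollout."""

    allowed_articles: dict[str, set[str]] = field(default_factory=dict)
    enabled_teams: set[str] = field(default_factory=set)

    def articles_for(self, topic, candidates):
        """Filter candidate article IDs down to the allowlist for `topic`."""
        allowed = self.allowed_articles.get(topic)
        if allowed is None:
            return []  # unconfigured topic: consult nothing rather than everything
        return [article for article in candidates if article in allowed]

    def should_auto_tag(self, team):
        """Only teams included in the rollout get AI tagging."""
        return team in self.enabled_teams


scope = TaggingScope(
    allowed_articles={"billing": {"kb-101", "kb-102"}},
    enabled_teams={"billing-team"},
)
print(scope.articles_for("billing", ["kb-101", "kb-999", "kb-102"]))  # ['kb-101', 'kb-102']
print(scope.should_auto_tag("support"))  # False
```

Returning no articles for an unconfigured topic is a deliberately conservative choice: old or irrelevant articles stay out by default, and you widen the scope topic by topic as the rollout grows.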

Test your AI before it ever touches a customer ticket

The biggest fear with any new automation is that it will go haywire in front of your customers. So how can you be sure your carefully tuned AI won't start making weird mistakes on live tickets? The answer is simulation.

Instead of just activating the AI and hoping for the best, you should be able to test it in a safe environment. This means running your AI setup over your historical ticket data to see exactly what it would have done. It's the ultimate pre-flight check.

This is a major feature to look for in an AI platform. While some tools offer vague demos, eesel AI has a powerful simulation mode that gives you real data to work with. Here’s what that looks like in practice:

- Test on your own data: You run the simulation on thousands of your actual past tickets, not some generic examples.
- Get a detailed report card: The simulation report shows you exactly how the AI would have tagged every ticket, pointing out potential false positives and calculating a realistic performance score.
- Launch with confidence: Armed with a forecast of your automation rate and accuracy based on your own tickets, you can turn the AI on knowing what to expect.
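The core idea behind a simulation is simple to express: replay the tagger over tickets that humans already tagged, and compare. This is a minimal, platform-agnostic sketch, not how eesel AI implements its simulation mode; `tagger` stands in for whatever function or API call produces tags in your setup, and the ticket field names are hypothetical.

```python
from collections import Counter
from collections.abc import Callable, Iterable


def simulate(tagger: Callable[[str], set[str]], history: Iterable[dict]) -> dict:
    """Replay `tagger` over historical tickets and compare with the human tags.

    `history` items use the hypothetical keys "id", "text", and "tags".
    Returns per-tag false-positive and miss counts, plus ticket IDs of the
    false positives so someone can review them.
    """
    false_pos, misses = Counter(), Counter()
    examples: dict[str, list] = {}
    for ticket in history:
        predicted = tagger(ticket["text"])
        actual = set(ticket["tags"])
        for tag in predicted - actual:
            false_pos[tag] += 1
            examples.setdefault(tag, []).append(ticket["id"])
        for tag in actual - predicted:
            misses[tag] += 1
    return {"false_positives": dict(false_pos), "misses": dict(misses), "examples": examples}


def naive_tagger(text: str) -> set[str]:
    """A deliberately naive stand-in tagger, for illustration only."""
    return {"Refund Request"} if "money" in text.lower() else set()


# Made-up history of human-tagged tickets.
history = [
    {"id": 1, "text": "How much money is the Pro plan?", "tags": ["Pricing"]},
    {"id": 2, "text": "I want my money back", "tags": ["Refund Request"]},
    {"id": 3, "text": "Please refund my last charge", "tags": ["Refund Request"]},
]
print(simulate(naive_tagger, history))
```

Even this toy run shows the pattern a real report surfaces: ticket 1 is a pricing question misfiled as a refund, exactly the kind of false positive from Step 1.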

Simulation takes the guesswork out of the equation and lets you make decisions based on data, not hope.

Common mistakes to avoid

As you work through these steps, keep an eye out for a few common traps that can trip you up.

- Setting it and forgetting it: Your products change, and so do your customers' problems. An AI model can "drift" over time and become less accurate. You need a simple process for checking in and re-tuning it every so often.
- Only using your official knowledge base: Some of the best information is hidden in past conversations between agents and customers. If you ignore that treasure trove of real-world context, you're leaving accuracy on the table.
- Trying to automate everything at once: Don't go for 100% automation on day one. Pick one or two types of tickets that are easy to define, automate them successfully, and then expand from there. A gradual rollout is the key to getting it right.
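Catching drift does not require anything fancy: spot-check a sample of AI-tagged tickets each week and watch the precision of those checks. This sketch assumes you record, per week, how many checked tags were correct out of how many were reviewed; the 0.9 target and the 20-ticket minimum are hypothetical values to adjust for your volume.

```python
def flag_drift(weekly_reviews, target=0.9, min_samples=20):
    """Flag weeks where spot-checked precision for a tag fell below `target`.

    `weekly_reviews` maps a week label to (correct, total) counts from a
    hypothetical weekly spot check of AI-applied tags.
    """
    flagged = []
    for week, (correct, total) in weekly_reviews.items():
        if total < min_samples:
            continue  # too few checks to say anything reliable
        precision = correct / total
        if precision < target:
            flagged.append((week, round(precision, 2)))
    return flagged


# Made-up spot-check results for one tag.
weekly = {"week 1": (46, 50), "week 2": (44, 50), "week 3": (40, 50), "week 4": (9, 10)}
print(flag_drift(weekly))  # [('week 2', 0.88), ('week 3', 0.8)]
```

A steady slide like weeks 2 and 3 is the cue to revisit your prompts, knowledge scope, and simulation before the errors pile up in the queue.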

The fastest way to accurate AI ticket tagging

At the end of the day, reducing AI false positives isn't about finding a "perfect" algorithm. It’s about having the right process and the right tools. The process is straightforward: figure out what your worst errors are, clean up and focus your data, set clear goals, and test everything before you go live. The right tools are the ones that make this process feel easy, giving you control over the AI’s logic and a risk-free way to simulate its performance.

When you combine a smart process with a flexible tool, you can finally get the benefits of support automation without all the noise.

Get started with eesel AI

With eesel AI, you can be up and running in minutes, simulate results on your own data, and use our workflow engine to get total control over your ticket tagging. You can start small, prove the value, and scale up when you're ready.

Ready to stop chasing down false positives and start automating with confidence? Start your free eesel AI trial today and see how it performs on your tickets.

Frequently asked questions

The initial step is to clearly define what a "false positive" means for your specific team and identify the top 5-10 tagging mistakes that cause the most significant issues. This provides a clear target for improvement.

Your AI learns from your existing data, so cleaning up conflicting or outdated information, and analyzing how human agents used tags in old tickets, directly improves the quality of its learning material. This refined input helps the AI make more accurate tagging decisions.

Precision focuses on minimizing incorrect tags (false positives), ensuring that when a tag is applied, it's highly likely to be correct. Recall, on the other hand, aims to catch all relevant tickets for a specific tag, even if it means some false positives. Understanding which type of error is more detrimental for a given tag helps you prioritize and fine-tune your AI's behavior.

You can fine-tune your AI by customizing its prompts with specific rules and examples for each tag, and by scoping its knowledge. Scoping means restricting the AI to use only a highly relevant subset of articles for certain topics, preventing confusion from irrelevant information.

The most effective method is to use a simulation mode within your AI platform to test it on your own historical ticket data. This allows you to see exactly how the AI would have tagged past tickets, identify potential false positives, and evaluate its accuracy before it interacts with live customer conversations.

It's crucial to avoid a "set it and forget it" mentality. Regularly review your AI's performance, as products and customer issues evolve. Periodically re-tuning the model and analyzing new ticket data will help maintain accuracy and adapt to changes, preventing model drift.


Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.