So, you’ve brought AI into your customer support workflow. That’s a great move. The promise is huge: handle the easy, repetitive questions, free up your team for more interesting work, and give customers the fast answers they want. But there’s a nagging question, isn’t there? How do you actually know if it’s working?

Too many teams are flying blind. They're trying to measure their new AI tools with old, outdated metrics, and it’s just not working. Simply looking at the number of tickets closed or how fast an initial response goes out doesn’t give you the full picture. Is the AI actually solving problems, or is it just creating more headaches for your human agents? Are your customers leaving conversations happier, or just more frustrated?

It’s time to stop chasing vanity metrics. This guide will walk you through a simple, three-part framework for measuring AI performance that actually makes sense. We’ll cover how to get an honest look at your AI's impact so you can make smarter decisions and show everyone that it's worth the investment.

Why traditional support metrics fall short

The biggest change with AI is that you’re not just managing a team of people anymore. You’re running a hybrid operation where AI and human agents work side by side, and that completely flips the script on what "good performance" looks like. If you keep clinging to the old ways of measuring, you’re going to get some seriously misleading results.

Let's talk about a classic metric: Average Handle Time (AHT). For years, the goal was simple: get this number as low as possible. A low AHT meant your agents were efficient. But what happens when you plug in an AI agent that swoops in and handles all the simple, one-and-done questions?

The tickets left for your human team are the tough ones. The complex, multi-layered problems that need a real person to solve them. So, naturally, the AHT for your human agents is going to go up. In a traditional report, that would look like a problem. But in this new world, it’s actually a sign that everything is working perfectly. It means your AI is doing its job, and your skilled agents are putting their brainpower where it matters most. This is exactly why we need a new way to measure things, one that looks at what the AI does on its own and how it helps the rest of the team.
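To make the AHT point concrete, here is a minimal Python sketch that reports handle time separately for AI and human agents instead of as one blended number. The ticket records and the field names `handled_by` and `handle_seconds` are made up for illustration; your help desk export will look different.

```python
from statistics import mean

# Hypothetical ticket records: who resolved the ticket and how long it took.
tickets = [
    {"handled_by": "ai", "handle_seconds": 20},
    {"handled_by": "ai", "handle_seconds": 40},
    {"handled_by": "human", "handle_seconds": 600},
    {"handled_by": "human", "handle_seconds": 900},
]


def aht_by_handler(tickets):
    """Return average handle time in seconds for each handler type."""
    groups = {}
    for ticket in tickets:
        groups.setdefault(ticket["handled_by"], []).append(ticket["handle_seconds"])
    return {handler: mean(times) for handler, times in groups.items()}


segmented = aht_by_handler(tickets)  # one AHT figure per handler type
blended = mean(t["handle_seconds"] for t in tickets)  # single figure across everyone

print(segmented, blended)
```

The blended figure hides the story. Split by handler, you can see the AI clearing simple tickets in seconds while the human number reflects the harder work that is left.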

A modern framework for measuring AI performance in customer support

To really understand how your AI is doing, you need to look at it from three different angles. This framework gives you the complete story, from the daily ticket grind to the impact on the business as a whole.

1. Operational efficiency metrics

This first bucket is all about the heavy lifting. These are the core numbers that tell you how much work your AI is actually taking off your team's plate and how well it's handling the load.

Automated Resolution Rate (ARR)

This is the big one. Automated Resolution Rate (ARR) tells you the percentage of customer issues your AI solves from start to finish, with zero human hand-holding. It’s the clearest way to see how efficient your AI is. A rising ARR means your AI is learning and improving, which in turn frees up your team for more strategic work.
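If you want to calculate ARR yourself, a rough sketch in Python could look like this. It assumes each ticket carries two hypothetical flags, `resolved` and `human_touched`, and it uses all tickets as the denominator. Some teams count only the tickets the AI actually attempted, so treat that choice as an assumption too.

```python
def automated_resolution_rate(tickets):
    """Percentage of tickets the AI resolved with no human involvement."""
    if not tickets:
        return 0.0
    automated = sum(
        1 for t in tickets
        if t["resolved"] and not t["human_touched"]
    )
    return 100.0 * automated / len(tickets)


# Hypothetical sample: two fully automated resolutions out of four tickets.
tickets = [
    {"resolved": True, "human_touched": False},
    {"resolved": True, "human_touched": False},
    {"resolved": True, "human_touched": True},
    {"resolved": False, "human_touched": True},
]

arr = automated_resolution_rate(tickets)
print(f"ARR: {arr:.1f}%")
```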

The problem with a lot of AI platforms is that you have to launch them to find out what your ARR will even be. You’re basically just crossing your fingers and hoping for the best. A much better way is to know your potential results before you ever go live. For example, eesel AI has a simulation mode that lets you test your setup on thousands of your own past tickets. It gives you a pretty accurate forecast of your ARR and cost savings, so you can launch with confidence, not just hope.

First Contact Resolution (FCR)

First Contact Resolution (FCR) has always been a key metric for human teams, and it’s just as crucial for AI. It measures how often the AI solves a customer’s problem in a single interaction. A high FCR means your AI isn’t just fast; it’s accurate. Your customers aren't getting stuck in frustrating loops or having to explain their problem over and over again.
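Here is one way FCR could be computed, as a sketch rather than a standard. It assumes every issue record has a `resolved` flag and an `interactions` count (both hypothetical field names) and treats "solved in a single interaction" as resolved with exactly one interaction.

```python
def first_contact_resolution(issues):
    """Percentage of issues resolved in exactly one interaction."""
    if not issues:
        return 0.0
    first_contact = sum(
        1 for issue in issues
        if issue["resolved"] and issue["interactions"] == 1
    )
    return 100.0 * first_contact / len(issues)


# Hypothetical issues handled by the AI.
issues = [
    {"resolved": True, "interactions": 1},
    {"resolved": True, "interactions": 1},
    {"resolved": True, "interactions": 1},
    {"resolved": True, "interactions": 3},   # solved, but the customer had to come back
    {"resolved": False, "interactions": 2},  # never solved by the AI
]

fcr = first_contact_resolution(issues)
print(f"FCR: {fcr:.1f}%")
```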

A high FCR all comes down to the quality of knowledge the AI can access. If your bot is only trained on a few help center articles, it's going to stumble when it faces real-world questions. This is where the knowledge sources you connect make all the difference. While some tools are limited, eesel AI can improve FCR by training on your past tickets and all your other knowledge sources. By learning from past resolutions in Zendesk or understanding internal docs from Confluence and Google Docs, it gets the context needed to solve problems the first time.

| Metric | Traditional View (Human Agents) | AI-First View (Hybrid Team) |
|---|---|---|
| Automated Resolution Rate | N/A (Manual process) | The main measure of AI efficiency. You want to see this grow. |
| First Contact Resolution | A key agent performance metric. | Measures how accurate and helpful the AI's knowledge is. |
| Average Handle Time | Lower is always better. | Bot AHT should be seconds. Human AHT may go up (and that's okay!). |

2. Customer experience and quality metrics

Being efficient is nice, but it doesn't mean much if your customers are having a terrible time talking to your AI. This category is all about the quality of the support experience and whether people are actually happy with the help they get.

Customer Satisfaction (CSAT) & Customer Effort Score (CES)

These are your go-to metrics for checking the quality of AI interactions. CSAT tells you if customers were happy with the result, and CES tells you how much work they had to put in to get their problem solved. Just remember, a fast but wrong answer from an AI is often worse than a slightly slower, correct answer from a person. If your efficiency numbers are high but your CSAT is low (or your CES is high), that's a major red flag.
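That red-flag check is easy to automate. The sketch below assumes CSAT is surveyed on a 1 to 5 scale (4 and 5 count as satisfied) and that a higher CES means more effort. The thresholds are placeholders to tune for your own team, not industry benchmarks.

```python
from statistics import mean


def csat_percent(ratings, satisfied_from=4):
    """Share of 1-5 survey ratings at or above `satisfied_from`, as a percentage."""
    if not ratings:
        return 0.0
    return 100.0 * sum(1 for r in ratings if r >= satisfied_from) / len(ratings)


def quality_red_flag(arr_percent, csat, avg_effort, *,
                     arr_high=50.0, csat_low=70.0, effort_high=4.0):
    """True when automation is high but satisfaction is low or effort is high.

    All three thresholds are hypothetical defaults.
    """
    return arr_percent >= arr_high and (csat < csat_low or avg_effort > effort_high)


# Hypothetical survey responses from AI-handled conversations.
ai_csat_ratings = [5, 4, 2, 1, 3, 5, 2, 1, 4, 2]
ai_effort_scores = [5, 6, 4, 7, 3]

csat = csat_percent(ai_csat_ratings)
effort = mean(ai_effort_scores)
flag = quality_red_flag(60.0, csat, effort)
print(csat, effort, flag)
```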

Nothing tanks a CSAT score faster than a generic, robotic-sounding AI. Customers can spot it a mile away. eesel AI helps you sidestep this by letting you create a fully customizable AI persona. You can use its prompt editor to define the exact tone of voice you want. You can also use scoped knowledge to make sure it only answers questions it's been trained on, which stops it from going off-script with unhelpful responses.

Qualitative Feedback & Escalation Analysis

Numbers only tell you part of the story. To really get what’s going on, you have to dig into the why. Why are customers giving you low CSAT scores? Why are they asking to speak to a human? Looking at the reasons for escalation is like striking gold. It can show you exactly where the gaps are in your AI's knowledge or where its logic is breaking down.
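Even a simple tally goes a long way here. This sketch assumes you have tagged each escalation with a `topic` and a `reason`. The tags and sample data are invented for illustration.

```python
from collections import Counter

# Hypothetical escalation log, tagged by hand or by your help desk.
escalations = [
    {"topic": "billing", "reason": "missing_knowledge"},
    {"topic": "billing", "reason": "missing_knowledge"},
    {"topic": "billing", "reason": "asked_for_human"},
    {"topic": "shipping", "reason": "wrong_answer"},
    {"topic": "shipping", "reason": "missing_knowledge"},
    {"topic": "login", "reason": "asked_for_human"},
]

by_topic = Counter(e["topic"] for e in escalations)
by_reason = Counter(e["reason"] for e in escalations)

top_topics = by_topic.most_common(2)     # the two topics escalated most often
top_reason = by_reason.most_common(1)[0]  # the single most common reason

print(top_topics, top_reason)
```

In this made-up sample, billing questions and missing knowledge top the lists, which points to where new documentation would help most.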

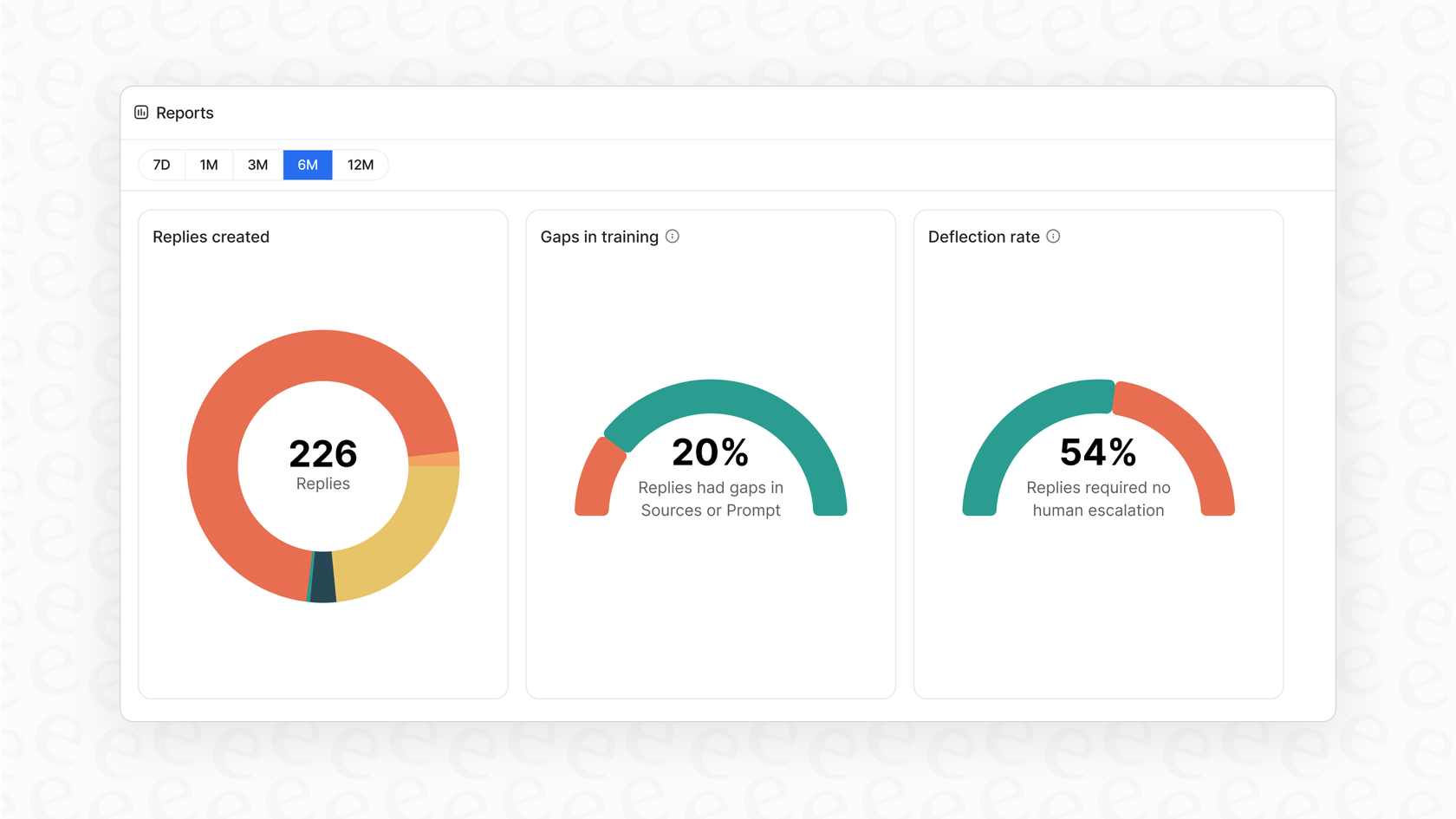

You need a tool that gives you more than a simple dashboard. Lots of platforms will show you what happened, but not why. The actionable reporting in eesel AI is built to pull out these insights for you. It spots trends in why people escalate and points to specific gaps in your knowledge base. This gives you a clear to-do list for making your AI, and your whole customer experience, better.

3. Business impact and ROI metrics

Last but not least, you have to connect your AI's performance to the things your leadership team really cares about: money, time, and people. This is how you prove the return on investment (ROI) for your AI tool.

Cost Per Resolution

This is a simple but really effective metric. The formula is easy: (Total Agent Costs + Total AI Software Costs) / Total Number of Resolutions. If your AI implementation is going well, this number should go down over time. As your ARR gets better, you'll rely less on people for the simple stuff, which lowers your costs.
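Here is the formula as a small Python function, with a before-and-after comparison. The dollar amounts and ticket volumes are invented purely to show the arithmetic.

```python
def cost_per_resolution(agent_costs, ai_software_costs, resolutions):
    """(Total Agent Costs + Total AI Software Costs) / Total Number of Resolutions."""
    if resolutions <= 0:
        raise ValueError("resolutions must be a positive number")
    return (agent_costs + ai_software_costs) / resolutions


# Hypothetical month before AI: agents only.
before = cost_per_resolution(agent_costs=40_000, ai_software_costs=0, resolutions=4_000)

# Hypothetical month after AI: lower agent costs, an AI bill, more resolutions.
after = cost_per_resolution(agent_costs=36_000, ai_software_costs=2_000, resolutions=5_000)

print(f"Before: ${before:.2f}  After: ${after:.2f}")
```

Just make sure both periods cover the same length of time and count resolutions the same way, or the comparison won't mean much.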

But be careful of a common pricing trap. Many AI tools charge you on a per-resolution basis. This model creates unpredictable costs and, weirdly, punishes you for doing well. The more tickets your AI handles, the higher your bill gets. In contrast, eesel AI uses transparent, flat-rate plans. This makes calculating ROI a breeze and keeps your costs predictable, so you can grow without worrying about a surprise invoice.
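To see how the two pricing models behave as volume grows, here is a quick sketch. Both prices are made-up round numbers for illustration and are not any vendor's actual pricing.

```python
def per_resolution_bill(ai_resolutions, price_per_resolution):
    """Monthly bill under a pay-per-resolution plan."""
    return ai_resolutions * price_per_resolution


# Made-up prices for illustration only; not any vendor's real pricing.
PRICE_PER_RESOLUTION = 1.00
FLAT_MONTHLY_FEE = 800.00

# Per-resolution bill at three monthly volumes of AI-resolved tickets.
bills = {
    volume: per_resolution_bill(volume, PRICE_PER_RESOLUTION)
    for volume in (500, 1000, 2000)
}

# Volume at which the two hypothetical plans cost the same.
break_even_resolutions = FLAT_MONTHLY_FEE / PRICE_PER_RESOLUTION

for volume, bill in bills.items():
    print(f"{volume} resolutions: per-resolution ${bill:.2f} vs flat ${FLAT_MONTHLY_FEE:.2f}")
```

With these numbers, the per-resolution plan is cheaper below 800 automated resolutions a month and more expensive above it, and its bill keeps climbing as the AI handles more.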

Agent Productivity & Retention

A great AI tool doesn't just help customers; it helps your agents. By taking over the repetitive tasks, the AI should give your team more time to focus on building relationships, offering proactive support, and solving the really meaty problems. This makes for happier agents, which means less churn, and that is a huge cost saving in itself.

Tools that work alongside your agents are a big part of this. For instance, an AI Copilot, which is a key part of eesel AI, can draft replies for agents in your brand's tone, using info from past tickets and knowledge bases. This makes their jobs faster and less tedious, and helps new hires get up to speed in no time.

How to successfully implement your measurement plan

Knowing what to measure is step one. Step two is actually putting a process in place to do it right. Here’s a simple plan to get you started.

Establish a baseline

Before you switch on any AI, you need to know your starting line. Spend a week or two tracking your current performance across the key metrics we've talked about: FCR, AHT, CSAT, and cost per resolution. This baseline is your source of truth. Without it, you’ll never be able to prove how much of a difference the AI is making.
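Once you have a baseline, comparing against it is simple arithmetic. The sketch below uses invented numbers and hypothetical metric names. Remember that a rising human AHT is expected here and not necessarily bad news.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, as a percentage."""
    return 100.0 * (after - before) / before


# Hypothetical baseline captured before the AI was switched on.
baseline = {
    "fcr": 60.0,
    "human_aht_seconds": 480.0,
    "csat": 80.0,
    "cost_per_resolution": 10.0,
}

# Hypothetical figures for the same metrics after launch.
current = {
    "fcr": 66.0,
    "human_aht_seconds": 600.0,
    "csat": 84.0,
    "cost_per_resolution": 7.5,
}

changes = {name: percent_change(baseline[name], current[name]) for name in baseline}

for name, change in changes.items():
    print(f"{name}: {change:+.1f}%")
```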

Test and deploy with confidence (not anxiety)

One of the biggest fears leaders have is turning on an AI and just watching it cause chaos. With platforms that don’t offer a real testing environment, that fear is totally justified. You're forced into a "big bang" launch where you just have to hope for the best.

eesel AI was designed from the ground up to get rid of that anxiety. The whole process is built around being safe and rolling things out gradually.

- First, use the simulation mode. You can run the AI over thousands of your old tickets to see exactly how it would have responded, what its ARR would have been, and where it has knowledge gaps.
- Then, roll it out in small steps. Instead of turning it on for everyone, you can activate the AI for just one or two specific types of questions, like "password resets" or "order status."
- Monitor and expand. Watch how it performs in a live but controlled setting. As you get more comfortable with its performance, you can slowly give it more types of questions to handle.
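The roll-out-then-expand steps above can be pictured as a simple allowlist with a gate on it. This is a generic sketch, not how eesel AI or any other platform implements it. The topic names, function names, and thresholds are all hypothetical.

```python
def route(topic, enabled_topics):
    """Send a ticket to the AI only if its topic has been switched on."""
    return "ai" if topic in enabled_topics else "human"


def maybe_expand(enabled_topics, candidate, live_arr, live_csat, *,
                 min_arr=50.0, min_csat=80.0):
    """Add one more topic only when live results clear the chosen thresholds.

    The thresholds are placeholder values to tune for your own team.
    """
    if live_arr >= min_arr and live_csat >= min_csat:
        return enabled_topics | {candidate}
    return set(enabled_topics)


# Start small: the AI only handles two question types.
enabled = {"password_reset", "order_status"}

first = route("order_status", enabled)     # handled by the AI
second = route("refund_request", enabled)  # still goes to a person

# Expand only when the live numbers look healthy.
expanded = maybe_expand(enabled, "refund_request", live_arr=62.0, live_csat=85.0)
held_back = maybe_expand(enabled, "refund_request", live_arr=62.0, live_csat=70.0)

print(first, second, sorted(expanded), sorted(held_back))
```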

Create continuous feedback loops

Measuring performance isn't a one-and-done deal; it's something you should always be doing. Use the insights you get from your metrics to constantly make the system better. If you see that the AI keeps struggling with billing questions, that's your cue to add more billing docs to its knowledge base. This cycle of measuring, analyzing, and improving is what turns a good AI into a great one.

AI performance is only as good as the platform it's built on

Measuring AI performance in customer support requires looking at the whole picture: efficiency, customer happiness, and business results. The old ways of measuring just don’t work anymore. True success isn't just about having an AI; it's about having the right platform that gives you the tools to test, measure, and improve it without any of the usual stress.

The best platforms, like eesel AI, are built for this. With a super simple setup that gets you live in minutes, risk-free simulation to see your impact ahead of time, and predictable pricing, eesel AI gives you everything you need to confidently bring AI into your support team.

Ready to see what AI can really do for your team? Start your free trial with eesel AI and get your first performance report in minutes.

Frequently asked questions

Traditional metrics like Average Handle Time (AHT) can be misleading with AI. When AI handles simple queries, the AHT for human agents might increase because they're left with more complex issues, which is actually a sign of success. You need metrics that account for this hybrid operation.

Focus on Automated Resolution Rate (ARR) to see how much work the AI handles completely, and First Contact Resolution (FCR) to gauge its accuracy. Also, closely monitor Customer Satisfaction (CSAT) and Customer Effort Score (CES) for the quality of AI interactions.

Alongside CSAT and CES, actively analyze qualitative feedback and reasons for escalation. This helps uncover specific pain points or knowledge gaps in your AI, ensuring it delivers helpful, and not just fast, responses.

Calculate your Cost Per Resolution to see if AI is lowering operational expenses. Also, track agent productivity and retention, as offloading repetitive tasks can lead to happier, more efficient human teams and reduced churn.

Start by establishing a baseline. Before deploying any AI, track your current FCR, AHT, CSAT, and cost per resolution for a week or two. This baseline provides a clear benchmark to prove the AI's impact later on.

Look for platforms that offer simulation modes to test on past tickets before going live. Then, roll out the AI in small, controlled steps for specific question types. Monitor performance closely and gradually expand its scope as you build confidence.

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.