Let's be real, there's a ton of hype around OpenAI's new image generation models, GPT-Image-1 and its smaller sibling, GPT-Image-1 Mini. But if you've spent any time in developer communities, you've probably seen the other side of the story. The questions pop up constantly: "Why do my API images look so much worse than the ones from ChatGPT?" or "Did the quality just get nerfed overnight?"

It’s a common frustration. You see the incredible potential, but the results you get from the API just don't seem to match what you see in the demos. This guide is here to clear things up. We'll give you an honest, balanced look at what these models can do, dig into why the API and web UI results are so different, break down the pricing, and talk about where they actually make sense in a business workflow.

What are GPT-Image-1 and GPT-Image-1 Mini?

Before we get into the weeds, let's cover the basics. GPT-Image-1 is OpenAI's newest and most powerful model for creating and editing images. They use a fancy term for it: "natively multimodal." All that really means is that it was built from the ground up to understand text and images together, in the same thought process.

Understanding the core technology

Older models often felt like they had separate "brains" for language and vision that were bolted together. GPT-Image-1 uses a single, unified architecture. This helps it grasp context and nuance much more effectively, leading to images that are a better match for your prompts. The whole design is focused on blending inputs (text and images), offering a massive range of artistic styles, and building in safety features from the start.

What's the difference with GPT-Image-1 Mini?

So, where does the Mini version fit in? Simply put, GPT-Image-1 Mini is the faster, more budget-friendly option. It's made for jobs where speed and cost matter more than getting every single pixel perfect.

A good way to think about it is like the difference between a high-end DSLR camera and a really good smartphone camera. The DSLR (GPT-Image-1) gives you incredible quality and fine-grained control, but it's more expensive and takes more work. The smartphone camera (GPT-Image-1 Mini) is quick, easy, and the results are fantastic for most everyday needs. Both are useful tools, just optimized for different things.

Reviewing GPT-Image-1's capabilities and performance

Now that we know what they are, let's talk about how they perform in the real world. This is where we get past the marketing and into the good, the bad, and the frustratingly inconsistent.

Where it shines: Style-hopping and powerful editing

One of the most impressive things about GPT-Image-1 is its sheer versatility. It can jump between photorealistic product shots, artistic watercolors, or clean 3D renders without breaking a sweat. It’s a seriously powerful creative partner.

The editing tools are also a huge leap forward. You can use inpainting to select a specific area of an image and change it with a new prompt, like swapping a shirt color or removing a distracting object. Then there's outpainting, which lets you extend the canvas and add more to the scene. For creative teams, these features can slice a ton of time off workflows that used to involve bouncing back and forth with photo editing software.

Where it struggles: Getting text right via the API

Okay, but here's where the frustration kicks in. A very common complaint you'll see all over the internet is how badly the model handles text when you're using the API. If you've ever tried to generate a product mockup with a brand name on the label or a street sign with specific text, you've probably seen it spit out garbled, nonsensical characters. It's almost like alphabet soup.

Even with all the advancements, getting typography right inside a generated image remains one of the toughest problems for AI. The model has to understand letter shapes, spacing, and context all at once, and for some reason, the API version often falls flat compared to the polished results you see in the ChatGPT interface.

The big community question: Is the quality getting worse?

Beyond just text, many developers have a nagging feeling that the overall image quality from the API has dropped over time. In threads on the OpenAI community forums, you'll find people who built products on the API suddenly reporting that their outputs are "extremely bad and completely off."

This feeling of being "nerfed" is a massive risk for any business that needs consistent results. When the core model you've built a feature around can change its behavior without warning, it makes it incredibly difficult to promise a reliable product to your customers. It’s a tough lesson in the risks of building your business on top of a black box.

API vs. ChatGPT UI: Why are the results so different?

This is the big one. The question that's driving everyone crazy. You use the exact same prompt on the ChatGPT website and through the API, and you get two completely different images. The good news? It's not a bug. The bad news? It's by design, and it's not well documented.

The secret helper: Prompt rewriting and post-processing

Turns out, when you use the ChatGPT web interface, you’re not talking straight to the model. There’s a secret helper in the middle, a kind of AI copilot. This layer often takes your simple prompt and quietly expands it behind the scenes, adding tons of detail about style, composition, and lighting before passing it to the image model.

API workflow: User writes simple prompt → GPT-Image-1 API → Raw image output

ChatGPT UI workflow: User writes simple prompt → AI copilot adds detail → GPT-Image-1 model → Post-processing applied → Polished image output

On top of that, it’s widely believed that the web UI applies some post-processing to the final image. Things like automatic sharpening, color correction, or a contrast boost can make the output look way more polished than the raw, unfiltered image you get straight from the API.
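To make the prompt-rewriting idea concrete, here is a minimal sketch of a local "copilot" that expands a short prompt before it is sent to the API. It is only an illustration: the actual rewriting layer in ChatGPT is not public, and the `PromptDetails` fields, the template, and the `expand_prompt` name are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptDetails:
    """Details a rewriting layer might add; every field name here is hypothetical."""
    style: str = "photorealistic"
    lighting: str = "soft, warm side lighting"
    composition: str = "close-up shot"
    extras: tuple[str, ...] = ()


def expand_prompt(subject: str, details: PromptDetails = PromptDetails()) -> str:
    """Turn a short subject into a fuller prompt using a fixed template."""
    subject = subject.strip().rstrip(".")
    parts = [
        f"A {details.style} image of {subject}.",
        f"Lighting: {details.lighting}.",
        f"Composition: {details.composition}.",
    ]
    # Optional extra details are appended as a comma-separated list.
    if details.extras:
        parts.append("Details: " + ", ".join(details.extras) + ".")
    return " ".join(parts)


if __name__ == "__main__":
    print(expand_prompt("a cat wearing a red hat",
                        PromptDetails(extras=("detailed fur texture",))))
```

A fixed template like this is far cruder than an LLM-based rewriter, but it keeps every API request explicit and reproducible, which helps when you are trying to work out why two outputs differ.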

Tips for getting better results from your API calls

So how do you fight back and get the API to give you what you want? It takes a bit more work, but it's definitely possible.

- You have to be the copilot. Since the API doesn't have that hidden prompt rewriter, you have to do the heavy lifting yourself. Don't just ask for "a cat wearing a red hat." Get specific: "A photorealistic image of a fluffy ginger tabby cat wearing a small, knitted red beanie hat. The lighting is soft and warm, coming from the side. Close-up shot, detailed fur texture." The more detail you give it, the less it has to guess.

- Don't just trust the defaults. One user on Reddit made a surprising discovery: setting the quality parameter to auto sometimes produced better images than high. It doesn't make logical sense, but it shows the value of experimenting.

- Generate in batches. Consistency can be a real issue, so don't expect the first image to be perfect. A standard practice in professional workflows is to generate three or more variations of an image at once (by setting "n=3" in your API call) and then pick the best one. It costs a little more, but your chances of getting a great result go way up.
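The tips above can be combined into one small helper. This is a sketch, not an official recipe: it assumes the `openai` Python package is installed, that `OPENAI_API_KEY` is set in the environment, and that the response carries base64-encoded image data in `b64_json`. The function names `build_image_request` and `generate_batch` and the `candidates` output folder are made up for this example.

```python
import base64
from pathlib import Path


def build_image_request(prompt: str, n: int = 3, quality: str = "auto",
                        size: str = "1024x1024", model: str = "gpt-image-1") -> dict:
    """Assemble keyword arguments for an image generation call."""
    if n < 1:
        raise ValueError("n must be at least 1")
    if quality not in {"low", "medium", "high", "auto"}:
        raise ValueError(f"unsupported quality: {quality!r}")
    return {"model": model, "prompt": prompt, "n": n, "quality": quality, "size": size}


def generate_batch(prompt: str, out_dir: str = "candidates", **options) -> list[Path]:
    """Generate n candidate images and save them as PNG files for manual review."""
    from openai import OpenAI  # imported lazily; requires `pip install openai`

    client = OpenAI()  # assumption: the API key comes from OPENAI_API_KEY
    response = client.images.generate(**build_image_request(prompt, **options))

    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)
    paths = []
    for index, image in enumerate(response.data):
        path = target / f"candidate_{index}.png"
        path.write_bytes(base64.b64decode(image.b64_json))
        paths.append(path)
    return paths
```

Calling `generate_batch("A photorealistic image of a fluffy ginger tabby cat ...", n=3, quality="auto")` would write three candidates to disk so you can pick the best one by eye. Keeping request construction in its own function also makes it easy to compare settings such as "auto" versus "high" side by side.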

Pricing, applications, and the bigger picture for AI in business

Okay, let's talk money and how this technology actually fits into a real business.

A full breakdown of GPT-Image-1 and Mini pricing

The cost comes down to which model you use, the quality you select, and the image size. OpenAI's pricing is per-image, so it's good to know the costs before you start building.

Here's the official breakdown:

| Model | Quality | 1024 x 1024 | 1024 x 1536 | 1536 x 1024 |
|---|---|---|---|---|
| GPT Image 1 | Low | $0.011 | $0.016 | $0.016 |
| GPT Image 1 | Medium | $0.042 | $0.063 | $0.063 |
| GPT Image 1 | High | $0.167 | $0.25 | $0.25 |
| GPT Image 1 Mini | Low | $0.005 | $0.006 | $0.006 |
| GPT Image 1 Mini | Medium | $0.011 | $0.015 | $0.015 |
| GPT Image 1 Mini | High | $0.036 | $0.052 | $0.052 |

Source: OpenAI Pricing Page
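If you plan to generate in batches, it helps to estimate spend up front. The sketch below hard-codes the per-image prices from the table above and multiplies them out. The dictionary keys (`gpt-image-1`, `gpt-image-1-mini`) and the `estimate_cost` function are labels chosen for this example, and the prices will go stale whenever OpenAI updates its pricing page.

```python
from decimal import Decimal

# Per-image prices in USD, copied from the table above (they may be out of date).
PRICES = {
    "gpt-image-1": {
        "low": {"1024x1024": "0.011", "1024x1536": "0.016", "1536x1024": "0.016"},
        "medium": {"1024x1024": "0.042", "1024x1536": "0.063", "1536x1024": "0.063"},
        "high": {"1024x1024": "0.167", "1024x1536": "0.25", "1536x1024": "0.25"},
    },
    "gpt-image-1-mini": {
        "low": {"1024x1024": "0.005", "1024x1536": "0.006", "1536x1024": "0.006"},
        "medium": {"1024x1024": "0.011", "1024x1536": "0.015", "1536x1024": "0.015"},
        "high": {"1024x1024": "0.036", "1024x1536": "0.052", "1536x1024": "0.052"},
    },
}


def estimate_cost(model: str, quality: str, size: str, images: int = 1) -> Decimal:
    """Return the estimated cost in USD for a number of images."""
    # Decimal avoids floating-point rounding noise when summing small prices.
    return Decimal(PRICES[model][quality][size]) * images


if __name__ == "__main__":
    # Three high-quality square images per request, as in the batching tip.
    print(estimate_cost("gpt-image-1", "high", "1024x1024", images=3))
    print(estimate_cost("gpt-image-1-mini", "high", "1024x1024", images=3))
```

At these prices, a batch of three high-quality 1024 x 1024 images costs about $0.50 on GPT-Image-1 versus about $0.11 on Mini, which is worth knowing before you make "n=3" your default.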

Common uses in marketing and product design

With these capabilities, it's no surprise that businesses are getting creative. Some of the most common uses we see are:

- Spinning up on-brand social media content quickly.
- Creating tons of ad variations for A/B testing.
- Visualizing new product ideas before building a physical prototype.
- Mocking up UI elements for apps and websites.

Beyond static images: Solving entire support workflows

Making a cool graphic for a help article is one thing. But what if you could use AI to solve the customer's problem so they never even needed that article in the first place? That's a totally different ballgame, and it's where the real business impact of AI is found.

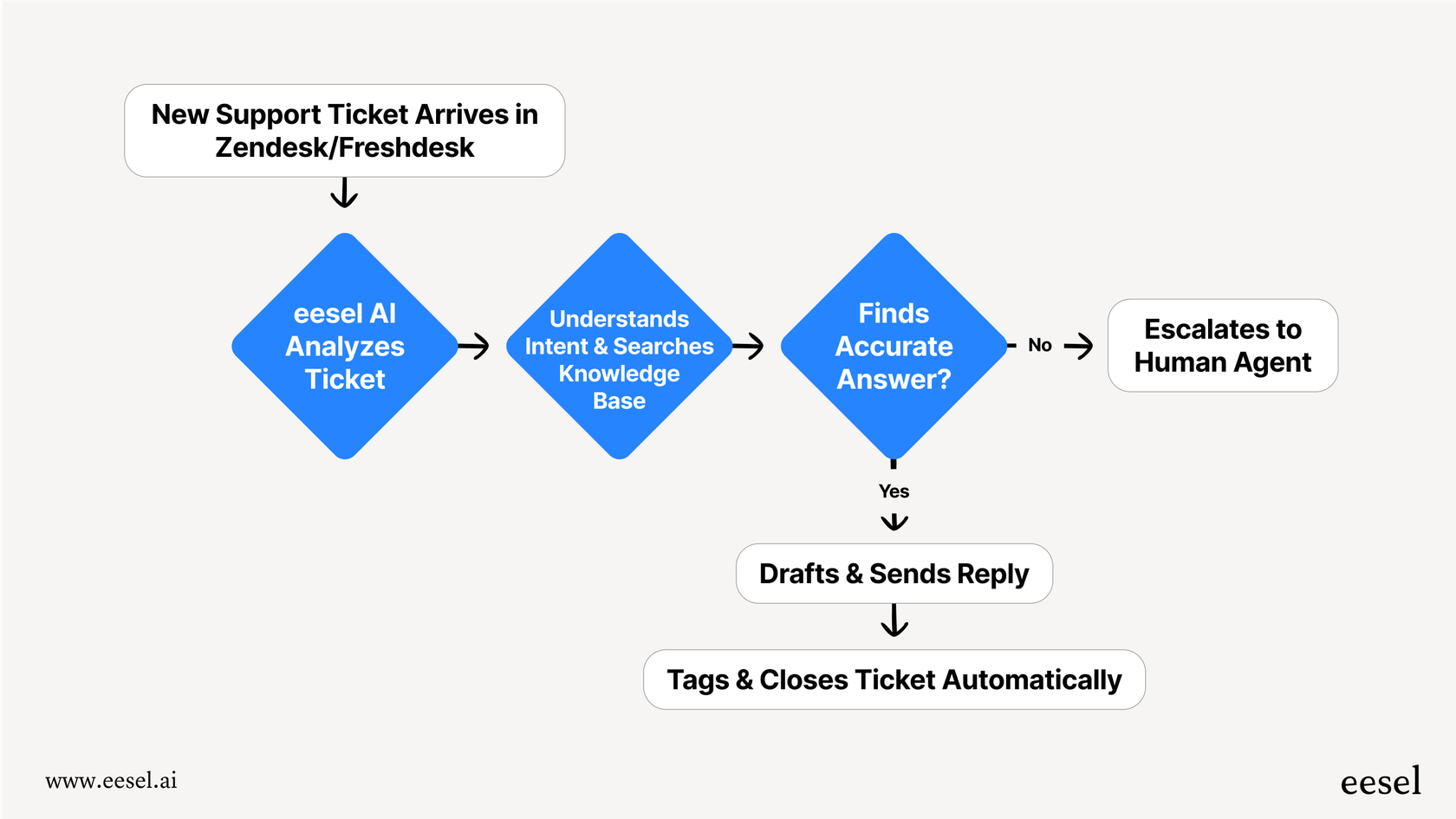

This is where tools like eesel AI come in. Instead of just being a tool for making assets, eesel AI is a full system for automating customer support.

It's different because it's built to solve the problems we just talked about:

- It connects to your brain. eesel AI plugs directly into the tools you already use, like Zendesk, and learns from your knowledge bases in places like Confluence. This means its answers are actually accurate and specific to your business.

- It's ready in minutes. Trying to build a reliable support tool on a raw API is a massive engineering headache. With eesel AI, you can connect your help desk with a click and have a working AI agent running in minutes. It's truly self-serve.

- You get control and predictability. Worried about the inconsistent API quality? A support tool can't afford to be a gamble. eesel AI solves this with a powerful simulation mode. You can test your agent on thousands of your real, historical tickets to see exactly how it will perform before it ever speaks to a live customer. You know its resolution rate from day one.

The final verdict: Is GPT-Image-1 right for you?

So, what's the bottom line? Is GPT-Image-1 worth it? The honest answer is: it depends on your goal.

The good stuff:

- It has incredible creative range and can generate almost any style you can imagine.
- The editing tools are genuinely useful and can speed up creative work.
- The API is easy to get started with for experiments and prototypes.

The headaches:

- The quality between the API and the web UI is frustratingly inconsistent.
- The risk that the model's quality can change without warning is very real.
- It still fumbles specific tasks, especially when it comes to rendering clear text.

Our take is this: GPT-Image-1 is an amazing tool for creative exploration, churning out marketing assets, and rapid prototyping. But when it comes to core business functions like customer support, where you need reliability, consistency, and deep integration, you're much better off with a dedicated platform built for that job.

From generating pictures to solving problems

So there you have it. GPT-Image-1 is a wild, powerful tool, but it’s definitely not a magic wand. Knowing the real difference between the slick web UI and the raw API is the key to getting better results and avoiding a lot of frustration.

At the end of the day, the real win with AI in business isn't just making pretty pictures, it's about building smart systems that solve tangible problems.

If you're ready to move beyond making assets and start automating your customer support with confidence, see how eesel AI can help.

Frequently asked questions

GPT-Image-1 is the more powerful model, offering higher quality and control, suitable for detailed creative tasks. GPT-Image-1 Mini is its faster, more budget-friendly counterpart, optimized for speed and cost where absolute pixel perfection is not the top priority.

The ChatGPT web interface includes a "secret helper" that enhances and expands prompts before sending them to the image model. It also likely applies post-processing steps like sharpening or color correction, which are absent in the raw API output.

Yes, the blog highlights that the API version frequently produces garbled or nonsensical text. Generating legible and contextually appropriate typography inside images remains a significant challenge for the model.

To get better API results, you should provide extremely detailed and specific prompts yourself. Experiment with different parameters, and generate images in batches (e.g., "n=3") to increase your chances of getting a satisfactory output.

Yes, many developers express concern that the API's image quality has declined over time, leading to inconsistent results. This unpredictability poses a significant risk for businesses that require reliable and stable outputs.

GPT-Image-1 is typically more expensive, with prices ranging from $0.011 to $0.25 per image based on quality and size. GPT-Image-1 Mini offers lower costs, generally between $0.005 and $0.052 per image for similar configurations.

These models are well-suited for creative exploration, generating diverse marketing assets, creating ad variations for A/B testing, and rapid prototyping of product or UI concepts. However, for critical, consistent functions like customer support, dedicated platforms are often recommended.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.