It feels like OpenAI is dropping new models every other week, right? Just when you get your head around one, another one shows up with a whole new pricing sheet that looks like a tax form.

If you've ever scrolled through Reddit and seen posts like "Help me understand this API bill," you're definitely not the only one. It’s way too easy to get lost in the jargon, and getting hit with a surprise invoice at the end of the month is no fun for anyone.

That’s exactly why we’re digging into the GPT image 1 mini pricing today. We'll break it down piece by piece so you know exactly what you’re paying for. Getting a handle on these costs is a must, whether you're a developer building an app, a marketer churning out social content, or a business trying to use AI without the finance department knocking on your door.

What is GPT image 1 mini?

So, what even is GPT image 1 mini? Think of it as the more budget-friendly sibling to the big, powerful GPT Image 1. OpenAI basically created it for everyone who needs a solid image generator that won't drain their wallet.

It’s a multimodal model, which is just a fancy way of saying it understands both text and images. You can give it a text prompt, or even an existing image to riff on, and it will generate a new picture for you.

It's the perfect workhorse for tasks where you need a lot of images without paying top dollar for every single one. It's great for cranking out initial concepts, creating social media assets, or building out prototypes where "good enough" is genuinely good enough.

A breakdown of the GPT image 1 mini pricing model

Alright, let's get into the numbers. OpenAI's pricing for this can feel a bit layered at first. There’s the simple cost for each image you make, and then there’s a second, token-based cost that often trips people up. Let's pull both apart.

Per-image costs by quality and resolution

The most straightforward part of the bill is the fee you pay every time the model spits out an image. The price is tied to the quality and resolution you pick, which is nice because it gives you direct control over your spending.

Here’s the official breakdown from the OpenAI Pricing Page:

| Quality | 1024 x 1024 | 1024 x 1536 | 1536 x 1024 |
|---|---|---|---|
| Low | $0.005 | $0.006 | $0.006 |
| Medium | $0.011 | $0.015 | $0.015 |
| High | $0.036 | $0.052 | $0.052 |


From a practical standpoint, "Low" quality is perfect for quick tests and internal drafts when you’re just trying to see if an idea works. "Medium" is a good sweet spot for web content and social media. You should probably only switch to "High" when you need extra detail for things like hero images or important marketing visuals.
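If you'd rather let a script do the lookup, here's a minimal Python sketch that hard-codes the table above into a dictionary. The prices are a snapshot of the numbers quoted here, and the names (`PER_IMAGE_PRICE`, `per_image_cost`) are our own, not part of any OpenAI library.

```python
# Per-image prices for gpt-image-1-mini in USD, copied from the table above.
# Prices change over time; treat these as a snapshot, not a live source.
PER_IMAGE_PRICE = {
    "low":    {"1024x1024": 0.005, "1024x1536": 0.006, "1536x1024": 0.006},
    "medium": {"1024x1024": 0.011, "1024x1536": 0.015, "1536x1024": 0.015},
    "high":   {"1024x1024": 0.036, "1024x1536": 0.052, "1536x1024": 0.052},
}


def per_image_cost(quality: str, size: str, count: int = 1) -> float:
    """Return the per-image fee for `count` images at a given quality and size."""
    try:
        unit_price = PER_IMAGE_PRICE[quality][size]
    except KeyError:
        raise ValueError(f"unknown quality/size: {quality!r}, {size!r}") from None
    return unit_price * count


if __name__ == "__main__":
    # Compare 100 square images at each quality tier.
    for quality in ("low", "medium", "high"):
        cost = per_image_cost(quality, "1024x1024", count=100)
        print(f"100 x {quality:<6} 1024x1024: ${cost:.2f}")
```

Run as a script, it prints $0.50, $1.10 and $3.60 for 100 square images at low, medium and high quality, which makes the jump to "High" easy to see.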

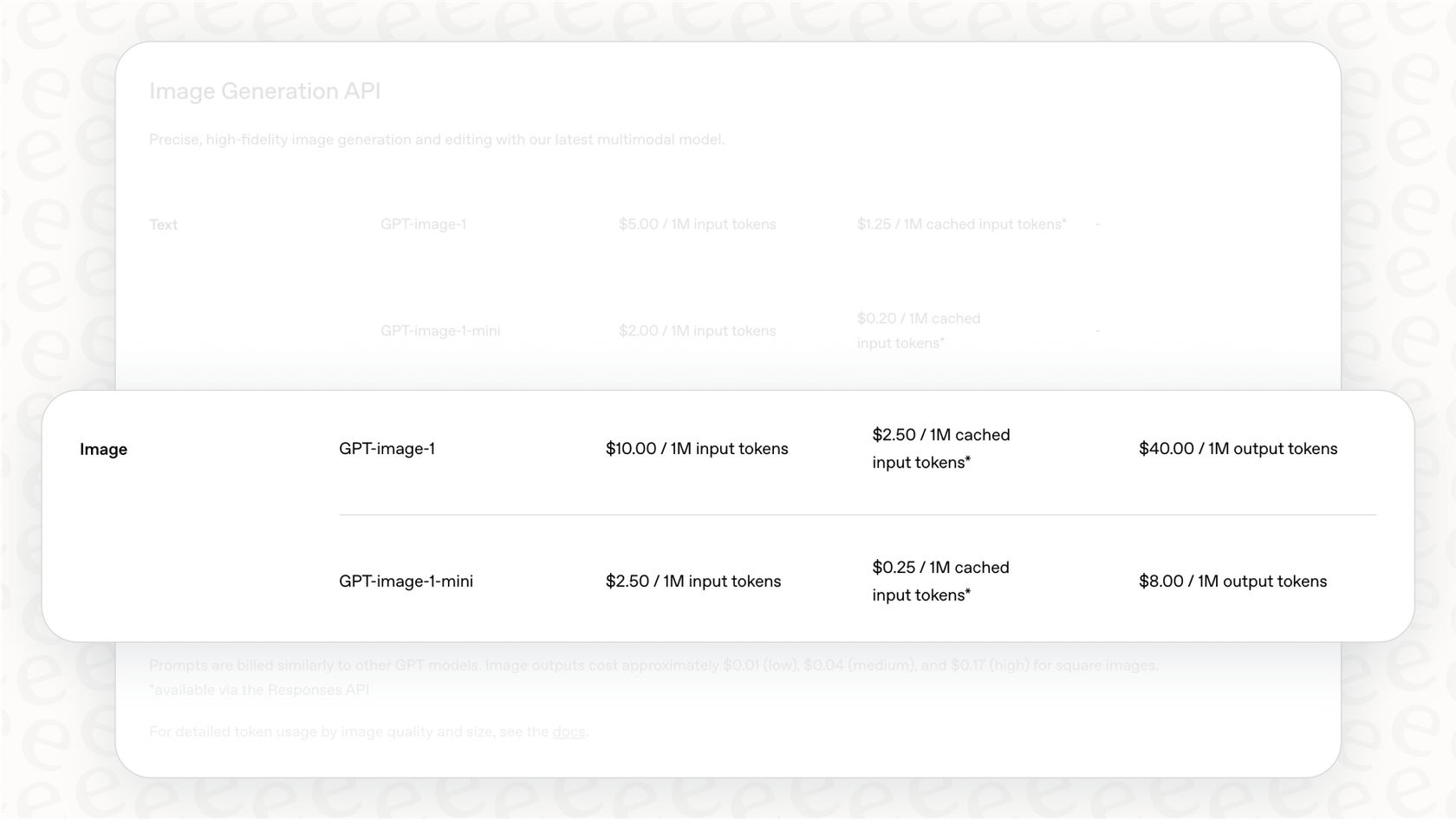

Token-based costs explained

And here’s where it gets a little more confusing. Along with the per-image fee, you also get charged for "tokens," which are tiny bits of data the model has to process. It’s a common headache, but you have to understand it to know what your final bill will look like.

The token costs for "gpt-image-1-mini" are split into three types:

| Token Type | Price per 1M Tokens |
|---|---|
| Text Tokens (Input) | $2.00 |
| Image Tokens (Input) | $2.50 |
| Image Tokens (Output) | $8.00 |

So what do these actually mean?

- Text Tokens: This is just the cost for the model to read your text prompt. A short prompt will cost next to nothing, but it's still on the bill.
- Image Tokens (Input): You’ll only pay this if you upload an image for the model to edit or use as inspiration.
- Image Tokens (Output): This is the one that can sneak up on you. It’s the cost associated with the data that makes up the final image. A low-quality 1024x1024 image uses about 272 output tokens, costing a tiny fraction of a cent. A high-quality image, however, can use thousands of tokens, which will add a more noticeable charge to your bill.
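To turn token counts into dollars, divide by a million and multiply by the rate from the token table. The sketch below does exactly that. The token counts you feed it are up to you (the 272 figure is this article's estimate for a low-quality square image), and `token_cost` is a hypothetical helper, not an SDK function.

```python
# Token prices for gpt-image-1-mini (USD per 1M tokens), from the table above.
TOKEN_PRICE_PER_MILLION = {
    "text_input": 2.00,
    "image_input": 2.50,
    "image_output": 8.00,
}


def token_cost(text_input: int = 0, image_input: int = 0, image_output: int = 0) -> float:
    """Convert token counts into dollars at the per-1M-token rates."""
    counts = {
        "text_input": text_input,
        "image_input": image_input,
        "image_output": image_output,
    }
    return sum(
        tokens / 1_000_000 * TOKEN_PRICE_PER_MILLION[kind]
        for kind, tokens in counts.items()
    )


if __name__ == "__main__":
    # ~272 output tokens: the article's figure for one low-quality 1024x1024 image.
    print(f"${token_cost(image_output=272):.4f}")
    # A ~40-token text prompt costs even less.
    print(f"${token_cost(text_input=40):.5f}")
```

At $8.00 per million output tokens, 272 tokens works out to about $0.0022, roughly a fifth of a cent.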

GPT image 1 mini vs. GPT Image 1: Pricing comparison

So, how much are you really saving by going with the 'mini' version? A lot, actually. When you put it next to the standard GPT Image 1 model, the difference is pretty stark.

| Cost Component | GPT Image 1 Mini | GPT Image 1 (Standard) | Savings |
|---|---|---|---|
| Low Quality Image (1024x1024) | $0.005 | $0.011 | ~55% |
| High Quality Image (1024x1024) | $0.036 | $0.167 | ~78% |
| Text Input Tokens (per 1M) | $2.00 | $5.00 | 60% |
| Image Output Tokens (per 1M) | $8.00 | $40.00 | 80% |

The savings are big across the board. That 80% price cut on output tokens is a huge deal, since that’s often the most unpredictable part of the cost. It makes "gpt-image-1-mini" a really smart choice for a whole lot of projects.
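The Savings column is just one minus the ratio of the two prices. Here's a quick Python check using the numbers from the comparison table (copied from this article, not fetched live; `savings_percent` is our own helper name):

```python
# (mini price, standard GPT Image 1 price) pairs from the comparison table above.
PRICE_PAIRS = {
    "Low quality image (1024x1024)": (0.005, 0.011),
    "High quality image (1024x1024)": (0.036, 0.167),
    "Text input tokens (per 1M)": (2.00, 5.00),
    "Image output tokens (per 1M)": (8.00, 40.00),
}


def savings_percent(mini_price: float, standard_price: float) -> float:
    """Percentage saved by paying the mini price instead of the standard one."""
    return (1 - mini_price / standard_price) * 100


if __name__ == "__main__":
    for component, (mini, standard) in PRICE_PAIRS.items():
        print(f"{component}: {savings_percent(mini, standard):.1f}% cheaper")
```

This prints 54.5%, 78.4%, 60.0% and 80.0%, which round to the figures in the table.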

How to calculate real-world costs and manage your budget

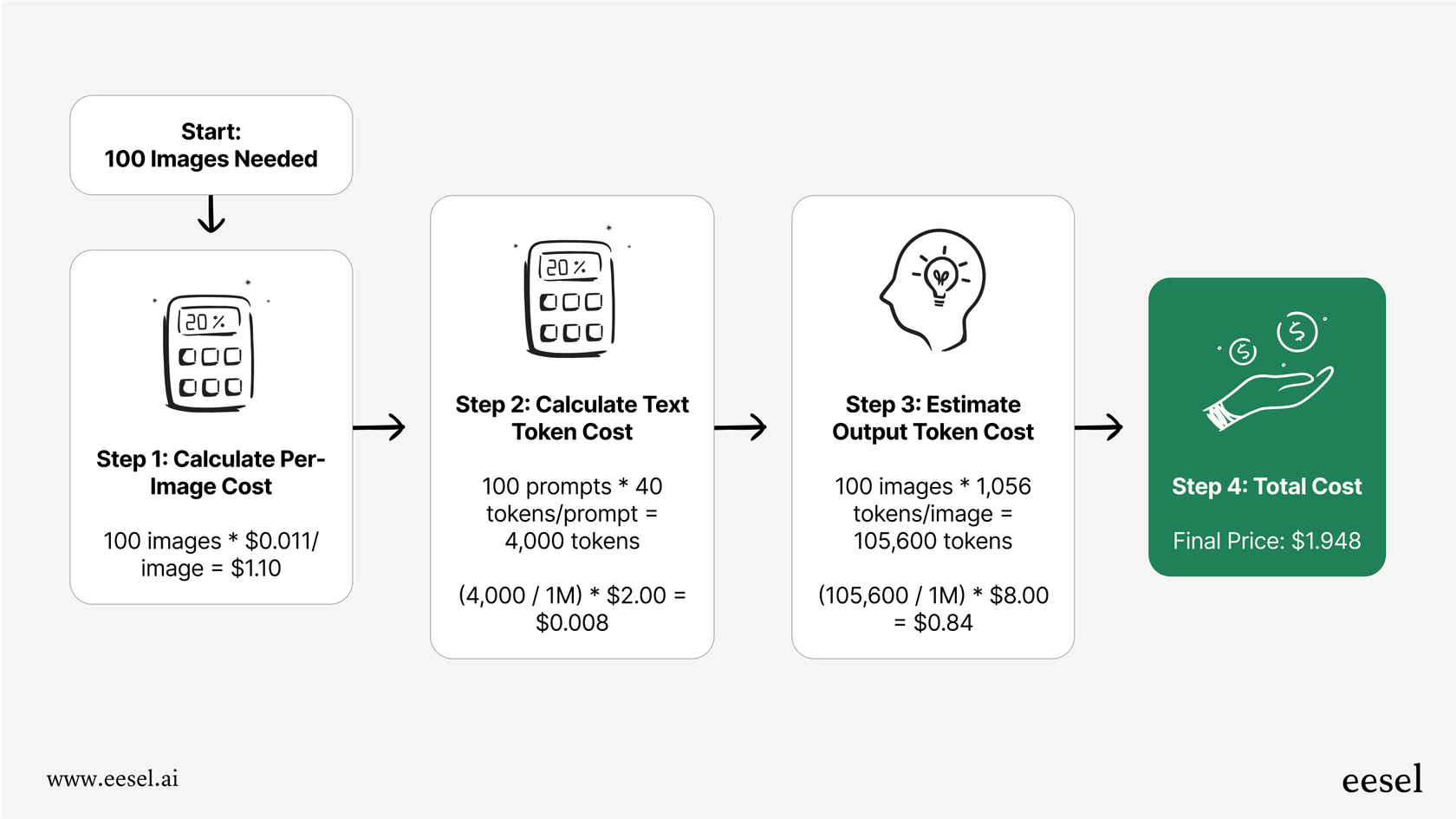

Pricing tables are one thing, but what does this look like for an actual project? Let’s walk through a quick example to connect the dots.

A pricing example: Cost calculation for a common use case

Let's say your marketing team needs 100 images for a social media campaign. They need medium quality, 1024x1024 resolution, and each image will have a unique 30-word prompt.

Here's a back-of-the-napkin calculation for the total cost:

- Step 1: Figure out the per-image cost. This one's easy: it's 100 images at the medium quality price.
  - 100 images × $0.011/image = $1.10
- Step 2: Figure out the text token cost. A 30-word prompt is roughly 40 tokens.
  - 40 tokens/prompt × 100 prompts = 4,000 text tokens
  - (4,000 / 1,000,000) × $2.00 = $0.008. Yep, pretty much nothing.
- Step 3: Estimate the output token cost. A medium-quality 1024x1024 image uses around 1,056 output tokens.
  - 1,056 tokens/image × 100 images = 105,600 output tokens
  - (105,600 / 1,000,000) × $8.00 ≈ $0.84
- Step 4: Add it all up.
  - $1.10 (per-image) + $0.008 (text input) + $0.84 (image output) = $1.948

So, for less than the price of a cup of coffee, the team gets 100 unique images. Not bad at all.
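Here's the same back-of-the-napkin estimate as a small Python function, so you can swap in your own image count and prompt length. It follows this article's breakdown, so it assumes the per-image fee and the output-token charge are billed as separate line items. It also leans on two rough estimates: about 0.75 words per token, and about 1,056 output tokens per medium 1024x1024 image. Check your actual usage dashboard before treating the result as a quote.

```python
# A minimal sketch of the estimate above, using this article's numbers.
# Assumption (from the article): the per-image fee and the output-token
# charge are separate line items that add together.
PER_IMAGE_FEE = 0.011            # USD, medium quality, 1024x1024
TEXT_INPUT_PER_MILLION = 2.00    # USD per 1M text input tokens
IMAGE_OUTPUT_PER_MILLION = 8.00  # USD per 1M image output tokens
WORDS_PER_TOKEN = 0.75           # rough rule of thumb, so 30 words ~ 40 tokens
OUTPUT_TOKENS_PER_IMAGE = 1_056  # article's estimate for medium 1024x1024


def estimate_campaign(images: int, words_per_prompt: int) -> dict:
    """Return each cost component and the total, in USD."""
    prompt_tokens = round(words_per_prompt / WORDS_PER_TOKEN)
    per_image = images * PER_IMAGE_FEE
    text_input = images * prompt_tokens / 1_000_000 * TEXT_INPUT_PER_MILLION
    image_output = (
        images * OUTPUT_TOKENS_PER_IMAGE / 1_000_000 * IMAGE_OUTPUT_PER_MILLION
    )
    return {
        "per_image": per_image,
        "text_input": text_input,
        "image_output": image_output,
        "total": per_image + text_input + image_output,
    }


if __name__ == "__main__":
    for part, dollars in estimate_campaign(images=100, words_per_prompt=30).items():
        print(f"{part:>12}: ${dollars:.4f}")
```

For 100 images with 30-word prompts it prints a total of $1.9528. That's a hair above the $1.948 in the steps only because Step 3 rounds the output-token cost to $0.84.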

What can unexpectedly drive up your costs?

The pay-as-you-go model is flexible, but there are a few traps that can lead to a bigger bill if you're not paying attention.

- Forgetting to change the quality setting: If a tool or script you're using defaults to "high" quality and you don't check, you could be paying more than three times the medium price (and about seven times the low price) for every image without realizing it.
- Trial and error: We all know it takes a few tries to get the prompt just right. Just remember that every single attempt, even the ones you throw away, costs money.
- Super long prompts: The more detailed your prompts, the more input tokens you use. It's a tiny cost per prompt, but it can add up if you're generating thousands of images.
- API errors: Some failed requests may still consume resources, and scripts that retry automatically can multiply your calls. Solid error handling with a cap on retries keeps this from getting out of hand.
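One way to keep trial and error and retries from running away with your budget is to count every attempt against a hard cap and limit how many times a failed call gets retried. Below is a minimal Python sketch of that idea. It pessimistically assumes every attempt may be billed at a flat per-image rate, and `flaky_generate` is a hypothetical stand-in for whatever function actually calls the image API; none of these names come from the OpenAI SDK.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class BudgetExceeded(RuntimeError):
    """Raised when another attempt would push spending past the cap."""


class SpendTracker:
    """Counts every attempt (kept or thrown away) against a dollar cap."""

    def __init__(self, budget: float, cost_per_attempt: float) -> None:
        self.budget = budget
        self.cost_per_attempt = cost_per_attempt
        self.spent = 0.0
        self.attempts = 0

    def charge(self) -> None:
        if self.spent + self.cost_per_attempt > self.budget:
            raise BudgetExceeded(
                f"cap of ${self.budget:.2f} reached after {self.attempts} attempts"
            )
        self.spent += self.cost_per_attempt
        self.attempts += 1


def call_with_retries(fn: Callable[[], T], tracker: SpendTracker,
                      max_retries: int = 2, base_delay: float = 1.0) -> T:
    """Call fn, retrying failures up to max_retries times with exponential backoff."""
    for attempt in range(max_retries + 1):
        tracker.charge()  # assume the worst: every attempt may be billed
        try:
            return fn()
        except Exception:
            if attempt == max_retries:
                raise
            time.sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_generate() -> str:
        # Hypothetical stand-in for a real image request: fails twice, then succeeds.
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("simulated failure")
        return "image-bytes"

    tracker = SpendTracker(budget=1.00, cost_per_attempt=0.011)
    result = call_with_retries(flaky_generate, tracker, max_retries=2, base_delay=0.01)
    print(result, tracker.attempts, f"${tracker.spent:.3f}")
```

Catching every exception is deliberately broad for the sketch; in a real app you'd probably retry only on errors you know are temporary, such as timeouts or rate limits.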

Trying to manage unpredictable API costs is a common growing pain for teams adopting AI. It really shows why platforms with clear, fixed-cost plans can be so valuable, especially for something as important as customer support.

How GPT image 1 mini pricing compares to other models

To give you the complete picture, it helps to see how "gpt-image-1-mini" stacks up against the other big names in image generation.

GPT image 1 mini vs. DALL-E 3

DALL-E 3, another model from OpenAI, is also available through an API. Its pricing is simpler: around $0.04 for a standard 1024x1024 image and $0.08 for an HD one. If you're looking for the most budget-friendly API, "gpt-image-1-mini" easily wins, especially at its low and medium quality levels.

GPT image 1 mini vs. Midjourney

Midjourney plays a different game with a subscription model. Their basic plan is about $10 a month for around 200 images, which comes out to roughly $0.05 per image. If you create a ton of images every month and like a predictable bill, Midjourney could be cheaper. But if you need the flexibility of a pay-as-you-go API or just have lighter needs, "gpt-image-1-mini" gives you more control.

Comparative pricing table

Here’s a quick summary to help you see it all at a glance:

| Model | Pricing Model | Approx. Cost per Image | Best For |
|---|---|---|---|
| GPT image 1 mini | API (Pay-as-you-go) | ~$0.011 (medium) to ~$0.036 (high), 1024x1024 | Flexible API usage for low-to-medium volumes. |
| DALL-E 3 | API (Pay-as-you-go) | ~$0.04 (standard, 1024x1024) | API users who want a simple balance of quality and cost. |
| Midjourney | Subscription | ~$0.03 - $0.05 | High-volume creators who prefer a fixed monthly bill. |
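Whether a flat subscription beats pay-as-you-go comes down to a break-even volume: the monthly fee divided by the per-image price. Here's a rough Python sketch using the approximate prices quoted above. It ignores token charges and any plan limits, and the helper names are our own.

```python
# Approximate prices quoted in this article (USD); snapshots that may change.
MIDJOURNEY_BASIC_FEE = 10.00      # per month
MIDJOURNEY_BASIC_IMAGES = 200     # rough monthly allowance quoted above
PAY_AS_YOU_GO = {
    "gpt-image-1-mini (medium, 1024x1024)": 0.011,
    "DALL-E 3 (standard, 1024x1024)": 0.04,
}


def subscription_per_image(monthly_fee: float, images_per_month: int) -> float:
    """Effective per-image price if you use the whole monthly allowance."""
    return monthly_fee / images_per_month


def break_even_images(monthly_fee: float, per_image_price: float) -> float:
    """Monthly volume at which the flat fee and pay-as-you-go cost the same.

    Below this volume pay-as-you-go is cheaper; above it the flat fee wins,
    provided the plan actually includes that many images.
    """
    if per_image_price <= 0:
        raise ValueError("per_image_price must be positive")
    return monthly_fee / per_image_price


if __name__ == "__main__":
    mj = subscription_per_image(MIDJOURNEY_BASIC_FEE, MIDJOURNEY_BASIC_IMAGES)
    print(f"Midjourney Basic: ~${mj:.2f} per image")
    for name, price in PAY_AS_YOU_GO.items():
        volume = break_even_images(MIDJOURNEY_BASIC_FEE, price)
        print(f"{name}: break-even at ~{volume:.0f} images/month")
```

With these figures, break-even lands at about 909 images a month for the mini model at medium quality and 250 for DALL-E 3, both above the roughly 200 images the Midjourney Basic plan covers. That lines up with the point above: a subscription mostly pays off for high-volume creators on a bigger plan.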


Using GPT image 1 mini pricing to pick the right model and keep AI costs in check

There’s no doubt that GPT image 1 mini is a fantastic, cost-effective tool. It makes AI image generation accessible for projects that would have been too expensive before. The trick is to just make sure you understand both parts of its pricing: the per-image fee and the token costs.

Our advice is pretty simple: for most projects, the 'mini' model is the smartest choice unless you absolutely need the best possible quality from the standard GPT Image 1. Always start on the "low" or "medium" quality settings to keep an eye on your spending, and only bump it up when you really have to.

Getting your image generation costs under control is a great first step. But for a lot of companies, the same AI cost puzzle shows up in other places, especially customer support where unpredictable per-ticket fees can blow up a budget.

That’s where it helps to think differently. At eesel AI, we provide AI agents and copilots for your helpdesk with transparent, predictable pricing. Our plans are based on overall usage, not confusing per-resolution fees, so you can automate support and help your team without dreading the monthly invoice. You can even simulate how our AI would perform on your past tickets to see the ROI upfront. It’s the same idea of controlling costs, just applied where it can make the biggest difference: your customer experience.

Frequently asked questions

GPT image 1 mini pricing refers to the cost structure for OpenAI's budget-friendly image generation model. It includes both a per-image fee, which varies by quality and resolution, and additional token-based costs for input text and image data.

GPT image 1 mini pricing offers significant savings compared to the standard GPT Image 1, with an approximate 55-78% reduction in per-image costs and up to 80% savings on image output tokens. This makes the 'mini' version a much more economical choice for most projects.

The primary factors influencing GPT image 1 mini pricing are the chosen image quality (Low, Medium, High) and resolution, which determine the per-image fee. Output token costs, which also grow with quality and size, can noticeably affect the final bill as well.

Yes, you can control GPT image 1 mini pricing by carefully selecting image quality and resolution. Starting with "Low" or "Medium" quality for drafts and only using "High" for essential visuals helps manage per-image costs, while being mindful of prompt length and API errors can also reduce token expenses.

GPT image 1 mini pricing is generally more budget-friendly than DALL-E 3's API, especially at its lower quality tiers. Compared to Midjourney's subscription model, GPT image 1 mini offers pay-as-you-go flexibility, making it better for lighter, unpredictable needs rather than high-volume, fixed-cost scenarios.

GPT image 1 mini pricing includes both a per-image fee and token-based costs. You pay for text input tokens, image input tokens (if you provide an image), and crucially, image output tokens, which account for the data generated in the final image.

Yes, GPT image 1 mini pricing is well-suited for generating a large volume of images, like for a social media campaign, especially when using medium quality. As the worked example in this post shows, 100 images at medium quality cost under $2, making it very efficient for such tasks.


Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.