So, OpenAI's GPT-5 Pro is here, and it feels like everyone is talking about it. It’s been billed as the most powerful, "research-grade" model out there, promising to push the boundaries of AI reasoning and accuracy for developers and businesses.

But what does that actually mean for you? This article is a straightforward look at what GPT-5 Pro can do when you call it through the API. We'll get past the marketing hype and look at its real-world performance, break down the surprisingly high costs, and figure out where it actually makes sense to use it (and where it doesn't).

It all boils down to one question: is this powerful new tool the right choice for your business? Or is it an expensive engine that’s just too complicated to build with? Let’s dig into the latest reviews of GPT-5 Pro in the API and find out.

What is GPT-5 Pro?

Let's get straight to it: GPT-5 Pro is OpenAI's heavy-hitter model. It's built for jobs where getting it right is everything, demanding the highest level of accuracy and logical thinking.

The "Pro" isn't just a label; it means the model uses a lot more computing power to "think" through a prompt before it gives you an answer.

It’s definitely designed for high-stakes work. It has a massive 400k token context window, and individuals can access it through a $200/month ChatGPT Pro subscription. More importantly for businesses, it’s available as a pay-as-you-go model in the API.

- Best for: High-stakes industries like finance, legal, and healthcare, plus complex software development and deep research.

- Key features: Advanced reasoning, a much lower chance of making things up (hallucinations), and excellent at following instructions.

- Access: Available in the ChatGPT Pro interface for playing around with, and directly via the OpenAI API for building it into your own tools.
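To give a feel for what "directly via the OpenAI API" looks like, here is a minimal sketch. It assumes the official `openai` Python package, an `OPENAI_API_KEY` environment variable, and that the model is exposed through the Responses API under the id `gpt-5-pro`. The helper names `build_request` and `ask` are made up for this illustration, so check OpenAI's current documentation before relying on any of it.

```python
import os


def build_request(prompt: str, model: str = "gpt-5-pro") -> dict:
    """Return keyword arguments for a Responses API call.

    The model id is an assumption for this sketch; confirm it in the docs.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {"model": model, "input": prompt}


def ask(prompt: str) -> str:
    """Send one prompt and return the text of the reply."""
    # Imported lazily so the rest of the sketch works without the package.
    from openai import OpenAI  # requires `pip install openai`

    client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
    response = client.responses.create(**build_request(prompt))
    return response.output_text
```

Keeping the payload in its own function makes it easy to log or unit test what you send before you spend anything on a real call.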

Performance and benchmarks

I’ve seen some reviews describe GPT-5 Pro's performance as "jagged," and that feels about right. While it's breaking records on a lot of academic tests, it isn't automatically "better" at everything. It’s a specialist tool, brilliant for deep, analytical work but often overkill for simpler chats where a faster, cheaper model would be just fine.

Let's look at what those benchmark scores actually mean for your business.

Reasoning and math breakthroughs

If you need an AI that's good with numbers and logic, this is probably it. It's getting near-perfect scores on advanced math benchmarks like AIME and HMMT. For instance, recent benchmarks from Vellum.ai show it hitting a perfect 100% on the AIME 2025 test when allowed to use Python tools.

For you, that means you can lean on it more for things like financial analysis, data modeling, and scientific research. Its performance on reasoning tests like GPQA, which uses questions at a PhD level, shows it makes fewer logical mistakes when you ask it to summarize dense, technical material.

State-of-the-art coding capabilities

For anyone writing code, GPT-5 Pro looks pretty impressive. It's leading the pack on coding benchmarks like SWE-bench, which tests how well an AI can solve real-world issues from GitHub. Users are reporting that it writes more coherent code, produces fewer bugs on the first try, and can tackle complex software architecture prompts that older models would fumble.

But it's not all smooth sailing. Developers in Reddit threads are quick to point out a pretty big limitation: the API has a 49k token limit per message. If you’re working with a large codebase, that’s a real headache. You can't just feed it an entire repository for analysis. So you're forced to use workarounds like Retrieval-Augmented Generation (RAG), which can sometimes undermine the very performance you're paying a premium for.
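If you do run into a per-message limit like the one those developers describe, the simplest workaround is to split the input into pieces that each fit. The sketch below is one way to do that, not a definitive approach. It assumes a rough average of 4 characters per token, which is only a heuristic (a real tokenizer would be more accurate), and the function names are hypothetical.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; assumes about 4 characters per token."""
    return int(len(text) / chars_per_token) + 1


def chunk_by_token_budget(lines: list[str], max_tokens: int) -> list[str]:
    """Greedily pack whole lines into chunks of at most max_tokens (estimated).

    A single line that is larger than the budget still becomes its own
    chunk, so very long lines need extra handling.
    """
    chunks: list[str] = []
    current: list[str] = []
    current_tokens = 0
    for line in lines:
        line_tokens = estimate_tokens(line)
        # Start a new chunk when adding this line would exceed the budget.
        if current and current_tokens + line_tokens > max_tokens:
            chunks.append("\n".join(current))
            current, current_tokens = [], 0
        current.append(line)
        current_tokens += line_tokens
    if current:
        chunks.append("\n".join(current))
    return chunks
```

You would call it with something like `chunk_by_token_budget(source.splitlines(), max_tokens=40_000)` to leave headroom under the limit. Keep in mind that the model then sees each chunk in isolation, which is exactly the trade-off described above.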

Reliability and safety: A new standard

What's really turning heads is GPT-5 Pro's reliability. It has a dramatically lower rate of making things up or getting facts wrong, especially on sensitive topics. On HealthBench, a benchmark for medical questions, its error rate is just a fraction of what we saw with previous models.

But just having a smarter model doesn't magically fix everything. How do you make sure that accuracy is used safely, stays true to your brand's voice, and is tailored to your business's specific knowledge? You still need a smart way to apply that accuracy.

The real cost: What the reviews say

Most reviews of GPT-5 Pro in the API mention the price, and yeah, it’s a bit of an eye-opener. Its power comes at a cost that makes you stop and think rather than casually upgrade.

You can get access in two ways, each with its own cost model.

The ChatGPT Pro plan

For individuals or small teams, the $200/month per-user plan gives you interactive access. It’s perfect for high-value, one-off tasks like deep research or detailed content creation. But it wasn't built for integrating into applications, and paying per user gets incredibly expensive if you want to roll it out to a team, like for customer support.

API pricing and hidden costs

If you're building applications, you'll use the API, and this is where costs can get a bit wild. According to data from OpenRouter, the pricing is a huge leap from older models: $15 for every 1 million input tokens and a whopping $120 for every 1 million output tokens.

Here’s a quick comparison with other popular models:

| Model | Input Cost / 1M Tokens | Output Cost / 1M Tokens |
|---|---|---|
| GPT-5 Pro | $15.00 | $120.00 |
| GPT-4o | $2.50 | $10.00 |
| Claude 4.1 Opus | $15.00 | $75.00 |
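To see what those numbers mean per request, here is a small sketch of the arithmetic using the prices from the table above. The dictionary keys are just labels for this example, not official model ids, and the token counts in the note below are made-up illustration values.

```python
# (input price, output price) in US dollars per 1 million tokens,
# copied from the comparison table above.
PRICES_PER_MILLION = {
    "gpt-5-pro": (15.00, 120.00),
    "gpt-4o": (2.50, 10.00),
    "claude-4.1-opus": (15.00, 75.00),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in dollars of a single request at the listed prices."""
    in_price, out_price = PRICES_PER_MILLION[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


def monthly_cost(model: str, input_tokens: int, output_tokens: int,
                 requests_per_month: int) -> float:
    """Cost in dollars of many identical requests over a month."""
    return request_cost(model, input_tokens, output_tokens) * requests_per_month
```

Under these assumptions, a request with 3,000 input tokens and 800 output tokens comes to about $0.141 on GPT-5 Pro versus about $0.0155 on GPT-4o. At 10,000 such requests a month, that is roughly $1,410 versus $155. If the model's internal reasoning is billed as output tokens, the real output count could be well above the visible reply length, so treat this as a lower bound.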

Here’s the thing, though: that token cost is just the beginning. To build a real, working application with the GPT-5 Pro API, you also have to factor in the cost of engineering time for prompt design, workflow logic, knowledge integration, and endless testing.

This is why thinking about cost differently can be a big deal. Instead of paying per token, platforms like eesel AI offer clear, predictable plans that don't charge you for every single resolution. This is a lifesaver for teams in customer support, where the number of tickets can swing wildly from day to day. You get the benefits of a top-tier model without the fear of a massive surprise bill.

Practical use cases and challenges

The raw power of GPT-5 Pro is great for certain jobs where precision is everything and the high cost is worth it: deep research, financial modeling, legal document analysis, and generating complex code.

But for something fast-moving and dynamic like customer support, using the raw API is like getting a Formula 1 engine dropped on your doorstep with a note that says "good luck." The engine is amazing, but you still need to build the chassis, the steering, and find a driver who knows what they're doing. And this is where things usually grind to a halt.

Implementation challenges

- Connecting your knowledge: GPT-5 Pro starts as a blank slate. It has no idea about your company's return policy, how to troubleshoot your product, or what a customer said last week. Getting that information from places like Confluence, Google Docs, or your Zendesk history means building and maintaining your own complex RAG systems.

- Defining what it can do: The model is great at writing text, but it can't triage a ticket, add the right tags, or look up an order in Shopify by itself. Every single one of those "actions" needs to be coded, tested, and looked after by your engineering team.

- Testing it all safely: How do you test a powerful and expensive AI on live customer chats without causing chaos? With the raw API, there's no "practice mode." You’re pretty much testing in a live environment, which is both risky and expensive.
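To make the "actions" point concrete, here is a minimal sketch of the kind of glue code your team would own. It assumes you have prompted the model to reply with a JSON object such as `{"action": "tag_ticket", "arguments": {...}}`. That format, the action names, and the placeholder handlers are all hypothetical, and real handlers would call your helpdesk or store APIs.

```python
import json
from typing import Callable

# Allow-list of actions the model is permitted to trigger.
ACTIONS: dict[str, Callable[..., dict]] = {}


def action(name: str):
    """Decorator that registers a function as an allowed action."""
    def register(fn: Callable[..., dict]) -> Callable[..., dict]:
        ACTIONS[name] = fn
        return fn
    return register


@action("tag_ticket")
def tag_ticket(ticket_id: str, tag: str) -> dict:
    # Placeholder: a real version would call your helpdesk's API.
    return {"ticket_id": ticket_id, "tagged": tag}


@action("lookup_order")
def lookup_order(order_id: str) -> dict:
    # Placeholder: a real version would query your store's API.
    return {"order_id": order_id, "status": "unknown"}


def dispatch(model_output: str) -> dict:
    """Parse the model's JSON request and run it only if it is allow-listed."""
    try:
        request = json.loads(model_output)
    except json.JSONDecodeError:
        return {"error": "model output was not valid JSON"}
    if not isinstance(request, dict):
        return {"error": "expected a JSON object"}
    name = request.get("action")
    handler = ACTIONS.get(name) if isinstance(name, str) else None
    if handler is None:
        return {"error": f"unknown action: {name!r}"}
    try:
        return handler(**request.get("arguments", {}))
    except TypeError as exc:
        # Wrong or missing arguments from the model end up here.
        return {"error": f"bad arguments: {exc}"}
```

Because `dispatch` is plain code, you can also feed it recorded model outputs from old tickets and check the results offline, which is one low-risk way to test before anything touches a live customer.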

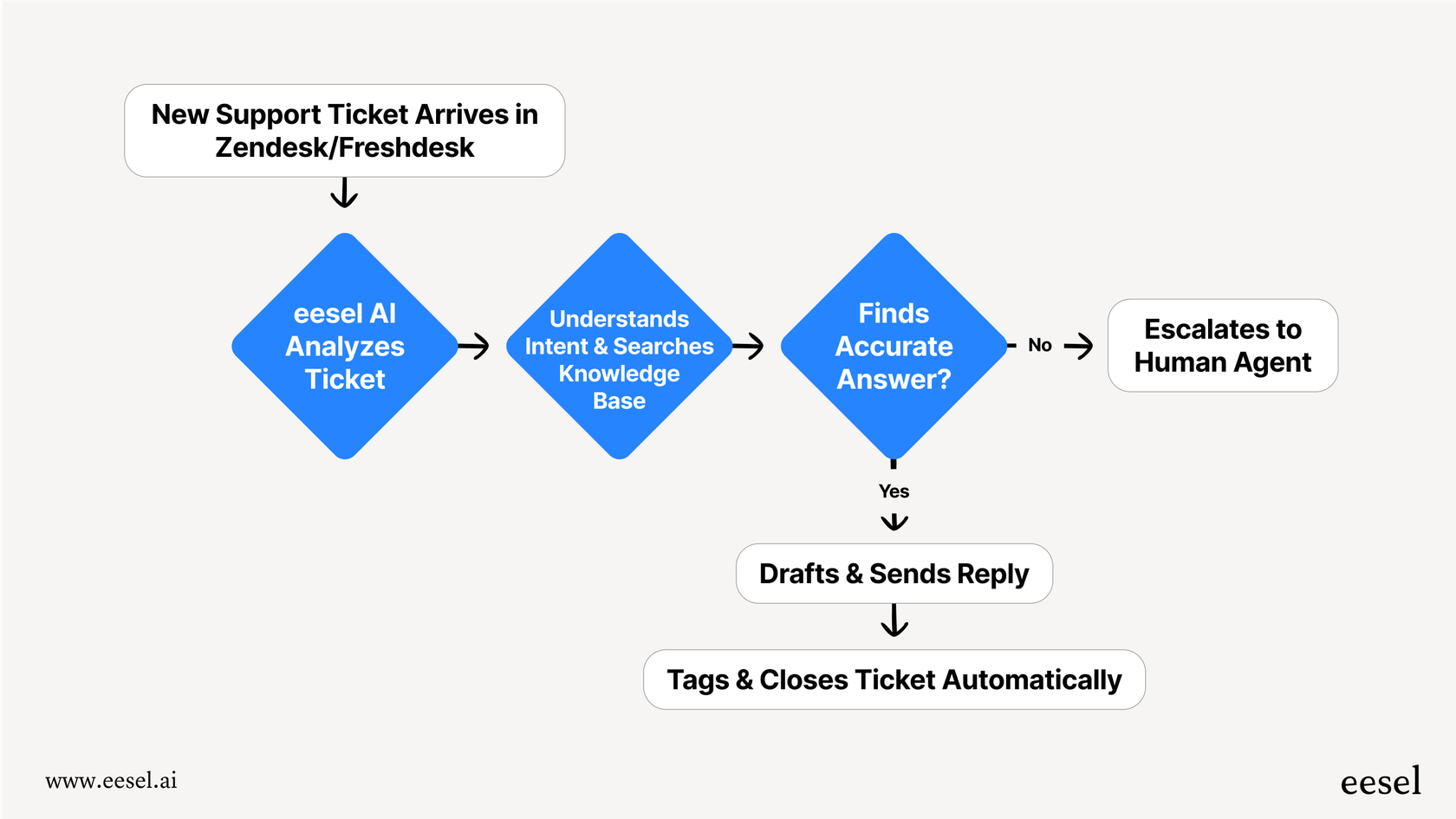

This is the exact gap that tools like eesel AI are designed to fill. Think of eesel AI as the rest of the car, the part that makes the powerful engine useful for support teams.

Instead of a months-long build, you can solve these problems right away:

- Unified knowledge: With one-click integrations, you can securely connect all your knowledge sources in a snap. eesel AI even trains on your past tickets to automatically learn your brand's voice and common solutions.

- Customizable actions: Our no-code workflow builder lets you map out exactly how you want the AI to behave. You can create rules for when to escalate a ticket, who to send it to, or how to check an order status through another tool.

- Simulation mode: This is especially useful. Before you go live, you can run your AI setup on thousands of your past tickets. It gives you a clear forecast of how it will perform and what its resolution rate will be, so you can launch with confidence.

- Go live in minutes: The whole idea is to get you up and running in minutes, completely on your own. It turns a massive engineering project into a simple setup process.

A powerful engine that needs a vehicle

There's no doubt GPT-5 Pro in the API is a huge step up in AI capability. It's a seriously powerful engine. But for most companies, especially for a job like customer support, that raw power is tough to handle on its own because of the high cost and technical work involved.

To really get the most out of models like this, you need a system that tames that complexity for you. That's what platforms like eesel AI do. They provide the vehicle, turning raw AI power into a reliable, affordable, and fully controllable support automation engine that you can get running in minutes.

Ready to put the world's most powerful AI to work for your support team without the engineering headache? Try eesel AI for free.

Frequently asked questions

GPT-5 Pro is highlighted for its "research-grade" power, offering unparalleled reasoning, accuracy, and a dramatically lower rate of hallucinations. It excels in tasks requiring deep analytical thinking, making it suitable for high-stakes industries.

Reviews praise its near-perfect scores on advanced math benchmarks and its state-of-the-art coding capabilities, solving real-world GitHub issues. It also makes fewer logical mistakes on PhD-level reasoning tests.

For businesses, API pricing is significant: $15 per 1 million input tokens and $120 per 1 million output tokens. This is substantially higher than other popular models like GPT-4o.

It's ideal for deep research, financial modeling, legal document analysis, and complex code generation where precision is critical. However, for simpler, faster interactions, its high cost and slower speed might make it overkill.

Key challenges include connecting it to proprietary knowledge bases, defining specific actions it can perform, and safely testing its behavior in live environments. These often require significant engineering effort to overcome.

Yes, the reviews suggest that platforms like eesel AI can help. These tools provide a "vehicle" that manages complexity, integrates knowledge, enables no-code workflow building, and offers predictable pricing, making powerful AI more accessible and affordable.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.