GPT-5 Pro in the API: A practical guide for support teams

Kenneth Pangan

Stanley Nicholas

Last edited October 9, 2025

Expert Verified

Of all the recent AI news, the arrival of OpenAI's GPT-5 Pro has definitely made some waves. It’s being sold as their most powerful reasoning model yet, a serious tool for tackling really complex problems. But what does using GPT-5 Pro in the API actually mean for your business, especially if you want to level up your customer support?

If you're asking that question, you're in the right place. We’re going to cut through the hype and give you a practical look at what GPT-5 Pro is, its key features, the surprisingly high costs, and the real-world headaches of trying to build a support tool directly with the API. Let's dig into when it makes sense to build from scratch and when it's smarter to buy a solution.

What is GPT-5 Pro in the API?

Let’s get one thing straight: GPT-5 Pro isn't just another version of ChatGPT. It's OpenAI's top-tier large language model, built specifically for complex tasks that require the AI to "think" through multiple steps before giving an answer.

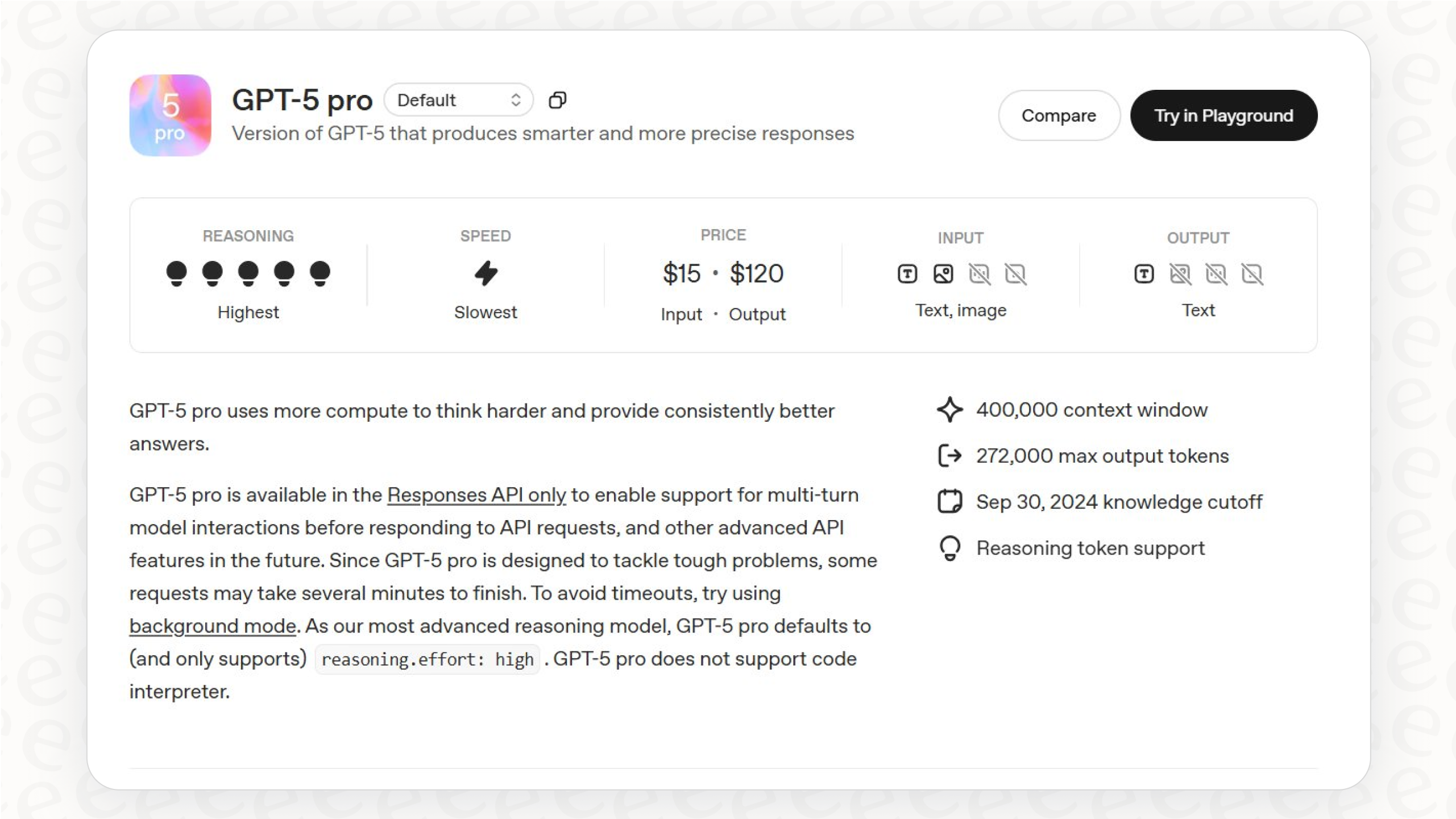

It's only available through the API, specifically the "v1/responses" endpoint, and it's a completely different (and more powerful) model than the standard "gpt-5" you might see elsewhere. Its specs tell you a lot about how it's meant to be used: it has a huge 400,000 token context window, but all that processing power means it’s slow to respond. By default, its "reasoning.effort" is set to "high", so it’s always in deep-thought mode.

Think of it like a powerful, raw engine. It’s an amazing piece of tech for developers building something brand new, but it’s not a ready-to-go solution for a specific business problem like automating customer support.

Key features and new controls

The real magic of using GPT-5 Pro in the API comes from its advanced abilities and the fine-grained controls that let developers tweak its behavior. These are the nuts and bolts you’d use to build a sophisticated AI application.

Advanced reasoning and multi-step tasks

This is where GPT-5 Pro stands out. It’s great at jobs that require breaking a problem down, planning out the steps, and then following complex instructions. For a support team, that could look like analyzing a tricky technical ticket full of error logs, figuring out a multi-step fix, or even writing a code snippet for a customer. It's designed for the messy, nuanced problems that simpler models just can't handle.

New API parameters: Verbosity and reasoning_effort

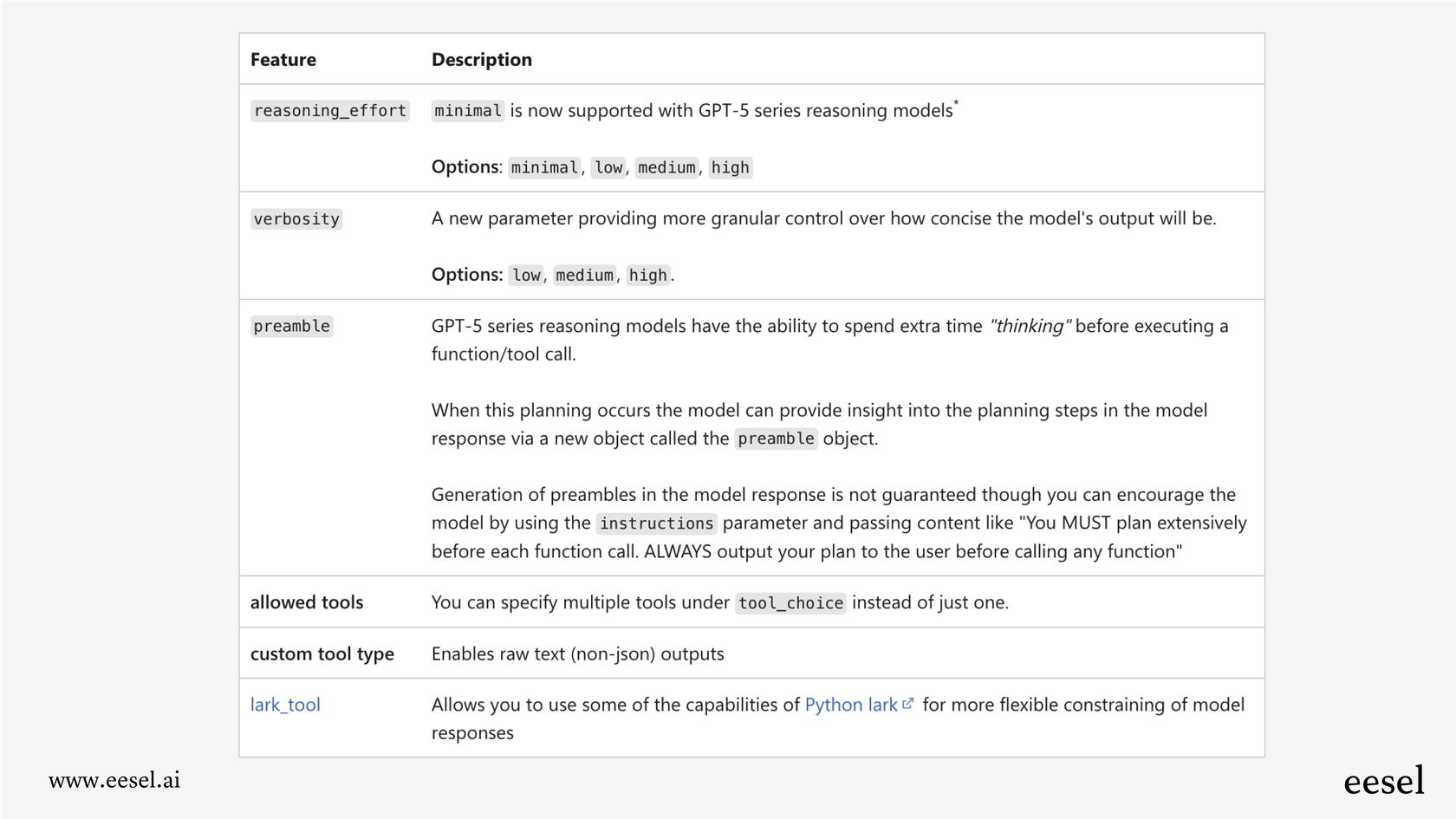

OpenAI introduced two new parameters that give developers a lot more control.

- "verbosity": This setting ("low", "medium", or "high") lets you decide how detailed the model's final answer should be. If you need a quick, straight-to-the-point response, you can set it to "low". If you need a full, step-by-step explanation, "high" is the way to go.
- "reasoning_effort": This parameter ("minimal", "low", "medium", "high") tells the model how much thinking to do before it even starts writing. As mentioned, GPT-5 Pro defaults to "high", which is what makes it so capable, but also so slow.

These controls are great, but they have to be implemented and managed by a developer through code. In contrast, platforms like eesel AI give you that same level of control over your AI's tone and actions through a simple, no-code prompt editor. This lets support managers shape the AI's personality and workflow themselves, without needing to touch a line of code.
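To make "through code" concrete, here's a minimal sketch of the request body a developer might assemble for the "v1/responses" endpoint. It assumes the two settings are nested as "reasoning.effort" and "text.verbosity" in that endpoint's JSON. The helper name "build_request" is hypothetical, and nothing here is actually sent to OpenAI.

```python
import json

# Endpoint named in the article; shown for reference only, no request is made.
RESPONSES_URL = "https://api.openai.com/v1/responses"

# Allowed values as listed in the article.
VERBOSITY_LEVELS = {"low", "medium", "high"}
EFFORT_LEVELS = {"minimal", "low", "medium", "high"}


def build_request(prompt, verbosity="medium", effort="high", model="gpt-5-pro"):
    """Return a JSON-serialisable body for a POST to the Responses endpoint.

    Assumption: the settings live under "reasoning" and "text" in the body.
    """
    if verbosity not in VERBOSITY_LEVELS:
        raise ValueError(f"unknown verbosity: {verbosity!r}")
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"unknown reasoning effort: {effort!r}")
    return {
        "model": model,
        "input": prompt,
        "reasoning": {"effort": effort},   # how much thinking happens first
        "text": {"verbosity": verbosity},  # how detailed the final answer is
    }


body = build_request("Summarise this error log for the customer.", verbosity="low")
print(json.dumps(body, indent=2))
```

Validating the values up front is a small design choice that keeps a typo in a config file from turning into a failed (or billed) API call.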

Structured outputs and tool use

GPT-5 Pro is also quite good at generating structured data like JSON and calling external tools (something developers call "function calling"). This is a must-have for any useful support agent. You don't just want your AI to talk; you need it to do things. That could mean grabbing live order info from a Shopify database, creating a ticket in Jira, or updating a customer’s profile in your CRM.
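Here's a rough sketch of what the developer's side of function calling can look like. It assumes a tool is described with a JSON Schema and that the model hands back a call containing the tool's name plus its arguments as a JSON string. The "get_order_status" tool, the order data, and the "dispatch_tool_call" helper are all made up for illustration.

```python
import json

# Hypothetical tool description, in JSON Schema form, that would be sent
# to the model alongside the prompt.
ORDER_TOOL = {
    "type": "function",
    "name": "get_order_status",
    "description": "Look up the shipping status of an order.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}

# Stand-in for a real order system such as a store database.
_FAKE_ORDERS = {"A100": "shipped", "A101": "processing"}


def get_order_status(order_id):
    """Return the status of an order, or "not_found" if it is unknown."""
    return {"order_id": order_id, "status": _FAKE_ORDERS.get(order_id, "not_found")}


# Maps tool names the model may request to local Python functions.
TOOL_REGISTRY = {"get_order_status": get_order_status}


def dispatch_tool_call(call):
    """Run the local function named in a model-issued tool call."""
    handler = TOOL_REGISTRY.get(call["name"])
    if handler is None:
        raise KeyError(f"no handler for tool {call['name']!r}")
    arguments = json.loads(call["arguments"])  # arguments arrive as a JSON string
    return handler(**arguments)


# Assumed shape of a tool call returned by the model.
example_call = {
    "type": "function_call",
    "name": "get_order_status",
    "arguments": '{"order_id": "A100"}',
}
result = dispatch_tool_call(example_call)
print(result)
```

In a real agent, the result would be sent back to the model so it can write the customer-facing reply. That loop, plus error handling and permissions, is part of the work you take on when building directly on the API.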

While the API gives you the basic components for these actions, a solution like the eesel AI Agent has these features ready to go. It gives you a straightforward way to connect to your existing tools like Zendesk and create custom API calls, saving your team from the complex and lengthy development work needed to build these integrations from the ground up.

Understanding the real cost

The power of GPT-5 Pro is impressive, but it comes with a price tag that’s not only high but also hard to predict. This is a huge deal for any team, especially in a cost-conscious department like customer support.

The steep price of advanced reasoning

Let's not beat around the bush: GPT-5 Pro is expensive. A quick look at the official OpenAI pricing makes that clear. Output tokens cost a whopping 12 times as much as they do on the standard "gpt-5" model ($120.00 versus $10.00 per million).

| Model | Input (per 1M tokens) | Output (per 1M tokens) |
|---|---|---|
| gpt-5-pro | $15.00 | $120.00 |
| o3-pro | $20.00 | $80.00 |
| gpt-5 | $1.25 | $10.00 |

And remember, these prices are for one million tokens. A single complex support ticket could burn through thousands of tokens, especially since all the behind-the-scenes "thinking" it does also counts towards your output token bill.
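To see how that adds up on a single ticket, here's a small sketch that applies the per-million prices from the table. The token counts in the example are invented for illustration, and the reasoning tokens are added to the output total because, as noted above, the "thinking" is billed as output.

```python
# Prices per one million tokens, copied from the table: (input, output).
PRICES_PER_MILLION = {
    "gpt-5-pro": (15.00, 120.00),
    "o3-pro": (20.00, 80.00),
    "gpt-5": (1.25, 10.00),
}


def estimate_cost(model, input_tokens, visible_output_tokens, reasoning_tokens=0):
    """Estimate the dollar cost of one request.

    Reasoning tokens are counted as output tokens for billing purposes.
    """
    input_price, output_price = PRICES_PER_MILLION[model]
    billed_output = visible_output_tokens + reasoning_tokens
    return (input_tokens * input_price + billed_output * output_price) / 1_000_000


# Hypothetical ticket: 3,000 tokens in, 800 tokens of answer, 6,000 of thinking.
pro_cost = estimate_cost("gpt-5-pro", 3_000, 800, reasoning_tokens=6_000)
std_cost = estimate_cost("gpt-5", 3_000, 800, reasoning_tokens=6_000)
print(f"gpt-5-pro: ${pro_cost:.4f}  gpt-5: ${std_cost:.4f}")
```

In this made-up case the hidden reasoning tokens account for most of the bill, which is why the cost of a request is hard to predict from the visible answer alone.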

Performance, latency, and trial costs

All that thinking doesn't just cost money; it costs time. In some early tests, developer Simon Willison reported that a single, fairly simple query to GPT-5 Pro took over six minutes to finish. He also found that asking it to generate one SVG image of a pelican on a bicycle cost him $1.10.

Now, think about that in a support context. If you wanted to see how well your custom-built AI could handle your past support chats, running it on just 1,000 old tickets could easily set you back over $1,000 in API fees alone, with no guarantee the results will even be useful. That's a big, risky bet just for a test run.
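The arithmetic behind that estimate is simple enough to sketch. This assumes, purely for illustration, that each ticket costs about the same as the $1.10 query mentioned above; real tickets could cost more or less. The function names and the $250 budget are hypothetical.

```python
from decimal import Decimal


def backtest_cost(ticket_count, cost_per_ticket):
    """Total API spend for replaying a batch of past tickets."""
    return ticket_count * Decimal(cost_per_ticket)


def tickets_within_budget(budget, cost_per_ticket):
    """How many tickets a fixed test budget would cover."""
    return int(Decimal(budget) // Decimal(cost_per_ticket))


total = backtest_cost(1000, "1.10")
affordable = tickets_within_budget("250", "1.10")
print(f"1,000 tickets: ${total}")
print(f"$250 covers about {affordable} tickets")
```

Using "Decimal" rather than floats keeps the dollar figures exact, which matters once you start multiplying small per-ticket costs by large ticket counts.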

This is where a dedicated platform has a clear edge. eesel AI has a powerful simulation mode that lets you test your AI on thousands of your actual past tickets in a safe environment. You can see exactly how it would have replied, get accurate forecasts on how many issues it could solve, and tweak its behavior before it ever talks to a real customer. This is paired with eesel AI's transparent and predictable pricing, which is a flat monthly fee, not a charge per ticket resolved. Your bill won't suddenly jump just because you had a busy support month.

The reality of building a complete support solution

Using GPT-5 Pro in the API is just the first step on a long and costly road. Turning that API access into a fully functional, reliable, and smart support solution is a huge engineering project.

You need more than just an API key

To build a support agent that actually works well, you need to construct an entire system around the model.

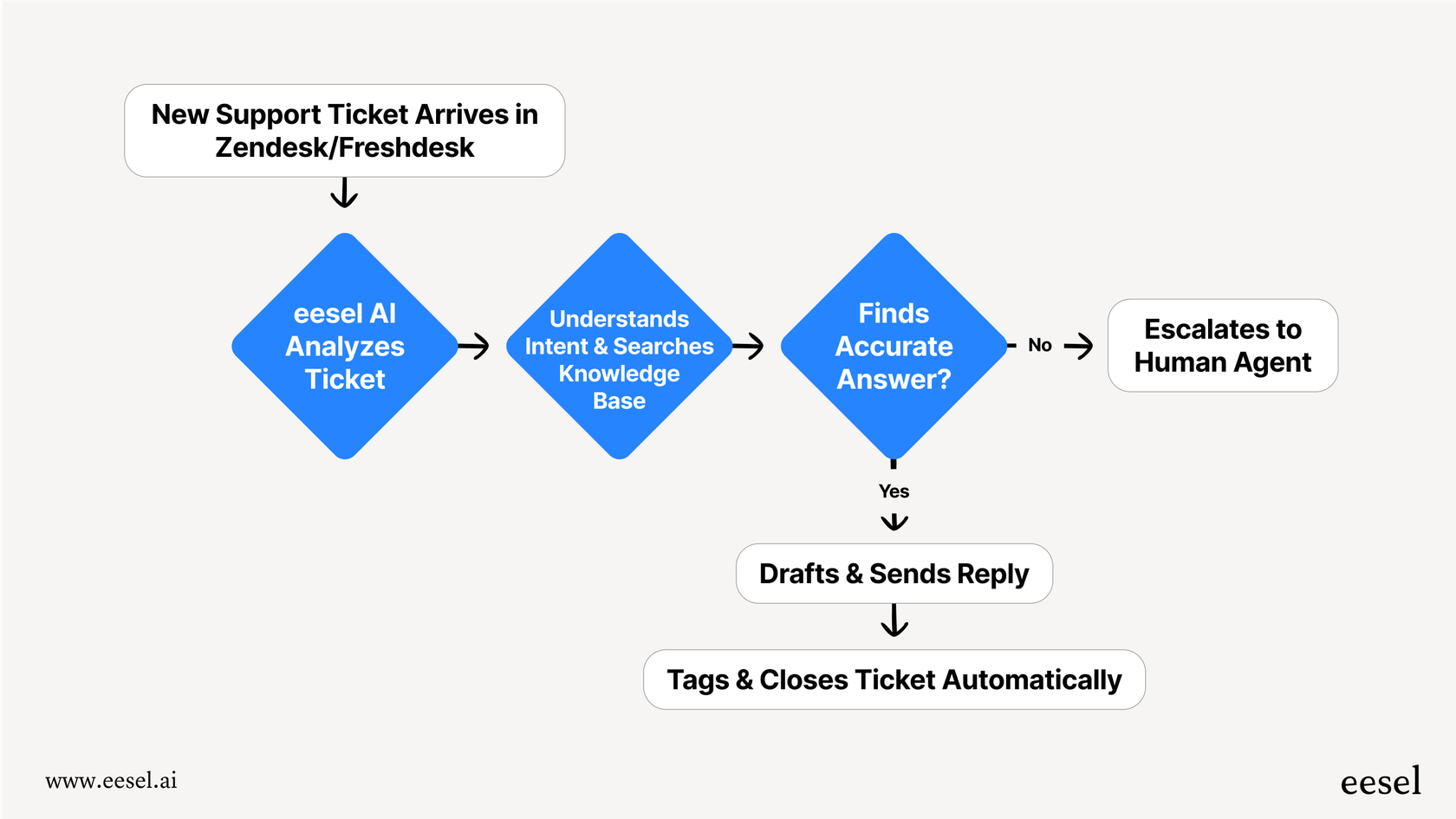

- Knowledge connectivity: First, you need a system to feed the model the right information from your knowledge base. This means building and maintaining a complex system (often called a RAG pipeline) that can pull from all your different knowledge sources.
- A workflow engine: Next, you have to build the logic that decides when the AI should answer, when it should pass a ticket to a human, how it should tag and categorize issues, and what external tools it needs to use.
- Integrations: Then comes the custom code. You'll need to write connections to your help desk, your internal wikis like Confluence, your product databases, and all the other systems your business relies on.
- Analytics and reporting: Finally, you’ll need a dashboard to track how the AI is performing, see where it's struggling, and figure out if you're getting a return on your investment.
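To give a feel for the first two pieces, here's a deliberately tiny sketch of a retrieval step feeding a routing decision. It swaps real RAG machinery (embeddings, a vector store) for plain word overlap, and the knowledge base entries, function names, and threshold are all hypothetical.

```python
import re

# Hypothetical knowledge base; a real one would span many sources.
KNOWLEDGE_BASE = {
    "reset-password": "To reset your password, open Settings and choose Reset password.",
    "refund-policy": "Refunds are available within 30 days of purchase.",
}


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, top_k=1):
    """Rank articles by how many words they share with the question."""
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(text)), doc_id)
        for doc_id, text in KNOWLEDGE_BASE.items()
    ]
    scored.sort(reverse=True)
    return scored[:top_k]


def route_ticket(question, min_overlap=2):
    """Decide whether the AI should draft a reply or hand off to a human."""
    best = retrieve(question, top_k=1)
    if not best or best[0][0] < min_overlap:
        return {"action": "escalate_to_human", "context": None}
    _, doc_id = best[0]
    return {"action": "draft_ai_reply", "context": KNOWLEDGE_BASE[doc_id]}


answered = route_ticket("How do I reset my password?")
escalated = route_ticket("My invoice shows the wrong VAT number")
print(answered["action"], "|", escalated["action"])
```

Even this toy version has to make judgment calls, like how much evidence is enough before the AI answers. A production system multiplies those decisions across every knowledge source, ticket type, and integration.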

Building all of this from scratch is a project that can take months, if not years, and requires a dedicated team of expensive AI engineers.

The platform advantage: Speed, control, and focus

This is where a platform like eesel AI completely changes the equation. It gives you all of these necessary pieces right out of the box, turning a massive engineering project into a simple setup process.

With eesel AI, you don't have to build a thing. You can use one-click integrations to instantly connect all of your knowledge, whether it's in past tickets, your help center, or documents tucked away in Google Docs.

It also includes a fully customizable workflow engine that lets your support team, not your engineers, decide exactly which tickets get automated and what actions the AI is allowed to take.

Ultimately, using a platform frees up your team to focus on what they're best at: making customers happy. You get to use the power of the latest AI without the headache of building and maintaining all the complicated plumbing that makes it work.


GPT-5 Pro in the API: Build for development, buy for deployment

GPT-5 Pro in the API is an incredible tool for developers who are building brand-new applications from the ground up. Its reasoning power opens up a ton of new possibilities.

However, for a specific, high-stakes job like customer support, the extreme cost, slow performance, and massive engineering work required to build a custom solution just don't make sense for most companies. The road from a raw API key to a reliable, automated support agent is long, complicated, and expensive.

A dedicated AI support platform gives you the power of models like GPT-5 Pro in a package that is quicker to deploy, easier on the budget, and designed specifically for the way support teams work.

Ready to use next-generation AI without the engineering overhead? Try eesel AI for free and see how quickly you can get your frontline support automated.

Frequently asked questions

GPT-5 Pro is OpenAI's most advanced reasoning model, exclusively available via API, distinct from the standard "gpt-5". It's engineered for highly complex, multi-step tasks requiring deep thought, making it a powerful raw engine for developers.

Its key benefits include advanced reasoning capabilities for complex problem-solving, handling multi-step tasks, and generating structured outputs. It also supports sophisticated tool use, enabling the AI to interact with external systems for actions like fetching order details or creating tickets.

The "verbosity" parameter lets developers control the detail level of the AI's response, from quick answers to full explanations. The "reasoning_effort" parameter dictates how much "thinking" the model performs before generating an output, with higher settings enabling deeper analysis but increasing response time.

GPT-5 Pro is significantly more expensive than standard models, with output tokens costing up to 12 times more. Its "deep-thought" processing also counts towards token usage, leading to high, often unpredictable, costs and potential latency issues, even for simple queries.

Building a complete solution requires extensive engineering, including developing knowledge connectivity (RAG), a workflow engine, custom integrations, and analytics. This can be a months-to-years-long project, demanding a dedicated team of expensive AI engineers.

While powerful, GPT-5 Pro's default high reasoning effort leads to significant latency, with some queries taking several minutes to complete. This slowness makes it generally unsuitable for real-time customer support, where quick responses are crucial.

For specific, high-stakes applications like customer support, a dedicated platform is often more advisable. Platforms provide pre-built integrations, workflow engines, and analytics, significantly reducing development time, cost, and complexity compared to building from scratch with the raw API.

