How to use Freshdesk Freddy AI to evaluate knowledge gaps for bots in 2026

Stevia Putri

Stanley Nicholas

Last edited January 16, 2026

Expert Verified

There’s nothing quite like the excitement of launching a new AI bot to streamline your support. You’ve put in the time, connected it to your knowledge base, and hit the 'on' switch. Before long, though, you’ll notice questions where the AI doesn’t have enough information to give a complete answer. That’s a normal part of the "tuning" process, and each of those questions points to content that would make your bot more helpful.

Finding and filling these knowledge gaps is a key step in getting your AI performing at its best. It’s what turns a basic bot into a sophisticated assistant that truly understands and helps your customers.

This guide walks you through how to use the tools inside Freshdesk Freddy AI to evaluate knowledge gaps for bots. We’ll cover the native features Freshdesk offers, then explore a complementary method: simulating your bot’s performance against real customer questions.

What you'll need to get started

Before we jump in, here’s what you’ll need to have handy. Everything in this process runs on features inside your Freshdesk setup.

- A Freshdesk account: You need to be on their Pro or Enterprise plan to get access to the Freddy AI Agent.
- The Freddy AI Agent add-on: This is a premium feature. Freshdesk offers flexible pricing based on "sessions," which you can purchase in packs. To give you an idea, it starts at about $100 for 1,000 sessions.
- Admin access: You’ll have to be an administrator in your Freshdesk account to manage the settings and analytics for Freddy AI.
- An existing knowledge base: Your Freddy AI bot connects to your Freshdesk solution articles, providing a reliable foundation of knowledge to build upon.

How to find knowledge gaps with Freshdesk's tools

Freshworks has built some impressive features to help you pinpoint where your Freddy AI bot can be further enhanced. The process is straightforward: you'll explore the built-in analytics and use the integrated self-testing tool to find opportunities for growth.

Here’s how to do it.

1. Go to the Freddy AI Agent analytics dashboard

First, look at how your bot performs in real-world interactions. In your Freshdesk admin panel, open the analytics section for Freddy. This dashboard shows the key stats for spotting knowledge gaps.

Keep a close eye on metrics like these:

- Resolution rate: This tracks how many queries the bot resolves. A healthy resolution rate shows your bot is on the right track, and any dip highlights topics where more content might be needed.
- Unhelpful responses: This tracks every time a user gives feedback that an answer wasn’t what they needed.
- Unanswered questions: This shows queries the bot couldn’t answer because it lacked the information. These are your most obvious opportunities for new knowledge base content.
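If you export conversation data for your own review, these three metrics reduce to simple ratios. The sketch below is a minimal illustration, assuming a hypothetical `Conversation` record with `resolved`, `answered`, and `feedback` fields. Those names are placeholders rather than Freddy’s actual export schema, and Freshdesk’s dashboard may define each metric slightly differently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Conversation:
    # Hypothetical record shape; NOT the real Freddy export format.
    resolved: bool           # the bot resolved the query
    answered: bool           # the bot found content to answer with
    feedback: Optional[str]  # "helpful", "unhelpful", or None if unrated


def bot_metrics(conversations: list[Conversation]) -> dict[str, float]:
    """Compute resolution, unhelpful, and unanswered rates as fractions."""
    total = len(conversations)
    if total == 0:
        return {"resolution_rate": 0.0, "unhelpful_rate": 0.0, "unanswered_rate": 0.0}
    rated = [c for c in conversations if c.feedback is not None]
    unhelpful = sum(1 for c in rated if c.feedback == "unhelpful")
    return {
        "resolution_rate": sum(c.resolved for c in conversations) / total,
        # Only conversations that received a rating count toward this rate.
        "unhelpful_rate": unhelpful / len(rated) if rated else 0.0,
        "unanswered_rate": sum(not c.answered for c in conversations) / total,
    }


sample = [
    Conversation(resolved=True, answered=True, feedback="helpful"),
    Conversation(resolved=False, answered=True, feedback="unhelpful"),
    Conversation(resolved=False, answered=False, feedback=None),
    Conversation(resolved=True, answered=True, feedback=None),
]
print(bot_metrics(sample))
# {'resolution_rate': 0.5, 'unhelpful_rate': 0.5, 'unanswered_rate': 0.25}
```

Measuring the unhelpful rate only over rated conversations keeps it from looking artificially low when most users never leave feedback.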

2. Test the bot with the "evaluate itself" feature

Freshdesk offers a clever feature where Freddy can evaluate its own knowledge. You’ll find it in the Freddy AI Agent configuration settings. When you run it, Freddy generates a list of questions based on your help articles and shows you how it would answer each one.

It’s an excellent way to catch content needs early. For example, if you have a detailed article about your return policy but haven’t yet documented exchanges, the self-evaluation can flag exchanges as a topic for a new article.

3. Dig into "unhelpful" and "unanswered" replies

Some of the best feedback comes directly from your users. The analytics dashboard organizes these interactions into two helpful categories:

- Unhelpful responses: These provide direct insight into customer needs. If a customer notes that a response wasn't helpful, you can review it to see if the article needs more detail or a simpler explanation. It’s a great way to refine your existing documentation.
- Unanswered questions: These are instances where Freddy identifies that it doesn't have the answer yet. This list serves as a proactive to-do list for your knowledge base articles. If multiple customers are asking about a specific new feature, you know exactly what to document next.
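To turn an exported list of unanswered questions into a ranked to-do list, even a crude keyword tally shows which topics keep coming up. This is a hypothetical sketch, not how Freddy groups questions. It assumes you have the questions as plain strings, and the small stopword list is illustrative only.

```python
import re
from collections import Counter

# Illustrative stopword list (hypothetical); extend it for your own data.
STOPWORDS = {
    "a", "an", "the", "how", "do", "i", "can", "to", "is", "my", "what",
    "does", "for", "of", "in", "on", "with", "you", "your",
}


def topic_counts(questions: list[str]) -> Counter:
    """Count how many questions mention each non-stopword term (once per question)."""
    counts: Counter = Counter()
    for question in questions:
        words = set(re.findall(r"[a-z0-9']+", question.lower())) - STOPWORDS
        counts.update(words)
    return counts


unanswered = [
    "How do I exchange an item?",
    "Can I exchange a gift?",
    "Does the new export feature support CSV?",
    "Exchange for a different size?",
]
print(topic_counts(unanswered).most_common(1))  # [('exchange', 3)]
```

Counting each term once per question means one long, repetitive message can’t outweigh several customers asking about the same thing.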

4. Manually add or update your solution articles

Once you’ve identified opportunities for improvement from the reports and self-tests, the final step is to update your content. Open your Freshdesk knowledge base to write new solution articles or refine existing ones.

A best practice is to create focused, clear articles for each new topic you find. This completes the standard Freshdesk process for maintaining a robust and accurate AI.

Considerations for scaling your evaluation

Following the steps above will lead to significant improvements in your bot's performance. As your support volume grows, you might want to supplement these native tools with additional strategies to ensure your AI is as comprehensive as possible.

- Real-world question variety: Freshdesk's self-testing is a fantastic tool for verifying that your bot understands your curated documentation. To go a step further, some teams like to test their bot against the diverse and sometimes unpredictable ways that customers phrase their questions in the real world.
- Expanding knowledge sources: Freddy is focused on your official Freshdesk knowledge base, which ensures that it only provides verified, high-quality information. However, for companies with answers spread across Google Docs or Confluence, finding a way to bridge those sources can provide even more coverage.
- Proactive simulation: While Freshdesk's tools are excellent for monitoring performance, you might also consider proactive simulation. This lets you forecast how your bot will handle thousands of real-world questions before your customers ever ask them.

How to further enhance your evaluation with complementary tools

While Freshdesk’s tools provide a solid foundation, some teams choose to use a complementary platform built for advanced simulation and broad knowledge integration. This approach allows you to see exactly how your AI will perform by using your own historical data as a benchmark.

Test against thousands of real, historical tickets

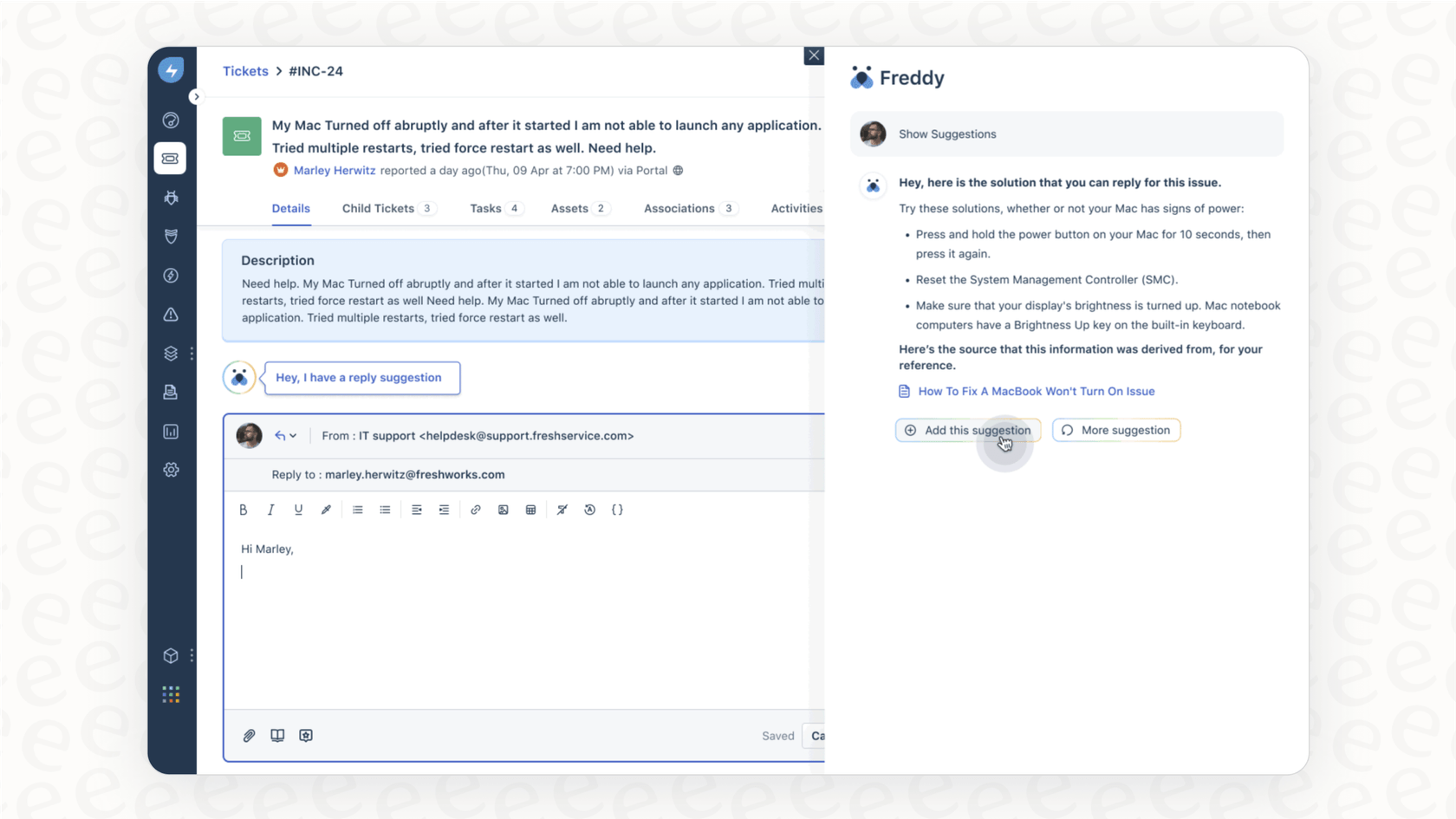

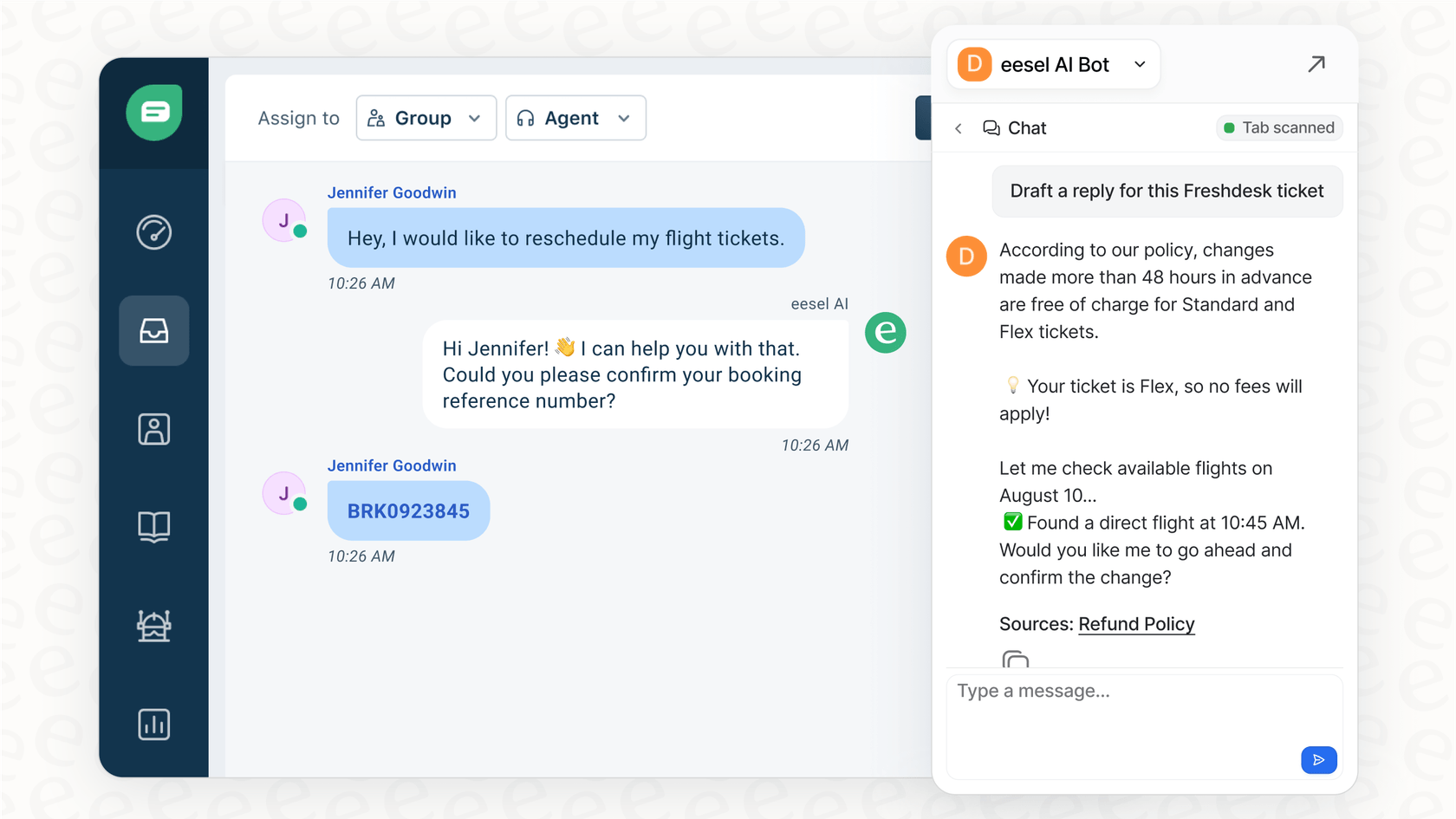

This is where a tool like eesel AI works beautifully alongside your existing setup. Rather than relying solely on generated questions, eesel AI’s simulation mode runs against your actual past Freshdesk tickets.

It takes real historical questions and shows you how the AI would have responded. This gives you a clear picture of your automation potential and helps you identify exactly where to add more information to your Freshdesk knowledge base.
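To make the idea of replaying historical tickets concrete, here is a deliberately naive sketch: each past question is scored against your articles by word overlap, and questions with no good match are flagged as likely gaps. This is not eesel AI’s or Freshdesk’s actual method. The ticket and article data, the `find_gaps` helper, and the 0.3 threshold are all hypothetical.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word set for a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def find_gaps(tickets: list[str], articles: dict[str, str], threshold: float = 0.3) -> list[str]:
    """Return tickets whose best article match falls below the threshold.

    Score = fraction of the ticket's words that also appear in an article
    (title plus body). A crude lexical stand-in for real answer simulation.
    """
    article_words = [tokens(title + " " + body) for title, body in articles.items()]
    gaps = []
    for ticket in tickets:
        words = tokens(ticket)
        if not words:
            continue
        best = max((len(words & a) / len(words) for a in article_words), default=0.0)
        if best < threshold:
            gaps.append(ticket)
    return gaps


articles = {
    "Return policy": "You can return any item within 30 days for a full refund.",
}
tickets = [
    "Can I return an item after 30 days?",
    "How do I exchange shoes for a bigger size?",
]
print(find_gaps(tickets, articles))  # ['How do I exchange shoes for a bigger size?']
```

Common words like "for" and "a" count toward the overlap here, so a real version would at least filter stopwords, and more likely compare meaning rather than raw words.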

Connect all your knowledge, wherever it lives

Sometimes, a "gap" is simply a matter of the information living outside of your help center. Freshdesk is the gold standard for your customer-facing knowledge, but your team might also have valuable internal data elsewhere.

eesel AI connects with over 100 sources, allowing you to bridge the gap between your help center and internal tools like Notion or Google Docs. This ensures your AI has access to a wider pool of information, making your Freshdesk setup even more powerful.

Let AI help you fill the gaps automatically

Updating your knowledge base can be a collaborative process between your agents and your AI tools. After running a simulation, you'll see a clear report of areas that need more detail. With eesel AI, you can take this a step further.

The platform can automatically generate draft knowledge base articles based on how your human agents have successfully resolved tickets in the past. If an agent provides a great solution to a complex issue, the tool can flag that interaction and create a draft article for your Freshdesk help center. This turns your team's expertise into a lasting resource that your AI can use for future customers.

Comparison: Freshdesk Freddy AI and eesel AI

| Feature | Freshdesk Freddy AI | eesel AI |
|---|---|---|
| Testing method | Integrated self-evaluation | Historical ticket simulation |
| Realism | Verified internal focus | High (based on actual customer issues) |
| Knowledge sources | Primarily Freshdesk articles | Freshdesk, Confluence, Google Docs, and more |
| Gap identification | Review of analytics and self-tests | Automated reports on gaps and trends |
| Go-live confidence | High (internal validation) | High (with accurate performance forecasts) |

Combine internal testing with historical simulation

At the end of the day, evaluating knowledge gaps is the best way to build an AI support bot that your customers will love. Freshdesk Freddy AI provides the essential, reliable tools to get you started and maintain high standards for your documentation. By combining these native features with proactive historical simulation, you can create a truly comprehensive support experience.

This proactive, data-driven strategy ensures you are always one step ahead. It transforms your Freshdesk instance into an even more powerful engine for customer satisfaction, backed by an AI agent that is constantly learning and growing.

Take the next step

Ready to see how you can further optimize your automation? Consider running a complementary simulation on your historical tickets with eesel AI. It integrates seamlessly with Freshdesk, helping you get an accurate forecast of your automation potential and a clear report on how to further enrich your knowledge base for 2026 and beyond.

Frequently asked questions

To get started, you'll need a Freshdesk Pro or Enterprise account with the Freddy AI Agent add-on enabled, which is a powerful premium feature. You'll also need admin access within Freshdesk and an existing knowledge base connected to your Freddy AI bot.

The primary steps involve reviewing the Freddy AI Agent analytics dashboard for metrics like unhelpful responses and unanswered questions. You can also use the bot's "evaluate itself" feature, which is a great way to verify your content, and then update your solution articles based on the identified opportunities.

While Freshdesk's native tools are excellent for verifying your curated content, some teams may find that self-generated questions don't always capture the full variety of real customer queries. Additionally, Freddy focuses on your Freshdesk knowledge base to ensure verified accuracy, though you may sometimes want to incorporate data from other company documents.

A simulation-based approach, like the one eesel AI offers, works alongside Freshdesk by testing your bot against historical customer tickets. It provides a detailed performance forecast, uncovers gaps proactively, and can help you bring knowledge from over 100 sources into your Freshdesk workflow.

For continuous improvement, regularly review your bot's performance metrics and user feedback within Freshdesk to identify new content needs. Consider adopting advanced tools that automatically generate draft articles from successful agent resolutions to continually enrich your knowledge base.

Yes. The Freddy AI Agent add-on, which you need in order to evaluate knowledge gaps with Freddy AI as described in this guide, is a premium feature. Freshdesk offers tiered, session-based pricing (for example, starting around $100 for 1,000 sessions), so you can scale as you grow.


Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.