Ever tried asking a company's chatbot a simple question, only to get a link to a 5,000-word legal document? Or you ask about a specific feature, and it spits back an answer that’s technically right but totally useless. It’s like talking to a very polite, very unhelpful wall.

While general-purpose large language models (LLMs), like the ones behind ChatGPT, are impressive, they know a little bit about everything but not much about what actually matters to your business. For real results, you don't need a generalist; you need a specialist. That’s where domain-specific LLMs come in.

This guide will walk you through what they are, why they're a much better fit for your business, the different ways to get one, and how to pick the right approach without needing a massive budget.

The core difference: general vs. domain-specific LLMs

Before we jump into the "how," let's get clear on the "what." The difference isn't just about the data they're trained on; it's about the results they produce and whether you can actually trust them.

What is a general-purpose LLM?

Models like GPT-4 and Llama 3 are trained on an enormous amount of text, much of it public data gathered from all corners of the internet. Think of them as a general practitioner: they have a huge breadth of knowledge and can handle common issues, but you wouldn't ask them to perform open-heart surgery.

For a business, this creates some pretty big problems:

- They don’t know your company. They have zero clue about your product line, internal policies, or the unique way you talk to customers.

- They can "hallucinate." When they don't know something, they have a bad habit of just making things up with surprising confidence. In a business setting, that's a recipe for disaster.

- They can't access your data. Out of the box, they're walled off from your private knowledge bases and can't check real-time info, like a customer's order status.

What are domain-specific LLMs?

A domain-specific LLM is an AI model that’s been trained, fine-tuned, or given access to a focused set of information from a specific field. That field could be as broad as finance or law, or as specific as it gets: your own company's internal knowledge.

This is your specialist doctor, the cardiologist. They have deep, nuanced expertise in one area, making their advice far more valuable for specific, high-stakes problems.

The advantages for a business are pretty obvious:

- Higher Accuracy: They get your industry's lingo and your company's context, which means more precise and genuinely helpful answers.

- Increased Reliability: They are way less likely to make things up because their knowledge is grounded in a controlled set of information you provide.

- Better User Experience: They give answers that are actually tailored to what the user needs and can even match your brand's voice, creating a much smoother interaction.

Three practical approaches to building domain-specific LLMs

Creating a specialist AI doesn't have to mean building a new model from the ground up, a process that can easily run into the millions of dollars. These days, there are a few practical methods to choose from, each with its own pros and cons when it comes to cost, effort, and performance.

Method 1 for domain-specific LLMs: Prompt engineering (the quick start)

The simplest way to get more specialized answers is to get really good at asking. Prompt engineering is all about crafting detailed instructions (prompts) that give a general model the context, rules, and examples it needs to act like an expert. You're essentially telling it, "For the next five minutes, you're an expert in X, and here are the rules you need to follow."

- Pros: It’s fast, cheap, and you don’t have to actually change the model. You can whip up and test new prompts in minutes.

- Cons: It can be a bit fragile; a tiny change in the prompt can send the results way off course. It's also limited by how much text the model can handle at once (its context window), and it doesn't fundamentally teach the model anything new.
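To make the idea concrete, here is a minimal Python sketch that assembles a role, a set of rules, and a few worked examples into the role/content message list that chat-style LLM APIs commonly accept. The `PromptSpec` class, the `build_messages` function, the "Acme" product, and the sample answer are all hypothetical, and the sketch stops before calling any model.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces of a domain-expert prompt."""
    role: str                  # who the model should act as
    rules: list[str]           # constraints it must follow
    examples: list[tuple[str, str]] = field(default_factory=list)  # (question, ideal answer)


def build_messages(spec: PromptSpec, user_question: str) -> list[dict[str, str]]:
    """Assemble a chat-style message list: system prompt, few-shot pairs, then the question."""
    rules_text = "\n".join(f"- {rule}" for rule in spec.rules)
    system = f"You are {spec.role}.\nFollow these rules:\n{rules_text}"
    messages = [{"role": "system", "content": system}]
    # Few-shot examples show the model what a good answer looks like.
    for question, answer in spec.examples:
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
    messages.append({"role": "user", "content": user_question})
    return messages


spec = PromptSpec(
    role="a support specialist for Acme's billing product (hypothetical)",
    rules=[
        "Answer in at most three sentences.",
        "If you are not sure, say so and offer to escalate to a human agent.",
    ],
    examples=[("Can I change my billing date?", "Yes. Go to Settings > Billing and pick a new date.")],
)
messages = build_messages(spec, "How do I download an invoice?")
for message in messages:
    print(message["role"], "->", message["content"][:60])
```

Because the whole prompt is typically resent with every question, each rule and example you add eats into the context window, which is one reason this approach hits a ceiling.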

Method 2 for domain-specific LLMs: Retrieval-augmented generation, or RAG (the open-book exam)

Retrieval-augmented generation, or RAG, is like giving an LLM an open-book exam. Instead of just using what it "memorized" during training, the model is connected to an external, trusted knowledge base, like your company’s help articles, past support tickets, or internal wikis. When a question comes in, the system first finds relevant info from your knowledge base and then feeds it to the LLM as context to generate the answer.

- Pros: This approach gives you answers that are up to date and verifiable, which slashes the risk of hallucinations. It’s highly effective for any task that relies on a body of knowledge, because it keeps the AI’s answers anchored to your approved info.

- Cons: A RAG system is only as good as its source material. If your documentation is a mess, the AI's answers will be too.
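The retrieve-then-generate loop can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: it scores documents with bag-of-words cosine similarity as a stand-in for the embedding search a production system would normally use, the knowledge-base entries and function names are hypothetical, and it only builds the grounded prompt rather than calling an LLM.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return the k (doc_id, text) pairs most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(docs.items(), key=lambda item: cosine(q, Counter(tokenize(item[1]))), reverse=True)
    return ranked[:k]


def build_grounded_prompt(question: str, docs: dict[str, str], k: int = 2) -> str:
    """Put the retrieved passages in front of the question and restrict the model to them."""
    context = "\n\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(question, docs, k))
    return (
        "Answer using ONLY the context below and cite the [id] you used. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


knowledge_base = {  # hypothetical help-center articles
    "refunds": "Refunds are issued to the original payment method within 5 business days.",
    "shipping": "Standard shipping takes 3 to 7 business days within the US.",
    "passwords": "Reset your password from the login page using the Forgot password link.",
}
prompt = build_grounded_prompt("How long do refunds take?", knowledge_base, k=1)
print(prompt)
```

Asking the model to cite the `[id]` it used is what makes answers verifiable: a reviewer can open the cited article. It also shows why messy documentation hurts, since whatever `retrieve` returns is all the model gets to see.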

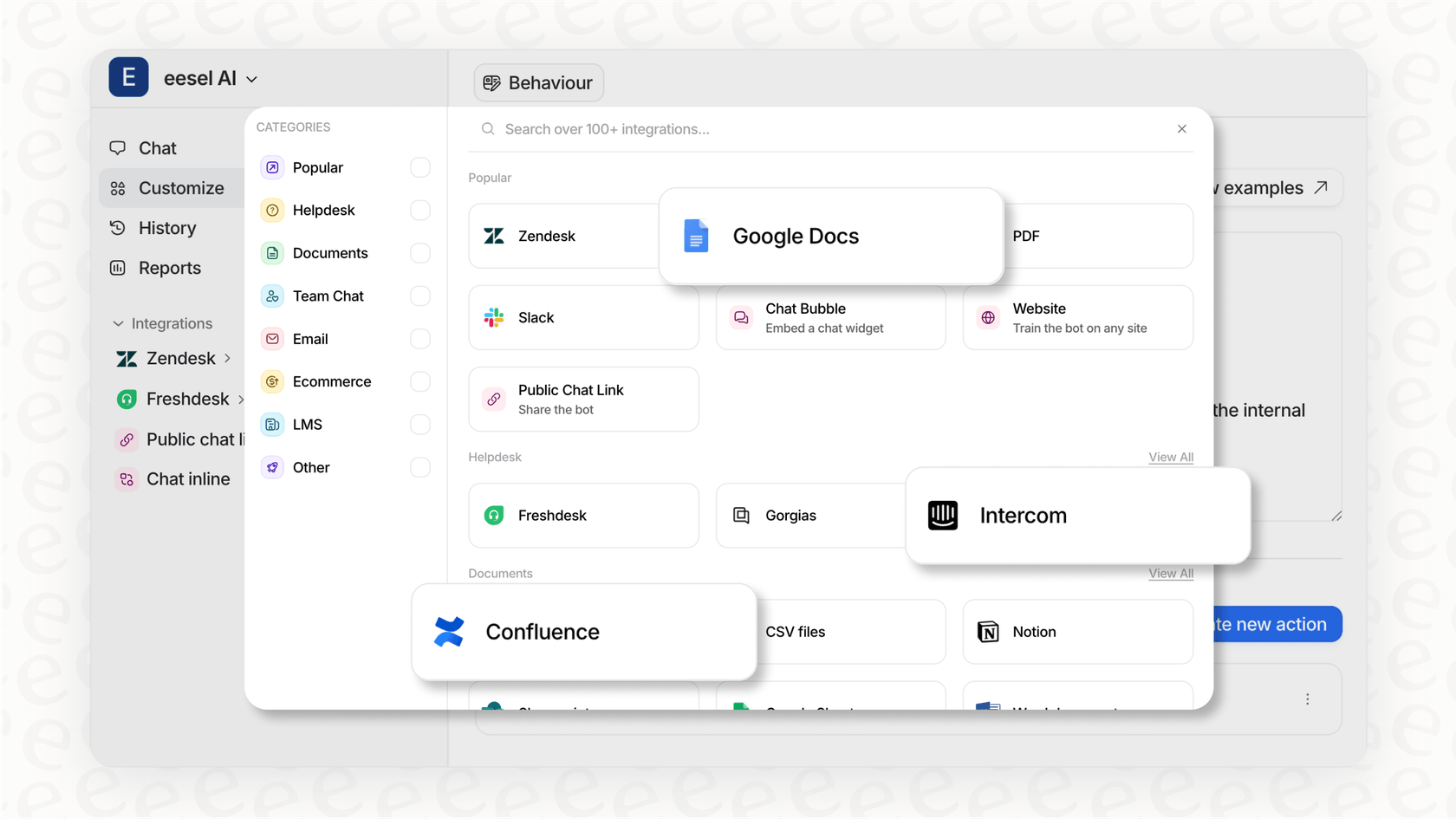

This is how many modern AI support tools work. An AI agent from eesel AI, for example, uses RAG to plug directly into all your company's knowledge, whether it’s in Zendesk tickets, Confluence pages, Google Docs, or even your Shopify catalog. This keeps every answer grounded in your business reality, not the generic web.

Method 3 for domain-specific LLMs: Fine-tuning (the deep specialization)

Fine-tuning is where you take a pre-trained general model and put it through a second round of training, this time using a curated dataset of your own domain-specific examples. This process actually adjusts the model's internal parameters (its weights) to specialize its behavior, tone, and knowledge. It's like sending that GP to a residency program to become a cardiologist.

- Pros: It can bake a specific style, tone, or complex way of thinking right into the model, turning it into a genuine expert.

- Cons: This can get expensive and time-consuming, often requiring hundreds or thousands of high-quality examples. There's also a risk of what's called "catastrophic forgetting," where the model gets so specialized it forgets how to do some of the basic stuff it used to know.
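Most of the hands-on work in fine-tuning is preparing the dataset. The sketch below, assuming a hypothetical ticket export with `status`, `csat`, `customer_message`, and `agent_reply` fields, keeps only well-rated solved tickets and converts each into a chat-style JSONL record (one `{"messages": [...]}` object per line). That layout is similar to the chat format some hosted fine-tuning services document, but check your provider's exact schema before uploading; the training run itself is out of scope here.

```python
import json
import random

SYSTEM_PROMPT = "You are a friendly, concise support agent for Acme (a hypothetical company)."


def ticket_to_example(ticket: dict) -> dict:
    """Turn one resolved ticket into a chat-formatted training example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": ticket["customer_message"]},
            {"role": "assistant", "content": ticket["agent_reply"]},
        ]
    }


def build_splits(tickets: list[dict], min_csat: int = 4,
                 valid_fraction: float = 0.1, seed: int = 0) -> tuple[list[str], list[str]]:
    """Return (train_lines, valid_lines) as JSONL strings, one example per line."""
    # Quality over quantity: keep only solved tickets that customers rated highly.
    good = [t for t in tickets if t["status"] == "solved" and t.get("csat", 0) >= min_csat]
    random.Random(seed).shuffle(good)  # deterministic shuffle before splitting
    # Hold some examples back to check the tuned model on data it never trained on.
    n_valid = max(1, int(len(good) * valid_fraction)) if len(good) > 1 else 0
    lines = [json.dumps(ticket_to_example(t)) for t in good]
    return lines[n_valid:], lines[:n_valid]


tickets = [  # hypothetical export
    {"status": "solved", "csat": 5, "customer_message": "My invoice shows the wrong address.",
     "agent_reply": "Sorry about that! I've updated it and re-sent the invoice."},
    {"status": "solved", "csat": 2, "customer_message": "Where is my order?",
     "agent_reply": "Check the website."},
    {"status": "open", "customer_message": "Can I upgrade mid-month?", "agent_reply": ""},
    {"status": "solved", "csat": 4, "customer_message": "How do I add a teammate?",
     "agent_reply": "Go to Team settings and click Invite; they'll get an email right away."},
]
train, valid = build_splits(tickets)
print(len(train), "training examples,", len(valid), "validation examples")
# Write each list to its own .jsonl file (one JSON object per line) before uploading.
```

After training, it is worth spot-checking a few general tasks alongside the validation set to catch signs of catastrophic forgetting.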

Real-world examples of domain-specific LLMs

The move from general to specialized AI is already well underway. Here are a few big examples, followed by the one domain that matters most to any business: your own.

Examples of domain-specific LLMs across different industries

These models show how much you gain by focusing on one area.

| Industry | Example LLM | Primary Use Case |
|---|---|---|
| Finance | BloombergGPT | Analyzing financial documents, sentiment analysis, answering financial queries |
| Healthcare | Med-PaLM 2 | Answering medical questions, summarizing clinical research, assisting with diagnoses |
| Law | Paxton AI | Legal research, contract review, and drafting legal documents |
| Software development | StarCoder | Code generation, autocompletion, and debugging assistance |

The most critical domain for domain-specific LLMs: your own business knowledge

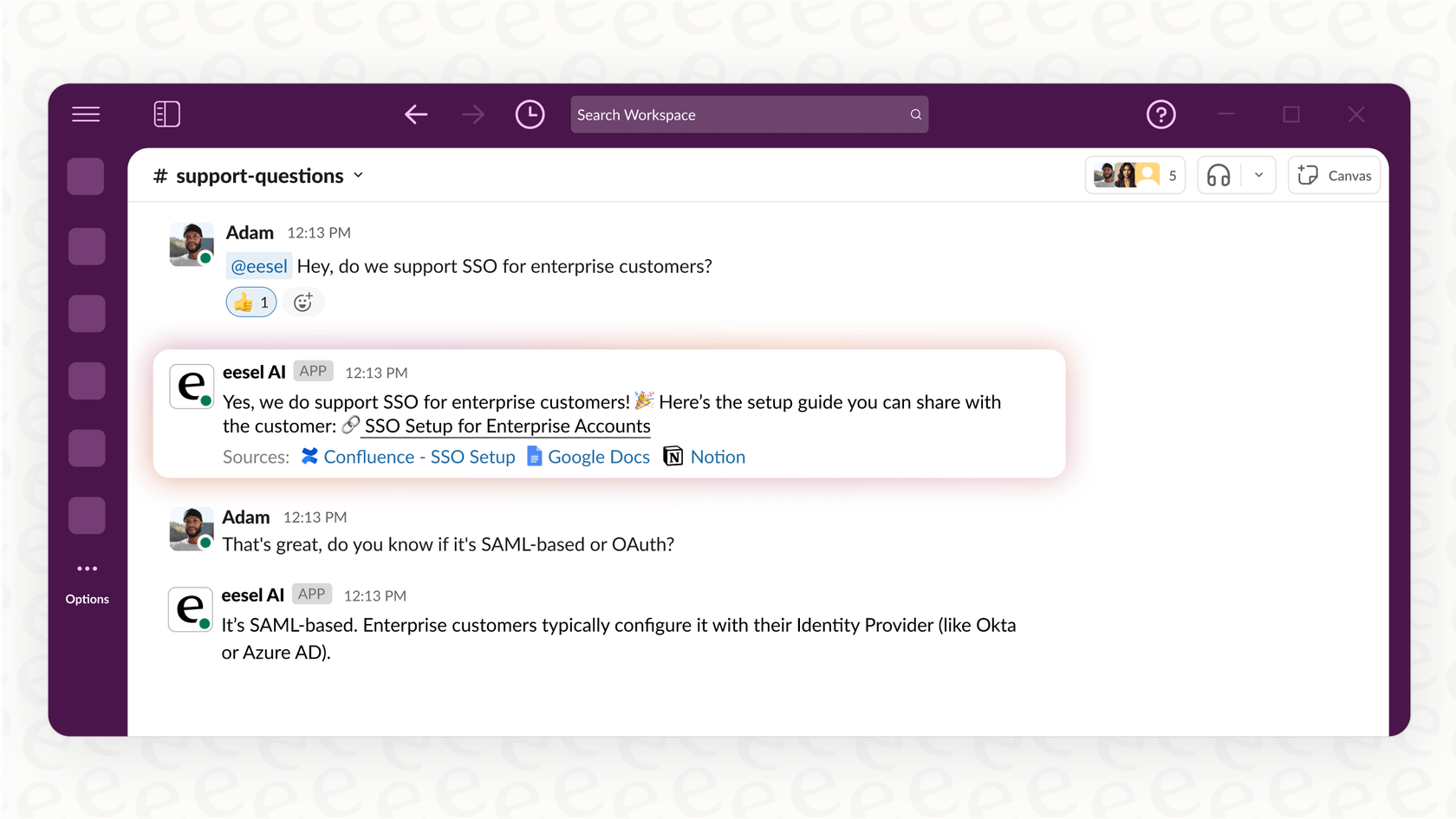

Those big industry models are impressive, but the most important domain for you? It's your own business. No off-the-shelf AI knows your product codes, your specific way of handling returns, or your friendly, empathetic brand voice. This is never more true than in customer support, where generic answers aren't just unhelpful; they're downright frustrating.

This is where a platform like eesel AI really makes a difference. Instead of giving you a generic "support" model, eesel helps you create a domain-specific LLM that’s an expert on your support operations. It learns from your past tickets to see how your best agents solve problems and adopts their tone. By connecting to all your wikis, documents, and tools like Slack, it becomes the single go-to expert on your business. It can then provide instant, accurate support for both your customers and your own team, turning your company’s collective knowledge into its best asset.

How to choose the right approach for your domain-specific LLMs

So, with three different methods on the table, where do you start? The right choice really comes down to your goals, your budget, and how complex your needs are.

The practical path to domain-specific LLMs for most businesses

Look, for most companies, you don't need to overcomplicate things. The sweet spot is a mix of smart prompting and a solid RAG system. This combination gives you the accuracy of a specialist without the headache and huge cost of fine-tuning.

And you don't have to be an AI guru to get there. eesel AI offers a powerful, ready-to-go hybrid solution that makes deploying a domain-specific LLM straightforward.

- It just connects to your stuff. With one-click integrations, it instantly plugs into your knowledge sources, so it’s always working from your company's source of truth.

- You control the personality. Its prompt editor lets you shape the AI's persona, tone, and instructions without any coding. You can define a unique personality and tell it exactly how to escalate tricky issues.

- You can test it risk-free. The best part? The simulation mode lets you test your new AI on thousands of your past tickets. You can see exactly how it would have responded, get solid forecasts on resolution rates, and calculate your ROI before it ever speaks to a real customer. This gives you all the benefits of a specialized model without the high cost and risks of fine-tuning.
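The idea behind testing on past tickets can be illustrated with a generic backtest. The sketch below is not eesel AI's implementation (the article doesn't describe how its simulation mode works internally); it simply replays historical tickets through a candidate bot, counts hand-offs to humans, and uses a crude word-overlap check as a hypothetical stand-in for human review or a stronger automated grader. `toy_bot`, the FAQ entry, the tickets, and the per-ticket cost are all made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class PastTicket:
    question: str
    accepted_answer: str  # what the human agent actually replied


@dataclass
class BacktestReport:
    total: int
    escalated: int  # tickets the bot handed off to a human
    matched: int    # tickets where the bot's reply passed the judge

    @property
    def projected_resolution_rate(self) -> float:
        return self.matched / self.total if self.total else 0.0


def overlap_judge(reply: str, reference: str, threshold: float = 0.5) -> bool:
    """Crude check: does the reply share enough words with the accepted answer?"""
    ref_words = set(reference.lower().split())
    if not ref_words:
        return False
    shared = ref_words & set(reply.lower().split())
    return len(shared) / len(ref_words) >= threshold


def backtest(tickets: list[PastTicket],
             bot: Callable[[str], Optional[str]],
             judge: Callable[[str, str], bool] = overlap_judge) -> BacktestReport:
    escalated = matched = 0
    for ticket in tickets:
        reply = bot(ticket.question)
        if reply is None:  # None means "escalate to a human"
            escalated += 1
        elif judge(reply, ticket.accepted_answer):
            matched += 1
    return BacktestReport(total=len(tickets), escalated=escalated, matched=matched)


def estimated_savings(report: BacktestReport, cost_per_ticket: float) -> float:
    """Back-of-the-envelope ROI: human handling cost avoided on matched tickets."""
    return report.matched * cost_per_ticket


# Hypothetical bot: answers refund questions from a one-entry FAQ, escalates everything else.
FAQ = {"refund": "Refunds reach your card within 5 business days."}


def toy_bot(question: str) -> Optional[str]:
    for keyword, answer in FAQ.items():
        if keyword in question.lower():
            return answer
    return None


history = [
    PastTicket("How long does a refund take?", "Refunds reach your card within 5 business days."),
    PastTicket("Can I get a refund on a sale item?", "Sale items can be refunded within 14 days."),
    PastTicket("My package arrived damaged.", "I'm sorry! We'll ship a replacement today."),
]
report = backtest(history, toy_bot)
print(f"Projected resolution rate: {report.projected_resolution_rate:.0%}, "
      f"escalated: {report.escalated}/{report.total}, "
      f"estimated savings: ${estimated_savings(report, cost_per_ticket=4.0):.2f}")
```

The second ticket shows why grading matters: the bot answers confidently but wrongly, and a forecast that only counted non-escalated tickets would overstate the resolution rate.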

Your next specialist AI should be built on domain-specific LLMs

Generic AI had its moment, but for real business problems, it's just not cutting it anymore. If you want to actually get ahead, you need an AI that gets you: your context, your problems, your customers.

Domain-specific LLMs deliver the accuracy, reliability, and tailored experience that people now expect. They’re what turns AI from a cool party trick into a tool you can’t live without.

And you don’t need a team of data scientists or a six-month project to get started. eesel AI is the fastest and most practical way to launch a powerful, domain-specific AI for your support team and internal knowledge. It's a truly self-serve platform, so you can be live in minutes, not months.

Ready to see what a specialist AI can do for you? Sign up for a free trial or book a demo today.

Frequently asked questions

For most teams, the best entry point is a system using Retrieval-Augmented Generation (RAG). This method connects an LLM to your existing company knowledge without the complexity of fine-tuning, giving you accurate, context-aware answers right away.

For a RAG approach, it's about quality over quantity. A well-organized and up-to-date knowledge base is far more valuable than massive amounts of messy, outdated information. You can often start with just your primary help center or internal wiki.

While expert prompting helps, prompting alone can’t give a model access to your company's private or real-time data. A specialized model using RAG keeps answers consistently grounded in your approved information, making them far more reliable and accurate for business use cases.

They dramatically reduce the risk of "hallucinations," especially when using RAG, because answers are based on your specific documents. While no AI is perfect, this grounding in your own verified data makes them significantly more trustworthy than general-purpose models.

Use RAG when your goal is to provide answers based on a specific body of knowledge. Only consider the more expensive and complex process of fine-tuning if you need to teach the model a unique style, format, or complex reasoning process that can't be achieved with prompting and RAG alone.


Article by Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.