When Cognition AI dropped the demo for Devin, the tech world pretty much stopped what it was doing. Pitched as the "first AI software engineer," it triggered a massive wave of excitement, some pretty heated debates, and maybe a little bit of existential dread for developers everywhere.

On one hand, you had these slick demos showing Devin building entire apps from just a single sentence. On the other, a growing number of people started poking holes in the story, questioning if the reality could possibly live up to the revolutionary claims.

The whole thing is a bit of a whirlwind, and if you're trying to sort fact from fiction, you're definitely not alone. This article is here to cut through that noise. We're going to take a close look at the demos, the benchmarks, and the public Cognition AI reviews to give you a straight-up, balanced view of what Devin can and can’t do right now.

What is Devin AI?

First things first, let's get the official story straight from the source. Cognition AI calls Devin a "fully autonomous AI software engineer." This isn't just another one of those code autocomplete tools that pop up suggestions while you type. While helpers like GitHub Copilot are great for speeding up the process of writing code snippets, Devin is designed to handle the whole project by itself.

You give it a task, and then you can literally watch it work. It opens up a familiar-looking developer environment with a command line, a code editor, and a browser. From there, it starts planning its strategy, looking up documentation, writing the actual code, fixing bugs as they appear, and deploying the finished product.

The big idea here is that Devin is supposed to copy the entire workflow of a human developer, taking a high-level problem and turning it into a working solution with very little help. It's a huge leap beyond just assisting with code; it's a real shot at creating an independent agent for building software.

The hype: What Devin promised

The buzz around Devin didn't just appear out of thin air. The initial demos and performance stats that Cognition released were genuinely impressive and felt like a major step forward for what AI could do.

Building apps from a single prompt

Honestly, the launch videos were captivating. In one demo, you see Devin build a fully playable version of the classic game Pong. In another, it puts together a whole website from the ground up in less than 20 minutes. But the cool part wasn't just the final product; it was watching the process unfold.

The videos showed Devin breaking down its tasks, using its browser to search for documentation, and rewriting its own code when it hit a snag. It seemed to learn as it went, debug its own mistakes, and push through challenges, which are all things a human developer does every day. This ability to manage a complicated, multi-step project from a single instruction is what really got everyone talking and fueled that first wave of excitement.

Seriously impressive benchmark scores

To back up what they were showing in the demos, Cognition pointed to their results on the SWE-bench benchmark. This is a test that gives AI systems real-world issues pulled from open-source GitHub projects and asks them to fix them. According to their technical report, Devin managed to correctly solve 13.86% of these issues from start to finish.

Now, 13.86% might not sound like a groundbreaking number on its own, but it was a massive improvement over previous models, which were barely getting 2%. This wasn't just a small step up; it was a roughly seven-fold improvement (13.86% against 1.96%) on a test designed to mimic real-world complexity. It suggested that something had fundamentally changed in how this AI could reason, plan, and use its tools.

| Model | SWE-bench Score (Unassisted) |
|---|---|
| Devin | 13.86% |
| Claude 2 | 4.80% |
| Previous SOTA | 1.96% |
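
To make the size of that jump concrete, here is a minimal Python sketch that works out the ratios from the three scores quoted above. The `fold_improvement` helper and the `scores` dictionary are names made up for this illustration; only the percentages come from the article.

```python
# SWE-bench resolution rates (in percent), as quoted in the table above.
scores = {
    "Devin": 13.86,
    "Claude 2": 4.80,
    "Previous SOTA": 1.96,
}


def fold_improvement(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


# Compare Devin's score against each of the other entries.
for name, score in scores.items():
    if name != "Devin":
        ratio = fold_improvement(scores["Devin"], score)
        print(f"Devin vs {name}: {ratio:.2f}x")
```

Run as written, the comparison against the previous state of the art comes out a little above 7x, and the comparison against Claude 2 a little under 3x.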

The reality check: Devin's limitations

Once the initial excitement started to wear off, the community began to dig a little deeper. Detailed breakdowns, forum threads, and developer analyses started painting a more complicated picture. It became clear there was a gap between the polished demos and how Devin performed in the wild.

Are the demos telling the whole story?

One of the most detailed critiques came from the YouTube channel "Internet of Bugs," which went through Devin's viral Upwork demo frame by frame. What they found raised some big questions about how the task was set up and presented.

The investigation brought up a few key points:

- The job felt hand-picked: The task Devin was working on seemed perfectly suited to its abilities, almost as if it were chosen specifically to show Devin in the best possible light, not like a typical freelance gig.

- The debugging was a bit fishy: At certain points in the video, it looked like Devin actually introduced errors into the code itself, only to turn around and "impressively" find and fix them later on.

- The timeline was heavily edited: What looked like a smooth and speedy process in the demo was probably much slower in real time. It’s likely that long pauses and failed attempts were edited out to make it look more efficient.

These points don't mean Devin is a fake, but they do suggest that the demos were more like a carefully crafted highlight reel than a typical day at the office for the AI.

The 86% failure rate and the context problem

Let's circle back to that SWE-bench score for a minute. A 13.86% success rate is a fantastic technical achievement. But flip it around, and it's also an 86.14% failure rate. For a tool that's supposed to be an autonomous engineer, that's an awful lot of problems left unsolved.
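
The flip from success rate to failure rate is simple arithmetic, and it helps to see what it means for a real backlog. The sketch below is only an illustration: `failure_rate` and `expected_unresolved` are hypothetical helper names, and it assumes (unrealistically) that every task in your backlog is about as hard as a SWE-bench issue.

```python
def failure_rate(success_pct: float) -> float:
    """Complement of a success rate, both expressed in percent."""
    return round(100.0 - success_pct, 2)


def expected_unresolved(tasks: int, success_pct: float) -> float:
    """Expected number of tasks left for a human, given a success rate in percent.

    Assumption for illustration only: each task fails independently at the
    benchmark's overall failure rate.
    """
    return tasks * failure_rate(success_pct) / 100.0


print(failure_rate(13.86))             # failure rate in percent
print(expected_unresolved(50, 13.86))  # tasks in a batch of 50 still needing a human
```

Under that assumption, a batch of 50 issues would leave roughly 43 for a human to pick up.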

This highlights a bigger issue that many developers in Cognition AI reviews have pointed out: the "context gap." Building software in the real world is messy. It's full of vague requests from clients, unstated assumptions, and constant back-and-forth with team members. A bug ticket almost never contains all the information you need to fix it. A human engineer has to ask follow-up questions, understand the business reasons behind a feature, and make judgment calls based on experience.

As one person on the freeCodeCamp forums put it, Devin just doesn't have that context. It's brilliant at carrying out a perfectly defined task, but it starts to struggle when it runs into the kind of ambiguity that’s part of almost every real engineering job.

More of a smart intern

After all the testing and analysis, the consensus that's forming in the developer community is that Devin is less of an independent senior engineer and more of a super-advanced intern who still needs supervision.

It can be an amazing tool for handling specific, clearly defined tasks. But it still needs a human to give it clear directions, keep an eye on its work, and jump in when it gets stuck, which, according to the numbers, happens most of the time. The dream of handing an AI a vague business idea and getting back a fully-built piece of software is, for now, still just a dream.

Beyond the engineer: Lessons from agentic AI

The whole story of Devin offers a really important lesson for any business thinking about adopting AI. It's tempting to go after the moonshot, the fully autonomous agent that can replace an entire department overnight. But the real, immediate value isn't in replacing complex, creative jobs. It's in automating the right kind of work.

The lesson from Devin: Start with structured, repeatable tasks

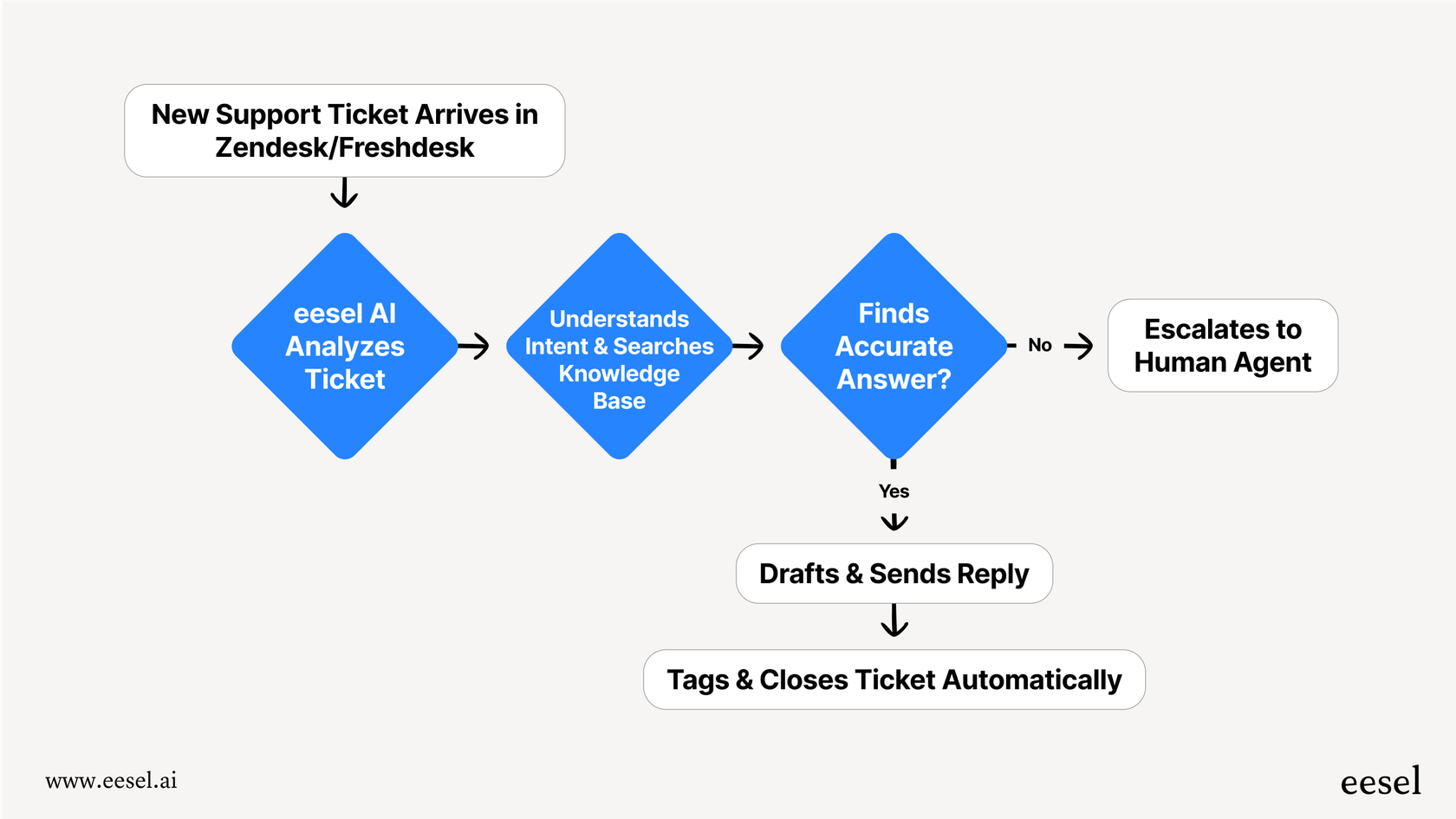

The most successful AI agent rollouts you see today are focused on high-volume, structured processes where the rules are clear and the results are easy to measure. A perfect example is customer support or an internal IT helpdesk. Every single day, these teams handle thousands of similar requests: "I need to reset my password," "Where is my order?" or "How do I fix this common issue?"

These are the ideal environments for AI automation. The problems are well-defined, the answers are usually already in a knowledge base or past support tickets, and you can easily measure success with things like how quickly issues are resolved and how happy customers are. This is where AI agents can deliver huge, tangible returns right now.

The need for control and simulation

Devin's "black box" approach, where you give it a command and cross your fingers, makes for a cool demo but is a bit terrifying for a real business. When you're dealing with live customers or business-critical systems, you simply can't afford an 86% failure rate. You need reliability, oversight, and complete control.

This is where a platform like eesel AI offers a much more practical way to get started with automation. It’s designed from the ground up to give businesses the tools they need to deploy AI agents safely and effectively.

- Go live in minutes, not months: Access to Devin is still very limited and secretive. In contrast, eesel AI is completely self-serve. You can connect it to your helpdesk, like Zendesk or Freshdesk, and all your knowledge sources in just a few clicks. You don't have to sit through mandatory sales calls or long onboarding.

- Test without the risk: One of the best things about eesel AI is its powerful simulation mode. Before your AI agent talks to a single real person, you can run it on thousands of your past support tickets. This gives you a clear, accurate prediction of how it will perform and lets you tweak its behavior in a totally safe environment.

- You're in the driver's seat: You don't just get one unpredictable agent. Instead, eesel AI gives you a fully customizable workflow builder. You get to decide exactly which kinds of tickets the AI handles, what its personality and tone should be, and what specific actions it's allowed to take, whether that's escalating a ticket to a human agent or looking up order info in your Shopify store.
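
To give a feel for the general idea behind testing on past tickets and scoping what an agent may handle, here is a small, purely hypothetical Python sketch. It is not eesel AI's API or implementation: `Ticket`, `ALLOWED_CATEGORIES`, `toy_agent`, and `simulate` are all invented for this example, the "agent" is a lookup table of canned replies, and correctness is judged by a crude exact-match against what the human agent originally wrote.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Ticket:
    category: str
    question: str
    resolved_answer: str  # the reply a human agent actually sent


# Hypothetical scoping rule: the AI may only answer these ticket categories.
ALLOWED_CATEGORIES = {"password_reset", "order_status"}

# Hypothetical canned replies standing in for a real model.
CANNED_REPLIES = {
    "password_reset": "Use the reset link on the login page.",
    "order_status": "You can track your order from your account page.",
}


def toy_agent(ticket: Ticket) -> Optional[str]:
    """Return a reply, or None to escalate the ticket to a human."""
    if ticket.category not in ALLOWED_CATEGORIES:
        return None
    return CANNED_REPLIES[ticket.category]


def simulate(tickets: list[Ticket], agent: Callable[[Ticket], Optional[str]]) -> dict[str, int]:
    """Replay past tickets through the agent without contacting any customer."""
    handled = correct = 0
    for ticket in tickets:
        answer = agent(ticket)
        if answer is None:
            continue  # escalated, so a human would take over
        handled += 1
        if answer == ticket.resolved_answer:  # crude exact-match check
            correct += 1
    return {
        "total": len(tickets),
        "handled": handled,
        "escalated": len(tickets) - handled,
        "correct": correct,
    }


sample_tickets = [
    Ticket("password_reset", "I can't log in", "Use the reset link on the login page."),
    Ticket("order_status", "Where is my order?", "You can track your order from your account page."),
    Ticket("order_status", "My parcel is lost", "We have opened a claim with the courier."),
    Ticket("billing", "I was charged twice", "We have refunded the duplicate charge."),
]

report = simulate(sample_tickets, toy_agent)
print(report)
```

The point of the design is that the report is produced entirely from historical data, so you can widen or narrow `ALLOWED_CATEGORIES` and rerun it before anything reaches a live customer. A real evaluation would need a far better correctness check than string equality.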

Devin's price tag: What we know

As of right now, Cognition AI hasn't released any public pricing for Devin. This is pretty standard for new, high-end AI tools aimed at big companies. It likely means that getting access involves a lengthy sales process, with contracts that could start in the tens of thousands of dollars per year, if not more.

For most businesses, that kind of model just isn't practical. You need pricing that's transparent and predictable, allowing you to start small, prove that it's worth it, and scale up without being locked into a massive contract or getting hit with surprise charges.

Is Devin the future of software engineering?

So, what's the final verdict on Devin? It's undeniably a remarkable piece of technology. It marks a real step forward in AI's ability to handle complex, multi-step tasks and gives us an exciting peek into a future where autonomous agents are a key part of our work.

But, as the Cognition AI reviews and critical analyses have shown, the reality on the ground is a bit more complicated. Devin is an impressive tool, but it's not the autonomous replacement for human developers that it was initially made out to be. For businesses that want to get real, concrete results from AI today, the focus probably shouldn't be on the futuristic moonshot. It should be on practical, controllable, and reliable automation for the tasks that are begging for it.

Your next step: Automate workflows you can control

If you're ready to move past the hype and start using an AI agent that puts you in complete control, take a look at how eesel AI can start automating your customer support or internal helpdesk workflows in just a few minutes.

Frequently asked questions

The overall sentiment from the Cognition AI reviews is mixed. While there's excitement about its potential as a "first AI software engineer," many reviews highlight a significant gap between the initial demos and its real-world performance, seeing it as a powerful tool with limitations.

No, many detailed analyses in the Cognition AI reviews suggest the demos were heavily curated and edited. Critics noted that tasks might have been hand-picked, debugging could be misrepresented, and timelines compressed, indicating a "highlight reel" rather than typical performance.

The Cognition AI reviews acknowledge Devin's 13.86% success rate on SWE-bench as a significant technical leap over previous models. However, they also point out that this still translates to an 86% failure rate, highlighting its struggles with real-world ambiguity and context.

Most Cognition AI reviews conclude that Devin is more akin to a "super-advanced intern" rather than an autonomous senior engineer. It requires human supervision, clear instructions, and intervention when it encounters complex, undefined problems.

Based on the Cognition AI reviews, Cognition AI has not released public pricing for Devin or made it widely available. It's generally understood to be a high-end tool likely requiring bespoke contracts and a lengthy sales process, probably starting in the tens of thousands of dollars annually.

The Cognition AI reviews imply that Devin is best suited for specific, clearly defined tasks with unambiguous instructions. It excels when the scope is narrow, and the required actions are well-structured, but struggles with the vague requests common in real-world engineering.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.