Let's be honest, generative AI feels a bit like magic. You type in a prompt, and a few seconds later, you've got an email, a chunk of code, or even a whole website. It's seamless. But that "effortless" feeling is hiding a pretty big secret: the machine behind the curtain is incredibly power-hungry, and its appetite is growing like crazy.

With the release of newer models like GPT-5 on the horizon, researchers are starting to sound the alarm about just how much energy these things consume. This isn't just an abstract environmental problem; it's quickly becoming a real business issue. The hidden costs of running these massive, do-it-all models are getting too big to ignore.

In this article, we’ll pull back the curtain on the "what" and "why" of AI's energy demands. We'll look at the true environmental impact and show you a smarter way for your business to adopt AI that's both sustainable and way more effective.

What is ChatGPT energy use?

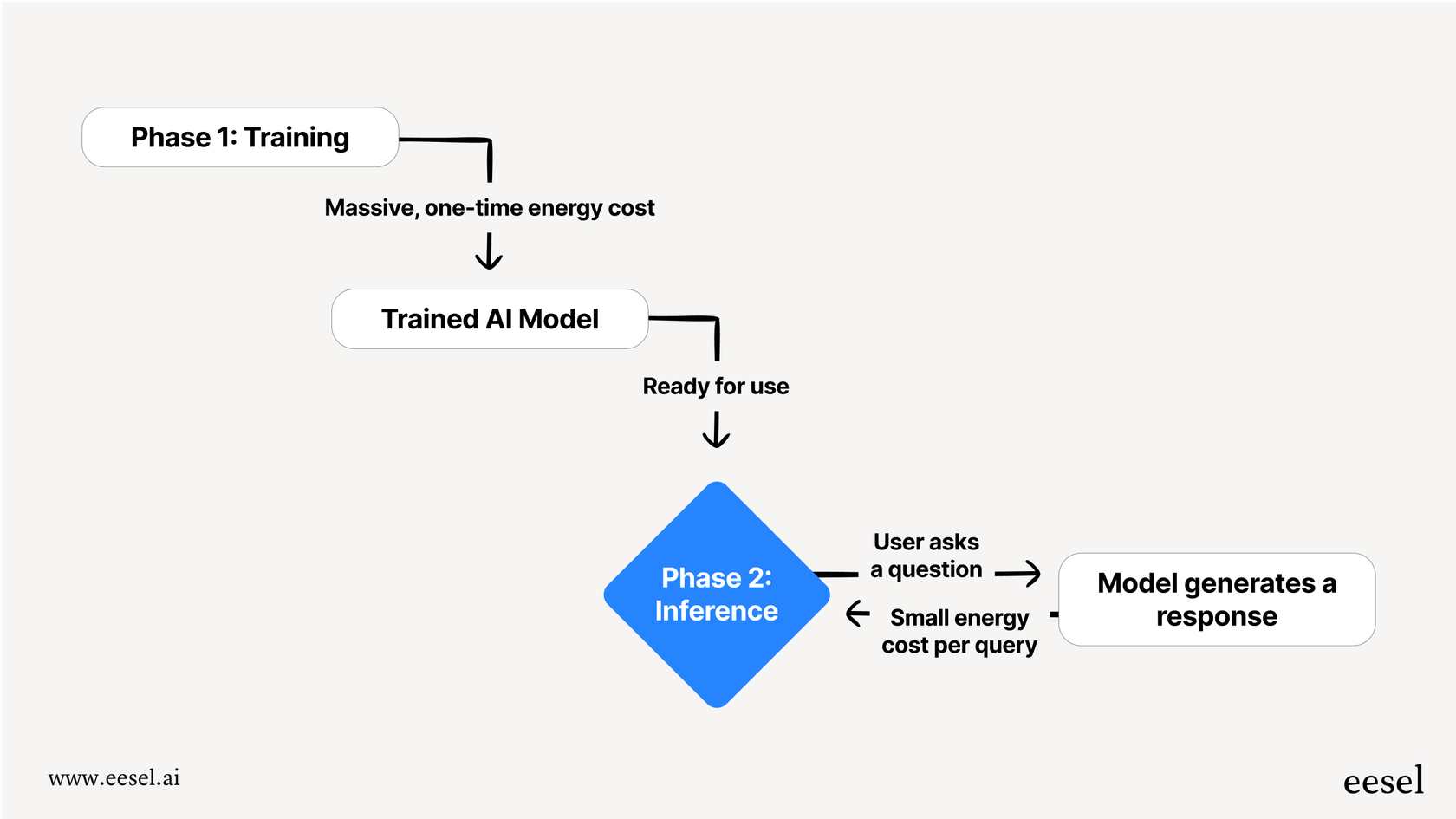

When we talk about an AI guzzling energy, it’s not just one thing. The process is broken down into two main phases: training and inference. The easiest way to think about it is like an AI going to school, and then an AI actually going to work.

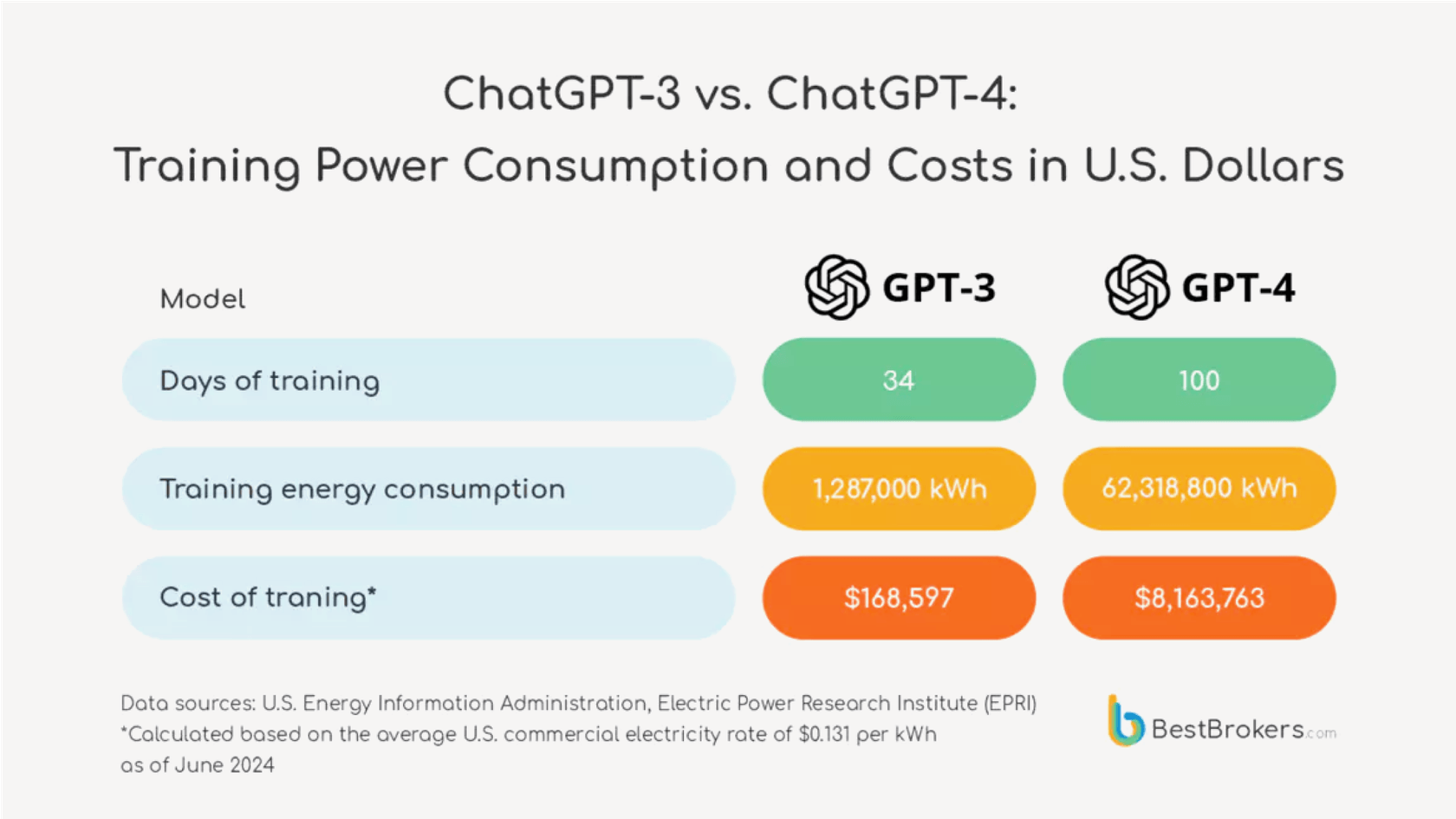

Training: This is the AI's "schooling" phase, and it's incredibly intense. It involves feeding a model a colossal amount of data, often a huge slice of the internet, so it can learn patterns, language, and concepts. This takes a massive amount of computing power running 24/7 for weeks or even months. It's a huge up-front energy cost, and it has to be paid again for every new version of a model.

Inference: This is what happens when you actually use the AI, like asking ChatGPT a question. The model takes what it learned during training to "infer" the best answer. While a single query uses a tiny fraction of the energy needed for training, it’s the sheer volume that’s mind-boggling. For a tool handling billions of requests a day, the total energy spent on inference over its lifetime can easily blow past the initial training cost.
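
To get a feel for how quickly inference can overtake training, here is a rough back-of-the-envelope sketch. The per-query and daily-volume figures are the ones quoted in this article. The training figure is purely an illustrative assumption (about 1,300 MWh, in the range often cited for GPT-3-scale models) and is not an official number for any current model.

```python
# Illustrative sketch only. TRAINING_ENERGY_WH is an assumed value,
# not a disclosed figure for any ChatGPT model.
TRAINING_ENERGY_WH = 1.3e9    # assumption: ~1,300 MWh to train one model
ENERGY_PER_QUERY_WH = 2.0     # per-query estimate quoted in this article
QUERIES_PER_DAY = 2.5e9       # reported daily request volume quoted in this article


def break_even_queries(training_wh: float, per_query_wh: float) -> float:
    """Queries after which cumulative inference energy equals training energy."""
    return training_wh / per_query_wh


queries = break_even_queries(TRAINING_ENERGY_WH, ENERGY_PER_QUERY_WH)
days = queries / QUERIES_PER_DAY
print(f"Break-even after {queries:,.0f} queries (~{days:.2f} days at this volume)")
```

Under these assumptions, inference passes the training cost in well under a day. Change the inputs and the break-even point moves, but the overall shape of the argument stays the same.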

A closer look at the surprising ChatGPT energy use

The jump in power demand from one model generation to the next isn't a small step up; it's a giant leap.

Let's put some real numbers to this. In mid-2023, asking a model like ChatGPT for a simple recipe might have used around 2 watt-hours of electricity. Now, fast forward to the upcoming GPT-5. Researchers at the University of Rhode Island’s AI lab estimate the new model could use an average of 18 watt-hours for a medium-length response, and sometimes as much as 40 watt-hours.

That’s roughly a ninefold increase on average, and as much as twenty times at the high end. It suggests that each new generation of these do-everything models is becoming dramatically more power-hungry.

To give you a bit of perspective, 18 watt-hours is enough to power an old-school 60-watt incandescent light bulb for 18 minutes. Now, think about the bigger picture. ChatGPT reportedly handles around 2.5 billion requests a day. If all of those were handled by GPT-5 at that average, the total would come to about 45 gigawatt-hours a day, roughly the daily electricity use of 1.5 million US homes. Suddenly, that "effortless" AI response doesn't seem so free, does it?
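
If you want to check that arithmetic yourself, here is a small sketch. The 18 Wh and 2.5 billion figures come from the estimates above. The 60-watt bulb and the 30 kWh per day for an average US home are assumptions added here for illustration, so treat the output as a ballpark.

```python
WH_PER_RESPONSE = 18.0      # URI estimate for a medium-length GPT-5 response
REQUESTS_PER_DAY = 2.5e9    # reported ChatGPT daily request volume
BULB_WATTS = 60.0           # assumption: a typical incandescent bulb
HOME_KWH_PER_DAY = 30.0     # assumption: rough average US household use

# Minutes a bulb could run on the energy of one response.
bulb_minutes = WH_PER_RESPONSE * 60 / BULB_WATTS

# Total daily energy if every request cost the average (Wh -> GWh).
daily_gwh = WH_PER_RESPONSE * REQUESTS_PER_DAY / 1e9

# Number of homes that energy would cover for a day (GWh -> kWh).
homes = daily_gwh * 1e6 / HOME_KWH_PER_DAY

print(f"One response ~ {bulb_minutes:.0f} bulb-minutes")
print(f"Daily total  ~ {daily_gwh:.0f} GWh, or about {homes:,.0f} homes")
```

Swapping in a different per-response estimate (say, the 40 Wh upper bound) is a one-line change, which makes it easy to see how sensitive the headline number is to the underlying guess.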

| Model Version | Average Energy per Query (Watt-hours) | Real-World Equivalent |
|---|---|---|
| GPT-4 Era (2023) | ~2 Wh | Running an incandescent bulb for 2 minutes |
| GPT-4o | ~0.34 Wh (Altman's figure) | A high-efficiency lightbulb for a few minutes |
| GPT-5 (Estimated) | ~18 Wh | Running an incandescent bulb for 18 minutes |
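
To see how far apart these estimates really are, the sketch below compares each figure in the table with the GPT-5 estimate. The numbers are the table's own. The dictionary and function names are just illustrative, and since the estimates come from different sources with different methods, the ratios are only a rough guide.

```python
# Per-query estimates from the table, in watt-hours.
estimates_wh = {
    "GPT-4 era (2023)": 2.0,
    "GPT-4o (Altman's figure)": 0.34,
    "GPT-5 (estimated)": 18.0,
}


def times_more(newer_wh: float, older_wh: float) -> float:
    """How many times more energy the newer estimate is than the older one."""
    return newer_wh / older_wh


gpt5_wh = estimates_wh["GPT-5 (estimated)"]
for name, wh in estimates_wh.items():
    ratio = times_more(gpt5_wh, wh)
    print(f"{name}: {wh} Wh (GPT-5 estimate is {ratio:.0f}x this)")
```

The gap between the 2023-era estimate and the GPT-5 estimate is about ninefold, while the gap to Altman's unverified figure is closer to fiftyfold. That spread is a good reminder of how uncertain all of these numbers are.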

Secrecy and hidden costs of ChatGPT energy use

Trying to figure out the real environmental cost of AI is a surprisingly murky business, mainly because the companies building these models are incredibly secretive about how many resources they use.

Where is the official data on ChatGPT energy use?

OpenAI and its competitors haven't released official, model-specific energy figures since GPT-3 came out in 2020. OpenAI's CEO, Sam Altman, did share on his blog that a query uses about 0.34 watt-hours, but that figure came without any context about which model he was talking about or any data to back it up, making it pretty much impossible to verify.

This lack of transparency is a big problem. As one climate expert pointed out, "It blows my mind that you can buy a car and know how many miles per gallon it consumes, yet we use all these AI tools every day and we have absolutely no efficiency metrics." Without clear data, it's hard for any business to make an informed decision about the true cost of the tools they're using.

ChatGPT energy use: It's about more than just electricity

The environmental price tag goes way beyond the electricity bill. There are a few other hidden costs that don't get talked about nearly enough.

- It’s thirsty work: Data centers get incredibly hot, and cooling them down requires a shocking amount of fresh water. Just training GPT-3 was estimated to have used over 700,000 liters of water. As models get bigger, their thirst for water grows, putting a real strain on local resources.

- Hardware and e-waste: Generative AI runs on specialized, power-hungry processors (GPUs). Making these chips has its own environmental footprint, from mining raw materials to the energy used to build them. The constant race for more powerful hardware also means older chips get tossed aside faster, adding to a huge e-waste problem.

- Built to be replaced: The AI world moves at lightning speed. New models are released every few months, making the old ones obsolete almost overnight. When a model is replaced that quickly, much of the massive energy that went into training it is essentially wasted.

A smarter way forward: Efficiency beyond high ChatGPT energy use

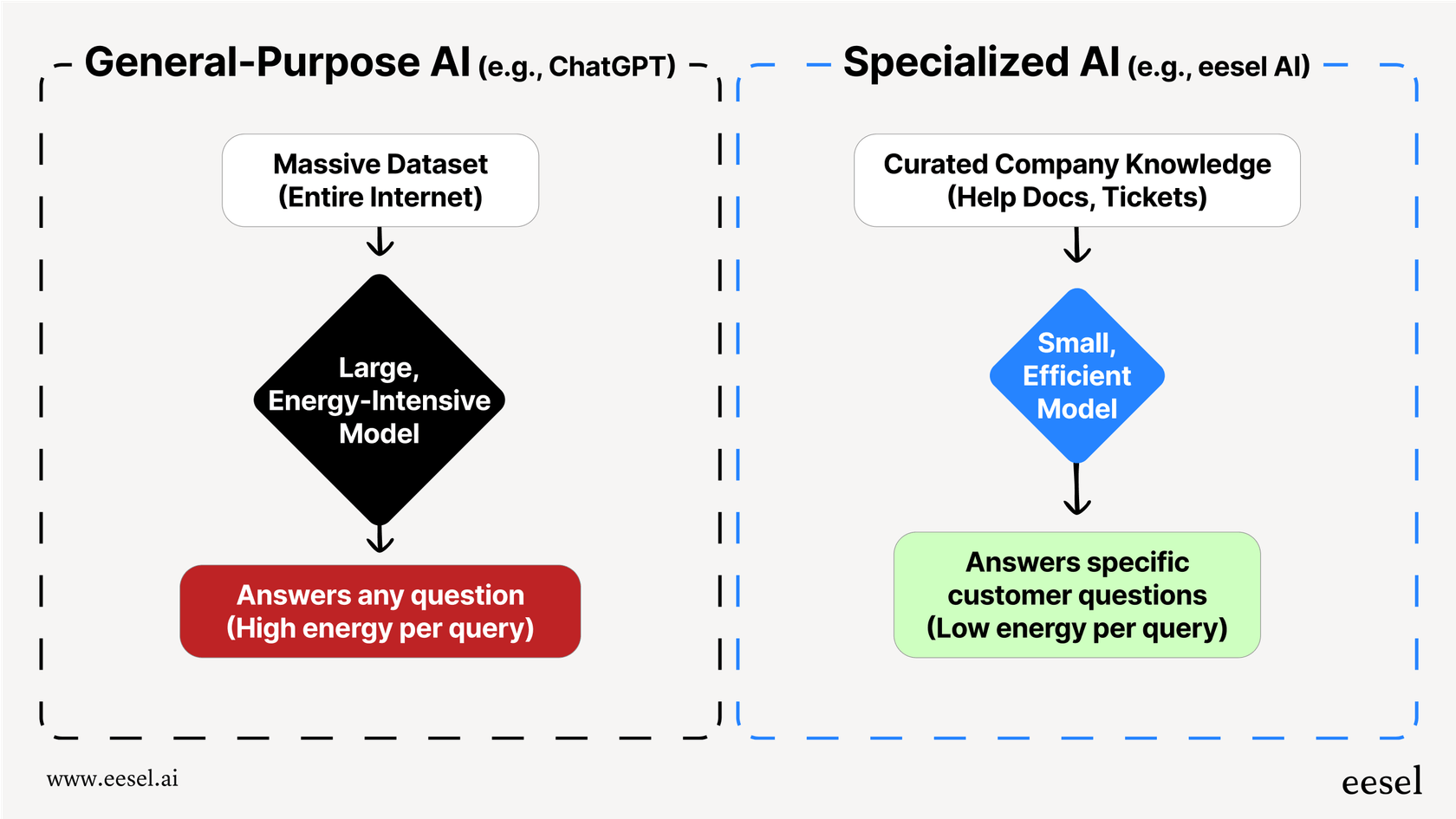

The current strategy of just building bigger and bigger general-purpose AI models is a brute-force approach. It isn't sustainable, and for most businesses, it isn't even the best tool for the job.

The issue is that models like ChatGPT are designed to know a little bit about everything, from Shakespearean sonnets to quantum physics. To do that, they have to be enormous, which makes them incredibly energy-intensive. But if you just need to answer a customer's question about your shipping policy, using a massive general AI is complete overkill. It's like using a supercomputer to do basic math: it’s just not efficient.

There's a much smarter way to do this: using smaller, specialized AI models that are trained only on the data they need to do their job. This approach is much more efficient by design. Training an AI on a curated set of your company's help articles, past support tickets, and product docs takes a tiny fraction of the energy needed to train it on the entire internet.

The result is not just a more sustainable AI; it's a better one. A specialized AI gives answers that are more accurate, consistent, and perfectly on-brand because it isn't getting distracted by irrelevant information from public websites.

This is the whole idea behind what we do at eesel AI. Instead of asking you to rip out your existing systems and replace them with a single, power-hungry AI, eesel plugs directly into your help desk and knowledge sources. It learns from your content in the tools you already use, like Zendesk, Confluence, and Google Docs, to create an efficient and accurate AI support agent. This lets you skip the massive energy drain and operational headaches of a general-purpose model while giving better, more relevant answers to your customers.

Your AI strategy and the impact of ChatGPT energy use

The energy consumption of large AI models isn't just a topic for researchers anymore; it's a real business issue. With each new generation, models like GPT-5 are getting dramatically more power-hungry, and their true cost is hidden behind a wall of secrecy and includes a lot more than just electricity.

For most business needs, especially in customer service or internal support, using a giant, general-purpose AI isn't the most effective or responsible choice. It’s inefficient, expensive, and often gives less accurate answers for your specific use case.

A specialized, integrated approach is a much more sustainable, cost-effective, and precise alternative. By training an AI on your own knowledge, you can build a system that's both powerful and efficient.

If you’re ready to build a powerful and efficient AI support system without the massive energy bill, see how eesel AI works with your existing tools to deliver accurate, on-brand answers. Start a free trial or book a demo today.

Frequently asked questions

The high energy demand signals inefficiency. Using a massive, general-purpose model for a specific business task is like using a supercomputer for simple math: it's overkill and often leads to less accurate, slower results for your specific needs.

It's much more than just electricity. The total environmental footprint includes massive water consumption for cooling data centers, the resources needed to manufacture specialized hardware, and a growing e-waste problem as older technology is quickly replaced.

Yes, the increase is significant. Estimates suggest newer models can be dramatically more power-hungry, with some queries using nearly ten times the electricity of previous versions. This trend of "bigger is better" is not sustainable.

Major AI companies are very secretive about their resource consumption, treating it like a trade secret. This lack of transparency makes it difficult for businesses and consumers to understand the true environmental and financial costs of the tools they use.

Absolutely. A specialized model is trained only on your relevant company data, which requires a tiny fraction of the energy. This results in a much more efficient system that is not only sustainable but also provides more accurate and relevant answers for your specific use case.

While training is incredibly energy-intensive, the sheer volume of daily queries (inference) can easily surpass the initial training cost over the model's lifetime. For a tool handling billions of requests a day, the cumulative energy spent on inference becomes the dominant factor.

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.