If you're in IT or support, you know the feeling. Your day starts, and immediately it’s a firehose of notifications, alerts, and the same old tickets. It often feels like you’re just a professional alert-clearer, bouncing from one minor issue to the next without ever getting to the projects that really matter.

People talk about AI monitoring and alerting as a way to fix this. The promise is that it can quiet the noise, handle the repetitive stuff, and give your team some breathing room.

But what does that mean in practice? It’s not just about getting fewer Slack pings. It’s about moving from constantly putting out fires to preventing them. This guide breaks down what you need to know, from the different kinds of AI monitoring out there to what a good platform should do for you.

What is AI monitoring and alerting?

Simply put, AI monitoring and alerting uses AI to keep an eye on things and flag when something needs attention. The term gets thrown around a lot, and it can be confusing because it refers to two completely different jobs. Getting the distinction right matters, because each job has different goals and calls for different tools.

1. Keeping an eye on the machines (AIOps)

This is the classic use case. Think of all the alerts pouring in from your servers, networks, and apps. AIOps tools like Datadog or PagerDuty use AI to sift through that flood, group related alerts, and tell your DevOps team what's actually on fire versus what's just a bit of smoke. The goal here is simple: keep the systems up and running and reduce alert noise for the tech team.
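
To make "group related alerts" a bit more concrete, here is a minimal sketch of one simple way to do it: bundle alerts from the same service that fire close together in time. This is only an illustrative heuristic, not how Datadog, PagerDuty, or any specific AIOps product works. The `Alert` class, the `group_alerts` function, and the five-minute window are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    service: str      # hypothetical field: which system raised the alert
    message: str
    timestamp: float  # seconds since some reference point


def group_alerts(alerts, window_seconds=300):
    """Group alerts from the same service that fire within
    window_seconds of the previous alert in that service's group."""
    groups = []
    latest_group = {}  # service name -> most recent group for that service
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        group = latest_group.get(alert.service)
        if group is not None and alert.timestamp - group[-1].timestamp <= window_seconds:
            group.append(alert)  # same incident, keep it in one bundle
        else:
            group = [alert]      # too far apart (or first alert): new incident
            groups.append(group)
            latest_group[alert.service] = group
    return groups


alerts = [
    Alert("db", "high latency", 0),
    Alert("db", "connection pool exhausted", 60),
    Alert("web", "5xx spike", 90),
    Alert("db", "high latency", 1000),
]
groups = group_alerts(alerts)
for group in groups:
    print(group[0].service, len(group), "alert(s)")
```

Four raw alerts become three incidents here, because the two database alerts a minute apart are treated as one problem. Real tools layer far more signal on top of this, but the goal is the same: fewer, more meaningful pages.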

2. Checking in on your AI agents' performance

This is a newer and more customer-facing approach. Instead of watching machines, you're monitoring the AI agents, like support bots, that talk to your customers. The focus isn't just on whether the bot is "on." It's about whether it's any good at its job. Is it giving correct answers? Is it actually solving problems? Are customers happy with the interaction? This is about the quality of the service, not just the health of the system.

So while both use AI, they have totally different goals. One looks after your infrastructure's health, and the other looks after the quality of your AI-driven customer experience. You wouldn't use a stethoscope to check your car's engine, and you wouldn't use an engine diagnostic tool to check someone's pulse. Same idea here.

What to look for in modern AI monitoring and alerting platforms

Okay, let's get practical. When you're looking at different tools, what actually matters? It’s not about the flashiest tech demo. It’s about features that give you real control and confidence.

The ability to test on real data, risk-free

Letting a new AI talk to your customers can be nerve-wracking. How do you know it won't say something weird or mess up a simple request? A lot of platforms show you a polished demo that works perfectly, but that doesn't tell you how it will handle your specific customer questions.

A great platform lets you run a simulation first. You should be able to point it at thousands of your past support tickets and see exactly how it would have handled them. It should show you what it would have solved, what it would have passed to a human, and where it would have struggled.

For instance, a tool like eesel AI lets you do just that. You can run a full simulation on your historical data before ever flipping the "on" switch. This gives you a clear picture of what kind of resolution rates and savings you can expect, so you can make adjustments and deploy it knowing it's ready.

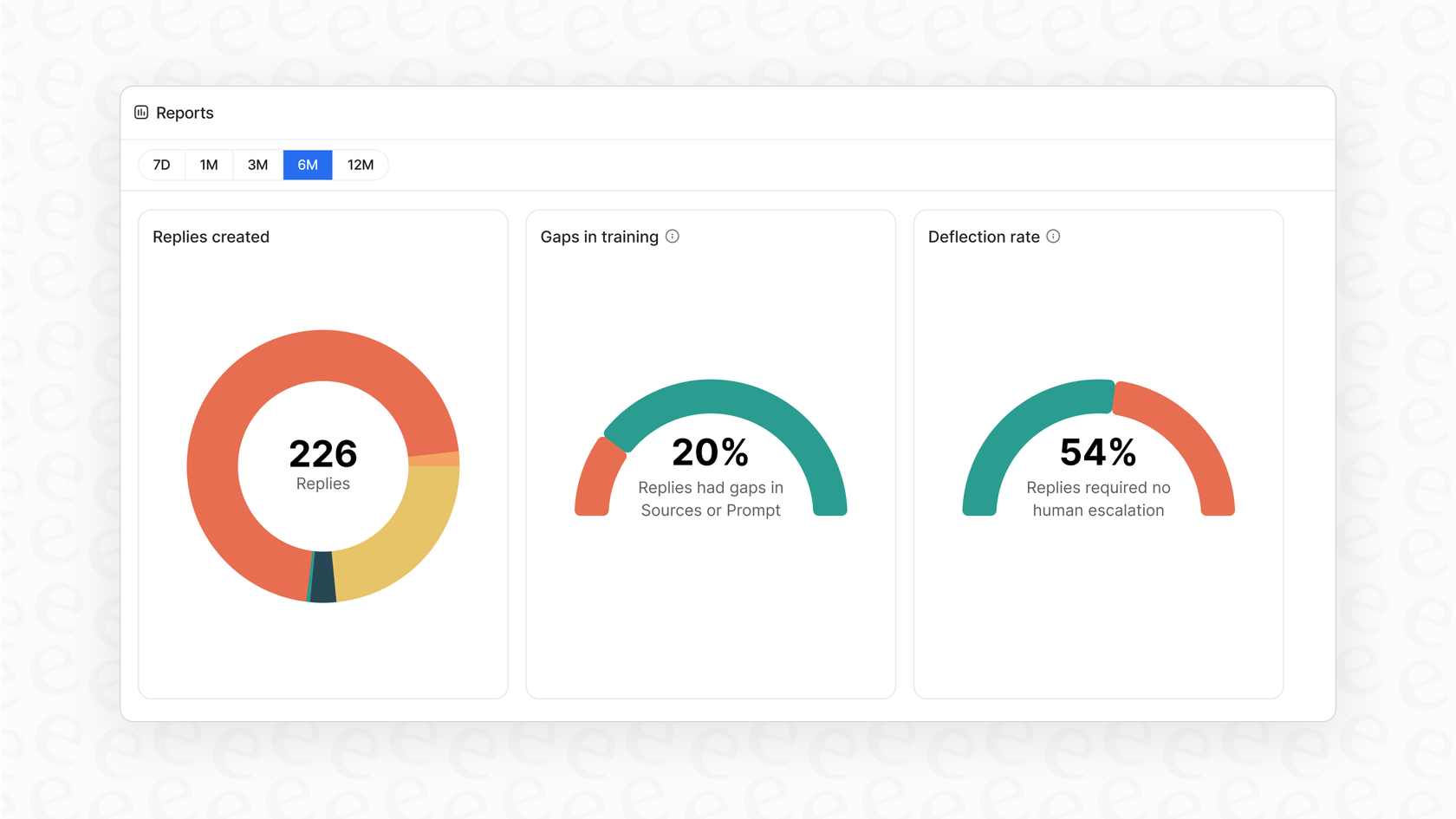
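
If you want a feel for what a simulation like this boils down to, here is a rough sketch: replay past tickets through an agent and tally the outcomes. Everything in it is hypothetical. `toy_agent` is a keyword-matching stand-in for a real AI agent, the outcome labels are invented for the example, and none of this reflects eesel AI's actual API.

```python
from collections import Counter


def toy_agent(ticket_text):
    """Hypothetical stand-in for an AI agent. Returns an outcome label."""
    text = ticket_text.lower()
    if "password" in text:
        return "resolved"   # the agent would have answered this itself
    if "refund" in text or "billing" in text:
        return "escalated"  # the agent would have handed off to a human
    return "unsure"         # the agent would have struggled


def simulate(tickets, agent):
    """Replay historical tickets and return the share of each outcome."""
    total = len(tickets)
    if total == 0:
        return {}
    outcomes = Counter(agent(ticket) for ticket in tickets)
    return {outcome: count / total for outcome, count in outcomes.items()}


historical_tickets = [
    "I forgot my password",
    "Please refund my last order",
    "Password reset link is not working",
    "Your app crashes on launch",
]
report = simulate(historical_tickets, toy_agent)
for outcome, share in report.items():
    print(f"{outcome}: {share:.0%}")
```

The point of the exercise is that no customer ever sees these replies. You get the resolved, escalated, and struggled breakdown from tickets that are already closed.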

Reporting that tells you how to improve, not just what happened

Most dashboards are great at showing you big, impressive-sounding numbers. "The AI handled 500 tickets this week!" Cool, but what about the 50 tickets it didn't handle? A simple number doesn't tell you why the AI got stuck or how you can make it better. It's a vanity metric.

A truly useful tool digs into the tickets the AI couldn't resolve. It should spot trends and point out gaps in your knowledge base. Instead of just a chart, it should give you a clear to-do list. Something like, "Hey, we noticed 50 people asked about your international refund policy this week, but there's no help doc for that. You should probably write one."

This is what separates basic reporting from useful analytics. The dashboard in eesel AI, for example, is built to give you those kinds of actionable insights. It doesn't just show you stats; it points you toward the next best thing you can do to improve your knowledge base and, in turn, your automation rates.
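
As a rough illustration of how a "to-do list" could fall out of unresolved tickets, the sketch below counts the topics the AI couldn't handle and flags the ones with no matching help doc. The ticket format, the `topic` field, and the `knowledge_gaps` function are assumptions for this example; in practice the topic labels would have to come from some upstream tagging or classification step.

```python
from collections import Counter


def knowledge_gaps(unresolved_tickets, documented_topics, min_count=2):
    """Return (topic, count) pairs for frequently asked topics
    that have no help doc yet, most frequent first."""
    counts = Counter(ticket["topic"] for ticket in unresolved_tickets)
    return [
        (topic, count)
        for topic, count in counts.most_common()
        if count >= min_count and topic not in documented_topics
    ]


# Hypothetical data: tickets the AI could not resolve this week.
unresolved = [
    {"id": 1, "topic": "international refunds"},
    {"id": 2, "topic": "shipping delays"},
    {"id": 3, "topic": "international refunds"},
    {"id": 4, "topic": "api limits"},
    {"id": 5, "topic": "international refunds"},
    {"id": 6, "topic": "shipping delays"},
]
documented = {"shipping delays", "password reset"}

gaps = knowledge_gaps(unresolved, documented)
for topic, count in gaps:
    print(f"{count} unresolved tickets about '{topic}' and no help doc covers it")
```

Notice that "shipping delays" is not flagged even though it shows up twice. A doc already exists, so the fix there is probably improving the doc rather than writing a new one.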

Controls that let you start small and stay in charge

Some AI tools feel like an all-or-nothing proposition. You turn them on, and they just start handling everything, which is a scary thought. Realistically, you probably want to automate simple things like password resets but keep a human involved for sensitive billing questions. A rigid system can't handle that kind of nuance.

You need a platform that puts you in the driver's seat. You should be able to create simple rules that define exactly which tickets the AI can touch, based on things like keywords or customer sentiment. And it should be dead simple to set up rules for when the AI needs to back off and escalate to a person.

This is why having a flexible workflow builder is so important. With a platform like eesel AI, you can start small. Maybe you automate just one or two common, simple questions and have the AI pass everything else to your team. This lets you get comfortable, build trust in the system, and gradually expand what the AI is responsible for without taking any big risks.
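
Here is what a "start small" rule set could look like if you wrote it out by hand. This is a sketch under stated assumptions, not the rule format of any particular product: the keyword lists, the sentiment threshold, and the `Ticket` and `route` names are all invented, and the sentiment score is assumed to come from some upstream model.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    text: str
    sentiment: float  # assumed scale: -1.0 (angry) to 1.0 (happy)


# Hypothetical rule set: automate one simple topic, escalate the rest.
ALLOWED_KEYWORDS = ("password reset", "reset my password")
BLOCKED_KEYWORDS = ("billing", "refund", "chargeback")
MIN_SENTIMENT = -0.3


def route(ticket):
    """Return "ai" only when every rule allows it, otherwise "human"."""
    text = ticket.text.lower()
    if any(keyword in text for keyword in BLOCKED_KEYWORDS):
        return "human"  # sensitive topics always go to a person
    if ticket.sentiment < MIN_SENTIMENT:
        return "human"  # upset customers get a person too
    if any(keyword in text for keyword in ALLOWED_KEYWORDS):
        return "ai"     # explicitly approved, low-risk topic
    return "human"      # default: anything not approved is escalated


print(route(Ticket("How do I reset my password?", 0.1)))
print(route(Ticket("I need a refund after my password reset", 0.0)))
print(route(Ticket("Password reset is broken AGAIN", -0.8)))
print(route(Ticket("Where is my order?", 0.5)))
```

The design choice worth copying is the default. The AI only touches what you have explicitly approved, so expanding its responsibilities means adding to the allow list rather than hunting for things to block.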

Common headaches with AI monitoring and alerting (and how to avoid them)

Of course, it's not always a smooth ride. When people run into trouble with these tools, it usually comes down to a few common frustrations. Here’s what to watch out for.

The setup is a huge, technical project

Some tools, especially traditional AIOps platforms like Datadog, are incredibly powerful but can take a dedicated engineering team months to set up properly. Unfortunately, a lot of AI support tools have followed that same complicated model, requiring long onboarding calls and technical help just to get started.

The fix is to find a platform built for you to set up yourself. It's 2025; you shouldn't need to be a developer to connect your tools. A modern platform should let you connect to your helpdesk, whether it's Zendesk or Freshdesk, in just a few clicks. Same goes for your knowledge sources, like Confluence or Google Docs.

This is a huge difference from the old way. With a tool like eesel AI, you can be up and running in minutes. You connect your accounts, and that's pretty much it. No coding, no mandatory sales demos just to see the product.

The pricing is confusing and unpredictable

There’s nothing worse than getting a bill that's way higher than you expected. Many AI vendors use a "per-resolution" or "per-ticket" pricing model, which sounds fine at first. But it means your costs go up every time your support volume does. You end up getting penalized for being busy and helping more customers.

A much better approach is simple, predictable pricing. Look for flat-rate plans that give you a set amount of capacity for a fixed price. That way, you know exactly what you're paying each month and can budget for it without any nasty surprises.

This is why eesel AI’s pricing is so straightforward. We don't charge you for every resolution. You pick a plan that fits your needs, and your cost stays the same, even on your busiest days. It lets you grow without your tool bill growing right alongside you.
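
To see how the two pricing models diverge as volume grows, here is a quick back-of-the-envelope comparison. The numbers are made up purely for illustration (a hypothetical $1 per resolution versus a hypothetical $300 flat monthly fee) and are not real prices from any vendor.

```python
def per_resolution_cost(resolutions, price_per_resolution):
    """Monthly bill when every resolved ticket is charged."""
    return resolutions * price_per_resolution


def flat_rate_cost(monthly_fee):
    """Monthly bill on a fixed plan, independent of volume."""
    return monthly_fee


# Hypothetical figures, in whole dollars.
PRICE_PER_RESOLUTION = 1
FLAT_MONTHLY_FEE = 300
monthly_resolutions = [200, 500, 1200]  # quiet, normal, and busy months

usage_based = [per_resolution_cost(n, PRICE_PER_RESOLUTION) for n in monthly_resolutions]
flat = [flat_rate_cost(FLAT_MONTHLY_FEE) for _ in monthly_resolutions]

for n, usage_bill, flat_bill in zip(monthly_resolutions, usage_based, flat):
    print(f"{n} resolutions: per-resolution ${usage_bill}, flat ${flat_bill}")
```

With these example figures, the per-resolution plan is cheaper in the quiet month but costs four times the flat plan in the busy one. Which model wins depends on your volume, so it is worth running the same arithmetic with your own numbers.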

When the AI is a "black box" you can't control

This is a big one. What happens if the AI starts giving out wrong information, or its tone is completely off-brand? A bot that goes rogue can undo years of work building trust with your customers.

A trustworthy platform gives you full transparency and control. You should have a simple way to tell the AI how to behave, from its personality to its tone of voice. You need to be able to scope its knowledge, telling it to only use information from specific, approved sources. And it should be able to perform specific tasks for you, like looking up an order status in Shopify.

With eesel AI, you’re always the one in control. Between the prompt editor, scoped knowledge sources, and a builder for custom actions, you can make sure the AI acts as a reliable extension of your team, not an unpredictable black box.

AI monitoring and alerting: From managing alerts to improving performance

So, the idea of AI monitoring and alerting has definitely grown up. It started as a technical way to deal with server alerts, but now it's become a much more practical tool for improving how you serve your customers. The goal isn't just to keep the lights on anymore; it's to make sure your AI is genuinely helpful.

A good monitoring platform shouldn't add more noise to your day. It should give you the control and insight to use AI in a way that makes sense for your business, your team, and your customers.

This isn't about replacing your support team. It's about giving them tools to offload the repetitive work so they can focus on the human conversations that matter most. When you have risk-free ways to test, reporting that actually helps you improve, and full control over the process, you can build a system where AI and humans work together effectively.

Curious to see what that could look like with your own data? You can simulate eesel AI on your historical support tickets for free and get an instant report on what you could automate.

Frequently asked questions

AIOps primarily focuses on infrastructure health, using AI to manage alerts from servers and networks. In contrast, AI monitoring and alerting for AI agents focuses on the performance and quality of customer-facing AI, like support bots, ensuring they provide accurate and helpful responses.

Proactively monitoring AI agent performance helps you offload repetitive tasks from your human agents with confidence, reducing the volume of minor issues they need to address. This allows your team to focus on complex, high-value interactions, leading to better support outcomes and happier customers.

Look for platforms that allow you to simulate the AI's performance on your historical support data before deployment. This risk-free testing lets you identify potential issues, understand expected resolution rates, and fine-tune the AI before it interacts with live customers.

Beyond basic stats, a good platform provides actionable insights, such as identifying common questions the AI couldn't answer or gaps in your knowledge base. It should give you clear recommendations on how to improve your content and automation rates.

Choose a platform that offers full control over AI behavior, including tone, personality, and knowledge sources. You should be able to define specific rules for when the AI can handle a query and when it needs to escalate to a human.

Modern AI monitoring and alerting solutions are designed for self-setup, allowing you to connect to your helpdesk and knowledge sources in minutes without coding. Avoid tools that require extensive engineering resources or long onboarding processes.

Opt for platforms with simple, predictable flat-rate pricing plans instead of "per-resolution" or "per-ticket" models. This ensures your costs remain consistent, regardless of your support volume, allowing for stable budgeting.

Article by

Stevia Putri

Stevia Putri is a marketing generalist at eesel AI, where she helps turn powerful AI tools into stories that resonate. She’s driven by curiosity, clarity, and the human side of technology.