If you're a product manager, you've probably noticed that building an AI product is a whole different beast than traditional software. It’s not just about following the usual agile playbook. Most product teams are wading through what the UK's Incubator for Artificial Intelligence calls a "fog of uncertainty." You're working with systems that don't always give the same answer twice, are completely dependent on data, and have to meet a new set of user expectations, all without a clear roadmap.

This guide is here to help you navigate that fog. We'll give you a practical framework for AI product management that focuses on three things: new workflows that welcome experimentation, smart prompts that get work done, and the right metrics to prove your product is actually working.

What makes the role of AI for product managers unique?

Before we get into the how-to, it’s important to understand why things are so different. Your core skills as a PM, being user-focused and data-driven, are still your foundation. But AI throws a few new challenges into the mix that change how you approach your work. It's less about following a predictable plan and more about guided exploration.

Here are the key things that set AI product management apart:

- It all starts with data. AI products aren't just built with code; they're shaped by data. This means that data quality, how you collect it, and how it’s labeled are now top-tier product concerns. If your data is a mess, your product will be too. Simple as that.

- Embracing uncertainty. Traditional software is predictable. You click a button, and the same thing happens every single time. AI models, on the other hand, work in probabilities. A big part of your job is to manage this ambiguity and design experiences that help users trust the AI, even when it isn't 100% certain.

- Keeping humans in the loop. AI gets smarter with feedback. Your product workflow needs a built-in way for users or your internal teams to correct the AI's mistakes. This creates a feedback loop that constantly improves the product.

- Building in ethical guardrails. Things like managing bias, protecting user privacy, and being transparent about how the AI works aren't just legal checkboxes anymore. They are core product features. This is why a tool like eesel AI is built to train only on a company's own secure data, so you know every answer is relevant, private, and comes from a source you can trust.

Rethinking your process: Essential workflows

The old product workflows often need a refresh when you're working with AI. You’re not moving in a straight line from an idea to a shipped feature. Instead, you're in a constant cycle of experimenting, learning, and tweaking.

The discovery workflow

Your discovery phase is no longer just about asking, "What features should we build?" Now, you’re asking, "What user problem can we solve with AI that we couldn't before?" This stage is less about detailed mockups and more about exploration. You'll be talking with data scientists from day one to see what's technically possible and running small tests to find out if a model can even solve the problem before you dedicate a whole sprint to it. You're not just designing a solution; you're discovering if one exists.

The development and experimentation workflow

This isn’t a standard development sprint. Think of it more like a science experiment running inside your product team. The team works in a tight loop: train a model, test how it performs, and then fine-tune it based on what you learn. PMs, data scientists, and ML engineers have to be in constant communication.

A huge part of this is creating a safe space to test things, even if they fail. You can't just throw a new AI model at your customers and cross your fingers. For example, eesel AI lets teams run its AI Agent in a simulation mode, using past support tickets to see how it would perform. This lets PMs check the AI's accuracy and estimate cost savings in a sandbox environment before it ever talks to a real customer, taking the risk out of the launch.

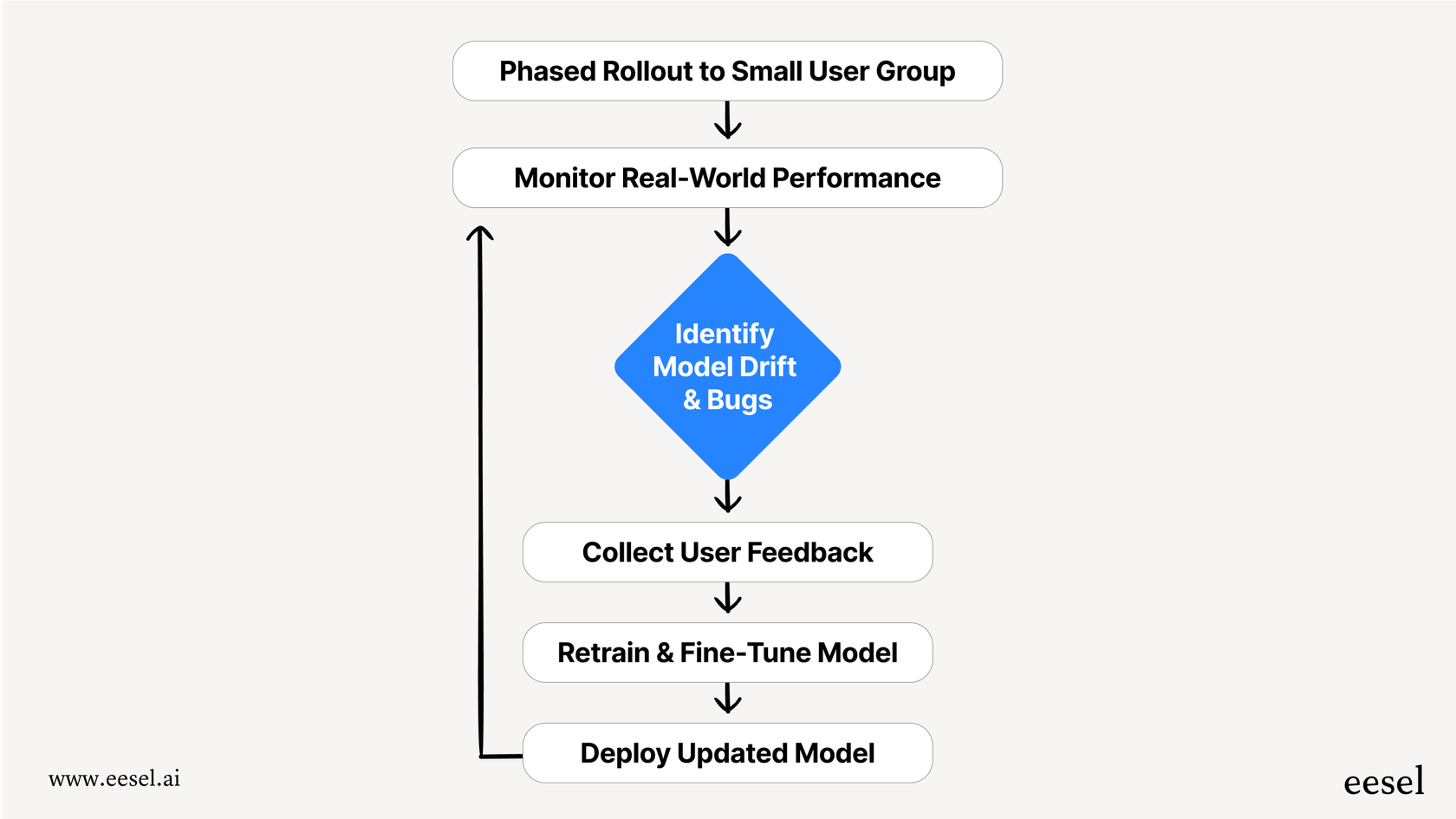
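
To make the sandbox idea concrete, here is a minimal sketch of replaying past tickets through a candidate agent before launch. It is not eesel AI's API: `PastTicket`, `simulate`, `toy_agent`, the exact-match check, and the cost-per-ticket figure are all hypothetical stand-ins. In practice you would probably grade replies with human review or a rubric rather than string equality.

```python
from dataclasses import dataclass


@dataclass
class PastTicket:
    question: str
    human_answer: str  # what the support agent actually replied


def simulate(tickets, agent, is_match, cost_per_ticket):
    """Replay historical tickets through `agent`; no customer is contacted."""
    answered = 0
    correct = 0
    for ticket in tickets:
        reply = agent(ticket.question)
        if reply is None:  # the agent chose to escalate instead of answering
            continue
        answered += 1
        if is_match(reply, ticket.human_answer):
            correct += 1
    total = len(tickets)
    return {
        "coverage": answered / total if total else 0.0,
        "accuracy": correct / answered if answered else 0.0,
        "estimated_savings": correct * cost_per_ticket,
    }


def toy_agent(question):
    # Hypothetical stand-in for a real model: answers only password questions.
    if "password" in question.lower():
        return "Use the reset link on the login page."
    return None


tickets = [
    PastTicket("How do I reset my password?", "Use the reset link on the login page."),
    PastTicket("Password not working", "Contact your admin to unlock the account."),
    PastTicket("Can I get a refund?", "Refunds are handled by billing."),
    PastTicket("Where is my invoice?", "Invoices are under Settings > Billing."),
]

# Assumed figure: each correctly handled ticket saves 4.0 in support cost.
report = simulate(tickets, toy_agent, lambda a, b: a == b, cost_per_ticket=4.0)
print(report)
```

Coverage (how often the agent answers at all) and accuracy (how often those answers are right) are reported separately because an agent that escalates everything it is unsure about can look very accurate while helping very few customers.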

The launch and iteration workflow

Launching an AI product is really just the starting line. As a PM, you need to plan for a phased rollout. Start with your internal teams or a small group of users to get data on how the AI performs in the real world. After you launch, the work becomes about monitoring for "model drift," which is when the AI's performance gets worse over time because real-world data changes. You'll also need to set up clear feedback channels so you can keep retraining the AI and making sure it stays effective.
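
One simple way to watch for drift is to compare recent accuracy against the accuracy you measured at launch. The sketch below assumes you log whether each AI answer was later judged correct (for example, through user feedback). The `DriftMonitor` class, the window size, and the tolerance are illustrative choices, not a standard. It tracks a drop in performance rather than measuring changes in the input data directly.

```python
from collections import deque


class DriftMonitor:
    """Flags when rolling accuracy falls below a launch baseline."""

    def __init__(self, baseline_accuracy, window=100, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Keeps only the most recent `window` outcomes.
        self.outcomes = deque(maxlen=window)

    def record(self, was_correct):
        self.outcomes.append(1 if was_correct else 0)

    def recent_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def drifted(self):
        # Wait for a full window so a handful of early errors doesn't alarm.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.recent_accuracy() < self.baseline - self.tolerance


monitor = DriftMonitor(baseline_accuracy=0.90, window=10, tolerance=0.05)
for outcome in [True] * 9 + [False]:
    monitor.record(outcome)
healthy = monitor.drifted()  # rolling accuracy still matches the baseline

for outcome in [False] * 3:
    monitor.record(outcome)
degraded = monitor.drifted()  # recent errors pushed accuracy down
print(healthy, degraded)
```

A check like this would typically feed an alert or a retraining ticket rather than change the product's behavior on its own.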

Crafting effective prompts: A key skill

With generative AI becoming more common, a well-crafted prompt is a product manager's secret weapon. This helps in two ways: you can use prompts to speed up your own work, and you can build prompt-based controls into the products you're managing.

Using prompts to speed up tasks

AI can be a great assistant for the everyday tasks of product management. By writing clear prompts with enough context, you can automate parts of your analysis, brainstorming, and documentation. This frees you up to focus on the big-picture thinking that actually pushes the product forward.

| Task | Example Prompt |
|---|---|
| Synthesizing User Feedback | "Analyze these 50 user interview transcripts and pull out the top 5 pain points in our onboarding. For each one, give me three direct quotes as proof." |
| Generating User Stories | "You are a senior product manager. Write three user stories for a feature that lets admins set user-specific permissions. Make sure to include detailed acceptance criteria." |
| Competitive Analysis | "Look at the G2 and Capterra reviews for competitors X and Y from the last 6 months. Put their most-praised features and most common complaints into a simple comparison table." |

Building prompt configuration: A strategy for product managers

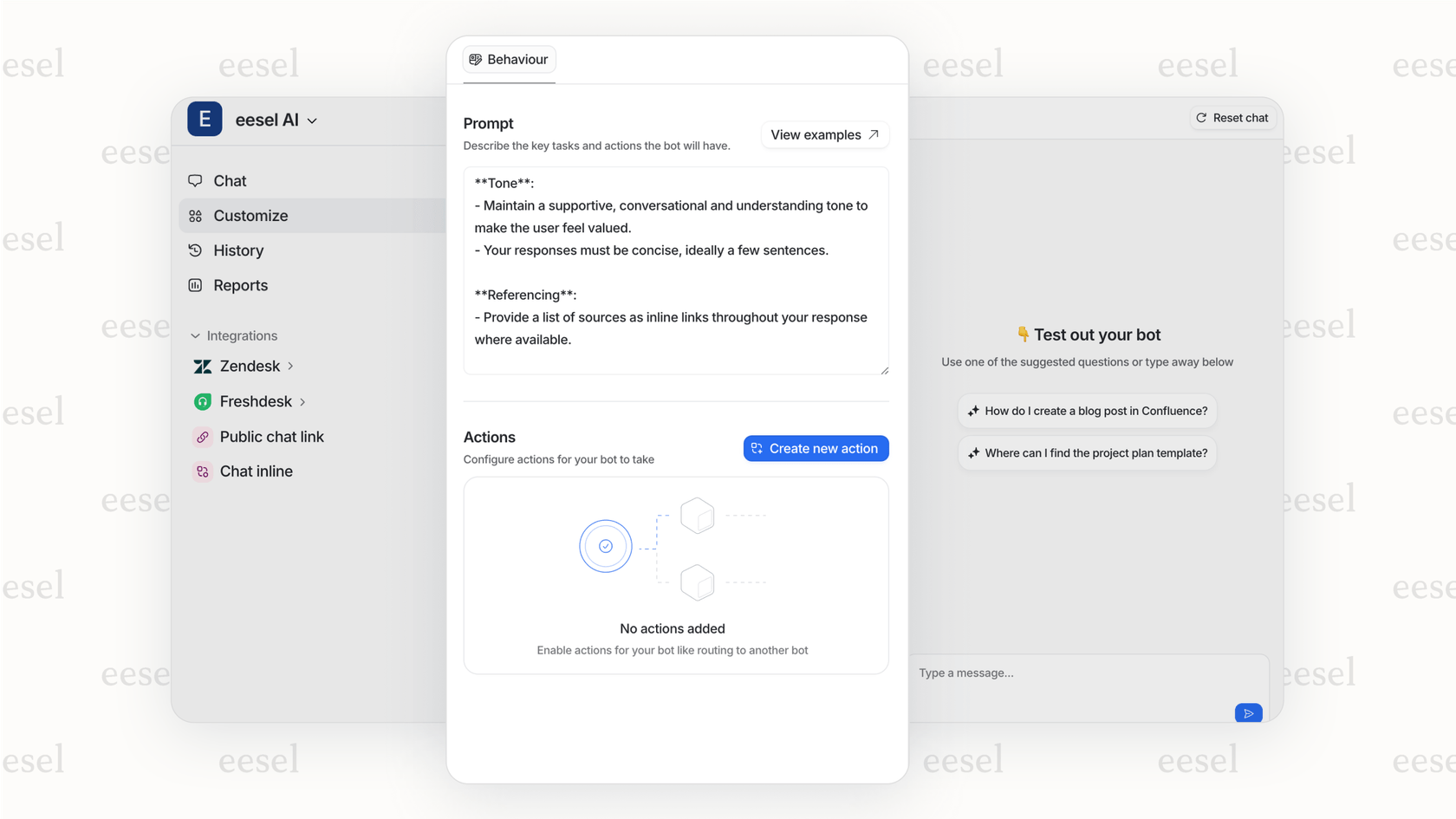

The best AI products aren't mysterious black boxes; they give the user some control. We're seeing more and more AI products give non-technical users a way to guide the AI's behavior using plain English. Instead of a screen full of confusing toggles, they just get a simple text box.

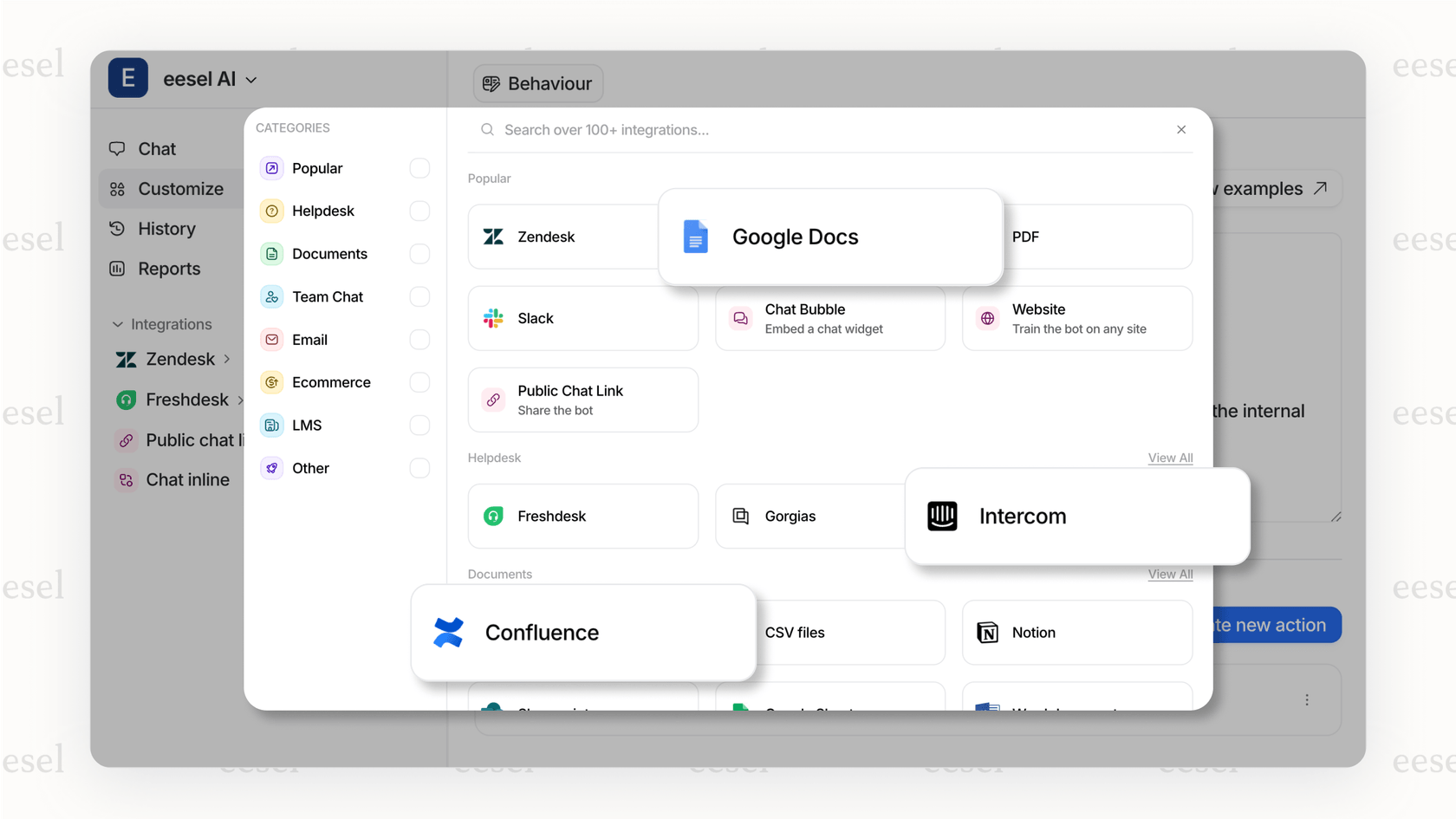

This is a core idea behind eesel AI. Its AI Agent isn't some rigid system you're stuck with. Instead, it lets admins set its personality, tone of voice, and rules for when to escalate an issue, all with simple prompts. This turns a complicated piece of AI into a tool that a Head of Support can easily manage without writing code or bothering the engineering team.
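
Under the hood, plain-English controls like these often end up as part of the instructions sent to the model. The sketch below shows one possible shape for that, not how eesel AI implements it: `AgentSettings`, `build_system_prompt`, and the "Acme" example values are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSettings:
    """Plain-English settings an admin might type into a text box."""
    persona: str
    tone: str
    escalation_rules: list = field(default_factory=list)


def build_system_prompt(settings):
    """Assemble the admin's settings into one instruction block for the model."""
    lines = [
        f"You are {settings.persona}.",
        f"Tone of voice: {settings.tone}.",
    ]
    if settings.escalation_rules:
        lines.append("Escalate to a human agent when:")
        lines.extend(f"- {rule}" for rule in settings.escalation_rules)
    return "\n".join(lines)


settings = AgentSettings(
    persona="a friendly support assistant for Acme",
    tone="warm and concise",
    escalation_rules=[
        "the customer mentions a refund",
        "the customer asks for a manager",
    ],
)
prompt = build_system_prompt(settings)
print(prompt)
```

Keeping the settings as structured fields rather than one free-form blob makes it easier to validate them and to show admins exactly what the AI has been told.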

Beyond vanity metrics: How AI product managers measure what matters

Standard SaaS metrics like monthly active users or how long people spend in the app don't always tell the whole story for AI products. You need to measure not just if people are using the feature, but if the AI is actually accurate, effective, and trustworthy.

Core AI model metrics

You don't need a PhD in data science, but you do need to speak the language. Understanding the basic metrics your technical team uses to check the model's health is a must.

- Accuracy, Precision, and Recall: These three metrics answer some pretty basic questions. How often is the AI right? When it gives an answer, how reliable is that answer? And is it finding all the things it's supposed to find?

- Latency: How fast does the AI give you a response? For anything user-facing, a slow answer is a non-starter, no matter how accurate it is.
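
If it helps to see the arithmetic, here is a small sketch of how these metrics are commonly computed for a yes/no classifier, plus a nearest-rank percentile for latency. The function names and sample numbers are made up for illustration; your data science team will likely use a library and a definition suited to your model.

```python
import math


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)          # correctly flagged
    tn = sum(1 for t, p in pairs if not t and not p)  # correctly ignored
    fp = sum(1 for t, p in pairs if not t and p)      # false alarms
    fn = sum(1 for t, p in pairs if t and not p)      # misses
    total = len(pairs)
    return {
        # How often is the AI right overall?
        "accuracy": (tp + tn) / total if total else 0.0,
        # When it says "yes", how often is that correct?
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Of everything it should have found, how much did it find?
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


def percentile(values, pct):
    """Nearest-rank percentile; `pct` is a whole number from 1 to 100."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)

latencies_ms = [120, 180, 150, 900, 200, 160, 170, 140, 130, 190]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
print(metrics, p50, p95)
```

In this sample the median latency looks fine while the 95th percentile is dominated by one slow response, which is why teams often track a high percentile rather than the average.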

Business-focused metrics

At the end of the day, an AI feature has to provide real value to the business. Your metrics should connect the dots between the model's performance, user outcomes, and your company's goals.

- Task Success Rate: Did the user actually get done what they were trying to do with the AI's help?

- Deflection Rate: In a customer support setting, this is the percentage of questions that the AI handled completely on its own, without a human needing to jump in.

- User Trust Score: You can track this with simple in-app surveys, like asking "How confident are you in this recommendation? (1-5)" to see how user confidence changes over time.

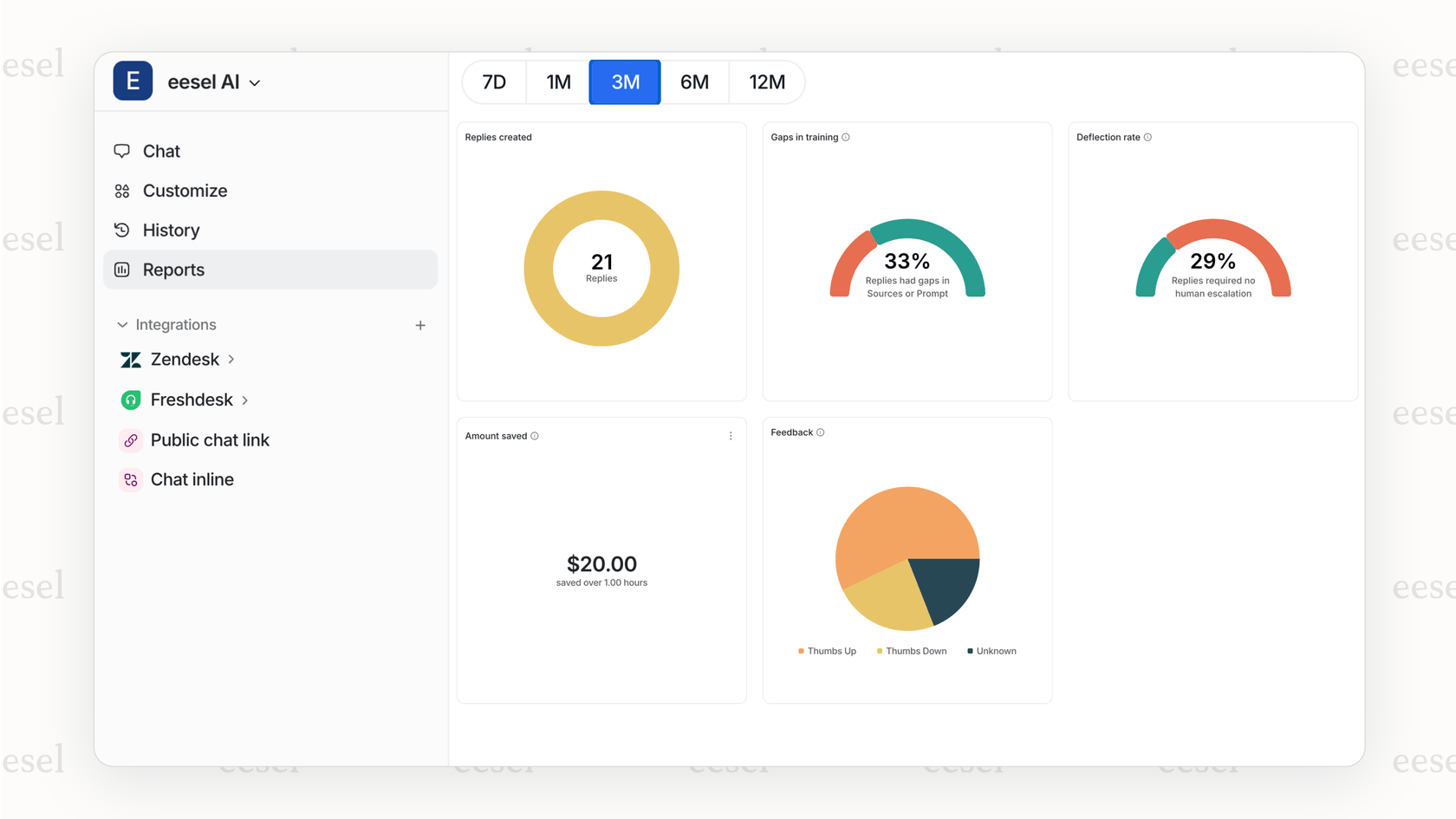

- ROI and Efficiency Gains: To get and keep buy-in from stakeholders, you have to show a return on investment. This is why tools like eesel AI have clear dashboards that show AI interactions, deflection rates, and estimated cost savings. This helps product teams prove the business impact of their AI features from day one.
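
These business metrics are simple ratios once you log the right fields per interaction. The sketch below assumes a hypothetical `Interaction` record and a placeholder cost per human-handled ticket; the field names are illustrative and not taken from any particular tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interaction:
    resolved_by_ai: bool                # no human had to step in
    task_completed: bool                # the user got done what they came to do
    trust_rating: Optional[int] = None  # 1-5 survey answer, None if skipped


def business_metrics(interactions, cost_per_human_ticket):
    total = len(interactions)
    if total == 0:
        return None
    deflected = sum(1 for i in interactions if i.resolved_by_ai)
    completed = sum(1 for i in interactions if i.task_completed)
    ratings = [i.trust_rating for i in interactions if i.trust_rating is not None]
    return {
        "deflection_rate": deflected / total,
        "task_success_rate": completed / total,
        "avg_trust_score": sum(ratings) / len(ratings) if ratings else None,
        # Rough estimate: every deflected ticket avoids one human-handled ticket.
        "estimated_savings": deflected * cost_per_human_ticket,
    }


log = [
    Interaction(resolved_by_ai=True, task_completed=True, trust_rating=5),
    Interaction(resolved_by_ai=True, task_completed=True, trust_rating=4),
    Interaction(resolved_by_ai=False, task_completed=True),
    Interaction(resolved_by_ai=False, task_completed=False, trust_rating=2),
]
summary = business_metrics(log, cost_per_human_ticket=6.0)
print(summary)
```

Note that task success and deflection are tracked separately: a ticket can be escalated to a human and still end with the user's problem solved.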

| If You Usually Track... | For AI, Also Track... | Why It Matters |
|---|---|---|
| Feature Adoption Rate | Model Interaction Rate | Are people actually using the AI, or are they ignoring it? |
| Time on Task | Task Completion Time with AI | Is the AI making users faster, or is it getting in the way? |
| Customer Satisfaction (CSAT) | AI-Specific CSAT / Trust Score | Are users happy with the AI's answers, not just the product overall? |

Making AI for product managers work for you and your product

Being a great AI product manager is about more than just knowing the technology. You have to adapt how you work with new processes built for experimentation, new skills like writing good prompts, and new ways of measuring success that focus on effectiveness and trust. The goal isn't to just ship "AI features" for the sake of it. It's to build smart, reliable systems that solve real problems and earn your users' confidence, one interaction at a time.

Ready to see it in action?

If you want to see what this looks like in a real tool, eesel AI was designed from the ground up with these modern workflows, prompt-driven controls, and clear metrics in mind. It works with the tools you already use, like Zendesk, Slack, and Confluence, to bring value right away. Start a free trial or book a demo today to see how.

Frequently asked questions

No, you don't need a deep technical background. Your role is to understand the user problem and the business value, while collaborating closely with data scientists on what's technically feasible. Focus on learning core concepts like accuracy and latency so you can speak the same language as your technical team.

Start with a well-defined user problem that AI is uniquely suited to solve, rather than just trying to add AI for its own sake. From there, run a small experiment or proof-of-concept with your data science team to validate if an AI model can actually solve that problem before you commit to a full-scale project.

Focus on metrics that connect AI performance directly to business outcomes. Instead of just reporting model accuracy, show how that accuracy translates into a higher task success rate for users, a better deflection rate for support tickets, or direct cost savings.

The key is to design a "human-in-the-loop" process from the beginning. Give users an easy way to flag incorrect answers and provide feedback, which can then be used to retrain and improve the model. Also, for critical tasks, have clear rules for when the AI should escalate to a human expert.

Yes, you can, as very few companies have perfect data. Data preparation is a standard part of any AI project, and the discovery phase is the perfect time to assess the quality of your data. You can then budget time for the cleaning and labeling required to build a reliable model.

It's becoming a significant skill, but it doesn't have to consume your whole week. Think of it as a new way to conduct analysis and create documentation more efficiently, freeing up your time for strategy. If your product has user-facing prompt controls, then defining the guidelines for those prompts will become a key design task.

Article by

Kenneth Pangan

Writer and marketer for over ten years, Kenneth Pangan splits his time between history, politics, and art with plenty of interruptions from his dogs demanding attention.